EA metamodel – the big picture (and the small picture too)

In the various previous posts about EA metamodels, we’ve been exploring some of the detailed structures for toolsets and the like at a very, very low-level. But what’s the big-picture here? What’s the point?

So let’s step back for a moment, and look at real-world EA practice.

Much of our work consists of conversations with people, and getting people to talk together, so as to get the various things and processes and activities and everything else in the organisation and shared-enterprise to work better together.

To support those conversations, and to help sense-making and decision-making, we create models. Lots and lots of models. Different models, in different notations, for different stakeholders, and different contexts.

Lots and lots of different ways to describe things that are often essentially the same, but happen to be seen from different directions.

Yet keeping track of the samenesses and togethernesses and relationships and dependencies of everything in all those different portrayals is often a real nightmare.

Finding a way to resolve that nightmare is what this is all about.

A bit of context

Look around at your own context. If you’re doing any kind of architecture-work, or even just explaining things to other people, you’ll be doing lots of diagrams and drawings and models. Some will be hand-drawn, some will be done on a drawing-package such as Visio or Dia or LucidChart, and some may be done within a purpose-built modelling tool such as ArgoUML or Agilian or Sparx Enterprise Architect or Troux Metis. Lots of different ways of doing the same sort of thing with different levels of formal-rigour.

But if we look at it with a more abstract eye, what we’re using is different types of notation. Some will be too freeform to describe as a ‘notation’ as such, though the point is that it’s still used for sense-making and decision-making. Once we get to a certain level, we tend to use some fairly standard notation such as UML or BPMN or Porter Value-Chain or Business Motivation Model or Business Model Canvas, simply because it’s easier to develop shared understanding with a shared model-notation.

And again with an abstract eye, each notation consists of the following:

- a bunch of ‘things’ – the entities of the notation

- a bunch of connections-between-things – the relations

- a bunch of rules or guidelines about how and when and why and with-what things may be related to other things – the semantics that identify the meanings of things and their relations and interdependencies

- often, a graphic backplane, parts of which may be semantically significant as ‘containers‘ for things (such as the ‘building blocks’ in Business Model Canvas)
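As a rough illustration of that four-part breakdown, here is a minimal Python sketch. The `Notation` class, its field names and the cut-down mind-map example are all hypothetical, invented only to show the shape of the idea; they are not taken from any real tool or standard.

```python
from dataclasses import dataclass, field


@dataclass
class Notation:
    """One modelling notation, reduced to the four parts listed above (hypothetical)."""
    name: str
    entity_types: set[str] = field(default_factory=set)    # the 'things'
    relation_types: set[str] = field(default_factory=set)  # connections-between-things
    # Semantics, reduced here to allowed (source, relation, target) combinations.
    rules: set[tuple[str, str, str]] = field(default_factory=set)
    # Regions of the graphic backplane that act as semantic 'containers'.
    containers: set[str] = field(default_factory=set)

    def allows(self, source: str, relation: str, target: str) -> bool:
        """True if the rules permit this relation between these entity-types."""
        return (source, relation, target) in self.rules


# A deliberately over-simplified mind-map notation, for illustration only.
mind_map = Notation(
    name="mind-map",
    entity_types={"topic"},
    relation_types={"branches-to"},
    rules={("topic", "branches-to", "topic")},
)

print(mind_map.allows("topic", "branches-to", "topic"))  # True
print(mind_map.allows("topic", "depends-on", "topic"))   # False
```

Real notations carry far richer semantics than a set of allowed triples, so treat this only as a way of seeing the four parts side by side.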

(Often a notation will also be linked to various methods of how to use the notation, or to change-management processes that relate to or guide the use of the notation. That’s something we’ll need to note and include as we go deeper into the usage of metamodels within EA and the like.)

Each notation describes entities and relations and semantics in a different way. But often they’re actually the same entities that we see in another notation. What we need, then, is a way to keep track of entities (and some of the relations and semantics) as we switch between different notations.

UML (Unified Modelling Language) does this already for software-modelling and software-architecture: a bunch of different ways to look at the ‘areas of concern’ for software-architecture and the like. That’s a very good example here: entities that we develop in a Structure Diagram can be made available (‘re-used’) in any of the other dozen or so diagram-types (notations) within the overall UML.

Yet UML only deals with the software-development aspects of the context. For example, there’s no direct means to link it to an ArchiMate model, to show how it maps to business processes in an architectural sense. There’s certainly no means to link it to Business Motivation Model, to show dependencies on business-drivers; there’s no means to link it to Business Model Canvas, to rethink the overall business-model (and what part software might or might not play in a revised business-model). Those may not be of much concern to software-architecture – but they are of very real concern to enterprise-architecture or any other architecture that needs to intersect with software-architecture and place it within the overall business or enterprise context.

Hence what we’re talking about here is a much-larger-scale equivalent of UML. It needs to accept that every notation is different – in other words there’s no possibility of “One Notation To Rule Them All”, a single notation that would cover every possible need at every level. Instead, like UML, it would aim to be able to maintain and update entities and relations as they move between different notations – in other words, something quite close to “One Metamodel To Link Them All”.

The catch is that we need to go a long way below the surface to make it work. For UML, that underlying support is provided by MOF (OMG Meta-Object Facility), which is also shared with other OMG specifications such as BPMN (and perhaps also OMG BMM – Business Motivation Model?). Yet MOF only applies to the OMG (Object Management Group) specifications: what about all the other models and notations and everything else that’s defined and maintained by everyone else, often not even in a formal metamodel format? To link across that huge scope of ‘the everything’, we need something that goes at least one level deeper again: and that’s what this is all about.

The simplest start-point is that pair of questions from Graeme Burnett, that would apply to any entity or relation or almost anything:

- Tell me about yourself?

- Tell me what you’re associated with, and why?

I’d suggest that that’s where we need to start: with something that is integral enough to be described as ‘yourself’, and to which we could apply those questions. That’s our root-level – a kind of UML for UML-and-everything-else.

Yet that really is at a very low level – deeper than MOF, which is deeper than UML, which is deeper than the UML Structure Diagram, which is deeper than any class-structure model that we might build on that metamodel for UML Structure Diagram.

As we go down through all of those layers, it needs to get simpler and simpler, in order to identify and support the commonality between all those disparate surface-elements. At the kind of level we’re talking about here – what’s known as the ‘M3/M4 metamodel layer’ – it needs to be very simple indeed – which makes it a bit difficult to describe what’s going on, especially by the time we’ve worked our way back up all the way to the surface again.

And, yes, this is where it gets a bit technical…

Building on motes

At this point I’ll link back to a reply to a comment by Peter Bakker on the previous post ‘EA metamodel – a possible structure‘.

Some while back, someone said in a comment to one of the previous posts that the metamodel I’ve been describing is “like going back to assembly language”. Which, in a sense, it is – I’ll freely admit that.

The commenter didn’t mean it politely – he evidently thought that this was a fatal design flaw, one that invalidated the whole exercise.

But he’d actually missed the point: down at the root – in software, at least – everything is assembly-language. (Or machine-code, to be pedantic, but that’s described here too, of course.)

For this layer we’re talking about here – a layer even deeper than MOF, a layer that is shared across every possible notation – it is the equivalent of assembly-language. It has to be: there’s no choice about that.

And the point here is that if we don’t get the thinking right at this level, we’re not going to get the interoperability and versatility that we need at the surface-level. But this ‘M3/M4 layer’ really is the equivalent of assembly-language. M2/M3 is the equivalent of the compiler, M1/M2 (the surface-ish metamodel) is the high-level language, and M0/M1 (the modelling that we actually work with) is the equivalent of the software-application. It’s essential not to get confused about the layering here: this is a very deep level indeed, far deeper than most people would usually need to go. But we need to get it right here.

Peter said in his comment:

I pretend that I want to start my own Tubemapping Promotion Enterprise

Therefore I will sketch three models:

1. a mindmap for the brainstorm part

2. a business model canvas for the business model derived/extrapolated from the mindmap

3. a tubemap to visualize the routes from here (the idea) to there (a running business)

I keep them as simple as possible of course. Next step is to see if I can metamodel/model all three models together with motes following my perception of your guidelines above.

So when we’re developing a multi-notation model – such as Peter’s tubemapping ‘app’, in this case – we need to consider all those four inter-layers at once:

- at the surface layer (M0/M1) we need a notation for each of the distinct models (such as, in Peter’s example, for mind-map, Business Model Canvas, and tubemap)

- at the metamodel (M1/M2) we need a metamodel that would describe the components and interactions (entities and relations, plus the rules and parameters that define them) that make up each of those notations

- at the metametamodel layer (M2/M3) we need to identify how to move entities (and, in a few cases, relations) between each of the metamodels, without losing or damaging anything from what’s already been done at the individual (meta)model layers

- at the ‘atomic’ layer (M3/M4, the focus of this post) we need to identify the ‘assembly-language’ fine-detail of exactly what happens at each stage and with each change to each of the underlying motes, so as to verify that everything actually works and nothing is missed.

Note that for most work we shouldn’t ever need to look at the ‘atomic’ layer. We’re only doing it now because we’re roadtesting the ideas for this ‘mote’ concept, deliberately running everything backwards from the surface layer to check out the interoperability and the rest.

(In other words, yes, we’re doing a deep-dive into the ‘assembly-language’ layer, and it isn’t what we’d normally do. But if you’re a CompSci student, you’d usually expect to have to build a compiler at some stage, to prove that you know what’s going on ‘under the hood’. You’d typically start from hand-sketches that become UML diagrams that become language-specifications for the compiler that works with assembly-language. Most everyday users aren’t CompSci students mucking around with compilers – but someone has to do it if we’re to get usable surface-layer applications. Same here, really.)

What I’ve been suggesting is that we can support all of those requirements at this layer with a single underlying entity, which I’ve nicknamed the ‘mote’. This has a very simple structure:

- unique identifier

- role-identifier (text-string) – specifies what role this mote plays in the (slightly) larger scheme of things

- parameter (raw-data) – to be interpreted as number, pointer, text, date or anything, according to context

- variable-length list of pointers to ‘related-motes’

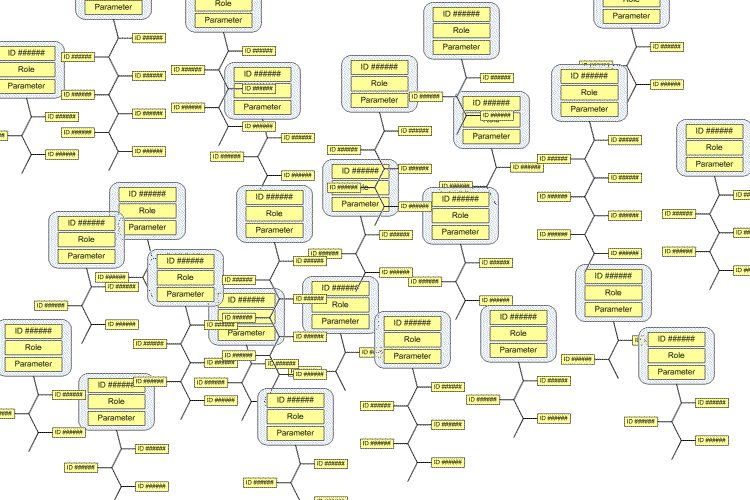

In graphic form, it looks a bit like a bacillus:

But we need to remember that it’s tiny – again, much like a bacillus. It’s the ways that they all link together that provide it with the overall power. The mote’s parameter carries just a tiny bit of information; but when that information is placed in context with that from other motes, we can build up towards a much bigger picture.
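To make that four-part structure a little more concrete, here is a minimal Python sketch. The class name `Mote`, the field names, and the use of a random UUID for the identifier are illustrative assumptions only; the post itself fixes nothing beyond the four parts.

```python
from dataclasses import dataclass, field
from typing import Any
import uuid


@dataclass(eq=False)  # compare motes by identity, not by content
class Mote:
    """A sketch of the four-part mote; all names here are assumptions."""
    role: str                      # role-identifier (text-string)
    parameter: Any = None          # raw data, interpreted according to context
    related: list["Mote"] = field(default_factory=list)        # pointers to related motes
    id: str = field(default_factory=lambda: uuid.uuid4().hex)  # unique identifier


# A tiny mote that only carries a value, and a larger one that points to it.
label = Mote(role="label", parameter="Customer")
entity = Mote(role="entity", related=[label])

print(entity.related[0].parameter)  # Customer
```

The `label` mote carries only its own tiny piece of information; the `entity` mote gains its meaning from what it points to, which is the "linking together" described above.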

In effect, what we have in the EA-toolset’s repository is a kind of ‘mote-cloud’:

The ways in which the motes link together I’ve summarised in the previous post, ‘EA metamodel – a possible structure‘. Many remain always as tiny little fragments of information, re-used all over the place in simple many-to-one relationships. But some motes do get large enough to notice – and that’s what we start to see further up the scale, as Entity, Relationship, Model and so on.

Interestingly, there is one context in which we do work directly at the mote-level. It’s when we ask those two questions: “tell me about yourself?” and “tell me what you’re associated with, and why?”. The information to answer those questions is carried directly within the mote, in its embedded role and parameter and via its related-mote list. The ‘yourself’-mote in this case would only be an Entity, a Relation or a Model.
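A small Python sketch of how those two questions might be answered straight from a mote. The `Mote` class and the function names `about_yourself` and `associated_with` are hypothetical; the only idea taken from the text is that the answers come from the mote's own role and parameter, and from the roles of the motes in its related-mote list.

```python
from dataclasses import dataclass, field
from typing import Any
import uuid


@dataclass(eq=False)
class Mote:
    """Hypothetical four-part mote: id, role, parameter, related-mote list."""
    role: str
    parameter: Any = None
    related: list["Mote"] = field(default_factory=list)
    id: str = field(default_factory=lambda: uuid.uuid4().hex)


def about_yourself(mote: Mote) -> dict[str, Any]:
    """'Tell me about yourself?': answered from the mote's own fields."""
    return {"id": mote.id, "role": mote.role, "parameter": mote.parameter}


def associated_with(mote: Mote) -> list[tuple[str, Any]]:
    """'Tell me what you're associated with, and why?'

    The role of each related mote stands in for the 'why'.
    """
    return [(m.role, m.parameter) for m in mote.related]


entity = Mote(
    role="entity",
    related=[
        Mote(role="label", parameter="Customer"),
        Mote(role="tag", parameter="stakeholder"),
    ],
)

print(about_yourself(entity)["role"])  # entity
print(associated_with(entity))         # [('label', 'Customer'), ('tag', 'stakeholder')]
```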

Hope this helps to clarify things a bit further? Comments / suggestions requested as usual, anyway.

Love this thread of conversation.

Putting together the ideas from your series so far – the notions of a new UI or series of UI metaphors, as well as a low-enough level of data atomicity to link all models together, I’m wondering (in a fantastical Sci-Fi sort of way perhaps) about something that can auto-tag these motes. A Borg nano-probe perhaps? 😉 Something that would curse and recurse throughout the enterprise space, tagging objects, and reporting back its results to the big DB in the sky. I’m thinking about something like ZeroConf here. Something that, when unleashed on the enterprise, would report back all resources (objects) so that the “hard part” of tagging all these Mote objects (and in a large enterprise, there would be millions of them), would be done programmatically. The human layer would be applying the smart filters to them, such that, the holographic space could be viewed in smart “slices” at a time.

Then, as you say, the real effort, the real innovation will be in the UX space. And for that, I agree an agile prototyping approach that lets us ‘play’ with views of the space, would be the way to go. This also begs for an iOS treatment, something that by its definition, is touchable, tangible. Pinchable, zoomable. Direct action on object, via click or touch, is a UI imperative, and depending on how those objects are presented to the user, the UI possibilities could be intriguing indeed.

What I would say such a project should NOT do, is try to go the open-source (or as Bob Metcalfe famously called it, “Open Sores”) approach, where some monstrously large thing is put together by a committee. Yes, it would “work” but it would be clunky and ultimately incoherent. A project like this needs the sexiness of a Jonathan Ive, and the smarts and wisdom of a Steve Jobs. People with taste, and restraint. That’s the hardest part of any innovative UI effort. And I’ve been involved in quite a few. It’s like the old Saint-Exupéry quote; “a design is not finished when there is nothing left to add, but when there’s nothing left to take away”. The minimalism and simplicity will be the gold here.

And if all the above is done right, this would be GOLD.

Cheers,

Curtis Michelson

Creative Consulting / Business Analyst

twitter: SpecosaurusRex

Thank you Tom,

I was responsible for relaying the assembly language comment. Something that came about from an email discussion I was having with a friend as I was relating to him the concepts you’d described in previous posts. No offence was meant by the comment, we were both trying to understand the utility of your ideas. Having followed your blog for some time, I know that you wouldn’t have posted such ideas without long and deep thought and careful analysis. The fact that you’ve been describing some of these concepts since at least 2009 is testament to this. Even though you had described the concept of a holographic view previously, I hadn’t realised that the metamodel you described in the post More detail on EA Metamodel was in fact a metametamodel or even a metametametamodel (m4). This was something that you clarified in the reply to my comment and refined in further comments and now this post. Till that time I had thought you were promoting an instantiation of what we’re now referring to as the m3/m4 model directly, which would, I still believe, be metaphorically like programming in assembly. So no offence meant and hopefully the comment has helped all your audience gain greater clarity and insight to what is undoubtedly a powerful and revolutionary idea.

The reason I was relaying the ideas to my friend (as I don’t have his permission to post, I won’t name him here), was I think he might potentially share some common ground and have some skills to offer your endeavour. He is the only person I know with a wealth of experience in creating metametamodels that will potentially generate real business value. He also has the rare ability to be able to program very well and also have a very strong understanding of enterprise architecture concepts. He has been developing dynamic metamodels that give you a different diagram and palette of tools depending on different contexts.

@Curtis Michelson – Curtis, yeah, exactly: “something that would curse and recurse across the enterprise space” – nice way to put it…

Quite a few CMDB tools do that already (is that what ZeroConf is? I don’t know it…), and it would also fit well with the developing scope of Internet-Of-Things and Citizen-Sensing and the like. I’ll admit I hadn’t thought of that side of it before now, but the ‘mote’ concept would certainly work well for those requirements.

And yes, “the real innovation will be in the UX space”. Another admission here: I’ve been pushing the Open Source bandwaggon simply because that’s all that I can afford… 😐 (As a half-crazed stuck-out-on-the-sidelines independent consultant, there’s certainly no way I can afford the tens-of-thousands-per-seat price-tag of most of the commercial tools – and neither can most other folks, to be honest. Which kinda restricts the market a lot – much more restricted than it needs to be, anyway. Humph.)

I do take your point about the ‘committee problem’ that cripples many Open Source projects, and also the real value of a Jonathan Ive / Steve Jobs combination of talent. (There are a couple of German guys who are doing some really nice work in the EA / UX space that I’m keeping my eye on, but there’s no way I can afford to pay them for the work that needs to be done here. 🙁 ) So whilst, yes, I’d really like to see something good/useful come out of the Open Source space for the toolset need, I’m not going to insist that that’s the only route. And I’m fairly certain that with some innovative thinking, it should be possible to get out a toolset in the iOS space (or equivalent: OS-X, Android, HTML5, whatever) that does some really nice info-handling and still comes out at a mass-market price of $30-50 or so.

Where I will insist on staying non-proprietary is in the information-exchange format (file-format). Going proprietary there would defeat the entire object of the exercise, which is to be able to move EA-type information between toolsets across the whole toolset-ecosystem. Without that ability to move things around, what’s the point?

I do think the ‘mote’ concept is the right way to go here, but I’m well aware that it still needs a lot more work – both in terms of its structure and relations, and also in how it would actually be used within a toolset. Any ideas on that, please?

And thanks, of course. 🙂

@Anthony Draffin – Anthony: oops… my turn to apologise… sorry… 😐

(That’s what comes of my not bothering to chase back through the references. If I’d remembered that it was you, I would definitely have known that no put-down was intended: my apologies… It’s true I have had a rather nasty little set of snide-putdowns from someone here in the past few days, but it certainly wasn’t you!)

Anyway… to come back to topic…

Yes, as I said in the post above, the analogy with assembly-language is a very close one. If I haven’t managed to insult your colleague beyond repair, perhaps we could talk ideas a bit more about this?

Many thanks, anyway. And, again, my apologies?

Thanks Tom for the quick reply. “half-crazed stuck-out-on-the-sidelines independent consultant” Sheesh, does that ever describe me, too!! love that line.

Hey, I hear ya about budget. Of course, this would get crazy expensive. Design, or good design, is never cheap. But let me ask you this, would you ever really get the tool you NEED (not necessarily want) if you went the cheap route?

I agree though, the open part of this is the underlying model, or metamodel, or whatever you want to call that. The schema, the notation, and the file format, etc, that’s all in the open domain. But someone, has to create the app, and the app has to be really good. Unfortunately, that would mean, the half-crazed independents like us, might be priced out of the market. Perhaps. But I have hopes someone will find the right value breakthrough.

FYI, ZeroConf was/is an IETF thing, and an open-source sort of project that defined what a self-configuring network would have to be or act like to deserve the name. Apple has embraced it and implemented said idea in the form of “Bonjour”, and Microsoft has done something similar, but I doubt they relied on the IETF standard for it. The end result is, you get on a network, and all objects and resources (printers, servers, other network nodes, and so forth) all self-identify in a common semantic substrate, such that the client can easily connect to any and all such devices. The outlying objects “speak”, in a sense. There’s sense and intelligence out there on the network. So, I was fantasizing about the objects out in the enterprise space “speaking” to us, and giving us their information, such that we could pull in objects into our app and begin viewing them. I might be totally talking out of my ass, too. But I’m thinking really sideways! 😉

Wasn’t familiar with this until today, but I see what you mean about the CMDB tools. And there’s a standard for federating CMDBs in a large heterogeneous enterprise together into a meta-CMDB.

http://dmtf.org/standards/cmdbf

Tom, following on from the assembler language analogy (and perhaps the “bit” as the unit of “information” might have worked equally well), I think it captures some important features of this whole exercise. Firstly, at the machine level even the most esoteric program or document is just a looooong sequence of these primitive units, and like your mote cloud, essentially incomprehensible without knowledge of the higher order structure. The mote carries with it the possibility of connecting, but as only a subset of all possible combinations would BE meaningful, the intelligibility of any particular arrangement of motes is a function PURELY of their arrangement NOT of their content. I think that including a role as a constituent of the mote compromises your system because it makes intelligibility a function of the mote rather than an emergent property of the arrangement. It also seems to preclude a mote from playing more than one role in a cloud, and so effectively prevents your mote from actually BEING primitive.

So if the mote is to BE the primitive, rather than making it a triple, consider dropping the role slot entirely, and simply using [UID+(data)] as the frame for your mote – but then this looks like a very familiar structure – for it is simply a key:value pair, which IS the basic concept of any addressable space whether a database table, a flat file or a memory address. The mote:role slot is analogous to a class name in strongly typed OO languages, which your “compiler” would use to determine the well-formedness of a particular zone of the mote cloud.

The choice between mote[2] and mote[3] is effectively the choice between [-Type] and [+Type]. By implication also, combinatorial rules for mote[3] are going to be a syntactic/semantic matter because they are effectively enforced by your “compiler”, but mote[2] has no such syntactic checker, so combinatorial rules must be handled at the semantic/pragmatic level. That is the trade-off: depending on how regular the subject domain is, such an “early binding/strong typing” strategy may suit, but for less predictable subject domains, mote[2] might suit and a “late binding/duck typing” strategy be adopted, with all coherency checks deferred to runtime.

So I contend that mote[2] is effectively a MOF:M3 primitive and I think mote[3] is actually a MOF:M2 construct (i.e. mote[3] = mote[2+TYPE]). In either case, the rules for evaluation are not IN the mote cloud as such but are handled by the “exception handling” system (and in the case of mote[2] by the “compiler”).
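The mote[2] versus mote[3] distinction can be sketched in a few lines of Python. Everything named here (the two dictionaries, `ROLE_TYPES`, `check_early`, `use_as_label`) is a hypothetical stand-in made up for illustration: `check_early` plays the part of the “compiler” for role-carrying motes, while `use_as_label` shows a check deferred to the moment of use.

```python
# mote[2]: a bare key:value pair; any meaning comes from arrangement alone.
cloud2: dict[str, object] = {"m1": "Customer", "m2": 42}

# mote[3]: the same pair plus a role (type) slot.
cloud3: dict[str, tuple[str, object]] = {
    "m1": ("label", "Customer"),
    "m2": ("count", 42),
}

# Hypothetical "compiler" rules: the Python type expected for each role.
ROLE_TYPES: dict[str, type] = {"label": str, "count": int}


def check_early(cloud: dict[str, tuple[str, object]]) -> list[str]:
    """Early binding: return the keys of motes whose value does not fit their role."""
    bad = []
    for key, (role, value) in cloud.items():
        expected = ROLE_TYPES.get(role)
        if expected is None or not isinstance(value, expected):
            bad.append(key)
    return bad


def use_as_label(value: object) -> str:
    """Late binding: with no role slot, the check can only happen at use."""
    if not isinstance(value, str):
        raise TypeError(f"cannot use {value!r} as a label")
    return value.upper()


print(check_early(cloud3))         # []
print(use_as_label(cloud2["m1"]))  # CUSTOMER
```

With mote[3], a badly-formed cloud can be rejected before anything uses it; with mote[2], the same mistake only surfaces when `use_as_label(cloud2["m2"])` raises at runtime.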

Regards

Robert

Tom, I noticed after posting the comments above that your mote actually has an additional component, which is effectively a set of MOF uids. This does not substantially affect what I have suggested, but it does underscore in my mind the sense that mote[4], as I’ll call it, is as much a MOF:M2 construct as mote[3]. It also reminds me that the MOF specification for M3, as I recall, performs a sort of bootstrapping exercise in that it makes use of its own constructs in its own definition, but I’ll have to go off and confirm that now before I say too much more. Also, as your original aim was to invent a construct that would support an EA repository, perhaps a key-value NoSQL datastore like Redis would be appropriate!!

regards Robert

@Curtis Michelson – Hi Curtis – yeah, the CMDB theme is an important one, because it needs to be seen as part of the same continuum of structural-information etc as that which we use in architectures, rather than as something separate and distinct. (In effect, it’s the real-world telling us what we actually have, rather than strategy etc telling us what we ‘ought to’ have.)

As for “I might be totally talking out of my ass”, well, increasingly, that’s what I fear I’ve been doing throughout these posts. Judging by some of the notes that have been coming up – such as Robert Phipps’ just above, but also some emails from other colleagues – it seems I’ve merely been ‘reinventing the wheel’ with regard to the mote idea, but doing it in a really clunky way. 🙁 Looks like I have had something useful to say on toolsets and EA methods, but at the detail-implementation layer all I’m really doing is demonstrating my lack of competence. Oh well. (Oh the joys of self-doubt…)

@Robert Phipps – Hi Robert, and thanks for jumping in.

Okay, I’ll admit it: I’m totally out of my depth here: my stuff is too technical for many folks, but this stuff is way past my competence. (I haven’t done CompSci – I was self-taught back in the early micro days when CompSci was often more of a hindrance than a help – but at this point it really shows… 🙁 ) Another colleague talked about ‘triples’, but I’ll admit I have no idea what you and he mean, how it differs from what I’ve described above, and what difference it makes in terms of constraints (or lack of them) on the underlying structure. Help?

The only thing I can perhaps usefully add is that I don’t think that a core based only on simple key/value pairs will cut it. To me the key is that, much as in knowledge-systems and living ecosystems, there are three parts to this: content, context and connections. Each mote brings a bit of direct value to the party (its parameter). As with living cells within a larger collective, it knows its own context within the ecosystem (its role). And through its connections, it identifies what it relates to, and why (its ‘tail’ of the ‘related-mote’ list, and the roles of each of those motes). We then need some kind of ID to identify each mote. Which gives us the four-part structure I’ve outlined above.

Not knowing proper CompSci database-design, I can only describe it in abstract terms, but that’s the core idea. I don’t think we can cut it back any further than that without losing some key aspects of the support for emergence. (And yes, obviously, I need to do a detailed worked-example, though it probably needs to be on a technical wiki rather than here on a more narrative weblog?) But yeah, I’m well aware that I may well be “talking through my ass”, as Curtis put it. Hence the initial request for help. Which I’m not very good at accepting, of course… apologies…

Again, help? Including helping me to accept help on this? 😐

Many thanks, anyway. 🙂

Tom,

I did a first try to see if I can deal with a Venn Diagram. The photo is at http://bit.ly/rrwdyV (hope that the link will work).

The idea in short is:

1. Model a Venn Diagram with 3 sets, with a caption Audience

2. Sets are Mind Mappers, Storytellers and Architects

3. The Storyteller set consists of 4 zones: B and the overlapping zones BC, AB, ABC

4. every zone has a population (not used in my sketched diagram, but population is a value needed to draw the sets correctly) and a label

5. namespace of Venn Diagram with 3 sets is V3

I have sketched a red kind of subway line to show what motes you must go through to find the value of zone B (the storytellers who are not mind mappers and are not architects).
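The zone arithmetic behind that description can be checked with ordinary Python sets. This is only a sketch of the set logic, not of the mote structure: the member names are invented, and labelling Mind Mappers as A and Architects as C is an assumption (the comment only fixes Storytellers as B).

```python
# Invented audience members, purely for illustration.
mind_mappers = {"Ann", "Bob", "Cas"}         # assumed to be set A
storytellers = {"Bob", "Cas", "Dee", "Eve"}  # set B
architects = {"Cas", "Eve", "Fay"}           # assumed to be set C

# The four zones that together make up the Storyteller set.
zones = {
    "B": storytellers - mind_mappers - architects,     # storytellers only
    "AB": (storytellers & mind_mappers) - architects,  # also mind mappers
    "BC": (storytellers & architects) - mind_mappers,  # also architects
    "ABC": storytellers & mind_mappers & architects,   # all three
}

# Population of each zone: the value needed to draw the sets correctly.
population = {name: len(members) for name, members in zones.items()}

print(zones["B"])    # {'Dee'}
print(population)    # {'B': 1, 'AB': 1, 'BC': 1, 'ABC': 1}
```

The four zone populations add up to the size of the Storyteller set, which is a quick sanity check that no zone has been missed or double-counted.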

Maybe the venn diagram stuff will be clearer if you look at the applet at http://www.cs.kent.ac.uk/people/staff/pjr/EulerVennCircles/EulerVennApplet.html .

Can you tell me if I use your idea correctly before I go deeper/further to the core…

(if you can make any sense out of this 🙂 )

“As for “I might be totally talking out of my ass”, well, increasingly, that’s what I fear I’ve been doing throughout these posts.”

The value of the idea remains regardless if the implementation is clunky or not. I’ve gotten some great new insights just from playing with the paper prototype which I never would have had by sketching or looking at the diagrams (by themselves or together on one sheet). The insights came when I fooled around with the motes/little papers!

I’ll keep the insights to myself until I’ve played a bit more and until Tom has given some feedback on this first try…

@Peter Bakker – Peter, I’ll admit I’m struggling a bit with this. I think it’s at least one level higher than I’ve been describing for the root-level motes, which may be creating some confusion. For me, at least… 😐

The overall approach is definitely what I was aiming for, and what you’re describing there are indeed motes – because everything would be, in this schema – but some of them seem much more abstract than I’d meant in this post. For example, most of the ‘roles’ described in the picture are from a much higher level than the sort-of ‘assembly-language’ in the previous ‘A possible structure’ post.

Rather than jumping straight to the mote level, it might be more useful right now to look at the three original notations you wanted to work with: mind-map, Business Model Canvas, and tubemapping:

– What are the entities in each notation? [just the set of names, for now, but will lead to root-motes for each of those entity-types; note also any implied entities, such as the BMCanvas building-blocks]

– What information do each of those entities need to carry? [eventually, leads to a set of motes for each parameter, linked to other motes for the labels, the parameter-types, and so on]

– How do these entities relate with each other? [again, just the names for the distinct relation-types; though note some of the implied relations, such as placement within the building-blocks of the Business Model Canvas]

– What are the rules that determine those relations? [this leads us toward the set of tag-motes that we’ll need for entity-types and relation-types; also some specific motes that would define and guide sequence etc for tubemapping]

– How are each of these items displayed on the screen, or printed, or reported as text? [leads us toward definitions for presentation-motes to be linked to our entity- or relation-motes]

– Which items are specific to one notation only? Which items need to be carried between notations? [leads us towards definitions for namespaces, and towards distinctions between root-level versus namespace-specific items]

In other words, what needs to happen as you move an entity from a mind-map to a BMCanvas to a tubemap and back again? What information do you need to keep with the entity to identify that it is the same entity? What information do you need in order to select it, when you’ve changed notation? What needs to be added to its history and its relationships – its definition of ‘tell me about yourself’ / ‘tell me what you’re associated with’ – as you use it for sense-making and decision-making within each of those notations?
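One possible way to picture an entity moving between notations, as a Python sketch. The `namespace:role` prefix convention, the `place` and `view` helpers, and the example values are all assumptions made up for illustration; the only idea taken from the discussion is that the entity keeps one identity while each notation adds its own related motes.

```python
from dataclasses import dataclass, field
from typing import Any
import uuid


@dataclass(eq=False)
class Mote:
    """Hypothetical four-part mote: id, role, parameter, related-mote list."""
    role: str
    parameter: Any = None
    related: list["Mote"] = field(default_factory=list)
    id: str = field(default_factory=lambda: uuid.uuid4().hex)


def place(entity: Mote, namespace: str, role: str, value: Any) -> None:
    """Record notation-specific information as a namespaced related mote."""
    entity.related.append(Mote(role=f"{namespace}:{role}", parameter=value))


def view(entity: Mote, namespace: str) -> list[tuple[str, Any]]:
    """Return only the related motes that belong to one notation's namespace."""
    prefix = namespace + ":"
    return [(m.role, m.parameter) for m in entity.related if m.role.startswith(prefix)]


# One entity, created once, then used in three notations.
idea = Mote(role="entity", related=[Mote(role="label", parameter="Run tubemapping workshops")])
original_id = idea.id

place(idea, "mindmap", "parent-topic", "Promotion")
place(idea, "bmc", "building-block", "Key Activities")
place(idea, "tubemap", "station-on-line", "Idea to running business")

print(view(idea, "bmc"))        # [('bmc:building-block', 'Key Activities')]
print(idea.id == original_id)   # True
```

The un-namespaced `label` mote is the part carried between notations; the namespaced motes are the parts specific to one notation only.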

It would certainly help me if you could play with that for a while, and report back…? Many thanks for all you’ve done so far, anyway – much appreciated!

@Robert Phipps – Robert, following the assembly-language metaphor again:

– the mote-ID is a globally-unique instance-ID (not an opcode-ID or class-ID)

– the mote-role is the op-code

– each op-code in the assembly-language supports one parameter-by-value (in effect optional, because it may be set to null/NaN etc)

– each op-code supports any number of parameter-by-reference (i.e. pointers to other motes, via the related-mote list)

Perhaps a closer metaphor would be a kind of mix between assembly-language and the structure of FORTH (see http://en.wikipedia.org/wiki/Forth_(programming_language) ). In FORTH, everything is just a word (an opcode) or a parameter-value, and we define higher-level functionality by accreting other words or parameters onto a word. In the same way here, everything is a mote; a mote that has higher-order meaning and functionality (such as an Entity or Relation, or something built on top of those structures) is still just another mote, but has accreted more functionality via its related-mote list. A really simple mote such as a tag might just contain its parameter-value and nothing else; by contrast, an Entity-mote might have a large related-mote list, many of which motes themselves have large related-mote lists, and so on recursively. (There’ll be a need to watch for the risk of circular-references, but the same risk occurs in FORTH and many other programming-languages.)
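To make the metaphor a little more tangible, here is a minimal sketch in Python. It assumes only what is described above: a unique instance-ID, a role, one optional parameter-by-value, and a related-mote list. The names `Mote`, `walk` and the simple counter used for IDs are illustrative assumptions, not a proposed implementation.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Any, Iterator, List, Optional, Set

# Stand-in for a real globally-unique ID generator (assumption for the sketch).
_next_id = count(1)


@dataclass(eq=False)  # identity comparison only, so circular links are harmless
class Mote:
    role: str                     # the 'op-code'
    value: Any = None             # the single parameter-by-value (may be null)
    related: List["Mote"] = field(default_factory=list)  # parameters-by-reference
    mote_id: int = field(default_factory=lambda: next(_next_id))


def walk(mote: Mote, seen: Optional[Set[int]] = None) -> Iterator[Mote]:
    """Yield a mote and everything reachable from it, visiting each mote once.

    The 'seen' set is the guard against circular references.
    """
    if seen is None:
        seen = set()
    if mote.mote_id in seen:
        return
    seen.add(mote.mote_id)
    yield mote
    for other in mote.related:
        yield from walk(other, seen)


# A simple tag holds just a value; an entity accretes meaning via related motes.
tag = Mote(role="tag", value="customer-facing")
label = Mote(role="label", value="Customer Segment")
entity = Mote(role="entity", related=[label, tag])
tag.related.append(entity)  # deliberate circular reference

roles = [m.role for m in walk(entity)]
```

Even with the deliberate loop between `tag` and `entity`, the walk terminates and visits each mote exactly once.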

As for your comments about MOF layering etc, it’s obvious I need to have another go at the MOF spec, but I’ll admit I’m struggling there… 🙁 I do understand that to make something simple, there’ll always be some real complications hidden beneath the surface; but I must admit I do wonder whether it really does need to be as complicated in description as MOF seems to make it… ::sigh::

Thanks again, anyway – and hope this makes some sort of sense, and not hopelessly naive on my part?

@Tom G

Thanks Tom,

I started at the highest level because I needed some confirmation that I was using the mote concept correctly. From your answer I understand that I am probably on the right track 🙂

So now I will work downwards to the root and sidewards, as you ask, to share concepts between the different models. That was precisely the reason for adding this Venn Diagram, because I want to play with the sets/zones in relation to the customer segments of the business model canvas. So stay tuned!

@Peter Bakker – Great: many thanks!

(Note that Venn Diagram is another model-type to map out in this form, by the way… 🙂 )

@Tom G

Hi Tom,

Because I’m dealing with a paper prototype, and it becomes too messy and time-consuming to deal with loose papers, I’ve decided to try a kind of tubemap approach. So for the next version of the prototype I made the design decision that all connections (by relation or through the use list) are bidirectional/two-way. This means that, from a mote’s point of view, every mote that I’m associated with is also associated with me (both can see each other and use each other’s parameter-value).

Again, this is just to keep the visualization of the paper prototype simple.
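As a small sketch of that two-way rule (the class `MoteGraph` and its method names are hypothetical, chosen only for illustration): every association is stored on both sides, so each mote can see the other and read its parameter-value.

```python
from collections import defaultdict
from typing import Any, Dict, Set


class MoteGraph:
    """Hypothetical store in which every association is two-way."""

    def __init__(self) -> None:
        self.values: Dict[str, Any] = {}
        self.links: Dict[str, Set[str]] = defaultdict(set)

    def add(self, mote_id: str, value: Any = None) -> None:
        self.values[mote_id] = value

    def associate(self, a: str, b: str) -> None:
        """Link a and b in both directions; both motes must already exist."""
        for mote_id in (a, b):
            if mote_id not in self.values:
                raise KeyError(f"unknown mote: {mote_id}")
        self.links[a].add(b)
        self.links[b].add(a)

    def visible_values(self, mote_id: str) -> Dict[str, Any]:
        """Parameter-values of every mote associated with the given mote."""
        return {other: self.values[other] for other in sorted(self.links[mote_id])}


g = MoteGraph()
g.add("segment", "Online shoppers")
g.add("channel", "Web store")
g.associate("segment", "channel")

from_segment = g.visible_values("segment")
from_channel = g.visible_values("channel")
```

One call to `associate` is enough for both motes to see each other, which is what keeps the paper version simple.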

Hi Tom,

I’m going to admit that all along, as I’ve read your posts, I’m seeing you develop the MOF all over again.

Now, to be fair, the MOF was designed to allow the M1-M4 levels to share a much richer model than you need to use… but that’s not a problem. By way of metaphor, you need transportation, and there’s a Toyota dealership down the street… just because a Model-T is simpler, that doesn’t mean you should invent it.

That said, I’d like to separate out the model from the tool. Just because you can use the MOF to capture the data, that doesn’t mean that the tool you wish to develop already exists. The tool, AFAIK, does not exist.

Most of the UML modeling tools on the market do use the MOF under the covers, but they layer on a rich layer of modeling and diagramming metaphors which make your ideas unwieldy. I would not suggest that the existing set of tools gets it right from an EA standpoint, and I think you can improve upon it by creating a rich toolset that allows the kind of modeling that EA requires.

That said, you get some very real benefits if you model your underlying data structure on “Complete MOF.” First and foremost, folks using your tool would be able to quickly export data and import it into existing modeling environments (like EA tools as well as UML modeling tools). That means that your tool can focus on EA modeling and let the existing tools focus on rich graphics.

Good Luck,

— Nick

@Nick Malik – Nick, “I’m seeing you develop the MOF all over again” – yes, as I commented somewhere earlier above, that’s exactly what I’m afraid I’ve done… 🙁

Oh well. The one (possible) advantage is that I’ve gotten to that point from first-principles, looking at EA-related modelling in general. If it turns out that ‘Complete MOF’ is the way we ought to go, then that’s what we ought to do. At least we’ll have gotten there not simply by grabbing at MOF straight from the start, but by a parallel process of exploration that happened to arrive at the same place and found that MOF was already there (and worked on by better minds than mine, I’m sure! 🙂 )

You say, “They [the UML tools] layer on a rich layer of modeling and diagramming metaphors which make your ideas unwieldy”. There are two points I’m somewhat confused by in that sentence. One is that one of the problems I’ve been seeing is that the UML tools seem to have too much of a “rich layer of .. metaphors”, in that they really seem to constrain what we can and can’t do: we need a toolset that can go much, much broader and more versatile than simply the UML/BPMN cabal. And I don’t understand what you mean by “make your ideas unwieldy” – what do you see that I’ve gotten wrong relative to UML etc?

In any case, the exact structure of the metamodel is not really my core concern: I only developed (or roughed-out, rather) the model-structure to give something that people could argue about, rather than going round and round in circles with arbitrary abstracts. My real concerns are threefold:

– this concept that entities and their relations should be able to move between model-types and notations in a much freer way than is currently possible, and be able to answer those questions about “tell me about yourself” and “tell me what you’re associated with”;

– a toolset that can support just about any notation we throw at it (including user-defined notations), and be able to move entities and relations between the different models;

– a non-proprietary file-format that supports both of those requirements, and can move both model-data (entities, relations etc) and notations between toolset-instances.

That’s the real aim here. The metamodel-structure was just a ‘thought-experiment’ towards that aim. When I last looked at MOF, a year or so back, it did seem way too unwieldy and cumbersome to do that – but I’m probably wrong about that, as I am about many other things as well. 🙂 Obviously I need to look again, don’t I? 🙂
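Purely as an illustration of the third concern, and not as a proposal for the actual format, a non-proprietary exchange file could be as plain as JSON text in which motes refer to each other by ID and notations travel alongside the model-data. Everything named here (`export_model`, `import_model`, the `"mote-sketch/0"` label, the field names) is an assumption made up for the sketch.

```python
import json
from typing import Any, Dict, List, Tuple

Motes = List[Dict[str, Any]]
Notations = Dict[str, Any]


def export_model(motes: Motes, notations: Notations) -> str:
    """Serialise model-data and notation definitions to plain JSON text."""
    doc = {"format": "mote-sketch/0", "notations": notations, "motes": motes}
    return json.dumps(doc, indent=2, sort_keys=True)


def import_model(text: str) -> Tuple[Motes, Notations]:
    """Load a file written by export_model, rejecting dangling references."""
    doc = json.loads(text)
    known_ids = {m["id"] for m in doc["motes"]}
    for m in doc["motes"]:
        missing = [r for r in m.get("related", []) if r not in known_ids]
        if missing:
            raise ValueError(f"mote {m['id']} refers to unknown motes: {missing}")
    return doc["motes"], doc["notations"]


# Hypothetical sample data: one entity, one label, one user-defined notation.
sample_motes = [
    {"id": "m1", "role": "entity", "value": None, "related": ["m2"]},
    {"id": "m2", "role": "label", "value": "Customer Segment", "related": []},
]
sample_notations = {"bmcanvas": {"entity_roles": ["entity"], "blocks": 9}}

text = export_model(sample_motes, sample_notations)
loaded_motes, loaded_notations = import_model(text)
```

Because the file is just text with IDs and references, a second toolset-instance can read back exactly what the first one wrote, including the notation definitions.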

But thanks, anyway – does help a lot.

Now I’ve played a bit with Tom’s idea I think the goal or subject of what we are trying to do is not metamodeling (on whatever level). For me the goal is not to make a tool to make or adapt other models but to gain insights from a diversity of models together which you won’t get from looking at the models separately.

For that we need a sense-making tool which can translate the information from all those different senses (models) into a common “brain language” and use that common “brain language” to create new insights (patterns).

To speak with Einstein: We cannot solve our problems with the same thinking we used when we created them.

So forget about existing metamodeling tools/languages/standards and help us create a new way of seeing!

This is of course my personal view, Tom may feel totally different about this 🙂

@Peter Bakker

To be clear: Tom’s comment was not yet visible when I started to type mine 🙂

@Peter Bakker – Re “Tom may feel totally different about this”.

Short answer: I don’t. What you’ve summarised there is what I call ‘context-space mapping’ – perhaps have a wander through some of the posts here that reference that topic? 🙂

“To gain insights from a diversity of models together” is also a main focus of my book ‘Everyday Enterprise-Architecture‘, of which you can still download a PDF of the whole content for free from here.

In many ways that’s what all this is about; that’s why I’ve been pushing so hard in this direction. I’m just glad to see that it makes sense to other people too. 🙂

@Tom G

I downloaded the book a few weeks ago but it is still on my “to read” list with a bunch of other books. Too busy with tubemapping and playing with your ideas 🙂

@Tom G

Your saying “I’m just glad to see that it makes sense to other people too.” made me think of:

We’re just two lost souls

Swimming in a fish bowl,

Year after year,

Running over the same old ground.

What have we found?

The same old fears.

Wish you were here.

Hope other people will help us before we end as lost souls…

Tom, my comments re MOF were only that your Mote did not seem to be as high up the meta-tree as you had suggested, but this is neither here nor there. I guess I took the centre of the discussion to be about which level of abstraction the mote belonged to, which I now think, in retrospect, is NOT a particularly central question.

The aim of MOF is to support interoperability between disparate representations, but your Mote is an implementation primitive, which as such can be as simple or as complex as necessary to meet the requirements of the task at hand. Implementation primitives would hardly ever be representational primitives (except perhaps at the bit/opcode/quantum/elemental level!!). If the implementation primitive is simple, then all the smarts are in the arrangement. If the implementation primitive is complex and therefore smarter, then some of the smarts can live there and some in the arrangement.

I think that perhaps my rant on MOF was a bit peripheral to your main concern. Your EA repository will need to be able to compose motes (of whatever structure) together and deal with the composite as a unit, and it is the ability to abstract that is most important. You certainly don’t want to be dealing at the “cellular” level all the time. Cells compose into tissue, which coalesces into functional blocks >> some of which constitute organs >>> and so on (to extend the biological/physiological metaphor).

Anyway, love the topic, thanks for hosting this most interesting thread.

Regards, Robert

@Peter Bakker

After a good night’s sleep I don’t think that my earlier phrase “common brain language” is really a good description of what we are trying to do. A better comparison would be the nervous system. If you watch the first 2 minutes of this video http://www.youtube.com/watch?v=CI5OGLv1t_w then you should understand why 🙂

@Tom G

Apology accepted Tom. I’ve been following comments for the last few days, I just haven’t had any further constructive input that hasn’t been covered by others.