Decision-making – linking intent and action [2]

How is it that what we actually do in the heat of the action can differ so much from the intentions and decisions we set beforehand? How can we bring them into better alignment, to ‘keep to the plan’? And how does this affect our enterprise-architectures?

This is Part 2 of this exploration: the first part is in the post ‘Decision-making – linking intent and action [1]’. (Once again, please note that this is ‘work-in-progress’, so expect rough-edges and, uh, partly-baked ideas in various places.)

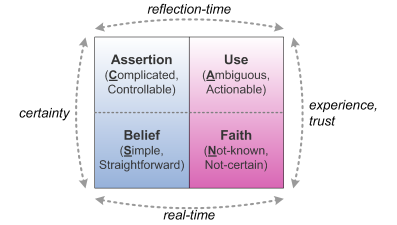

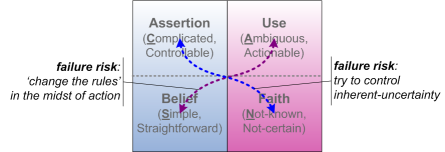

What we ended up with the previous post is that we what we do want is strong ‘horizontal’ connections across the modalities at the same time-distance to action, and strong ‘vertical’ connections across the time-scales at the same modality:

What we usually don’t want – unless intentionally, and with considerable extra care – is ‘diagonal’ connections across both timescale and modality in the same link:

The key point for architecture is that at the moment of action, no-one has time to think. Hence everything that we build in the architecture to support real-time action also needs to support the right balance between rules and freeform, belief and faith, in line with what happens in the real-world context.

It needs to ensure that we have the right sets of rules for action when rules do apply, and the right experience such that the fallback into faith is as effective as possible whenever the rules don’t apply.
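As a minimal sketch of that balance, assuming situations arrive as simple key/value records: the hypothetical `decide` function below applies the first rule whose condition matches, and only when no rule applies does it fall back to a set of principles. The rule format, the `decide` name and the example rules are all illustrative assumptions, not part of any particular framework.

```python
from typing import Callable

# Hypothetical rule format: a condition on the situation, plus the action to take
Rule = tuple[Callable[[dict], bool], str]


def decide(situation: dict, rules: list[Rule], principles: list[str]) -> tuple[str, str]:
    """Return (mode, guidance): a rule-based action if one applies, else principles."""
    for condition, action in rules:
        if condition(situation):
            # A rule applies: act on it directly, with no time needed to think
            return ("rule", action)
    # No rule applies: fall back on principles, the anchor for 'faith'-type decisions
    return ("principle", "; ".join(principles) or "no guidance available")


# Illustrative rules and principles for a hypothetical production line
rules: list[Rule] = [
    (lambda s: s.get("temperature", 0) > 80, "shut down line"),
    (lambda s: s.get("pressure", 100) < 10, "open relief valve"),
]
principles = ["safety first", "protect the customer"]

print(decide({"temperature": 95}, rules, principles))  # ('rule', 'shut down line')
print(decide({"smell": "burning"}, rules, principles))  # ('principle', 'safety first; protect the customer')
```

Rule order matters in this sketch: the first match wins, so it assumes rules are listed from most to least specific.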

What this implies is that, within the architecture, we’ll need to include:

- services to support each sensemaking/decision-making ‘domain’ within the frame

- services to support the ‘vertical’ and ‘horizontal’ paths within the frame

- governance (and perhaps also services) to discourage the use of ‘diagonal’ paths within the frame
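One way to make the ‘horizontal’, ‘vertical’ and ‘diagonal’ distinction checkable in architecture tooling is to give each domain a position on two axes, modality (order/unorder) and time-distance to action, and then classify each link by which axes it crosses. The sketch below assumes just two time-distance bands and places only the sensemaking domains; the decision-making domains (Belief, Assertion, Use, Faith) could be placed the same way. All names here are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    ORDER = "order"      # left-side of the Inverse Einstein test
    UNORDER = "unorder"  # right-side of the Inverse Einstein test


class Timing(Enum):
    REAL_TIME = "real-time"
    DISTANT = "distant-from-action"


@dataclass(frozen=True)
class Domain:
    name: str
    modality: Modality
    timing: Timing


# Sensemaking domains, placed as described in this post
SIMPLE = Domain("Simple", Modality.ORDER, Timing.REAL_TIME)
COMPLICATED = Domain("Complicated", Modality.ORDER, Timing.DISTANT)
AMBIGUOUS = Domain("Ambiguous", Modality.UNORDER, Timing.DISTANT)
NONE_OF_THE_ABOVE = Domain("None-of-the-above", Modality.UNORDER, Timing.REAL_TIME)


def classify_link(a: Domain, b: Domain) -> str:
    """Classify a link between two domains within the frame."""
    same_modality = a.modality == b.modality
    same_timing = a.timing == b.timing
    if same_modality and same_timing:
        return "same-position"
    if same_timing:
        return "horizontal"  # across modalities, same time-distance to action
    if same_modality:
        return "vertical"  # across time-scales, same modality
    return "diagonal"  # changes both at once: needs extra care


def flag_diagonals(links: list[tuple[Domain, Domain]]) -> list[tuple[str, str]]:
    """Return the links that governance should review before they are relied on."""
    return [(a.name, b.name) for a, b in links if classify_link(a, b) == "diagonal"]


links = [(SIMPLE, COMPLICATED), (COMPLICATED, AMBIGUOUS), (COMPLICATED, NONE_OF_THE_ABOVE)]
print(flag_diagonals(links))  # [('Complicated', 'None-of-the-above')]
```

The flagged link is the one the post warns about: carrying Complicated, distant-from-action analysis straight into real-time action under inherent uncertainty.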

It also implies the need for a radical rethink of ‘command and control’ as a management-metaphor, which is where we finished in the previous post. What we’ll turn to here are the other items in that list immediately above.

Before we start, though, one important point to note: all of this is recursive. For sanity’s sake, I’ll need to keep things as Simple as possible here, using bullet-point lists and the like: but in reality all of it is also Complicated, Ambiguous and None-of-the-above – and each of those aspects likewise has components that are simple, not-so-simple and so on. It’s clear-cut and simple, and it’s blurry and messy – all of it recursive, ‘self-similar’ and different, all at the same time. Which gets more than a bit complicated or complex or even chaotic if we try to describe it all in one go…

So for now I’ll take the easy way out: I’ll aim for just a brief-as-I-can-make-it summary, and go into more detail where necessary in later posts. Or you can ask for clarification in comments here: it’s up to you. Point is that, of necessity, this is only scratching the surface: I’m well aware that it ain’t as Simple as I may make it seem, and I’ll trust that you’re aware of that too.

On services to support each domain:

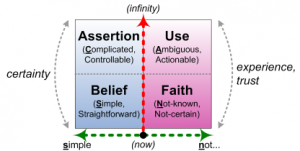

For this section we’ll explore both sensemaking (left) and decision-making (right) together:

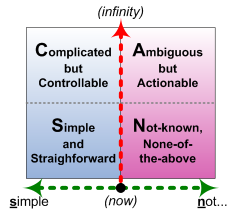

In both cases, the domains here split into two distinct sets, ‘horizontally’ on either side of the Inverse Einstein test:

- on the left-side (‘order’), our sensemaking and decision-making tactics (Simple / Complicated, Belief / Assertion) assume that things are predictable – and hence that doing the same thing should lead to the same result

- on the right-side (‘unorder’), our sensemaking and decision-making tactics (Ambiguous / Not-known, Use / Faith) assume that things may not be predictable – and hence that doing the same thing may lead to different results, or achieving the same results may require doing different things
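The first half of that distinction can be illustrated with a minimal sketch, assuming an action can be repeated cheaply: run the same action several times and compare the outcomes. The hypothetical `inverse_einstein_test` function below checks only ‘same action, same result?’; it does not test the converse case where the same result requires different actions, and a finite number of trials can fail to find variation without proving that none exists.

```python
import itertools
from typing import Any, Callable


def inverse_einstein_test(action: Callable[[], Any], trials: int = 10) -> str:
    """Repeat the same action and compare outcomes.

    Identical outcomes are consistent with 'order'; any variation means 'unorder'.
    """
    if trials < 1:
        raise ValueError("trials must be at least 1")
    outcomes = [action() for _ in range(trials)]
    return "order" if all(o == outcomes[0] for o in outcomes) else "unorder"


print(inverse_einstein_test(lambda: 2 + 2))  # order

counter = itertools.count()
print(inverse_einstein_test(lambda: next(counter)))  # unorder: same action, different results
```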

The vertical distinctions between the domains are often rather more subtle, but it’s crucial that our architecture does provide support right down to the exact moment of action. We need to make a point of this, because there’s an all too common tendency to assume that what works well distant-from-action – Complicated analysis and Complex experimentation, for example – will also work well at the point of action. Yet as the old joke warns us:

In theory there’s no difference between theory and practice. In practice, there is.

‘Distant-from-action’ and real-time action are related, yet qualitatively different, in much the same way as Newtonian physics differs from quantum-physics. Hence the need for pairs of domains in the ‘vertical’ dimension as well.

So: order-domains:

What support do you have for Simple sensemaking: ordered, ‘controlled’, at real-time? What kinds of sensemaking are needed within the work at or close to the exact moment of action?

- examples: checklists, comparison-charts, mechanical sensors, real-time signals

What support do you have for Complicated sensemaking: ordered, ‘controlled’, predictable, but some distance away from real-time – either before the event as preparation, or after it, to make sense of what happened? What different types of support do you need for different ‘distances’ from real-time, from seconds to minutes to hours to days to months to years to decades and beyond?

- examples: analytics, dashboards, computational filters, aggregation
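As a small illustration of ‘aggregation’ at different distances from real-time, the hypothetical `aggregate` function below averages timestamped sensor readings into fixed windows, so that the same data can be viewed per minute or per hour. This is a sketch under the assumption that timestamps are integer seconds; real analytics platforms will differ.

```python
from collections import defaultdict
from statistics import mean


def aggregate(readings: list[tuple[int, float]], window_seconds: int) -> dict[int, float]:
    """Average (timestamp, value) readings into fixed windows.

    Larger windows trade immediacy for a broader view: one way of supporting
    sensemaking at different 'distances' from real-time.
    """
    buckets: dict[int, list[float]] = defaultdict(list)
    for timestamp, value in readings:
        window_start = (timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return {start: mean(values) for start, values in sorted(buckets.items())}


readings = [(0, 10.0), (30, 20.0), (60, 30.0), (3600, 40.0)]
print(aggregate(readings, 60))    # {0: 15.0, 60: 30.0, 3600: 40.0}
print(aggregate(readings, 3600))  # {0: 20.0, 3600: 40.0}
```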

Going back the other way, from sensemaking to decision-making:

What support do you have for Assertion-based decision-making: decisions that assume the existence of order, ‘control’, predictability, yet also are some distance from – usually prior to – the moment of action? What different types of support are needed over the different timescales that we might describe as strategic, tactical and operational?

- examples: algorithms, hard-systems theory, computational or business-rules IT-systems

What support do you have for Belief-based decision-making: real-time decisions based on certainty, on rules, on assumed predictability? In what ways does this decision-making differ when there’s no time to think, no separation between decision and action?

- examples: rule-sets, rote-learning, step-by-step checklists and work-instructions, physical machines, real-time IT

And: unorder-domains:

What support do you have for Ambiguous sensemaking-contexts: some distance from the action, yet still known-uncertain? What different types of support do you need before and after action, and for different ‘distances’ from real-time?

- examples: experimentation, pattern-matching, statistics, trend-analysis, futures techniques, crowdsourcing
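Trend-analysis is one of the simpler of these to sketch. The hypothetical functions below fit a least-squares slope to a series of observations and label its direction; the `tolerance` below which a trend counts as ‘flat’ is an arbitrary assumption. In an Ambiguous context the result indicates a direction to explore, not a prediction.

```python
def trend_slope(values: list[float]) -> float:
    """Least-squares slope of values against their position in the sequence."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    denominator = sum((x - x_mean) ** 2 for x in range(n))
    return numerator / denominator


def describe_trend(values: list[float], tolerance: float = 0.05) -> str:
    """Label the direction of a trend; small slopes are treated as 'flat'."""
    slope = trend_slope(values)
    if slope > tolerance:
        return "rising"
    if slope < -tolerance:
        return "falling"
    return "flat"


print(describe_trend([12, 15, 14, 18, 21]))  # rising
```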

What support do you have for None-of-the-above sensemaking-contexts: right at the moment of action, yet inherently uncertain in some or all aspects? What kinds of sensemaking need to take place here?

- examples: listening, ‘flow’, managing panic, social structures for ‘safe to fail’

(Note that most of that last set of examples address not so much the sensemaking itself as the provision of appropriate conditions for real-time sensemaking in inherent-uncertainty.)

From sensemaking to decision-making:

What support do you have for Use-based decision-making: decisions that are some distance from the action, yet do not assume certainty or predictability? What different types of support are needed over the various timescales of distance-from-action?

- examples: patterns, guidelines and values, soft-systems theory, prioritisation, probability and necessity (modal-logic), social methods (from meetings to voting-systems etc)
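Prioritisation is one of the listed examples that lends itself to a short sketch. Assuming options can be scored against a small set of weighted values, the hypothetical `prioritise` function below ranks them by weighted sum. The ranking is guidance for a Use-based decision rather than a certain answer, and the weights themselves remain judgement calls.

```python
def prioritise(options: dict[str, dict[str, float]],
               weights: dict[str, float]) -> list[tuple[str, float]]:
    """Rank options by a weighted sum of their scores against shared values."""
    ranked = []
    for name, scores in options.items():
        # Criteria with no agreed weight contribute nothing to the total
        total = sum(weights.get(criterion, 0.0) * score for criterion, score in scores.items())
        ranked.append((name, total))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


weights = {"safety": 3, "customer-value": 2, "cost": 1}  # hypothetical value-weights
options = {
    "repair now": {"safety": 3, "customer-value": 1, "cost": 1},
    "defer repair": {"safety": 1, "customer-value": 2, "cost": 3},
}
print(prioritise(options, weights))  # [('repair now', 12), ('defer repair', 10)]
```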

What support do you have for Faith-based decision-making: decisions that must be made in the heat of the action in the midst of inherent-uncertainty?

- examples: principles (i.e. actionable values), skills and experience, context-design to maximise safe-fail or ‘graceful failure’, trust in ‘that which is greater than self’

(That last item is by far the hardest to describe, but it’s a key reason why I use the term ‘Faith’ here. I suppose this might perhaps be a kind of ‘hive-mind’ effect, but the point is that decisions here will often carry a feeling of ‘it was the right thing to do’, an ‘intuitive’ decision that aligns with a broader collective-purpose without conscious knowledge or certainty of how it does so. Deep familiarity with shared principles and values is a known key driver and anchor for this type of decision-alignment – hence their importance as and at the core of an enterprise-architecture.)

Review those lists above: which of those items would you currently include in your enterprise-architecture or process-architecture? Most conventional architectures will describe only the left-side (‘order’) items – yet all of these forms of support will need to be in place for the enterprise and its architecture to work well. Note any gaps in the architecture and, even more important, any gaps in support; and then move on.
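Where an architecture inventory is kept in a structured form, part of that review can be automated. A minimal sketch, assuming a hypothetical inventory that maps each domain name to the supports currently in place: `find_gaps` lists the domains that have nothing at all. It can only find empty domains; it cannot judge whether the support that is listed is adequate.

```python
# The eight domains discussed in this post: four sensemaking, four decision-making
DOMAINS = [
    "Simple", "Complicated", "Ambiguous", "None-of-the-above",
    "Belief", "Assertion", "Use", "Faith",
]


def find_gaps(inventory: dict[str, list[str]]) -> list[str]:
    """Return the domains for which the inventory lists no support at all."""
    return [domain for domain in DOMAINS if not inventory.get(domain)]


# A hypothetical inventory for a conventional, 'order'-only architecture
inventory = {
    "Simple": ["checklists", "real-time signals"],
    "Complicated": ["dashboards", "analytics"],
    "Belief": ["work-instructions", "real-time IT"],
    "Assertion": ["business-rules engine"],
}
print(find_gaps(inventory))  # ['Ambiguous', 'None-of-the-above', 'Use', 'Faith']
```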

In the next part of this series we’ll explore the architecture of how we link all these domains together. Any questions for now, though? Over to you, anyway.

Very interesting post. I have been pondering similar questions on how to place Decision Science at the centre of Enterprise Architecture. A not terribly elegantly named high-level view is given in the first article.

http://stevenimmons.org/2012/01/the-centrality-of-decision-science-in-enterprise-architecture/

http://stevenimmons.org/2011/12/decision-rethinking-enterprise-architecture/

Steve – good points there in those two articles – many thanks.

“The weakness of many Enterprise Architecture frameworks is that they do not recognise the fundamental connection to the decision making process.” (from the second article) – I agree strongly with the distinction you draw between information-systems and (computer-based) information-technology. I also very strongly agree with you on the importance of linking EA to decision-making – otherwise what’s the point, frankly? 🙂

The only point where I’d disagree with you somewhat is on describing it as ‘decision-science’ – I’d say it’s actually more a decision-technology that we need, i.e. a merging and balancing of both the science and the art of decision-making, rather than solely the science.

But otherwise yes, well put – thanks again.

@Tom G

Thanks Tom, I previously referred to it as Decision Theory, which sounded rather tenuous. I agree that it is probably something more of an amalgam of theory, science and practice (i.e. decision technology). The technology dimension needs to be in place, be that a decision-support system or an aspect of command-and-control systems.

@Steve Nimmons – Given this, I’ll perhaps have to correct myself, since I used the term ‘decision-physics’ in the subsequent two parts in this series… oops…

(Though actually that’s perhaps still okay, if we consider that the metaphoric ‘decision-physics’ is the underlying mechanics of a decision-technology?)

But your summary above is probably the best way to put it, anyway: “something more of an amalgam of theory, science and practice”.