On sensemaking in enterprise-architectures [2]

How do we make sense of uniqueness? How can we make sense of what’s happening at the exact moment of action?

In the previous post in this series, I looked briefly at Boisot’s I-Space – promoted by some as ‘the answer’ to everything in the information-space – and discovered that, useful though it may be for other types of sensemaking, it doesn’t really address this specific challenge of uniqueness.

[If you’re interested in Boisot, I understand that Cognitive Edge have just released a selection of his blogs on their site as a stand-alone document – check it out, perhaps?]

I then delved into a bit of my own history to identify the key theme for me here: the interaction between the individual and the context, in the moment of enacting some form of skill. In other words, uniqueness in practice.

So why is this relevant to enterprise-architecture? The last part of that post gave a brief overview of some of the reasons why uniqueness – and the balance between sameness and uniqueness – is right at the core of business-models, business-architecture, risk/opportunity management, operational excellence and many other key concerns in enterprise-architectures and the like.

So let’s keep digging a bit deeper here, to explore what we can use for sensemaking in that context of uniqueness.

Another brief aside on Boisot

Two more points came up in that brief exploration of Boisot.

One is that the core problem that he was tackling in most of the articles and other items that I found was a specific aspect of economics: the perceived-value of a portfolio of ‘knowledge-assets’, and their profit-potential in terms of utility versus scarcity – leading to strategies such as hoarding versus sharing, or Neoclassical (‘N-Learning’) versus Schumpeterian (‘S-Learning’). That’s obviously going to be important in a possession-economy, where ideas and information are treated as personal possessions, and where information-hiding and price/value mismatch are crucial to the viability of many business-models – however questionable those might be in terms of business-ethics and the like. Yet the practical problem for enterprise-architectures is that within the organisation, we need a functional economy – ‘the management of the household’ – not a dysfunctional one: which means that the economics-models we need are responsibility-based, not possession-based. Hence for most architecture-purposes, Boisot’s business-focus, interesting though it may be, is likely to be more of a hindrance than a help, relative to what we actually need in practice.

The other point is that, whilst the Social Learning Cycle (SLC) does indeed touch that instant of uniqueness – tacit-knowledge at the moment of action – it seems to skim over it as of almost no relevance. (It’s Step 1, ‘Scanning’, in the SLC diagram above.) I see exactly the same happening in Cynefin, which is based in part on Boisot: there’s the same apparent avoidance of the uncertain, the same rush to the ‘more interesting’ areas of complex-adaptive-systems and the like, represented in Boisot’s SLC as the early stages of codification, abstraction and knowledge-sharing (Steps 2, 3 and 4). To me this is like saying that the only thing that matters in a football game is the final score, and ignoring everything about the individual moments and individual skills from which that score actually arises. Sure, the score may be of interest to someone who wasn’t personally involved in the action: but to those who are involved, it’s the sense-making and decision-making at the moment of action that matters most.

Hence, in an enterprise-architecture, we need to support that kind of ‘business-speed’ sense-making and decision-making – because that ‘irrelevant’ moment-of-action is where everything happens, where every choice and every part of preparation becomes a concrete fact. The crucial point is that whilst there’s time for abstractions before that moment, and time after it, within that moment there’s no time for abstractions at all.

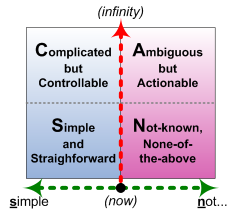

To describe this in SCAN terms, look first at the core graphic:

Then remember that the vertical axis is available-time – the time we have available to us before a choice must be made and expressed in immediate action. When time gets compressed to nothing – as by definition it must, at the moment of action – we’re left with only the single ‘horizontal’ dimension of decision-logic. In visual form:

So at that brief moment, all previous analysis and experimentation – the entirety of the Complicated and the Complex, to use the SCCC terms – will likely become unavailable, because there’s no time for it, or no time to access it. Analysis and experimentation can be useful afterwards, of course – but only after that crucial ‘turning-point’, the moment of choice, the moment of action. Within that moment, everything is stripped down to the bare bones: which leaves us only the Simple, and… something else. A ‘something else’ that’s often misdescribed as ‘the Chaotic’, but is better understood as an explicit ‘Not-Simple’, an explicit ‘None-of-the-above’.
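One way to make that time-compression concrete is a toy model in which each decision-method needs some minimum deliberation time before it can give a usable answer. The method names follow the SCAN terms above, but the numeric thresholds and the `available_methods` function are purely illustrative assumptions, not part of SCAN itself: the only point is that as available time shrinks towards zero, Complicated and Complex drop out, leaving just Simple and Not-Simple.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    name: str
    min_time_s: float  # assumed minimum deliberation time, in seconds


# Illustrative thresholds only (hypothetical values, not from SCAN):
# Simple (rules, checklists) and Not-Simple (None-of-the-above) work at
# the moment of action; analysis and experimentation need time.
METHODS = [
    Method("Simple", 0.0),
    Method("Complicated", 60.0),
    Method("Complex", 600.0),
    Method("Not-Simple", 0.0),
]


def available_methods(time_available_s: float) -> list[str]:
    """Return the names of the methods that fit within the time available."""
    return [m.name for m in METHODS if m.min_time_s <= time_available_s]


if __name__ == "__main__":
    for t in (3600.0, 120.0, 0.0):
        print(t, available_methods(t))
```

With an hour to spare all four methods are open; with two minutes, experimentation has gone; at the moment of action itself, only `["Simple", "Not-Simple"]` remain.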

We know how to do Simple. That’s not in question. Yet that ‘something else’ is, well, something else…

And one of the few things we know about that ‘something else’ is that tools which focus on other domains – such as complex-adaptive-systems and the like – are going to be no help there at all. For useful insights on that kind of sense-making, we need to look elsewhere.

Sense-making in real-time

Let’s put this into a real-world context.

You’re an airline pilot, on yet another scheduled flight in a twin-jet airliner, 150 passengers on board, five crew. Routine, everyday, nothing unusual about it at all. You’re still in the initial fast climb out of the airport, full thrust but barely above stall-speed, not yet three minutes from takeoff, five miles out, 3000 feet up. And without any warning at all, you hear several loud thuds, the windshield turns brown, both engines go silent. Yep: you’re in trouble… big trouble…

Remember when they told you that an airline-pilot’s life is years of tedium separated by moments of terror? Well, this is one of those moments…

The plane’s still flyable, sort-of: no fatal damage in that sense, at least. But you know that this thing is going down – no choice about that at all. And soon, too: at this height, you’ll be lucky to have even three minutes’ flight-time left. That’s maybe ten miles at most. But you really, really do want to have some choice as to where and how this thing comes down – because if you get it wrong, here above a city that stretches as far as the eye can see, it’s not just you and your passengers that are going to die. This is a city that knows only too well just how much death and destruction a fully-laden airliner can cause…

What happens now depends on what you choose to do right now. So what do you do right now?

This is the real-life story of US Airways Flight 1549, of course. What Capt Sullenberger and his crew chose to do is a matter of public record: the (necessarily-terse) communication with air-traffic control, for example, and the relatively-happy ending of the story in the middle of the Hudson River, with all passengers and crew safe, rescued by ferry-boats mere minutes later.

Just remember that this story could so easily have had a very different ending… So it’s worth exploring what went right that day – and the architecture for real-time sense-making and decision-making that made that outcome possible.

The first point is this: there’s no time to stop and think. Whatever analysis we do here is going to be minimal at best; any experiments that we can do in this time must return usable results in seconds. In short, no Complicated, no Complex.

There’s a huge amount of thinking and experimentation that’s gone on before this point, of course – long before the flight took off, in fact much of it before the aircraft was even built. Someone sat down and worked out what to do if the engines don’t work: there’s a separate auxiliary generator, for example, and a ram-air turbine that’s driven by airflow alone. The fly-by-wire system can be set to self-levelling – a factor that could well be critical in any emergency-landing. There’s even a panic-button to close up key vents in case of a water-landing. But all of that is only useful if it’s actually used: which may not be the case.

[That vent-closer panic-button wasn’t used, as it happens: Sullenberger said afterwards that the water-landing had ripped holes in the hull far greater than those that the closer would have sealed, so it wouldn’t have made much difference.]

Another part of the experimentation is on a personal basis, developing personal skills. This is the role of the simulator: we know we can’t make the context ‘fail-safe’, so we practise emergency response in ‘safe-fail’ conditions – where no-one will get hurt when we get it wrong – so that we have certainty in ‘body-learning’ for when it does happen for real.

[Both pilot and co-pilot had spent many, many hours on simulators, practising responses for all kinds of emergencies – including an engines-out water-landing. This wasn’t just conceptual knowledge, abstract knowledge: this was about ‘body-learning’, appropriate action without need for conscious thought.]

In preparation for the emergency itself, there’s a huge focus on keeping things Simple. The aviation industry were the first to make intensive use of checklists – with good reason, because they help to make sure that nothing vital is missed.

[The co-pilot skimmed rapidly through the three-page checklist for engine-restart. Nothing worked, so they turned to other options. But note that the checklist was there, available, immediately to hand: someone had planned in advance for exactly this kind of incident.]

In an emergency of this kind, no-one can afford to panic. (The nature of panic is important in this: we’ll come back to it later.) The whole culture is geared to keep everything calm, focussed, making maximum use of what little thinking-time is available.

[Listen to the air-traffic audio-track: the tower-manager asks “which engines?”, in evident disbelief. “He lost thrust in both engines, he said”, says the controller. “Got it”, is the terse reply.]

And there’s a lot more than just Simple thinking going on – this is much more than just ‘sense / categorise / respond’. This is a very fast continuous scan, using every form of modal-logic – the logic of possibility and necessity, the logic of ‘None-of-the-above’.

[The transcript shows that within a mere six seconds after the report of bird-strike, traffic-control provides a return-course to the airport – but to runway 13, coming in from the far side. Just twenty seconds later, Sullenberger has already worked out that he won’t make it, and that a river-landing is possible – “we’re unable, we may end up in the Hudson”. A quick check for other nearby airports – runway 01 at Teterboro would require too steep a turn, Newark is too far. Fifty-two seconds after the bird-strike, Sullenberger reports “We’re gonna be in the Hudson”.]

At some point, it becomes essential to focus only on the task at hand: all communication with others shuts down until the immediate task is complete.

[After “We’re gonna be in the Hudson”, Sullenberger has no further contact with air-traffic control; his aircraft disappears from radar. The transcript shows the controller continuing with his job, handling incoming and outgoing traffic almost as if nothing unusual has happened. Sullenberger and his co-pilot ditch the aircraft safely in the river less than two minutes later.]

After the immediacy, the task may well continue, though in other forms.

[Sullenberger personally checks that everyone is clear of the aircraft, twice walking the length of the cabin in chest-deep icy water. He is the last to leave the aircraft.]

The emergency ends; the pressure eases off. The next part of the architecture cuts in at this point: it’s crucial to help the people involved to ‘change gears’, and to know that time-compression has ceased – otherwise there can be a collapse into a kind of counterpart to panic, namely post-traumatic stress.

[The controller reported later that for him the most traumatic point was after the incident had ended. During the incident itself, there simply wasn’t time to do anything but focus on the job at hand; but afterwards, all the possibilities of what might have gone wrong came back to haunt him. All ‘imaginary’, yet experienced as if ‘real’: this is a type of context in which the usual distinctions between ‘real’ and ‘imaginary’ make very little sense.]

In effect, we explicitly re-engage the Complicated and, especially, the Complex – ‘Ambiguous but Actionable’ – through tactics such as an After Action Review, built around four simple questions:

- “What was supposed to happen?” – requires us to know the expectations of the context

- “What actually happened?” – requires us to observe, to have observed, and to remember what was observed

- “What was the source of the difference?” – requires us to assess and evaluate

- “What can we learn from this, to do differently next time?” – re-embodies the results of the evaluation as personal ‘response-ability’
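If the review is to feed back into practice, it can help to capture those four questions as a structured record. The sketch below is a hypothetical illustration only: the `AfterActionReview` class, its field names and the `is_complete` check are assumptions for this example, not a standard AAR format. It simply treats a review as unfinished until every question has a non-blank answer and at least one lesson has been drawn.

```python
from dataclasses import dataclass


@dataclass
class AfterActionReview:
    """Hypothetical record of the four After Action Review questions."""
    supposed_to_happen: str    # expectations of the context
    actually_happened: str     # what was observed and remembered
    source_of_difference: str  # the assessment / evaluation
    lessons: list[str]         # what to do differently next time

    def is_complete(self) -> bool:
        """True only if every question has a non-blank answer and at
        least one non-blank lesson has been recorded."""
        answers = (
            self.supposed_to_happen,
            self.actually_happened,
            self.source_of_difference,
        )
        return all(a.strip() for a in answers) and any(
            lesson.strip() for lesson in self.lessons
        )
```

The design choice here is deliberate: the last question is the one that re-embodies the evaluation as ‘response-ability’, so a review with no lessons counts as incomplete even if the first three questions are answered.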

In SCAN terms, the time-available axis may change radically as events unfold, with time seeming to compress and expand according to the stress of the moment; yet the decision-making options, from true/false Simple to fully-modal None-of-the-above, remain much the same throughout. The real requirement here is for some means to support appropriate choice of decision-making method from moment to moment.

And that’s what we’ll look at in the next part of this series.

Enough for now: any comments so far, anyone?
