Four principles – 1: There are no rules

What rules do we need in enterprise-architecture? At the really big-picture scale?

This is the second in a series of posts on principles for a sane society:

- Four principles for a sane society: Introduction

- Four principles: #1: There are no rules – only guidelines

- Four principles: #2: There are no rights – only responsibilities

- Four principles: #3: Money doesn’t matter – values do

- Four principles: #4: Adaptability is everything – but don’t sacrifice the values

- Four principles for a sane society: Summary

A bit of background first. I’m in the process of sorting out the content and structure for a conference-presentation and book, on the somewhat off-the-wall theme of ‘How to think like an anarchist (and why you need to do so, as an enterprise-architect)’. The timescales are horribly tight, so I’m gonna need some help on this one – hence this series of posts, as a live exercise in ‘Working Out Loud’.

The aim is to explore some ideas and challenges at the Really Big Picture Enterprise-Architecture (RBPEA) scope – applying enterprise-architecture principles at the scale of an entire society, an entire nation, an entire world – and then see where it takes us as we bring it back down again to a more everyday enterprise-architecture.

As I described in the previous post in this series, for some decades now I’ve been looking for the most fundamental principles upon which long-term viability can depend: principles that will allow the design or self-design of an organism, an organisation or an entire society not merely to survive, but thrive, under conditions of often highly-variable variety-weather – the changes in change itself.

What I keep coming back to is a set of just four closely-interrelated principles: there are no rules, there are no rights, money doesn’t matter, and adaptability is everything. So here’s a bit more detail about the first of those principles for a sane society: the idea – or understanding – that in the real, practical, everyday world, there are no absolute rules that we can always rely on to apply everywhere and everywhen. Once we accept the reality of this, it has huge implications for our enterprise-architectures…

Principle #1: There are no rules – only guidelines

(Or, if you really need some kind of rules, then the only absolute rule is that there are no absolute rules.)

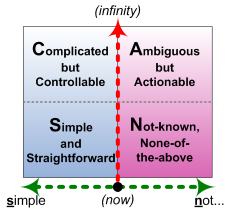

There are no rules: everything’s contextual. What we think of as ‘rules’ are the codified – ossified? – outcome of experiences or intentions that have come out much the same way for enough times for us to think it’ll not only usually come out the same way (a guideline) but always come out that way (a so-called ‘law’). We can illustrate this quite simply, with the SCAN framework:

(The horizontal-axis is the part that’s important here – we’ll ignore the vertical-axis for the moment.)

The horizontal axis describes a spectrum of modality or possibility, from absolute certainty and repeatability all the way over on the left, to absolute randomness or uniqueness over on the far-right. Some way along that spectrum – shown here as halfway, but in reality much further over to the left than most people would prefer – is what we might call the Inverse Einstein boundary: to the left (the blue zone), doing the same thing will lead to the same results, whilst over on the right (the red zone) doing the same thing may or will lead to different results, or we may or will have to do something different to achieve the same results.

To the left, order; to the right, unorder. Rules only ‘make sense’ in an ordered world – but there’s much more to the world than just a made-up structure of order. Rules assume certainty; guidelines don’t make such assumptions, but because they are always somewhat uncertain, they need to be linked to the respective measure of uncertainty. Which can sometimes be tricky – especially where people really really want to believe that things are certain, even if they’re not.

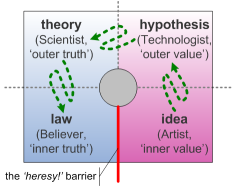

Another useful SCAN-related perspective on this is the classic history-of-science view of the development from idea to hypothesis to theory to law:

The catch is that it’s assumed to be a one-way process – a bit of back-and-forth along the way, to be sure, but once something becomes a ‘scientific law’, it always stays that way. Yet in reality, most so-called ‘scientific law’ only applies exactly under special circumstances that rarely exist in real-world practice. The same is true of so-called ‘constants’: most are only constant in, again, special circumstances. When we bring them all together in a real-world context, suddenly things can often get a lot more complex – and a lot more uncertain.

Take a simple scientific example: the law of gravity. There are definite constants there: we use them to design spacecraft, to plan interplanetary orbits. But once we start mixing everything together in a real mission-context, things can suddenly get a lot messier as all those different laws and constants interact. Just take a look at what the JPL Curiosity team had to do during their ‘seven minutes of terror’ to land their rover safely on Mars: and even then there was no certain guarantee that it would work. There are no rules – there are only guidelines, mixed in with awareness of constantly-varying degrees of uncertainty.

The same applies in the social context. Laws often only make sense in specific contexts: sometimes they may still make sense after centuries, but others may work well for only a few special-cases that barely survive a mere matter of months. The rules of religions are literally made-up myths, stories to fit specific contexts that may well no longer apply in this context, right here, right now. And the social-rules we call ‘morals’ are, in reality, best described as a lazy-person’s ethics, a lazy way to use made-up rules to avoid facing the real complexities of the real world – and, all too often, attempt to export the responsibilities for those complexities onto everyone else.

As every doctor on late-night duty on the cancer-ward will know all too well, all those certain-seeming morals and rules and laws around a social notion such as “thou shalt not kill” can be a lot more of a hindrance than a help when trying to work out how best to help a patient in the agonising last stages of terminal illness… Real guidelines do help in this – especially when coupled with the honesty to respect the harsh trade-offs that so often have to be made in real-time, in the moment of action. But someone else’s morals, laws, rules? Not so much…

And when we look at real-world practice, the only real law is Murphy’s: if something can go wrong, it probably will. (The word ‘probably’ is often omitted, but its inherent uncertainty is the whole reason why Murphy’s is such a true law.)

Yet Murphy’s is so much of a law that it also has to apply to itself: hence if Murphy’s Law can go wrong, it probably will.

In other words, most of the time, Murphy’s cancels itself out. Which is why we get the illusion that things are predictable, that they follow rules.

Which in reality they don’t: Murphy’s law only cancels itself out most of the time, not all – and we never quite know when it won’t cancel itself out.

Hence, in real-world practice, the only absolute certainty is that there is no absolute certainty.

Sure, the myth of rules is useful – no doubt about that at all. Guidelines are hard at times, and uncertainty certainly is hard: that’s why we teach kids the guidelines they need as if they’re ‘the rules’, about road-safety, about where to play and not-play, about what to eat and not-eat, and so on.

But it’s really, really important to remember that although we might use these guidelines as if they’re ‘the rules’, they’re not necessarily the rules that the real world plays by. ‘As if’ is not the same as ‘is’… – and if we ever forget that, in the real world, we’re likely to find ourselves in real trouble…

There are no rules – only guidelines. That’s the core principle – core understanding – upon which everything we do will need to be built.

Implications for enterprise-architecture

I’ll often describe myself as a ‘business-anarchist’, as a somewhat-joking contrast to the more usual ‘business-analyst’. Yet it’s much more than just a joke: business-analysts work with ‘the rules’, tweaking them for best-fit to the current circumstances, whilst the business-anarchist uses a rather different and much broader skillset to take over when the rules themselves no longer make sense. And it’s from the perspective of the disciplined business-anarchist – rather than the more certain worldview of the business-analyst – that we most need to assert that “there are no rules – only guidelines”.

It’s crucial to understand here, though, that “there are no rules” doesn’t mean an absence of all constraints, a random free-for-all. What it does mean is the absence of pseudo-certainty, an absence of arbitrary constraints. That’s a rather important difference…

The point is that rules describe what ‘should’ happen inside a metaphoric box that’s bounded by often-undeclared assumptions. Yet by definition, a logic is unable to determine the limits of its own premisses, or to reassess or redefine its premisses: all it can do is follow the rules of that logic. If the context happens to stray outside of those bounds, the rules are not only likely to give guidance that’s inappropriate for the actual context, but also have no means to identify that the context is outside of the box.

In short, rules can be useful, but result in systems that are inherently fragile or brittle, with little to no resilience or self-adaptation to variation or change.

Hence what we need for most real-world contexts are not rules, but guidelines that explicitly acknowledge the uncertainty, and that can challenge and dynamically reassess their own validity and, where necessary, suggest alternatives, all preferably in real-time.

Unfortunately, most of our existing systems are built around rigid true/false rules and their undeclared assumptions… – a reality that provides many interesting challenges for enterprise-architects…

Some practical suggestions to explore:

— system-design: search out all instances of rules that are deemed to apply in a given context:

- what are the assumptions behind those rules?

- what will (or does) happen if the context moves outside of the bounds of those assumptions?

- by what means will the system identify that the context has moved outside of those bounds?

- what impact will there be on the viability of the system if the context moves outside of its expected bounds?

- what mechanisms will be available to the system to respond appropriately to and, where necessary, recover from a context that moves outside of its expected bounds?

- what guidelines and mechanisms are needed by, and available to, the system in order to adapt itself to localised or broader changes in which the context moves and stays partially or wholly outside of the system’s existing expected bounds?
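One way to make those questions concrete is to store each rule together with its declared assumptions, so that the system can at least tell when the context has moved outside of them. What follows is only a minimal sketch: the names `Assumption`, `Guideline` and the stock-reorder example are hypothetical illustrations, not part of any existing framework.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Context = Dict[str, float]


@dataclass
class Assumption:
    """One declared assumption behind a rule, with a test for whether it holds."""
    description: str
    holds: Callable[[Context], bool]


@dataclass
class Guideline:
    """A rule packaged with the assumptions that bound its validity."""
    name: str
    assumptions: List[Assumption]
    action: Callable[[Context], str]

    def violated(self, context: Context) -> List[str]:
        """Return the description of every assumption that the context breaks."""
        return [a.description for a in self.assumptions if not a.holds(context)]

    def apply(self, context: Context) -> str:
        broken = self.violated(context)
        if broken:
            # Out of bounds: say so, rather than silently give bad guidance.
            return "OUT-OF-BOUNDS, assumption broken: " + "; ".join(broken)
        return self.action(context)


# Hypothetical example: a reorder rule that quietly assumes stable demand.
reorder = Guideline(
    name="reorder-stock",
    assumptions=[
        Assumption("weekly demand is between 50 and 150 units",
                   lambda c: 50 <= c["weekly_demand"] <= 150),
        Assumption("supplier lead-time is at most 14 days",
                   lambda c: c["lead_time_days"] <= 14),
    ],
    action=lambda c: "reorder" if c["stock"] < 2 * c["weekly_demand"] else "hold",
)

print(reorder.apply({"weekly_demand": 100, "lead_time_days": 7, "stock": 120}))
print(reorder.apply({"weekly_demand": 400, "lead_time_days": 7, "stock": 120}))
```

The sketch only covers the first three questions (what the assumptions are, and how the system notices that they no longer hold). What to do next, and how to recover, still has to be designed for each context.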

Most of this applies especially to automation – whether IT-based, purely mechanical, or some combination of the two. Most current IT is only capable of following strict true/false logic; most physical machines are only capable of following strict physical ‘laws’; both assume absolute-certainty, and hence both must rely on some form of ‘external help’ to realign their functional-logic to actual reality, or to escalate decision-making when the context moves outside of their predefined scope.

In practice, that ‘external help’ will usually need to be human – a real person, working in real-time. Most automated mission-critical systems will need a human-in-the-loop somewhere in the system, to handle exceptions and to override the automation where necessary. Where an automated system must be entirely self-contained – such as in a remote sensor or space-probe – its operation must include active guidelines such as pattern-matching or goal-seeking and the like that operate ‘above’ the base-level logic. Design-implications include:

- each overall system needs the ability to identify when the context moves ‘out-of-bounds’, and to accept and acknowledge that the context has (necessarily) moved beyond the ability of a closed-logic to adapt to the context, and therefore must ‘escalate’ the decisions (recursively, if necessary) to a sub-system that has the competence and authority to handle such ‘out-of-bounds’ cases

- each such escalation or transfer of decision-making authority may (and often will) change some aspects of the effective service-level agreement – rule-based decision-making is usually a lot faster than free-form guideline-based decision-making, but also a lot more brittle

- the need for escalation means that the qualitative-parameters (time-of-response, accuracy-of-response, etc) of the effective service-level agreement can be context-dependent, and in some cases may be highly variable – the overall system-design needs to be aware of those variances, the potential impacts of those variances, and the inherent uncertainties around those variances

- some form of manual-override will usually be necessary somewhere in the system, to cope with any out-of-bounds ‘special-cases’
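The design-implications above can be sketched as a simple escalation chain. This is an illustration under stated assumptions, not a definitive implementation: the `Handler`, `Decision` and `escalate` names, the refund scenario and the response-time figures are all invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Handler:
    """One level of decision-making, with its own competence and typical response-time."""
    name: str
    typical_seconds: float              # assumed figure, used for the SLA estimate
    in_bounds: Callable[[dict], bool]   # can this level handle the case at all?
    decide: Callable[[dict], str]


@dataclass
class Decision:
    outcome: str
    decided_by: str
    path: List[str]           # every level that was tried, in order
    expected_seconds: float   # cumulative typical time: the effective SLA for this case


def escalate(case: dict, chain: List[Handler]) -> Decision:
    """Try each level in turn; pass the case upward when it is out of bounds."""
    path: List[str] = []
    elapsed = 0.0
    for handler in chain:
        path.append(handler.name)
        elapsed += handler.typical_seconds
        if handler.in_bounds(case):
            return Decision(handler.decide(case), handler.name, path, elapsed)
    # Nothing in the chain could cope: flag it instead of guessing.
    return Decision("UNRESOLVED", "nobody", path, elapsed)


# Hypothetical refund process: fast rules first, people last.
chain = [
    Handler("rules-engine", 1.0,
            lambda c: c["amount"] <= 100 and c["has_receipt"],
            lambda c: "approve"),
    Handler("supervisor", 600.0,
            lambda c: c["amount"] <= 1000,
            lambda c: "approve" if c["has_receipt"] else "request-evidence"),
    Handler("manual-override", 3600.0,
            lambda c: True,   # a person can always take the case
            lambda c: "review-in-person"),
]

routine = escalate({"amount": 40, "has_receipt": True}, chain)
unusual = escalate({"amount": 5000, "has_receipt": False}, chain)
print(routine.decided_by, routine.expected_seconds)
print(unusual.decided_by, unusual.path, unusual.expected_seconds)
```

Note how the expected response-time grows with each escalation: the rule-based level is fast but narrow, whilst the manual-override is slow but can take anything. That is the context-dependent service-level the points above describe.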

A real-world example of the latter was an automated system for management of child-protection casework, which broke down – with extremely embarrassing results for the agency – because its requirement for date-of-birth as a key-field for the record for the child couldn’t cope with a special case of protection required for a child who was not yet born and therefore had no date-of-birth…
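I have no details of how that system was actually built, so the following is purely a hypothetical sketch of one way to avoid that kind of trap: key the record on a surrogate identifier, and treat date-of-birth as something that may legitimately be absent. The `ChildRecord` class and all of its fields are assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ChildRecord:
    """Case record keyed on a surrogate id, not on any real-world attribute."""
    name: Optional[str] = None
    date_of_birth: Optional[date] = None      # may be unknown, or not yet born
    expected_due_date: Optional[date] = None
    record_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def is_unborn(self) -> bool:
        return self.date_of_birth is None and self.expected_due_date is not None

    def age_in_years(self, today: date) -> Optional[int]:
        """Return None rather than fail when there is no date of birth."""
        if self.date_of_birth is None:
            return None
        dob = self.date_of_birth
        had_birthday = (today.month, today.day) >= (dob.month, dob.day)
        return today.year - dob.year - (0 if had_birthday else 1)


# The 'impossible' special-case becomes just another valid record.
unborn = ChildRecord(expected_due_date=date(2030, 3, 1))
print(unborn.is_unborn(), unborn.age_in_years(date(2029, 12, 1)))
```

Any code that uses `age_in_years` then has to deal explicitly with the `None` case, which is the point: the uncertainty is visible in the design rather than hidden behind a mandatory field.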

— organisational design: Almost by definition, most organisations are built around rules. However, the real-world moves onward all the time, in its own way, which means that rules can often become irrelevant or out-of-date – hence likewise for any organisation that insists on running everything ‘according to the rule-book’.

The primary purpose of rules in organisations is to speed up decision-making and to clarify roles and responsibilities. Since organisations are also systems in their own right, all of the notes above about the limitations of rules in systems-design also apply here. The natural decay over time of relevance and appropriateness of rules is also a key source of organisational entropy, which, if not addressed, will eventually cause the decay and death of the organisation itself. In addition to the system-design implications above, implications for organisation-design include:

- every organisational rule needs to be subject to regular review, to reassess its relevance or appropriateness to the current organisational context

- most rules should be assigned a metaphoric ‘use-by’ date that should trigger automatic review

- explicit mechanisms to manage the practical implications and impacts of changes of rules need to be in place, for each type and scope of rule

- to guide response to broad-scale change, organisations will usually require some form of stable ‘vision’ or ‘promise’ that describes the permanent anchor or driver for a shared-enterprise broader in scope than the organisation itself
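The first two points above can be sketched as a small rule-register that flags rules whose review is due. Again this is only an illustration: the `OrgRule` structure, its `rationale` field and the example intervals are assumptions of mine, not a description of any particular governance tool.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class OrgRule:
    name: str
    rationale: str               # why the rule exists, recorded alongside the rule
    last_reviewed: date
    review_interval_days: int    # the metaphoric 'use-by' period

    @property
    def review_due(self) -> date:
        return self.last_reviewed + timedelta(days=self.review_interval_days)


def due_for_review(rules: List[OrgRule], today: date) -> List[OrgRule]:
    """Return the rules whose review date has passed, most overdue first."""
    overdue = [r for r in rules if r.review_due <= today]
    return sorted(overdue, key=lambda r: r.review_due)


# Hypothetical register entries.
register = [
    OrgRule("A", "limit purchase approvals to budget-holders", date(2023, 1, 1), 365),
    OrgRule("B", "two-person sign-off on releases", date(2024, 3, 1), 180),
    OrgRule("C", "paper copies of all contracts", date(2022, 6, 1), 365),
]

for rule in due_for_review(register, today=date(2024, 6, 1)):
    print(rule.name, rule.review_due, "-", rule.rationale)
```

Passing the review date triggers a review, not an automatic deletion: whether the rule still fits the context remains a human judgement.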

The ISO-9000 quality-system standards provide a useful worked-example of layered structure to manage rules in an organisational context. At the point-of-action, work-instructions provide explicit step-by-step rules, and guidance on how to address expected variance. When the work-instruction becomes insufficient, we turn to procedures that, in effect, describe how to adapt or redefine the work-instruction to fit the context. When procedures prove inadequate to cope with the actual variance, we turn to current policy for that overall scope; and if and when a context occurs where policy will not fit the case, we turn to the vision, as the ultimate anchor for the overall organisational-system.

There will always be variance in any real-world context; there will always be something that doesn’t fit the current rules and expectations. As the old joke goes, “As soon as we make something idiot-proof, along comes a better idiot!” Guidelines take over whenever the rules fail: yet since the rules will always fail somewhere, the wisest design principle we can use is to assert that there are no rules – only guidelines. And then, as designers, work with – not against – the implications that fall out from that fact.

[Update (later the same day)]

A bunch of really obvious points that I forgot to include on the first pass through this post… (my apologies):

- almost every instance of innovation demands the rejection of one or more existing ‘rules’ – for example, “it is impossible for a heavier-than-air craft to fly”, “it is impossible for any person to run faster than the four-minute mile”, “it is not possible for typesetting and layout to be done by anyone other than a trade-apprenticed craftsman”

- almost every change – whether in business or elsewhere – implies either a blurring or repositioning or abandonment of some existing ‘rule’

- every organisational restructure is, in effect, a suite of changes to the ‘rules’ governing interpersonal-relationships across the organisation

- every change of legislation is or implies a literal rewriting of ‘the rules’

- every amendment or redesign of a business-model implies a rewrite – often on many different levels – of the ‘rules’ that specify how the business operates

Any other equally-obvious ‘there are no rules’ items that I’ve missed?

Hi Tom – Like where you are going with this series, I do think these ideas extend beyond the conventional scope of EA.

Re: rules, another way to say this would be to separate model or plan from its implementation. Planning can be helpful, we just don’t want it to be gratuitous.

The reasons systems of all kinds break is because they encode explicit rules with a fixed context baked-in, when we really want to have plans as guidelines that can be ‘configured’ by context, increasing relevance, but more importantly, that can be re-planned to support unanticipated variance for an implementation or change to the master plan. Plans are then prototypes that evolve, though adaptation may be constrained by other rules – permissions, but in line with your post even those rules should be subject to negotiation.

Ideally information provides context for flexibly exploiting capabilities for a purpose.

Best,

Dave

Hi Dave – many thanks, and very strongly agree with you re the dangers of ‘plans’ as “explicit rules with a fixed context baked-in”.

(Planning is very different, and a very necessary discipline to learn: the crucial point is that although in practice von Moltke’s dictum always applies – that “no plan survives first contact with the enemy” or, more generically, first contact with the real-world context – that does not mean that we don’t need the discipline of planning! What we need, in practice, is the ability to reformulate plans in accordance with the overall intent – which demands both the discipline of planning and real clarity on intent.)

Do all “rules” have a time period? The rule can change from quarter to quarter, but only after more information is adopted to nullify the previous thought? An enterprise is always viewed in terms of a specific time period. More information is learned, more technologies are introduced, and that changes what was thought to be a “rule” … even if it was a scientific law. After all, the world was flat for many only for a certain time period. That ours is the only galaxy was also believed, until new information (because of new technology) was uncovered.

Good question, Pat, though I don’t think it’s quite like that: it’s more that we need to recognise that rules do need explicit review after various periods of time, rather than that the rules themselves would or should have an explicit ‘use-by’ date. (I was perhaps a bit misleading in the post about that – my apologies…)

The key point, as you say, is that “an enterprise is always viewed in terms of a specific time period” – and things do change over time. Hence rules that were exactly right for the context and knowledge that applied at the time they were formulated can, over time, drift to being less-useful, and then less-than-useful, and eventually just-plain-wrong – which is not a critique of those who formulated those rules, but just that the context for which those rules were a good fit no longer applies in the current enterprise. The world has moved on, but the rules haven’t: simple as that, really. And that’s the real reason why we need to review rules. Which first needs an acceptance that ‘the rules’ may not always be ‘the rules’. Hence the perhaps overly-simplistic but at least pithy reminder that, ultimately, ‘there are no rules’.

I think we are saying the same thing … though you are a better communicator. Agree that all rules need to be revisited as to their usefulness. When they are out of use, we don’t want to forget them (because of historical analysis), we want to expire them. We really don’t know many of the expiration periods until the cultural/technical/whatever change occurs (or begins to occur). Therefore, it is impossible to place a “use-by” date.

Hi Tom. I find a lot to like in what you’re writing here in general, as well as this current thread. Just a few thoughts inspired by your posts so far.

An example of your “rules make brittle systems” is the typical case of an organization that uses some workflow manager or other to “automate” some work, typically using an idealized picture of what will happen, rather than basing the automation on any process that has passed the test of real-world use for some time.

How do you help people come to agreement that context has changed and therefore a new response is necessary? Is this part of what EA can provide?

Possibly worth exploring: people codify things into rules (or stereotypes, in another context) partly out of a natural drive toward efficiency – reduce the number of things one must consciously think about so we can focus on the other stuff

Re: human in the loop – this solution is going to be a tough sell, as many organizations don’t trust their people.

re: organizational rules – yes, yes, and yes. I advocated putting an expiration date on all architecture standards produced in our company – hasn’t been adopted yet, but I’m still hanging on to the idea.

Another component of this entropy – the organization forgets why the rule was originally put in place

Hi Jeff – apologies that I missed replying to this earlier…

On automation of work: yes, exactly. The danger then is that things that don’t fit the automated workflow first get shoved into the ‘too-hard basket’, and then eventually ignored altogether. Which means that they accumulate and quietly fester in the ‘too-hard basket’ until it overflows in a bubbling, frothing mass that smothers everything, bringing the respective process to a grinding halt. ‘Rules-engines’ are much beloved by BPM advocates, but they carry hidden dangers that far too few of those advocates are willing to address at all. Sigh…

On “How do you help people come to agreement that context has changed” – the short answer is “With difficulty, usually”. 🙁 Part of this is the ‘boiled-frog’ syndrome: most of the changes we deal with are quite gradual, and hence quite difficult to see as change until someone finally notices that it doesn’t work at all. Under hierarchies, though, obeisance to ‘the rules’ is a part of the persistence-architecture for the hierarchy itself – obeisance is to the role, rather than the person as such – so any questioning of rules also implies questioning of the validity of the hierarchy, which is definitely in the ‘Not Allowed!’ box. A key role for EA is to help create a safe-space in which rules can be questioned – but somehow without upsetting the hierarchy too much to be personally-dangerous. It can be a tricky balance at times… 🙁

On “natural drive towards efficiency” – yes, definitely agree there. As a counterpoint, perhaps note Ivo Velitchkov’s concept of ‘Requisite Inefficiency’ – see http://www.strategicstructures.com/?p=597

Thanks again, anyway!

Thoughts prompted by this very interesting and helpful exposition

Where do patterns fit into this?

The applicability and utility of a rule is in part determined by the stability of the environment in which it operates. Given that the rate of change of our environment is accelerating, the life of rules is becoming shorter, and hence we establish “intelligence mechanisms” to alert us to changes in the environment that might warrant changes to the rules or guidelines or principles that we establish.

This leads to the concept of adaptability and agility – and perhaps, then into the exploration of complex adaptive systems. What does that body of thinking have to tell us?

@Peter: “Where do patterns fit into this?”

I view patterns as a category of structured-guidelines: a recommendation that at first glance looks a bit like a rule, but explicitly acknowledges both incompleteness and ambiguity. There’s a bit of ‘fill in the blanks’, to adapt for contextual difference, but also usually a much stronger acceptance of uncertainty, that ‘rules can change’, both in terms of immediate context and over time.

In essence, a pattern embeds a spectrum from rule-like to full-uncertainty, and identifies which parts of the response to the context should match up to which parts of that spectrum. Where some part of the response should be rule-like, it should set out the rules; where more fluid, it should provide guidelines as to how to interpret and make sense of the uncertainty, and to develop appropriate responses. The classic MoSCoW set for requirements-definition is a good example of such a spectrum: Must-have, Should-have, Could-have, Won’t-have.

On “‘intelligence mechanisms’ to alert us to changes in the environment” etc – very good point, and yes, very necessary. Except that I’ve not yet seen any BPM ‘rules-engine’ that actually includes any such mechanism… which is precisely why the darn things are so brittle. (‘Fit and forget’ they are not…!)

On “complex adaptive systems … what does that body of thinking have to tell us?”, my short answer is that I don’t know – or, more precisely, that, unlike rather too many others, I don’t pretend to know. To be honest, I don’t think anyone knows: from my reading of that ‘body of thinking’ so far, I’ve seen a heck of a lot of hot-air, a heck of a lot of wishful-thinking, and an awful lot of variously-muddled variously-useful contextual-interpretations and intuitive-responses dressed up in a spurious cloak of ‘science’. To me the most useful thinking in that space often comes from the so-called ‘spiritual’ domains – writers such as Lao Tse, for example. In fact a simple rule-of-thumb, for me, is that the more something in the space claims to be based on ‘complexity science’ or suchlike, the more likely it is to be just a cynical repackaging of smoke-and-mirrors fluff to give worried executives the delusion of certainty amidst the inherently-uncertain. Bah…

There are two distinct challenges here, relating to the role of statistics, that in my own work I summarise as the horizontal and vertical dimensions in the SCAN frame.

The first is the shift from a certainty-based ‘always-true / always not-true’ (‘is-a’, ‘is-not-a’, or ‘has-a’, ‘does-not-have-a’, as in classic data-models and rules-engines) to a more statistical basis of ‘truth’ (‘sometimes-is-a’, ‘may-or-may-not-have-a’, such as we need in most reference-frameworks). That’s the horizontal-dimension in SCAN. The huge challenge there is to get people to understand that whilst we can usually be certain about some aspects of the context – for example, we can often be quite certain about probability, or the impact of eventuation – yet high-probability does not mean ‘certain to happen’, and low-probability does not mean ‘will never happen’… Concepts from complex-adaptive-systems can often be useful: the catch is that the moment the word ‘science’ enters the picture, it tends to reinforce rather than disperse the delusions of spurious-certainty – which really doesn’t help.
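A small worked example may help with that last point. It is only a sketch, and it assumes independent trials with a fixed per-trial probability, which real contexts rarely provide; the function names are mine.

```python
def chance_of_at_least_once(p: float, trials: int) -> float:
    """Probability that an event with per-trial probability p happens at least once."""
    return 1.0 - (1.0 - p) ** trials


def chance_of_every_time(p: float, trials: int) -> float:
    """Probability that an event with per-trial probability p happens on every trial."""
    return p ** trials


# A 'one in a thousand' failure, given a thousand opportunities to occur:
print(round(chance_of_at_least_once(0.001, 1000), 3))

# A '99% reliable' step, repeated a hundred times in a row:
print(round(chance_of_every_time(0.99, 100), 3))
```

Under those assumptions, the ‘one in a thousand’ event is more likely than not to turn up somewhere in a thousand trials, and the ‘99% reliable’ step is more likely than not to fail at least once in a hundred repetitions.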

The other challenge – represented in part by the vertical dimension in SCAN – relates to a shift from a statistical focus to a more quantum-like one: something we could almost describe as ‘chaotic adaptive systems’. The point here is that, somewhat paradoxically, adaptive-systems are often less sensitive to larger-scale shifts than they are to individual ones: changes that are visible at the statistical level can suddenly be swamped by changes that echo outward at very high speed after initiation at just one node in the system. For example, Margaret Mead’s comment that social change always starts from “a small group of committed citizens”; a potentially-pandemic antibiotic-resistant strain of a bacterium starts with a single nominally-‘random’ mutation. The statistical tools of conventional complex-adaptive-systems are almost no help here: by the time they’re able to notice the change, it’s often already too late to do anything about it. We can, however, use those tools to build a better picture of the overall system and its criticality or potential-receptiveness to ‘chaotic’ change – which is not the same, though, as using those tools to detect the change-point itself, as some people still purport.

The other fundamental theme within complex adaptive systems is the interdependencies across the respective system. For EA, that’s what Enterprise Canvas is designed to address, as a kind of checklist for service-viability within its respective context. I certainly don’t claim that it will model all aspects of complex-systems, but it does help to surface interdependencies and potential wicked-problems across those interdependencies. More on that some other time, if you want?

Hope this makes some degree of sense, anyway. 🙂

Intelligence and BPM – a slight digression, I realise.

But your comments underline my inherent suspicion of the claimed value and benefit of technology based BPM!

Yep! – technology-centric or technology-only ‘solutions’ tend not to be very good at dealing with the real vagaries of the real-world… Dunno why that fact is so hard to get over to some people: I can only guess there’s an overdose of wishful-thinking of the ‘deus-ex-machina’ kind there, that’s all… Oh well. 🙁