SCANning the toaster

How about a nice, simple, first-hand everyday example of SCAN in action? 🙂

Here’s the context: toasting my mid-morning snack – that old English delicacy, a hot-cross bun.

Except that the toaster didn’t work.

So here’s the toaster, with the two halves of my hot-cross bun still sitting in the slots:

And here’s what happened (or didn’t)…

- I put the bun-halves into the toaster, push down the lever, watch the timer-lights (the five LEDs on the right-side of the control-panel) count down until the buns pop up.

- I pick the buns out of the slots, drop them on the plate, get ready to spread butter on them, and realise that they’re not toasted. Not even hot. Hmm… must have missed something?

- I check the plug: yep, that’s in. In fact, the timer-setting (the red ‘5’ in the control-panel display) has been on the whole time, so it must have been plugged-in anyway.

- I put the buns back in the slots; push down the lever, checking carefully that it is all the way down; check that the timer-lights are on, and counting down correctly, until the buns pop up again at the right time.

- Except they’re still cold. I park the buns on the plate, and stop to think. What’s gone wrong?

- Without putting the buns back in, I push the lever down again. The timer-lights come on, doing the timing correctly, but the heater-strips inside the toaster don’t glow. The toaster’s working overall, but the part that actually does the toasting isn’t.

- Hypothesis: maybe there’s been a mains-spike, and its microcontroller needs a reboot?

- Pull the mains-plug out to force it to do a reboot: yep, the control-lights come on okay.

- Test it again, pushing down the lever. Nope, no joy: heating-strips still aren’t glowing, so no heat.

- Hypothesis: there’s a large breadcrumb shorting it somewhere.

- Visual inspection: nope, can’t see anything.

- Rattle it about a bit anyway to shake loose any crumbs, then empty the crumb-tray. (Yes, I did pull out the mains-plug first – don’t panic!)

- Second visual inspection: nope – still can’t see anything.

- Test it again, pushing down the lever.

- Still no joy: control-panel says it’s working, heater-strips show it isn’t.

- Get hold of a brighter light and a wooden skewer, to do a more careful inspection, with a bit of additional prodding. Nope, can’t find anything at all.

- Quick test again, more in hope than anything else. Still isn’t working.

- Hypothesis: there’s a wire come loose somewhere?

- No, stop – just stop. I’m not equipped for this – I don’t have the right test-gear or anything.

- And I’m running out of time, too. Forget it.

- Okay, it’s going to be cold cross bun, then. An unwanted culinary experience…

- Wait a moment, what about the little grill-oven, won’t that work as a toaster as well? Even if only one side at a time?

- Check its controls: hey, it will do both sides at once!

- How long will it need? Three minutes for a first try, perhaps?

- Put the bun-halves on the slide-out tray, close the lid, set the timer, watch and wait until the timer rings.

- Properly toasted? No, not quite…

- Okay, give it another couple of minutes, I’d guess.

- Set the timer, start it going, watch and wait again.

- Done?

- Yes, done. Hooray!

All terribly trivial, I know. But even at this level, it’s a really good illustration of how much we jump around between sensemaking, decision-making and action. In other words, right into SCAN territory.

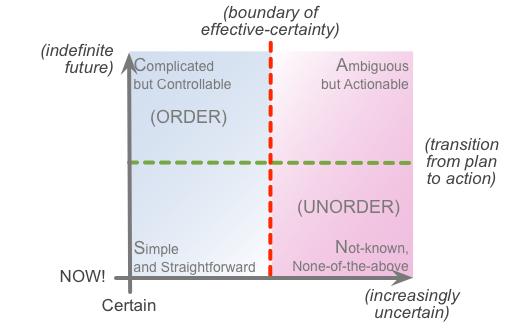

So let’s remap the whole thing onto SCAN, and see what it shows us about how that jumping-around actually works. First, here’s the SCAN frame:

And here’s the remap, step by step:

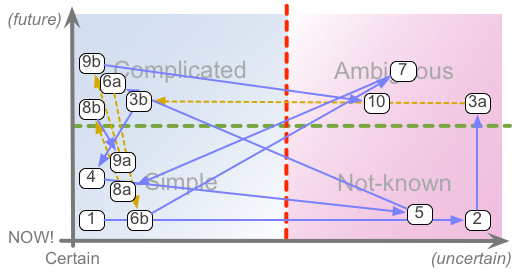

- Standard procedure for toasting. [Simple]

- Not toasted. [Not-known]

- Experiment, then analyse. [Ambiguous to Complicated]

- Repeat standard procedure for toasting, with explicit confirmation of each step. [Simple, slightly back from real-time]

- Still not working. [Not-known]

- Repeat the standard procedure [Simple] followed by analysis [Complicated]

- Hypothesis: reboot required? [Ambiguous]

- Do standard procedure for reboot [Simple] and analysis [Complicated]

- Repeat the standard procedure for toasting [Simple] and analysis [Complicated]

- Hypothesis: breadcrumb short-circuit? [Ambiguous]

- Standard procedure for setup of visual inspection [Simple] followed by analysis [Complicated]

- Standard procedure to clear crumbs [Simple]

- Repeat procedure for visual inspection [Simple] and analysis [Complicated]

- Repeat standard procedure for toasting [Simple]

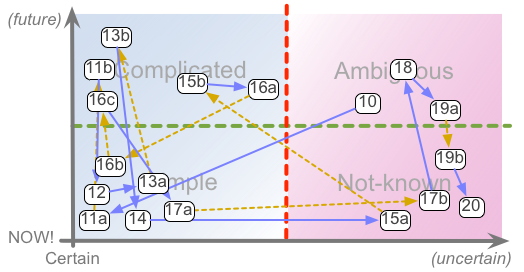

- Still no joy [Not-known] so analyse results [Complicated]

- Extend test-algorithm [Complicated], apply modified procedure [Simple] and analyse results [Complicated]

- Repeat standard procedure for toasting [Simple] without success [Not-known]

- Hypothesis: loose wire? [Ambiguous]

- Self-doubt [Ambiguous to Not-known]

- Abandon the investigation [Not-known]

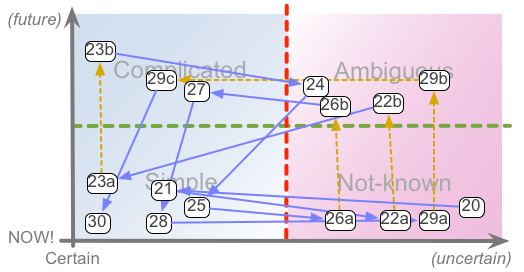

- Standard procedure for ‘Disappointment’… [Simple]

- New idea [Not-known] for new option [Ambiguous]

- Standard procedure to list equipment-capabilities [Simple] and compare with requirements [Complicated]

- Guesstimate for timing [Ambiguous]

- Modified standard procedure for toasting, adapted for grill-oven [Simple but with some uncertainty]

- Review outcome [Not-known to Ambiguous]

- Analyse results of review [Complicated, with some uncertainty]

- Repeat modified standard procedure for toasting, with amendments [Simple, with some uncertainty]

- Done? [Not-known to Ambiguous to Complicated]

- Success and completion [Simple]

Or, in visual form – steps 1-10:

Steps 10-20:

And steps 20-30:

Did I really do all of that, just to get a toasted tea-cake? Yikes… 🙂
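For anyone who'd rather count than squint at diagrams, the remap can also be written down as plain data. What follows is only a rough sketch: the domain labels are transcribed from the thirty-step list above, with "X to Y" recorded as consecutive visits, and the names `REMAP`, `flatten`, `visit_counts` and `count_switches` are my own inventions for this illustration, not part of SCAN itself.

```python
from collections import Counter

# Short aliases for the four SCAN domains.
S, C, A, N = "Simple", "Complicated", "Ambiguous", "Not-known"

# One entry per step of the remap, in order; each entry lists the
# domains visited within that step (transcribed from the list above).
REMAP = [
    [S], [N], [A, C], [S], [N],            # steps 1-5
    [S, C], [A], [S, C], [S, C], [A],      # steps 6-10
    [S, C], [S], [S, C], [S], [N, C],      # steps 11-15
    [C, S, C], [S, N], [A], [A, N], [N],   # steps 16-20
    [S], [N, A], [S, C], [A], [S],         # steps 21-25
    [N, A], [C], [S], [N, A, C], [S],      # steps 26-30
]


def flatten(remap):
    """Return every domain visit as one flat sequence, in order."""
    return [domain for step in remap for domain in step]


def visit_counts(remap):
    """Count how many times each domain is visited."""
    return Counter(flatten(remap))


def count_switches(remap):
    """Count adjacent visits where the domain changes."""
    seq = flatten(remap)
    return sum(1 for prev, cur in zip(seq, seq[1:]) if prev != cur)


if __name__ == "__main__":
    for domain, count in visit_counts(REMAP).most_common():
        print(f"{domain}: {count}")
    print("switches:", count_switches(REMAP))
```

Run as-is, it tallies how often each domain is visited and how many adjacent visits involve a change of domain, which gives a crude measure of just how much jumping-around went on.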

Practical application

Again, it’s a trivial example – and intentionally so, of course. The real value comes if we take what we’ve just seen above – the looping-around and constant jumping from one mode to another, much as in an undisciplined version of John Boyd’s OODA – and instead turn the whole thing the other way round: provide support for disciplined sensemaking, decision-making and action.

(Some of this gets kinda technical again – sorry… But if you interpret and compare it to the real-world example, it probably makes a bit more sense? I hope?)

First, if we want known, certain, repeatable results, at high speed, we need to be in the Simple domain. Various corollaries arise from this:

— We will need clear, precise, unambiguous, preferably ‘Yes/No’ instructions that apply directly to the specified context. There can only be a small number of these instructions applicable at any one time, and even fewer choices: typically a maximum of half-a-dozen for humans, and precisely one instruction or choice-point at a time for automated systems.

— Because there is (almost) no time to think or calculate when within the Simple domain, those instructions will need to be developed and tested in another domain – usually but not always the Complicated domain.

— The Simple domain delivers fast, and reliably, only when the assumptions behind its instructions do match the real-world context. When those assumptions do not match up with the real-world, the action will ‘fail’ – in other words, deliver ‘unexpected results’, or, in SCAN terms, an inherent and enforced transit into the Not-known domain. Repeating the same actions after failure will usually make things worse. Action in the Simple domain therefore needs sensors and/or other mechanisms to identify when its assumptions no longer apply – in other words, when the context has moved ‘outside the box’ of the predefined assumptions.

— When a risk of failure is detected at run-time, the Simple domain can sometimes slow down – move away somewhat from real-time – so as to incorporate what’s usually a fairly rudimentary amount of the Complicated-domain’s methods of analysis, and thereby attempt to avoid full failure. (In SCAN terms, the action moves to the right – less-certain – and upward – less-rapid – yet still remains ‘within’ the effective bounds of the Simple domain.) In effect, there is a trade-off here between uncertainty, reliability and speed: as uncertainty increases, either speed or reliability will need increasingly to be sacrificed.
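The point about sensors for failed assumptions can be sketched in a few lines of code. This is a minimal illustration only, using the toaster as the example: `run_simple`, `Outcome`, `ASSUMPTIONS` and the two toaster functions are hypothetical names made up for this sketch. The idea it tries to show is that a Simple-domain procedure runs its steps, then checks its own assumptions against what was actually observed, and reports a transit into Not-known instead of blindly repeating itself.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Outcome:
    domain: str  # where we end up: "Simple" or "Not-known"
    note: str    # short explanation of why


def run_simple(
    procedure: Callable[[], dict],
    assumptions: Dict[str, Callable[[dict], bool]],
) -> Outcome:
    """Run a predefined procedure, then check each assumption against
    what was observed. Any failed assumption means an enforced transit
    into the Not-known domain."""
    observed = procedure()
    failed = [name for name, check in assumptions.items() if not check(observed)]
    if failed:
        return Outcome("Not-known", "assumptions failed: " + ", ".join(failed))
    return Outcome("Simple", "completed as expected")


# Hypothetical 'sensors' for the toaster: what we expect to see.
ASSUMPTIONS = {
    "timer counts down": lambda obs: obs["timer_counted_down"],
    "heater glows": lambda obs: obs["heater_glowing"],
}


def working_toaster() -> dict:
    return {"timer_counted_down": True, "heater_glowing": True}


def broken_toaster() -> dict:
    # As in the story: the timer behaves, the heater-strips do not.
    return {"timer_counted_down": True, "heater_glowing": False}


if __name__ == "__main__":
    print(run_simple(working_toaster, ASSUMPTIONS))
    print(run_simple(broken_toaster, ASSUMPTIONS))
```

In the story, my only 'sensor' at first was the timer-lights, which is why the first failure only showed up at the plate. Checking the heater-strips directly is the kind of extra sensor this corollary is asking for.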

When we don’t know what’s happened and we’re running at full speed, we are, by definition, in the Not-known domain. We can also choose to move there, “in order to remember something we never knew”. Various corollaries arise from this:

— Everything that happens here is in some way unknown, literally ‘out of control’ or ‘outside of the box’ – or, arguably, no ‘box’ at all. Out towards the extremes of this domain, there is no such thing as reliability or predictability, because there is no identifiable link between anything and anything else. Machines that rely on repeatability will usually fall into a ‘chaotic’ state here, and will typically need to be pulled out of that state by external means such as a forced reboot. The equivalent in humans is often described as ‘panic’ – a literal expression of the Greek root ‘pan-’, as ‘the everything’, all at once.

— The Not-known domain is – by definition – inherently unpredictable; and whilst everything does happen here in real-time, time itself can pass in an unpredictable way. Where some kind of known action – ‘productivity’ – is required, yet transits into Not-known are probable or possible, ‘hooks’ should be provided to make it easier to return to the Simple domain and minimise ‘wasted time’ in the Not-known. An emergency-action checklist is a classic example of such a ‘hook’ – potentially reducing uncertainty sufficiently to return towards the Simple domain.

— More broadly, principles and patterns can provide ‘seeds’ around which some form of meaning can coalesce, or provide some kind of reference-anchor or direction. These are often especially important where it’s desirable to remain ‘in’ the Not-known domain – such as for ideastorming, or to encourage serendipity – or for contexts that depend on unpredictability, such as improvisation in theatre or music.

— Because there is (almost) no time to assess within the Not-known domain, those principles and patterns will need to be developed and tested in another domain – usually in the Ambiguous domain, for principles, or in a combination of Ambiguous and Complicated for action-checklists.

— For many purposes, such as in idea-generation for experimentation, it can be useful or necessary to slow down – to ‘drop out of the chaos’ somewhat – so as to enable capture of the imagery, ideas and experiences. We might likewise need to constrain uncertainty somewhat. (In SCAN terms, the action moves to the left – less-uncertain – and upward – less-rapid, yet still remains ‘within’ the effective bounds of the Not-known domain.) In effect, there is a trade-off here between comprehensibility, possibility and speed: as the requirement for comprehensibility or applicability increases, either possibility or speed will need increasingly to be sacrificed.
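The 'hook' idea from the corollaries above can be sketched in much the same way. Again, this is only an illustration under my own assumptions: `find_hook`, `CHECKLISTS` and `PRINCIPLES` are hypothetical names, and the checklist entries are simply the things I happened to try with the toaster. If a prepared checklist exists for the symptom, it gives a route back towards Simple. If not, all we have to hold on to are principles, and we stay in the Not-known.

```python
from typing import Dict, List, Tuple

# Checklists are prepared beforehand, away from the point of action.
# These entries are illustrative, taken from the toaster story.
CHECKLISTS: Dict[str, List[str]] = {
    "toaster stays cold": [
        "check the plug",
        "push the lever all the way down",
        "pull the mains-plug to force a reboot",
    ],
}

# Principles are looser 'seeds' for when no checklist applies
# (a hypothetical example, echoing the grill-oven idea).
PRINCIPLES: List[str] = [
    "look for another way to reach the same outcome",
]


def find_hook(
    symptom: str,
    checklists: Dict[str, List[str]],
    principles: List[str],
) -> Tuple[str, List[str]]:
    """Return the domain we can move to, and what to work with there."""
    steps = checklists.get(symptom)
    if steps is not None:
        # A known checklist reduces uncertainty enough to act step by step.
        return ("Simple", steps)
    # No hook available: remain in Not-known, guided only by principles.
    return ("Not-known", principles)


if __name__ == "__main__":
    print(find_hook("toaster stays cold", CHECKLISTS, PRINCIPLES))
    print(find_hook("kettle makes odd noise", CHECKLISTS, PRINCIPLES))
```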

In the same way that both Simple and Not-known are a matched-pair at the point-of-action, Complicated and Ambiguous have the same kind of matched-pair relationship away from the point-of-action, respectively supporting analysis and experimentation.

For the Complicated domain, the core purpose is to develop ‘rules’ and suchlike that are compact enough to be usable at run-time in the Simple domain. Various points arise from this:

— Note that, by definition, its work takes place at some distance from the action: the Complicated domain does not act directly on the real-world. This can cause the misleading belief that “there’s always time for more analysis”: the reality is that such ‘analysis-paralysis’ – avoidance of commitment to action – can itself cause failure.

— The focus within the Complicated is on analysis, identifying ‘correct’ or self-certain answers within a specified algorithm. It may have considerable difficulty to ‘bootstrap’ itself with sufficient uncertainty to assess the validity of its own assumptions. (In SCAN terms, moving towards the right of the frame, yet still within the bounds of the Complicated domain.) In general, a transit to the Ambiguous – usually intentional – may be needed for this purpose.

— Unlike the Simple domain, the Complicated domain can handle any required level of ‘complicatedness’, including delay-effects and feedback-loops, as long as it fits within the core requirements of effective-certainty and effective-predictability. However, the algorithms will be actionable only to the extent that they can be partitioned into ‘chunks’ that can be applied in the Simple domain, as per above. Another trade-off applies here: increasing complicatedness in general enforces slower response and greater difficulty in partitioning into actionable ‘chunks’: increased logic-processor speeds can mask this trade-off somewhat, but by definition can never eliminate it.

For the Ambiguous domain, the core purpose is two-fold: to develop patterns and principles that can be actionable in the Not-known domain; and provide some means – typically, hypothesis and experiment – to support development of actionable algorithms in the Complicated domain and subsequently for the Simple domain. Various points arise from this:

— Note that, by definition, its work takes place at some distance from the action: the Ambiguous domain does not act directly on the real-world. This can cause the misleading belief that “there’s always time for more experiments”: the reality is that such avoidance of commitment to action can itself cause failure.

— The focus within the Ambiguous is on experiments to point towards a usable hypothesis. It may have considerable difficulty to reduce the ambiguities and uncertainties enough to make it usable as a base for algorithms – especially for systems which require certainty, such as computer-based systems – or even explainable with consistent terminology and suchlike. (In SCAN terms, this implies moving towards the left of the frame, if still within the bounds of the Ambiguous domain.) In general, a transit to the Complicated may be needed for this purpose – if only to test the hypothesis to the point of failure.

— Unlike the Not-known domain – which, by definition, is inherently particular and unique – the Ambiguous domain can handle any required level of complexity and generality, as long as the exploration does allow for arbitrary levels of uncertainty and unpredictability. However, the outcomes of the exploration will be actionable only to the extent that they can be simplified down into principles or patterns that can be applied in the Not-known domain, as per above, and/or packaged into hypotheses that can be tested in the Complicated domain and further adapted for the Simple domain. Another trade-off applies here: increasing need for generality and pattern-identification for re-use enforces slower response and risks a tendency to suppress usable and valid uniqueness.

Overall, as described in the later part of the post ‘SCAN – some recent notes’, we can summarise the kind of techniques or checks that we’d use in each domain in terms of a simple set of keywords:

— Simple

- regulation – reliance on rules and standards

- rotation – systematically switch between different elements in a predefined set, such as in a work-instruction or checklist (or in the elements in a framework such as SCAN itself)

— Complicated

- reciprocation – interactions should balance up, even if only across the whole

- resonance – systemic delays and feedback-loops may cause amplification or damping

— Ambiguous

- recursion – patterns may be ‘self-similar’ at every level of the context

- reflexion – intimations of the nature of the whole may be seen in any part of or view into that whole

— Not-known

- reframe – switch between nominally-incompatible views and overlays, to trigger ‘unexpected’ insights

- rich-randomness – use tactics from improv and suchlike to support ‘structured serendipity’

Which we could again summarise in visual form as follows:

Probably the main point I’d like to emphasise this time, though, is how fast all of this switching-around really is. It isn’t that we sit in some specific domain for long periods at a stretch: instead, as can be seen in the toaster example above, the whole thing is fractal, recursive, continuously looping through and round and within itself, often so fast that it can be hard to catch what’s really going on. The real advantage of something like SCAN is that it provides ‘just enough framework’ to be able to see all of this happening, as it happens – and thence start to gain more choice about how we do our sensemaking, decision-making and action.

Anyway, more than enough for now: time to get back to practice, I’d suggest? (Or, in my case right now, to bed. And without a butter-laden hot-cross-bun! 🙂 )

Tom,

the main point here is how to explain a simple work in a complicated pattern. I am sorry but I see this more as an anti-pattern.

thanks, Cay – though I’ll admit I’m not sure / don’t understand which part (or all) you’re describing as an anti-pattern?

one of the aims here is to actually look at how thinking-processes switch around at near-real-time. most of us (most people I’ve come across, anyway – including myself) don’t often seem to take the time to do that kind of self-observation, in order to learn from it. and (again applying SCAN to itself) we can usually only do that by stepping back a bit from the point-of-action – it’s very hard to do at the point-of-action.

(the first stage of the sweep from unconscious-incompetence to conscious-incompetence etc, in fact)

if we attempt to do this at the point-of-action, then yes, I’d agree, it becomes an unhelpful anti-pattern – it slows things down for no real gain. if we do it as a learning-exercise, the trade-off is different, and a lot better – if we do learn from it, of course. 🙂