IT-centrism and real-world enterprise-architecture

Is there an IT-based solution to every business problem? And is the IT-based solution always the most efficient and effective option?

One of my more constant struggles in EA is to get supposed ‘enterprise architects’ to think about each context in its own terms – and not assume arbitrarily that throwing some lump of computer-based IT into the mix will automatically solve every problem…

Frequently, when I try to explain this, I get accused of being ‘anti-IT’, of being some kind of antediluvian Luddite – which is not the point at all. What I am trying to explain is that, as with everything else, computer-based IT has significant and inherent limitations – and if we don’t respect those limitations, and work with them, we’re likely only to make things worse.

The classic example, perhaps, was the almost unmitigated disaster of IT-centric ‘business-process reengineering’. The sales-pitch from the vendors and consultants alike was that automating all business processes would be faster, more efficient, more reliable and much, much cheaper: but in practice it usually turned out to be none of these things – quite the opposite, in fact… As one of its originators, Michael Hammer, later admitted:

I wasn’t smart enough about [the human factors in processes]. I was reflecting my engineering background and was insufficient appreciative of the human dimension. I’ve learned that’s critical.

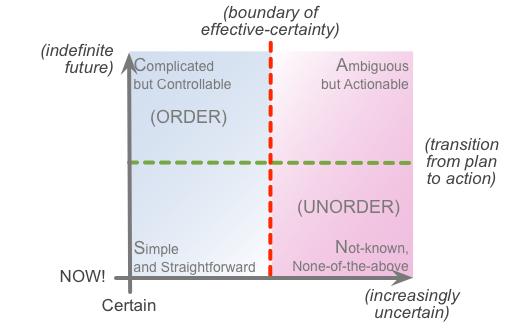

The other key point that’s too often missed here, about ‘the human dimension’, is that people have to take over the sensemaking, decision-making and enaction of processes when the IT runs up against its practical limits. The blunt reality is that the IT can only ever handle the more certainty-oriented subset of the real context – in SCAN terms, the part of the context over to the left of the ‘boundary of effective certainty’:

Over in that part of the context – the more certainty-oriented or ‘easily-repeatable’ parts that we could map over on the left-hand side of the frame – using IT is usually far quicker, cheaper, more reliable and the rest. But once we cross that ‘boundary of effective-certainty’, IT-systems simply don’t work: they give wrong answers, or incomplete answers, giving rise to inaccurate decision-making and, often, rapidly-spiralling levels of failure-demand.

IT-centrism is, in essence, an extension of the delusions of Taylorism, that desire or hope or assumption that everything ‘should’ be subject to predictable control, and hence ‘should’ be controllable by certainty-oriented means. Unfortunately, this just doesn’t work in practice: any real-world context will always include elements of inherent uncertainty.

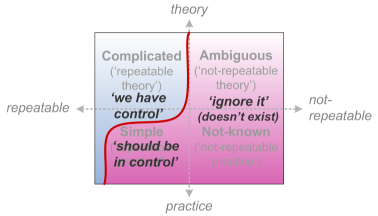

In practice, the Taylorist delusion plays out in two distinct forms. The first is an assertion that if the (automated) system can’t handle it, we can safely ignore it. The boundary of effective-certainty thus becomes an absolute cut-off – the boundary of the capabilities of the overall system itself – such that anything on the far side of that boundary is dismissed as ‘Somebody Else’s Problem’. Unfortunately, there’s often a significant difference between the theoretical boundary (upper part of the SCAN frame) and the boundary that applies in practice (lower part of the frame) – often resulting in a system that looks usable enough in theory, but is too constrained in practice for real-world use:

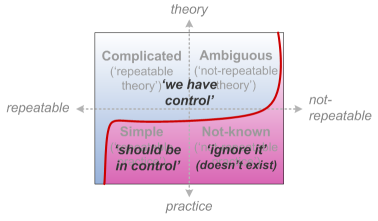

The more extreme form of the delusion asserts that everything is reducible to certainty – that there is no ‘boundary of effective certainty’, but instead that certainty can and does extend all the way over to the far-right of the SCAN frame. We see this, for example, in attempts to make laws or ‘business-rules engines’ to cover every possible eventuality or special-case. The two-fold reality that this delusion refuses to face is that, by definition, a quest for ‘absolute certainty’ extends to infinity, and hence is inherently unachievable in practice; and also, in practice, piling on more and more ‘special-cases’ slows the whole thing down so much that it simply doesn’t work anyway – hence the kind of skew between theory and practice illustrated in this SCAN map:

Instead of trying to force everything to fit to an IT-centric ‘solution’, a better and wiser approach is to identify the effective limits of IT in a given context, and build a solution that supports people in doing the parts of the work that the IT can’t reach.
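As a rough illustration of that approach, here is a minimal Python sketch, assuming a hypothetical set of rules that each either decide a case or decline it. Cases the rules can handle are processed automatically (the left of the boundary of effective-certainty); everything else is handed to a human work-queue rather than being forced through the rules. The names `Router` and `standard_retirement`, and the case fields, are invented for illustration only: this is not any real case-management product or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical rule type: returns a decision if it can handle the case, else None.
Rule = Callable[[dict], Optional[str]]


def standard_retirement(case: dict) -> Optional[str]:
    """Hypothetical rule: only the simple, fully-documented case is automated."""
    if case.get("kind") == "standard" and not case.get("missing_documents"):
        return "auto-processed"
    return None  # decline: this case is beyond what the rule can safely decide


@dataclass
class Router:
    rules: list[Rule]
    # Cases that no rule could handle, kept for people to work on.
    human_queue: list[dict] = field(default_factory=list)

    def handle(self, case: dict) -> str:
        for rule in self.rules:
            decision = rule(case)
            if decision is not None:
                return decision  # certainty-oriented part: automate it
        # Past the boundary: don't guess, hand the case over to a person.
        self.human_queue.append(case)
        return "referred-to-human"


router = Router(rules=[standard_retirement])
print(router.handle({"kind": "standard", "missing_documents": []}))
print(router.handle({"kind": "disability", "missing_documents": ["employer sign-off"]}))
```

The design choice that matters here is that the hand-over to people is an explicit, first-class path in the system, rather than an error condition or ‘Somebody Else’s Problem’.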

As a real-world example of where and why an IT-centric approach to information-systems won’t always work, and why a more manual approach can sometimes work better for the actual need, take a look at this Washington Post piece: “Sinkhole of bureaucracy: Deep underground, federal employees process paperwork by hand in a long-outdated, inefficient system” – about the US government federal-employee pensions-administration.

Notice that yes, on the surface, it’s incredibly inefficient: taking information from paper folders, searching out the missing pieces (often from electronic records), getting all the required signatures, typing the whole lot back into an IT-system, and then filing the paper records away in one of almost 30,000 filing-cabinets. It’s incredibly expensive, and it hasn’t materially improved its average-case performance for more than 30 years (though, to be fair, now handling a much larger case-load).

But notice that three separate attempts to convert the whole thing to a more modern IT-system have failed – the last one at a cost of somewhat over US$100 million. The reason why those ‘improvements’ have failed is not a fault of IT as such, but simply that the context is too ‘messy’ – too laden with wicked-problems – for ‘sameness’-oriented approaches to be able to work well enough for the need: as the article describes, it’s a tangled mess of old laws and special-cases and variants and person-to-person chasing that inevitably makes any rule-based IT-system collapse under its own weight. It’s not anyone’s fault: it’s not intransigence or Luddite attitudes on the part of the workers or managers, and it’s not the developers’ fault; it’s just that the context is not a good fit for the usual kind of IT-based approaches that work well in, say, a banking transaction-system.

Before an all-inclusive IT-system could be made to work here, it’d take years upon years to sort out the underlying mess, not least because many of the tangles would require explicit changes in the law. And with laws intersecting with other laws intersecting with yet other laws, and with any and every change spawning off requirements for maybe a dozen or more other changes, this really is one heck of a mess: not easy to resolve at all.

So, for now, this is a real case where the enterprise-architecture and information-architecture can’t rely on IT alone: we have to take the complexity and the human context into account, and work with the mess rather than trying to force-fit it to some predefined concept of ‘control’.

For this type of mess, human-oriented systems like this often provide better solutions than purely IT-oriented ones. Yes, such systems are ‘inefficient’, but they’re actually surprisingly effective, given the complexity of the context – as quite a few of the comments that follow the article attest.

Contexts like these are not at all uncommon: yet if we try to apply conventional IT-oriented approaches to them, all we’re going to do is make things worse – and often expensively worse, at that. That’s why we need an enterprise-architecture that’s broader than IT alone – broad enough, in fact, to be able to identify what type of context it’s dealing with, and select an appropriate mix of IT, human and machine to match up with the context’s needs.

Two thoughts:

The first is that “and not assume arbitrarily that throwing some lump of computer-based IT into the mix will automatically solve every problem…” is anything but an ‘anti-IT’ position. IT’s version of Hell involves the implementation of the squishy, imprecise, ‘sort of maybe like’. If there isn’t a rule to follow, no one will be satisfied with the result. This is because IT doesn’t solve problems, it automates the repetition of solved problems.

The second is that I’d suggest that the boundary of effective-certainty is less a bright line, and more a departure point between approaches. To the left, IT can automate a solution, providing control. To the right, IT’s contribution is no longer control, but facilitation of human response via storage, communication, and perhaps some pattern matching. All in all, though, the human dimension is very much in the driver’s seat as the level of certainty decreases.

@Gene: “IT doesn’t solve problems, it automates the repetition of solved problems.”

That’s a really good point. (A few rare exceptions, of course, such as Gordon Pask’s work for the ‘Cybernetic Serendipity’ exhibition, but they’re kind of ‘the exception that proves the rule’ 🙂 )

It also assumes that the problem a) is ‘solvable’ – which almost by definition only applies to tame-problems – and b) has been solved – which is often kinda questionable in many real-world contexts.

@Gene: “To the right, IT’s contribution is no longer control, but facilitation of human response via storage, communication, and perhaps some pattern matching.”

Yep – hence adaptive case-management and Sigur Rinde’s ‘Thingamy’ (see http://www.thingamy.com/ and http://30megs.com )

@Gene: “the human dimension is very much in the driver’s seat as the level of certainty decreases”

Yep – and again, it’s often terrifying to see how much the IT-obsessed (or, more generally, ‘control’-obsessed) try to force-fit the world to their supposed certainties as “the level of inherent certainty decreases”.