And more on toolsets for EA

Delighted to see that the past couple of posts on toolsets for enterprise-architecture and suchlike have stirred-up quite a bit of interest. So let’s keep going, and see if we can make this happen for real. 🙂

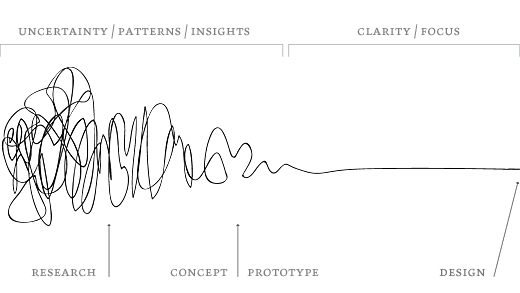

One thing that definitely worked was Damien Newman’s description of the development-process as ‘the Squiggle’:

And yes, people definitely ‘got it’ that most of the existing EA tools are designed only to satisfy the needs of the ‘easy bit’ at the right-hand end of the Squiggle, and that we therefore needed tools that will work with the rest of the Squiggle as well.

Hence a fair few people came up with suggestions for such tools:

- Scapple (via Peter Bakker and Ric Hayman)

- Tinderbox (via Ric Hayman)

- CmapTools (via J-B Sarrodie)

- Protégé and Essential (via Adrian Campbell)

The catch is that whilst all of those are great for the left-hand side of the Squiggle, they don’t connect up with any of the tools for the right-hand side – ArchiMate, UML, BPMN and so on. In fact the only toolmaker I know so far who’s made serious moves to link everything together is Phil Beauvoir, in the Archi toolset:

- ArchiToolkit: Idea was free sketch/canvasses which could be then constrained/mapped to formal metamodels if required. Going from sketches, inventory making, process capturing, <anything> to ArchiMate, for example. Perhaps semantic reasoning.

Yet even then, as he says:

- ArchiToolkit: The whole “sketch” and “canvas” thing in Archi was never really developed beyond POC stage, effectively.

So again, let’s make this happen.

(Please consider that, unless otherwise explicitly stated, the remainder of this post is licensed as Creative Commons BY-SA: Attribution (here), Share-Alike, Commercial-use allowed, Derivation allowed. The aim is to ensure that whilst implementations of a toolset based on these descriptions may be commercial and proprietary, and individual editors may also be proprietary, the overall data-exchange formats and editor-definition formats must be open and non-proprietary.)

Let’s take it past just a proof-of-concept stage, and build towards something that will link all the way across the Squiggle – from ‘messy’ experiments and explorations all the way across to formal-rigour, and back again.

And that’s our first requirement: any entities in any models from any part of the development-process can link together with any other entities.

It’s not as hard as it might sound: it’s not that we need a single tool to connect across all of that space, but a unified toolset – which is not the same requirement at all. Agreed that it’s simply not feasible (not yet, anyway!) to build one tool that could cover everything from free-form scribbles to every type of formal-rigour, usable across every part of the toolset-ecosystem; but as far as I can see, it is already feasible to build a set of distinct, discrete tools that can also work together in a unified way, as a platform, to make that kind of interconnection possible.

There are four parts to it that we need to resolve:

- the structure of the underlying data-model

- the structure of the data-interchange files that could exchange information between implementation-instances across the whole toolset-ecosystem

- the structure of the files and data to create editors (tools) that would work in that consistent way

- the structure of the apps and user-interfaces to allow the editors to work on the respective underlying-data

Of those, the data-interchange file-format and the editor-specification format must be open and non-proprietary – otherwise we’ll be little better off than we are already, with the current mess of incompatibilities between proprietary file-formats. The other two parts – the underlying data-model and the apps themselves – can be either proprietary or non-proprietary: I’d very much prefer to see a complete open-source implementation, but ultimately it may not matter all that much, as long as we have access to the sharable data and the means to make sense of it.

There’ve already been a fair few suggestions for data-models and databases:

- JSON-HyperSchema (and ‘elegant APIs’ for same) and Jsonary (via J-B Sarrodie)

- HypergraphDB (plus descriptive interview) (also via J-B Sarrodie)

- Topic Maps (indirectly also via J-B Sarrodie)

- RDF/OWL (via Peter Bakker, Ivo Velitchkov, Phil Beauvoir and Adrian Campbell)

- MongoDB (via me) and NoSQL/document-databases in general (via J-B Sarrodie and others)

Of these, probably Topic Maps represents the closest fit to the conceptual level of the data-needs as I understand them. I acknowledge that a lot of people have been pushing me towards RDF/OWL, but I still don’t think it’ll do the job – not least because of its requirement that every relationship is binary, whereas I suspect that we’re quite often going to hit up against true n-tuples.
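To make the binary-versus-n-tuple distinction concrete, here is a minimal Python sketch; the participants, roles and identifiers are all hypothetical. A single relationship joining four participants can be held as one object. Where every relationship must be binary, the usual workaround is to turn the relationship itself into a node and hang each participant off it as a (subject, predicate, object) triple; the naive pairwise alternative loses the fact that all four took part in one and the same relationship.

```python
from itertools import combinations

# Hypothetical n-ary relationship: one link, four participants, each with a role.
nary_link = {
    "id": "rel-001",
    "type": "delivers",
    "participants": [
        {"role": "provider", "ref": "team-a"},
        {"role": "consumer", "ref": "customer-x"},
        {"role": "service", "ref": "svc-billing"},
        {"role": "channel", "ref": "web-portal"},
    ],
}


def reify_as_binary(link):
    """Binary-only form: the link becomes a node, and each participant
    is attached to that node by one (subject, predicate, object) triple."""
    node = link["id"]
    triples = [(node, "type", link["type"])]
    for p in link["participants"]:
        triples.append((node, p["role"], p["ref"]))
    return triples


def pairwise_binary(link):
    """Naive binary form: one edge per pair of participants.
    The roles, and the single shared relationship, are lost."""
    refs = [p["ref"] for p in link["participants"]]
    return list(combinations(refs, 2))
```

The reified form keeps all the information but adds an extra node per relationship, which is part of the trade-off being weighed above.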

For a real-world example, MongoDB looks like it’d be able to tackle any data-structure we’d need, certainly up to a proof-of-concept toolset that would already be really useful in its own right. (Mongo also has a very useful trick in its query-language such that we can ‘skip over’ some aspects of arrays within searches: I’ll explain why that’s so important in a later post, when we get a bit deeper into the data-structure.)

The underlying database-technology isn’t actually that important, as long as it can implement the required data-structure one way or another. (There’ll be all manner of trade-offs, but those probably won’t matter all that much even for a fairly feature-rich proof-of-concept.)

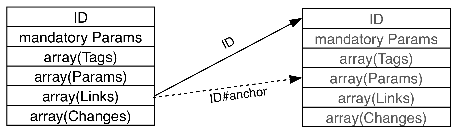

If we think in terms of MongoDB for the moment – in other words, an extensible, field-structured yet schema-free document-type model – then we can place all of the core data-items into a single table or ‘collection’. (The key exception would be user-account definitions, which should be in a separate collection, for security-reasons at least.) And as described in the section ‘Model-types and object-ownership’ in the previous post ‘More notes on toolsets for EA’, we can describe every object (and yes, I really do mean ‘every object’) in terms of just one fundamental object-type, with variations that can be accommodated automatically within MongoDB’s query-language:

- identifier(s) + mandatory parameters

- array (0..n) of tags

- array (0..n) of optional-parameters

- array (0..n) of optional pointers

- array (0..n) of change-records
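As a minimal sketch of that single object-type, assuming Python dataclasses and hypothetical field names (`kind`, `params`, `pointers`, `changes`, plus `role` and `ref` inside each pointer), the five parts map directly onto one class. Because pointers only carry identifiers, any Thing can point at any other Thing, whether it is a free-form sketch-note or a formal model element.

```python
from dataclasses import dataclass, field, asdict
from typing import Any


@dataclass
class Thing:
    """The one fundamental object-type (sketch; field names are assumptions)."""
    id: str                                                        # identifier
    kind: str                                                      # mandatory parameter (hypothetical)
    tags: list[str] = field(default_factory=list)                  # 0..n tags
    params: list[dict[str, Any]] = field(default_factory=list)     # 0..n optional-parameters
    pointers: list[dict[str, str]] = field(default_factory=list)   # 0..n pointers to other Things
    changes: list[dict[str, Any]] = field(default_factory=list)    # 0..n change-records


def link(source: Thing, target: Thing, role: str, who: str) -> None:
    """Point one Thing at another, and log that change on the source."""
    source.pointers.append({"role": role, "ref": target.id})
    source.changes.append({"op": "link", "role": role, "ref": target.id, "by": who})


# A free-form sketch-note linked to a formal, ArchiMate-style element.
note = Thing(id="note-17", kind="sketch-note", tags=["squiggle", "workshop"],
             params=[{"text": "customers keep phoning about invoices"}])
element = Thing(id="bp-invoicing", kind="archimate:BusinessProcess")
link(note, element, role="elaborated-as", who="user-1")
```

Both ends of the link are the same type, which is the ‘points back to itself’ property described in the next paragraphs.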

Each of the arrays contains objects, which themselves may have variable numbers, names and types of parameters. But by selectively using the same names for certain parameters – such as identifiers pointed to by link-pointers – we can greatly simplify the search, reducing the amount of recursion we’d otherwise need to do. (Again, I’ll explain this point in more depth when we get into technical-detail for implementation in a possible later post.)
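To illustrate why shared parameter-names help: in MongoDB, a dot-notation query such as `{"pointers.ref": "bp-invoicing"}` matches any document where at least one element of the `pointers` array has that `ref`, without needing to know where in the array it sits. The Python below mimics that behaviour over an in-memory list, as a sketch; `pointers`, `ref` and `role` are assumed names, not a defined schema.

```python
def pointing_to(collection, target_id):
    """Ids of Things holding at least one pointer whose 'ref' is target_id.
    Every pointer uses the same field name, so one flat scan is enough."""
    return [
        thing["id"]
        for thing in collection
        if any(p.get("ref") == target_id for p in thing.get("pointers", []))
    ]


def linked_from(collection, source_id):
    """Ids that a given Thing points to (the outbound direction)."""
    for thing in collection:
        if thing["id"] == source_id:
            return [p["ref"] for p in thing.get("pointers", []) if "ref" in p]
    return []


things = [
    {"id": "note-17", "pointers": [{"role": "elaborated-as", "ref": "bp-invoicing"}]},
    {"id": "view-3", "pointers": [{"role": "shows", "ref": "bp-invoicing"},
                                  {"role": "shows", "ref": "app-erp"}]},
    {"id": "bp-invoicing", "pointers": [{"role": "served-by", "ref": "app-erp"}]},
    {"id": "app-erp"},
]
```

Had each editor used its own pointer field-name (`shows`, `servedBy` and so on), every search would first need to discover which fields hold links.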

The result is that, from the perspective of entity-relationship modelling, there is, in effect, just one data-entity type, whose pointers technically point back to ‘itself’:

Not quite that idealised ‘mote’ that I’d described in the previous posts, but not far off it…

As I see it at present, the data-interchange format is an expression of that nominal data-structure, including the links between entities, as appropriate. As per above, it’ll probably be implemented as some variant of JSON or Topic Maps – or that’s my understanding at present, anyway.
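Assuming the JSON variant, a minimal sketch of export and import might look like this. The envelope fields (`format`, `things`) and the format-string are hypothetical, not an existing standard. Pointers to Things outside the file are reported rather than rejected, since an export will often be a fragment of a larger model.

```python
import json

FORMAT = "ea-interchange/0.1"  # hypothetical format identifier


def export_things(things):
    """Serialise Things, including their pointers, as one JSON document."""
    return json.dumps({"format": FORMAT, "things": things}, indent=2, sort_keys=True)


def import_things(text):
    """Parse an interchange document; list pointers whose target is not in it."""
    doc = json.loads(text)
    if doc.get("format") != FORMAT:
        raise ValueError(f"unsupported format: {doc.get('format')!r}")
    things = doc["things"]
    known = {t["id"] for t in things}
    dangling = [
        (t["id"], p["ref"])
        for t in things
        for p in t.get("pointers", [])
        if p["ref"] not in known
    ]
    return things, dangling


sample = [
    {"id": "note-17", "tags": ["squiggle"],
     "pointers": [{"role": "elaborated-as", "ref": "bp-invoicing"}]},
    {"id": "bp-invoicing", "tags": [],
     "pointers": [{"role": "served-by", "ref": "app-erp"}]},
]
things, dangling = import_things(export_things(sample))
```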

The tricky part is the editor-definition language. Starting from what is probably the wrong end of the picture, I’d like it to take a form somewhat like Literate CoffeeScript, as parsed by Docco – a straight text-file, where the main-text is Markdown-format, but selected parts of the Markdown are repurposed for the definition. (For example, headings represent sections of the definition, unordered-lists represent object-definitions, and ordered-lists represent arrays.)
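A rough sketch of how such a literate definition might be read, in Python with simple regular expressions rather than a full Markdown parser: headings open sections, `- key: value` bullets become object fields, numbered items become array entries, and every other line is treated as commentary and skipped. The section names and keys in the example are hypothetical.

```python
import re


def parse_editor_definition(text):
    """Map a Markdown-style editor-definition onto sections of fields and items."""
    sections = {}
    current = None
    for line in text.splitlines():
        heading = re.match(r"^#+\s+(.*\S)\s*$", line)
        bullet = re.match(r"^\s*[-*]\s+([^:]+):\s*(.*)$", line)
        numbered = re.match(r"^\s*\d+\.\s+(.*\S)\s*$", line)
        if heading:
            name = heading.group(1).strip().lower()
            current = sections.setdefault(name, {"fields": {}, "items": []})
        elif current is None:
            continue  # prose before the first heading is commentary
        elif bullet:
            current["fields"][bullet.group(1).strip()] = bullet.group(2).strip()
        elif numbered:
            current["items"].append(numbered.group(1))
        # any other line is literate commentary and is ignored


    return sections


EXAMPLE = """\
An editor for quick sketch-notes. Prose like this is commentary.

# Core definitions

- id: sketch-editor
- language: en

# Data definitions

Priority levels offered in a pulldown:

1. low
2. medium
3. high
"""
sections = parse_editor_definition(EXAMPLE)
```

A real implementation would more likely sit on a proper Markdown parser, but the mapping from Markdown structure to definition structure would be much the same.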

In the model I’ve been working on so far, an editor is essentially a kind of plug-in that will work on data-objects in some defined way. The key point is that there could be any number of them: hence why the whole edit-mechanism needs to be structured as a platform for plug-ins. And whilst there would be standard editors – because the whole point of this is to support information-sharing in various ways – we also need to allow for the ‘messiness’ stages, within which some ways of working are unique to an individual person: hence it must also be possible to define editors ourselves, and then share those editors with other people, too. Hence the need for some kind of definition-language for editors. (At some later stage we might extend this to a visual-editor for editors, but the exchange-format would be primarily textual anyway, so it’s simplest to start just with the text part.)

Editor plug-ins define all of what happens on the screen and in the underlying database. For the very first stages we could start with basic form-type layouts, but we would very quickly need to move to various types of graphic-layout and graphic-editing – hence the editor-definition must be able to support that. But in a plain-text format that doesn’t assume (or probably even allow) low-level code. Which is where it gets tricky…

As I see it, an editor-definition has four distinct parts:

- core-definitions: identity, copyright (if any), language, etc

- object-definitions: tags and parameters for objects that this editor creates and/or edits, plus default display-graphics etc

- data-definitions: default-values and predefined-lists (for pulldowns etc)

- view-definitions: the actual editor-functionality: menus, trigger-events and responses, data-manipulation, context-sensitive help etc
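The four parts can be held as one structure once parsed. In this Python sketch, the required core keys (`id`, `language`) and the shape of a view entry (`on`, `object`, `do`) are assumptions for illustration; the check shows one benefit of keeping the parts separate, namely that views can be validated against the object-definitions before the editor is ever loaded.

```python
from dataclasses import dataclass, field

REQUIRED_CORE = ("id", "language")  # hypothetical minimum for core-definitions


@dataclass
class EditorDefinition:
    core: dict = field(default_factory=dict)     # identity, copyright, language, etc
    objects: dict = field(default_factory=dict)  # object-type -> tags, params, default graphic
    data: dict = field(default_factory=dict)     # list-name -> predefined values
    views: list = field(default_factory=list)    # event-responses: {"on", "object", "do"}

    def problems(self):
        """Report missing core keys, and views that act on undefined object-types."""
        issues = [f"core: missing '{k}'" for k in REQUIRED_CORE if k not in self.core]
        for view in self.views:
            obj = view.get("object")
            if obj is not None and obj not in self.objects:
                issues.append(f"view '{view.get('on')}': unknown object type '{obj}'")
        return issues


sketch = EditorDefinition(
    core={"id": "sketch-editor", "language": "en"},
    objects={"note": {"tags": ["sketch"], "graphic": "sticky.svg"}},
    data={"priority": ["low", "medium", "high"]},
    views=[{"on": "double-click", "object": "note", "do": "edit"},
           {"on": "drop", "object": "actor", "do": "create"}],
)
```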

The basic text-only format alone would be sufficient for forms-based editors; for editors that use graphics, we could probably include the graphics by reference, via Markdown links or some such. What I’m particularly trying to avoid is bundling low-level code as part of the editor-definition itself.

The first three parts of the definitions are pretty straightforward: they could easily be described in a JSON-like format, though preferably made somewhat more readable and commentable as a ‘literate’-type format, as per above.

The view-definitions part, though, is not straightforward, and I’ll admit I’m still decidedly struggling with how best to make it work. Conceptually at least, it’s straightforward enough for forms and for basic graphics, but the language-structure I roughed-out a couple of years back – somewhat like an event-oriented variant of the classic HyperTalk language – may not be able to extend far enough to support an equivalent of what Archi can already do with ArchiMate or the like. (I do have some ideas on this, but I need to talk them through with someone more competent at language-parser development than I am.) The challenge is still to do all of this without requiring direct access to low-level code – in part because anything that directly accesses low-level code is a security-risk, or at the least can screw up both view and data, but in part also because the huge range of user-interfaces and interface-capabilities across the toolset-ecosystem pretty much mandates at least some level of abstraction.

The only way out that I’ve seen so far is to build, in parallel, code plug-ins that are independent of any specific editor, but whose functionality can be called-on by editors. The analogy is sort of somewhere between a conventional ‘require’-statement (a kind of declaration in the core-definitions section of an editor-definition that this editor needs that code-module, and won’t run without it), and/or a FORTH-style ‘word’ (which would then expose one or more new keywords that can be used in the view-definition scripting-language). These code plug-ins, however, would not be user-definable – at least, not in the same way that the editors are. They need to be understood as part of the code-base, rather than the editor-base: I can’t as yet see any viable way round that constraint.
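A minimal Python sketch of that arrangement, with hypothetical module and word names: code plug-ins register named words in an action-library; an editor declares which modules it requires, fails to load if any are missing, and its script can use only the words those modules expose. This borrows the FORTH idea of a vocabulary of words only loosely (there is no stack here).

```python
class ActionLibrary:
    """Registry of code plug-ins: part of the code-base, not user-definable."""

    def __init__(self):
        self._modules = {}  # module-name -> {word: callable}

    def register(self, module, words):
        self._modules[module] = dict(words)

    def load_editor(self, editor_id, requires):
        """Like a 'require': refuse to load if any required module is missing,
        otherwise return the combined vocabulary of words the editor may use."""
        missing = [m for m in requires if m not in self._modules]
        if missing:
            raise LookupError(f"editor {editor_id!r} needs missing module(s): {missing}")
        vocabulary = {}
        for m in requires:
            vocabulary.update(self._modules[m])
        return vocabulary


def run_script(vocabulary, script):
    """Run a view-script given as (word, *args) tuples, using only known words."""
    results = []
    for word, *args in script:
        if word not in vocabulary:
            raise NameError(f"unknown word {word!r}")
        results.append(vocabulary[word](*args))
    return results


lib = ActionLibrary()
lib.register("layout", {"align": lambda *ids: f"aligned {len(ids)}",
                        "group": lambda *ids: list(ids)})
vocab = lib.load_editor("canvas-editor", ["layout"])
```

The editor-definition itself still contains no low-level code: it only names modules and calls their words.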

Oh, one other key point: an editor would not be run as a script, direct from the text-format: that would be way too slow. Instead, we’d load the editor-definition into the app, and then compile it down to a Thing (or, more likely, a set of Things) that sit in the same collection as all the other data-Things, as described earlier. For this reason, the editor-editor – the editor that manages editors – would be the first editor-definition that we’d need to write: a facility to load, search, display and select from the current set of editors. Once that’s in place, pretty much everything follows automatically from that – hence it’s a key requirement for any proof-of-concept. (That kind of recursion isn’t unusual, by the way: the first procedure that we write for a quality system is the procedure to write procedures, and the first principle that we write for an enterprise-architecture is about the primacy of principles.)
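Here is one way the compile step might look, sketched in Python under assumed names (`kind`, `params`, `pointers`, and the `has-part` / `part-of` roles are all hypothetical). The editor becomes a root Thing plus one Thing per object-definition, all stored in the same collection as ordinary data; the heart of an editor-editor is then just a query over that collection.

```python
def compile_editor(definition, collection):
    """Store a parsed editor-definition as Things in the shared collection."""
    editor_id = definition["core"]["id"]
    root = {"id": editor_id, "kind": "editor", "tags": ["editor"],
            "params": [definition["core"]], "pointers": []}
    parts = []
    for name, spec in definition.get("objects", {}).items():
        part_id = f"{editor_id}/object/{name}"
        parts.append({"id": part_id, "kind": "editor-object", "tags": ["editor-part"],
                      "params": [spec], "pointers": [{"role": "part-of", "ref": editor_id}]})
        root["pointers"].append({"role": "has-part", "ref": part_id})
    collection.extend([root, *parts])
    return editor_id


def list_editors(collection):
    """The minimal job of an editor-editor: find every editor in the collection."""
    return sorted(t["id"] for t in collection if t.get("kind") == "editor")


collection = [{"id": "note-17", "kind": "sketch-note", "tags": [], "pointers": []}]
compile_editor({"core": {"id": "sketch-editor", "language": "en"},
                "objects": {"note": {"graphic": "sticky.svg"}, "arrow": {}}}, collection)
compile_editor({"core": {"id": "canvas-editor", "language": "en"}}, collection)
```

Because editors are Things, the same search, link and interchange mechanisms that work on model data also work on editors.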

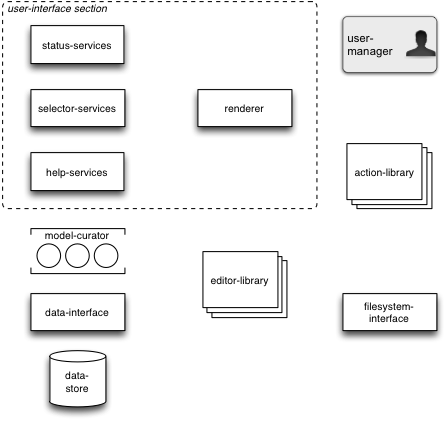

On now to the final part of the puzzle, the apps and user-interfaces. As I understand it, this is actually a lot more straightforward than it might seem at first glance: there’s quite a lot of it, but there’s nothing new as such in the underlying technologies that it would need, and with proper attention to containers and components (thank you Simon Brown!) and clear separation-of-concerns, it should (I hope!) come together with relative ease. From what I’ve seen so far, the architecture consists of the following key elements:

- data-store: the underlying storage for Things, as described earlier

- data-interface: an abstraction-layer that manages data-access and data-change – needs plug-ins for different types of data-store

- filesystem-interface: an abstraction-layer that manages input and output for the underlying filesystem (may be server-only for some app-types)

- user-manager: handles everything to do with users, including access-controls and view/edit-rights

- model-curator: acts as ‘custodian’ for the set of Things currently being referenced and in use by editors (including session/settings-data as well as Things-data)

- editor-library and editor-switcher: maintains the currently-loaded stack of editors, and passes events to the editor currently on top of the stack

- action-library: maintains the currently-loaded set of actions (code plug-ins), and passes events to the respective action

- selector-services: maintains and displays the current menu-sets (or equivalents), and triggers action-events as appropriate

- status-services: displays current status and messaging

- help-services: displays context-sensitive help (as registered by editors)

- renderer: displays the user-interface content, including setup of user-interface event-triggers
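As an illustration of the editor-switcher element, here is a minimal Python sketch with hypothetical editor names and event names: loaded editors sit on a stack, and an event goes only to the editor on top. Popping an editor hands control back to the one beneath it.

```python
class EditorSwitcher:
    """Stack of loaded editors; events are passed to the top editor only."""

    def __init__(self):
        self._stack = []  # list of (editor-name, {event: handler})

    def push(self, name, handlers):
        self._stack.append((name, handlers))

    def pop(self):
        return self._stack.pop()[0]

    def dispatch(self, event, payload=None):
        """Send an event to the top editor; None if no editor or no handler."""
        if not self._stack:
            return None
        _name, handlers = self._stack[-1]
        handler = handlers.get(event)
        return handler(payload) if handler else None


switcher = EditorSwitcher()
switcher.push("model-browser", {"select": lambda p: f"browse {p}"})
switcher.push("sketch-editor", {"select": lambda p: f"sketch {p}"})
```

Whether an unhandled event should fall through to lower editors on the stack is a design choice left open here; this sketch simply ignores it.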

(There may also need to be a distinct central-controller element, but it may not be required if the event-structure is set up correctly.)

We could summarise this visually as follows:

As per all of my other stuff around Enterprise Canvas and the like, I’m assuming a concept of ‘everything is a service’, with service-activities triggered by events. On one side, there are event-responses specified in the editor-definition; on the other, I’m assuming a standardised cross-mapping between actual user-interface metaphor (keyboard/menu/mouse, touch-and-gesture etc) and nominal interface-function, such that editor-definitions would work in the same way (as far as practicable, anyway) on whatever actual user-interface metaphor applies on that part of the toolset-ecosystem. It’s the task of the renderer and the selector-services to make sure that the right event-triggers are in place for the user-interface to enact as required, and for the editor-library and action-library to respond to as appropriate.
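The cross-mapping can be sketched as a lookup from (interface-metaphor, raw input) to a nominal interface-function, so that an editor-definition only ever responds to nominal functions. The metaphors, inputs and function-names below are hypothetical examples, not a proposed standard vocabulary.

```python
# Hypothetical cross-mapping: (metaphor, raw input) -> nominal interface-function.
UI_MAPPINGS = {
    "desktop": {"double-click": "open", "right-click": "context-menu", "delete-key": "remove"},
    "touch": {"double-tap": "open", "long-press": "context-menu", "swipe-left": "remove"},
}


def to_nominal(metaphor, raw_input):
    """Translate a device-specific input into a nominal function, if mapped."""
    return UI_MAPPINGS.get(metaphor, {}).get(raw_input)


def route(metaphor, raw_input, editor_handlers):
    """Call the editor's response for the nominal function, if it defines one."""
    nominal = to_nominal(metaphor, raw_input)
    if nominal is None or nominal not in editor_handlers:
        return None
    return editor_handlers[nominal]()


handlers = {"open": lambda: "opened", "remove": lambda: "removed"}
```

The same editor handlers then work unchanged on both desktop and touch, which is the point of putting the mapping in the renderer and selector-services rather than in each editor.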

Those are the basic ideas so far for the architecture, anyway: over to you for comments and suggestions, if you would?

Interesting topic. Three years ago I created an outline design for an EA tool, which had three distinct layers.

The base layer was driven by an ontology, which enabled you to import your own dictionaries/data from any source and convert them into an ontology. Funnily enough, I met a company who had that bottom bit. Believe it or not, they imported the entire ARIS database into their ontology converter (in front of me, in minutes).

The second tier was a modelling viewer with text auto-complete. Basically, the content of any model is driven by the content of the ontology, which enables local language; it also removes the need for QA and for inventing terms people don’t know.

The third tier was a Google-type search tier, which would enable people to search for the content (models and content from the third tier) they were interested in, and be returned the options (as with Google) to view as images, text, etc.

The third tier’s goal is to solve the issue that all EA tools suffer from: getting the content out easily, without the aid of a vendor. Also, content must be driven by conditional formatting, which enables you to create views based on different stakeholder needs.

The tool also enabled designers to model the service-design aspects and plumb them into the business and technology delivery (full iPad design support), giving full outside-in and inside-out alignment.

In terms of what happened: well, I had early discussions with software vendors, but was probably too far ahead for the times. But hey, you never know: the need for real-time modelling might just be right 🙂

Hi Mike – yes, sounds really interesting. 🙂

What we’re finding – just as you did – is that lots of people have single parts of the puzzle, often really well resolved (as in your example of the ontology-importer). What’s missing is something / someone / somelotsofpeople / somewhatever to link all of the pieces together into a unified whole – or at least unified enough that multiple and useful pathways through that whole become possible.

I’ve been nibbling-away at this for more than a decade now, and likewise have had quite a few talks with vendors over the years, but none of those discussions have ever really gone anywhere beyond polite expressions of interest. I can understand why, I suppose: it’s often very much not in vendors’ interest that the joined-up-whole should exist, unless they themselves control the entirety of that unified-whole – which I don’t think would be possible, given the technical and human-skillset constraints.

On ‘real-time modelling’, it does sound like you’ve been addressing (yet another) different aspect of this unified-whole: talk somewhen on this, perhaps?

And yes, whilst we’ve certainly been “too far ahead for the times”, it’s possible that at this stage it at last “might just be right”. 🙂 Let’s make it happen this time?