Decision-making – linking intent and action [3]

How is it that what we actually do in the heat of the action can differ so much from the intentions and decisions we set beforehand? How can we bring them into better alignment, to ‘keep to the plan’? And how does this affect our enterprise-architectures?

What we’ve been looking at in this series of posts is a key architectural concern: at the moment of action, no-one has time to think. Hence to support real-time action, the architecture needs to support the right balance between rules and freeform, belief and faith, in line with what happens in the real-world context. And it also needs to ensure that we have available within the enterprise the right rules for action when rules do apply, and the right experience to maintain effectiveness whenever the rules don’t apply.

As we saw in the previous parts of this series, this implies that within the architecture we’ll need to include:

- a rethink of ‘command and control’ as a management-metaphor [see Part 1 of this series]

- services to support each sensemaking/decision-making ‘domain’ within the frame [see Part 2 of this series]

- services to support the ‘vertical’ and ‘horizontal’ paths within the frame

- governance (and perhaps also services) to dissuade following ‘diagonal’ paths within the frame

So this is Part 3 of the series: exploring the architecture of how we link together the various domains of sensemaking and decision-making within the enterprise.

[Two key reminders here: this is ‘work-in-progress’, so expect rough-edges and partly-baked ideas; and although I’ll aim to keep the descriptions as simple as possible, note that all of this is recursive, with many intersecting layers of simple and definitely-not-simple – so please do expect to have to do exploratory-work of your own here too.]

On services to support the ‘horizontal’ and ‘vertical’ transitions:

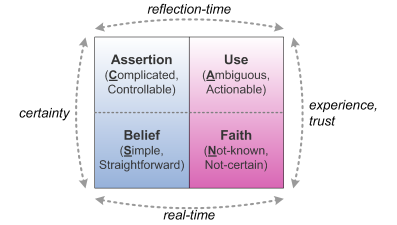

We can summarise this part in terms of the following diagram:

Although sensemaking and decision-making tend to be blurred together within these transitions, there’s usually a clear set of distinctions:

- services that work across the modalities in real-time action

- services that bridge between certainty and uncertainty in planning for action and reflection on action

- services that improve how we apply certainty in action

- services that improve how we work with uncertainty in action

The first two sets of services are primarily ‘horizontal’ across the SCAN frame, linking across the modalities but at a single timescale; the other two sets are primarily ‘vertical’, crossing timescales but on either side of the Inverse-Einstein boundary. There’s obviously enormous scope here, but to keep things simple I’ll stick to a single scenario for each.

For real-time, imagine starting this off with a checklist – a pilot’s pre-take-off check for an aircraft, perhaps.

This gives us a Belief-based structure for decision-making – ‘belief’, because the ‘correct method of working’ is embedded in the sequence of the list. It also gives a Simple true/false method for sensemaking – ‘simple’, because either something checks off against the list, or it doesn’t. After much repetitive practice, using this checklist is ‘second-nature’ to the person doing this work – yet the list is also followed with care and attention.

And because the checklist is followed with care – as ‘the truth’ – the pilot notices that something doesn’t check off correctly. For this example, we’ll assume it’s the radio: there’s no response and no apparent signal from the control-tower.

The moment that we hit something that ‘doesn’t fit’, by definition that throws us across the other side of the SCAN frame, into the Not-known. Notice that for a (very) brief moment, there’s a sense of panic – at which point all the previous training and skill and experience should kick in, together with Faith-based decision-making, to cope with ‘a context larger than that covered by the rules’.

[I’ve deliberately chosen a fairly minor yet everyday example here: an incorrect radio-setting. For a far less everyday example where the same principles and processes apply, moving back-and-forth across the real-time spectrum, see the section ‘Sensemaking in real-time’ in the post ‘On sensemaking in enterprise-architectures [Part 2]’.]

In a fully-structured process, there would be another checklist here, specifically to guide sensemaking and then decision-making around what’s (not) happening with the radio – in other words, a tool to pull this back over to the left-side of the frame again, with Simple / Belief. But if the checklist doesn’t exist, or isn’t found, the sensemaking and decision-making remains over on the Not-known / Faith side of the frame.

It’s a high-risk context, so the pilot can’t afford to ignore the problem, and also can’t ‘go on faith’ – the checklist makes it clear that the radio must be working correctly before take-off can be allowed. So notice what happens next: the sensemaking remains on the unorder side, but drops out of real-time. Everything slows down: the pre-take-off process has to stop whilst the pilot carries out a quick series of experiments – in other words, moving somewhat up into the Ambiguous / Use space.

Most of these are Simple true/false tests (is the radio switched on? is the headset connected? is the frequency-setting correct?), which in principle are rule-based, except that the pilot is creating these tests on the spot, from past experience and knowledge of the equipment, rather than following a (non-existent) checklist. One of these tests shows that the frequency has been set for the destination airport rather than this one. The pilot looks up the correct frequency from a reference-chart – another Simple tool – and then changes the channel – a Belief-based decision.

Going back to the original checklist – in other words, now back in real-time again, over on the left-side of the SCAN frame – the pilot re-checks the radio-call: this time it does confirm correctly. The pilot then completes the pre-take-off checklist without any further Not-known interruptions.

From an architecture perspective, notice two points here.

The first is that real-world sensemaking and decision-making at the point of action will often bounce back and forth between Simple / Belief and Not-known / Faith. Most typical business-processes will start over on the Simple / Belief side of the frame – in other words, ‘follow the plan’; yet anything unique, anything different, anything unexpected that doesn’t fit the predetermined ‘Rules’ will automatically force a transition over to the Not-known / Faith side of the balance. And in most cases, only skill and experience will bring it back over to the Simple side again, to deliver the required result. That’s what skill is, and largely what it’s for.

The second point is that systems which can only work with rules – which in practice includes almost all machines, and most IT-systems – cannot actually cope with that transition into the Not-known. And many if not most real-world contexts do include uncertainties of some kind or other. In such cases – which, again, is most cases – rule-based systems cannot be used to address the whole context: there must be a human skill-based component both to identify when the rule-based system is out of scope, and to take over when it does go out of scope.

The danger here is that IT-systems can sometimes simulate full-context capability from sheer speed applied to a sufficiently large rule-base – which gives the illusion that the system can cope with the full context. The fact is that it probably can’t – that uncertainty again – but if we design on the assumption that it can, we’re going to be in real trouble when (not ‘if’) it fails. The architecture needs to take great care on this point: yet the sad fact is that most current architectures – especially IT-centric ones – don’t take anything like enough care with fallbacks and the like here. You Have Been Warned?
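To make that fallback point concrete, here is a minimal Python sketch based on the radio example above. It assumes a checklist can be modelled as named true/false checks, and it uses hypothetical names (`run_checklist`, `CheckResult`, the `fix_radio` handler and the frequency values), none of which come from any real avionics system. The rule-based part does only what rules can do: it detects that an item doesn’t check off, hands control to a human handler rather than guessing, and then re-checks against the rule before carrying on.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    completed: bool          # True only if every item finally checked off
    handed_off: list[str]    # items the rule-based part could not resolve itself


def run_checklist(
    items: list[tuple[str, Callable[[], bool]]],
    escalate_to_human: Callable[[str], None],
) -> CheckResult:
    """Run Simple true/false checks in their fixed order (the Belief-based method)."""
    handed_off: list[str] = []
    for name, check in items:
        if check():
            continue
        # Something doesn't fit: this is out of scope for the rules,
        # so hand over to skill-based judgement instead of guessing.
        handed_off.append(name)
        escalate_to_human(name)
        # Back to the checklist: the same rule must now pass, or we stop.
        if not check():
            return CheckResult(completed=False, handed_off=handed_off)
    return CheckResult(completed=True, handed_off=handed_off)


# Hypothetical usage: the radio is tuned to the destination airport.
TOWER_FREQUENCY = "local-tower"
state = {"headset_connected": True, "frequency": "destination-tower"}


def fix_radio(item: str) -> None:
    # Stand-in for the pilot's on-the-spot tests and the reference-chart lookup.
    if item == "radio":
        state["frequency"] = TOWER_FREQUENCY


items = [
    ("headset", lambda: state["headset_connected"]),
    ("radio", lambda: state["frequency"] == TOWER_FREQUENCY),
]
result = run_checklist(items, fix_radio)
print(result)  # CheckResult(completed=True, handed_off=['radio'])
```

The design choice worth noticing is that the escalation path is an explicit parameter: if nobody supplies a handler that can actually resolve the problem, the run stops with `completed=False` rather than pretending that the rules cover the whole context.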

For reflection-time – moving back-and-forth across the frame, but at some distance from real-time – what we need are processes that focus on pragmatics and praxis: distilling theory from practice (right-to-left on the SCAN frame), and applying theory to preparation for practice (left-to-right on SCAN) in the unordered-realms.

These are the transitions between what’s described in SCAN as Complicated / Assertion and Ambiguous / Use. What we’re looking for here in the architecture is support at various different timescales – strategic, tactical, operational – for a whole swathe of interactions and trade-offs across the two sides of the frame. As mentioned in the post ‘Decision-making – belief, fact, theory and practice’, some of the keywords we’d look for on each side of that balance would include:

- theory versus experience

- ‘objective’ versus ‘subjective’

- ‘science’ versus technology

- ‘control’ versus trust

- true/false versus fully-modal

- organisation versus enterprise

- structure versus story

- sameness versus difference

- ‘best-practice’ versus (understanding of) ‘worst-practice’

- ‘sense’ versus ‘nonsense’

- certainty versus uncertainty

- rules (‘the letter of the law’) versus principles (‘the spirit of the law’)

For example, this is – or should be – the space of ‘applied science’: the transactions between the assertions of science and the usefulness of technology, each lifting the other to new levels of capability. And we’ll only achieve real effectiveness via a fully-nuanced ‘both/and’ balance across all of these dimensions, and more – which is what the architecture needs to support.

At present, though, most enterprise-architectures and their subsidiary domain-architectures will be hugely skewed towards the left-side of that balance: theory and ideology, ‘objective’, ‘science’, structures, sameness, ‘sense’, rigid rules, near-random re-use of others’ supposed ‘best-practice’, true/false ‘proof’, abstract organisation (rather than human enterprise), and, above all, certainty and predictability. Yet the end-result of such imbalance is an architecture that is all but incapable of coping with either uncertainty or change – and relies instead on a stream of management-fads to give a spurious sense of certainty where none actually exists. Which is not a good idea, especially in the increasing uncertainties of most present-day business contexts. We need that balance…

The simplest way to work towards a better balance is to ask, for each item that seems to fit in either the Complicated / Assertion domain or the Ambiguous / Use domain:

- what is its counterpart in the opposite sensemaking or decision-making domain on the other side of the frame?

- what processes link these two items together, such that each can learn from and support the other?

- how do these processes vary at different distances from the point of action?

- how do these processes vary for different skill-levels or for use with different real-time process-implementations?

(We’ll come back to that last question shortly.)

So, for example, Complicated-domain analytic, algorithmic hard-systems theory has its Ambiguous-domain counterpart in experimental, emergent soft-systems theory: in what ways do these link together? How do they support each other, inform each other, conflict with each other, enhance each other? How do we identify (make sense of) which approach would apply better to any given context? What are the trade-offs that would guide such decisions?

[For some great examples of how this kind of interaction works in scientific research, see WIB Beveridge’s 1950 classic The Art of Scientific Investigation.]

Using those tests and guidelines, work your way across all aspects of the architectures, to identify gaps and imbalances across the SCAN domains.

For improvement of real-time action, the processes would, in principle, be partitioned across either side of the Inverse-Einstein test: those processes that focus on ensuring that the same actions lead to the same results, versus those processes that focus more on skills-development, such that we can achieve the required variation in similar contexts or the required ‘sameness’ in different contexts. In very quick summary:

- improvement on the left-side (‘order’) will focus primarily on efficiency (typically described in quantitative terms, and often regarded as synonymous with effectiveness)

- improvement on the right-side (‘unorder’) will focus more on broad-spectrum effectiveness (with an emphasis on qualitative factors and human-concerns)

That order-versus-unorder partitioning is valid in itself – the Simple true/false methods used by machines and IT-systems, versus the full modality of methods available within skills-work. Yet in itself it’s also too simple – or rather, too simplistic: we need the framework to give guidance on skill itself.

This is where we come back to that question about reflection-processes that vary according to skill-levels. In essence, it’s not really a skill unless there’s some inherent-uncertainty involved in the context: before that, all the way over onto the Simple side of the spectrum, everything is literally mechanical, rule-based.

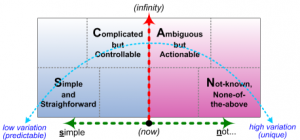

For this, we can turn to a cross-map of the SCAN frame with a spectrum of variability or predictability – shown as the blue curve in the diagram below:

The diagram is perhaps slightly misleading here, because the impact of variability doesn’t come out well enough: the blue line is itself another kind of continuous spectrum, rather than the Simple true/false implied by the colour-shading here.

[Part of the reason is that I don’t yet know how to do multi-layer multi-colour graded-shading in Visio: accept it as it is for now, if you would?]

What is relevant here is the way in which skills-development follows the same effective path of increasing variability – including that increased distance-from-action in the middle of that curve.

What we actually have in skills is not so much a Simple ‘either/or’ – Simple or Not-simple, order or unorder, as implied on the diagram – but more a ‘both/and’ mix of order and unorder. Higher levels of skill also imply or require the ability to cope with higher levels of modality, variability and unorder. We can split this in terms of five distinct skill-levels:

- Robot: no skill as such – Simple rule-following only

- Trainee: low level of skill – mostly Simple / Belief, aware only of ‘here and now’, requires active supervision to cope with variability

- Apprentice: some level of skill, still primarily order-based but able to manage more Complicated / Assertion contexts with broader factors and feedback / feedforward loops, with some active supervision

- Journeyman: significant skill, able to cope with higher levels of Ambiguity and context-dependent Use, with supervision mainly in the form of mentoring

- Master: high skill, able to cope with inherent-uniqueness, balance of ‘big-picture’ with ‘here and now’, and ‘supervision’ only in the form of peer-review
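One way to make ‘higher skill copes with higher variability’ usable in an architecture model is sketched below in Python. The 0-to-1 variability scale and the threshold for each level are purely illustrative assumptions, not values from SCAN or from this post; only the ordering of the levels and the forms of supervision come from the list above.

```python
from enum import IntEnum


class SkillLevel(IntEnum):
    ROBOT = 0
    TRAINEE = 1
    APPRENTICE = 2
    JOURNEYMAN = 3
    MASTER = 4


# Illustrative assumption: the highest variability each level can cope with,
# on a 0.0 (fully predictable) to 1.0 (inherently unique) scale.
MAX_VARIABILITY = {
    SkillLevel.ROBOT: 0.0,
    SkillLevel.TRAINEE: 0.2,
    SkillLevel.APPRENTICE: 0.4,
    SkillLevel.JOURNEYMAN: 0.7,
    SkillLevel.MASTER: 1.0,
}

# Form of supervision at each level, as described in this post.
SUPERVISION = {
    SkillLevel.ROBOT: "rules redefined by an external 'expert'",
    SkillLevel.TRAINEE: "active supervision",
    SkillLevel.APPRENTICE: "some active supervision",
    SkillLevel.JOURNEYMAN: "mentoring",
    SkillLevel.MASTER: "peer-review",
}


def minimum_level(variability: float) -> SkillLevel:
    """Return the lowest skill-level assumed able to cope with this variability."""
    if not 0.0 <= variability <= 1.0:
        raise ValueError("variability must be between 0.0 and 1.0")
    for level in SkillLevel:  # enum members iterate in definition order
        if variability <= MAX_VARIABILITY[level]:
            return level
    return SkillLevel.MASTER  # not reached, since MASTER covers 1.0


for v in (0.0, 0.3, 0.9):
    level = minimum_level(v)
    print(v, level.name, "-", SUPERVISION[level])
```

A check like `minimum_level` could flag mismatches such as a fully-automated (Robot) process assigned to a context known to be highly variable, which is exactly the kind of gap the fallback warning earlier in this post is about.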

So when we look at the ‘vertical’ improvement-processes implied by the SCAN frame, we tend to find that they work best when they act on specific mixes of order and unorder, sameness and uniqueness – in other words, in alignment with these skill-levels.

We can also see the classic ISO-9000 quality-system derivation-sequence at work here, between each of those steps:

- work-instruction: context-dependent rules used by Robot and initial Trainee – emphasis on What and How

- procedure (basis for new work-instruction): used by Apprentice and above, defined by Journeyman and above – emphasis on Who, Where and When

- policy (basis for new procedure): used by Journeyman and above, defined by Master – emphasis on Why

- unchanging-vision (permanent-anchor for quality-system, used as basis and cross-check for new policy): used by Master, defined by Master in peer-review – the ‘Because.’ behind the Why
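That derivation-sequence can also be modelled as a chain in which each document must be derived from the kind directly above it, with the vision as the final anchor and cross-check. The Python sketch below assumes exactly that rule; the class name, field names and example titles are hypothetical, and it is not drawn from the ISO 9000 standards themselves.

```python
from dataclasses import dataclass
from typing import Optional

# Each kind of document may only be derived from the kind directly above it.
PARENT_KIND = {
    "work-instruction": "procedure",
    "procedure": "policy",
    "policy": "vision",
    "vision": None,  # the permanent anchor: derived from nothing else
}


@dataclass
class QualityDoc:
    kind: str                              # one of the keys in PARENT_KIND
    title: str
    emphasis: str                          # the question this document answers
    basis: Optional["QualityDoc"] = None   # the document it was derived from


def trace_to_vision(doc: QualityDoc) -> list[str]:
    """Follow the derivation chain upward, failing if any link is missing or wrong."""
    chain: list[str] = []
    current: Optional[QualityDoc] = doc
    while current is not None:
        expected = PARENT_KIND[current.kind]
        parent = current.basis
        if expected is None and parent is not None:
            raise ValueError(f"vision '{current.title}' must not be derived from anything")
        if expected is not None and (parent is None or parent.kind != expected):
            raise ValueError(f"{current.kind} '{current.title}' is not derived from a {expected}")
        chain.append(f"{current.kind}: {current.title} [{current.emphasis}]")
        current = parent
    return chain


# Hypothetical example documents.
vision = QualityDoc("vision", "Every flight safe", "Because")
policy = QualityDoc("policy", "No take-off without confirmed comms", "Why", vision)
procedure = QualityDoc("procedure", "Pre-take-off radio check", "Who, Where, When", policy)
instruction = QualityDoc("work-instruction", "Set and confirm tower frequency", "What, How", procedure)

for step in trace_to_vision(instruction):
    print(step)
```

An orphaned work-instruction, one with no procedure behind it, raises `ValueError`: in the terms used above, it has no Why and no ‘Because.’ to cross-check against.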

There are many, many types of review / improvement-processes – PDCA (Plan, Do, Check, Act), for example, or AAR (After Action Review) or OODA (Observe, Orient, Decide, Act). Yet almost all of them have this ‘vertical’ character, to link:

- from real-time action – where there’s no time to think

- to distance-from-action – which creates thinking-space and review-space, to enable improvement

- then back to real-time again – to apply that improvement in real-world practice

There’s usually a slight sideways-move in there somewhere – because wherever practicable the aim should be to enhance those skill-levels, not leave them solely as they are. But what we don’t want are ‘diagonal’ moves that try to link one type of order / unorder mix at ‘thinking-time’ with a very different mix at real-time – because it all but guarantees failure in practice. We’ll explore that point in more detail in the next part in this series: for now, we’ll focus more on the ‘verticals’.
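The basic ‘vertical’ shape (act without thinking, review at a distance, return with improved rules) can be sketched in a few lines of Python. This is a deliberately simplified illustration under stated assumptions, not an implementation of PDCA, AAR or OODA: rules are a hypothetical lookup from situation to response, anything not covered is improvised and logged, and the review only turns recurring improvisations into new rules. The `min_occurrences` threshold is an arbitrary assumption; one-off events are left to skill.

```python
from collections import Counter


def act(rules: dict[str, str], situation: str, log: list[str]) -> str:
    """Real-time action: no time to think, so follow a rule if one exists."""
    if situation in rules:
        return rules[situation]
    # Not-known: improvise (a stand-in for skilled judgement) and note it for later.
    log.append(situation)
    return f"improvise: {situation}"


def review(rules: dict[str, str], log: list[str], min_occurrences: int = 2) -> dict[str, str]:
    """Distance-from-action: distil recurring improvisations into new rules."""
    revised = dict(rules)
    for situation, count in Counter(log).items():
        if count >= min_occurrences:
            revised[situation] = f"procedure for {situation}"
    return revised


# Hypothetical shift of real-time work.
rules = {"normal": "proceed"}
log: list[str] = []
for situation in ["normal", "crosswind", "normal", "crosswind", "bird-strike"]:
    act(rules, situation, log)

rules = review(rules, log)             # thinking-space, away from the action
print(act(rules, "crosswind", []))     # back in real time, now rule-based
print(act(rules, "bird-strike", []))   # one-off: still needs improvisation
```

The threshold is the design choice that matters here: set it too low and every unique event gets frozen into a rule, which is exactly the over-emphasis on order that this post warns against.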

We can again summarise these processes in terms of those five distinct skill-levels:

— Robot: Simple / Belief only (typically machines or real-time IT-systems) – aim is to optimise efficiency within a specific defined context

This is the classic realm of Taylorist time-and-motion study, of Six Sigma and suchlike: if we assume that everything in the work-context remains the same, what can we do to improve the efficiency of that ‘sameness’?

The crucial point here is that the Robot can only follow the rules that it’s given: it can’t change anything by itself – or even adapt to any significant change in its context. The Robot must rely on an external ‘expert’ to redefine its rules whenever the context undergoes any significant change, yet the ‘expert’ does not have to deal with real-world consequences: a fact which, if misused, can lead to a dangerous co-dependent relationship between Robot and ‘expert’, based on mutual evasion of responsibility – something that we see far too often as an outcome of dysfunctional blame-based management-structures.

— Trainee: Simple / Belief <-> Complicated / Assertion – aim is to develop ‘rule-following’ efficiency and to develop awareness of the ‘larger picture’, to place own work in context, and to begin to cope with variability

We typically see two types of review-processes here. One type concentrates on practice – embodying ‘the rules’ through constant repetition, mainly focussed on method, on the ‘what’ of those rules as applied to real-time action. The other type, typified by the US Army’s ‘After Action Review’, begins a focus on enhancing personal ‘response-ability’ – a concern that will continue all the way through the skills-development sequence.

— Apprentice: Complicated / Assertion <-> Simple / Belief (with some bridge over to Ambiguous, e.g. via experimentation) – aim is to develop ability to use formal-theory to redefine own rules as the context changes

This is the classic realm of formal education, with an emphasis on theory and on the mechanics of the skill, the ‘how’ behind its processes and methods. If anything, though, the focus here is even more on ‘order’ than at the Trainee level, defining rules as ‘objective truth’ to be applied by others in real-time action. The main contextual-shift is a developing awareness of more and more Complication in those ‘rules’ – a layering nicely described by Jack Cohen and Ian Stewart as an increasing sophistication of “lies-to-children” – in which additional factors, interaction-loops and delay-impacts are added to the rule-definitions. One of the hardest parts of this stage is re-simplifying these ever-more-complicated algorithms and ‘rule-sets’ down to a form that can be used in real-time action…

— Journeyman: Ambiguous / Use <-> Not-known / Faith (with some bridge over to Complicated, e.g. as ‘applied science’) – aim is to enhance ability to work with increasing levels of variation and near-uniqueness, such as by applying patterns and guidelines

This is typified by the crucial shift in awareness that theory alone is not enough: in the real world, ‘truth’ is often highly contextual. This is the realm of ‘real’ complexity, of emergence, of iterative exploration and experimentation, and also a more explicit acknowledgement of the inherent unorder that underlies wicked-problems and the like. It’s also a realm of probability and improbability – hence a strong focus on concerns such as the uncertainties of statistics, on kurtosis-risks, long-tail opportunities, and so on.

[Note the danger of failing to understand the probabilistic nature of statistics – that they always embed and embody some degree of unorder and uncertainty. Statistics as a discipline has its rules, but they’re not the same order-based rules as in the Complicated domain: for example, it’s true that chaos-mathematics can enable us to be very precise about the degree of uncertainty in a context – but it does not remove the uncertainty itself. Another important ‘You Have Been Warned’ that we need to pass on to our architecture-clients?]
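As a small illustration of that warning, using ordinary sampling statistics rather than chaos-mathematics, the Python sketch below estimates a hypothetical failure-rate from simulated trials. More data makes us more precise about the uncertainty (the standard error shrinks), yet that precision says nothing about whether the next individual trial will fail. The 10% rate, the trial counts and the random seeds are arbitrary assumptions.

```python
import math
import random


def estimate_failure_rate(trials: int, p_true: float, seed: int = 1) -> tuple[float, float]:
    """Return the observed failure proportion and its standard error."""
    rng = random.Random(seed)
    failures = sum(rng.random() < p_true for _ in range(trials))
    p_hat = failures / trials
    # Standard error of a proportion: it shrinks roughly as 1 / sqrt(trials).
    std_error = math.sqrt(p_hat * (1 - p_hat) / trials)
    return p_hat, std_error


for n in (1_000, 100_000):
    p_hat, se = estimate_failure_rate(n, p_true=0.1)
    # 1.96 standard errors gives an approximate 95% interval.
    print(f"{n:>7} trials: rate ~ {p_hat:.3f} +/- {1.96 * se:.3f}")

# However precise the rate, any single next trial is still simply fail or not.
next_trial_fails = random.Random(42).random() < 0.1
print("next trial fails:", next_trial_fails)
```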

There would also be a stronger emphasis here on guidelines and patterns, and on what we might describe as the approaches to each skill – the unorder of the ‘other mechanics’ of the skill, such as in the psychological and emotional drivers, and in ergonomics and individual difference. Continuing and expanding the theme of the After Action Review, this is the realm of responsibility-oriented continuous-improvement processes such as PDCA and kaizen, of simulators and ‘sandboxes’ and other ‘safe-fail’ learning-spaces, and also of context-exploration tools such as the skills-labyrinth.

— Master: Not-known / Faith <-> Ambiguous / Use – aim is to enhance effectiveness, being able to work with any level of variability and uniqueness at real-time, in line with overall vision and values

It’s at this level that we return to real-time practice, but this time aiming to be able to work with unorder, rather than fight against it (or even pretend that it doesn’t exist…), as in the rule-based assumptions of the Robot space. Here there’ll be a strong emphasis on enhancing capability for improvisation, and for coping with inherent uncertainty, such as with innovation and with Black Swans and other opportunities and risks at the extreme end of unorder. For skills, this would also bring together the previous themes in active acknowledgement that method = mechanics + approaches – hence true skills are both same and different for everyone at every time. On a practical level, there’s also a strong emphasis on the use of principles, vision and values to provide a stable anchor for guidance amidst inherent-uncertainty.

[Notice that, again, all of the above sequence is recursive: we may well be at Master level in some skill-domain, but barely at Trainee-level in another – a fact that can at times be somewhat challenging… 🙂 ]

Implications for enterprise-architecture

For enterprise-architects, there’s a lot to review here, because all of those items need to be in place if the overall architecture is to work well for the organisation and enterprise:

- services that bridge across the modalities of certainty and uncertainty in real-time action

- services that bridge between certainty and uncertainty in planning for action and reflection on action

- services that improve how we apply certainty in action, how we work with uncertainty in action, and the skills of each person to work with these

We’ll need to identify each of these items, for each of the respective ‘horizontal’ and ‘vertical’ contexts; and wherever there are gaps in the needed support, identify what needs to be done to create and embed the respective items.

We also need to be aware of and act on some really nasty booby-traps that, if we’re not careful, can damage or even destroy the entire enterprise. Dysfunctional management-structures and misapplied Taylorist ideas are well-known examples of these: the real problem there is that the illusion of ‘control’ is so comforting to so many that these muddle-headed mistakes keep on coming back to bite us time and time again, like the proverbial ‘bad penny’.

Another serious danger that’s a bit more subtle can arise from those seemingly-relentless demands to do more and more, faster and faster. Part of this is that the sheer pressure to produce can cause a disconnect between strategy and tactics and even between tactics and operations: when everything has to happen now, there’s no time to think about what’s being done, or why. Not a good idea…

But a corollary of that is that if there’s no time to think, there’s also no time to develop skills – a point which again is made clear in that cross-map between SCAN and the variability-curve above. All too often we’ll come across an organisation that in essence consists of Masters and Robots (such as machines or IT-systems, or ‘crowdsource’ structures such as Mechanical Turk, which in effect treat real people as Robots), with nothing in between – perhaps a few Trainees to do the grunt-work, but that’s about it.

There’s little question that this can be highly profitable in the short term. Yet it’s a model that, almost by definition, cannot and does not scale – hence the constant complaints we see about ‘skills shortages’ and the like – and it’s also why so many startups seem to crash-and-burn so soon after their first flush of sweet success. And if there’s no means within the organisation’s architecture to develop those skills, there’s also no way to learn the contextual information needed to create the next generation of Masters – see the post ‘Where have all the good skills gone?’. Ignoring the skills-development issues may seem profitable at first, but it’s actually a guaranteed path to commercial suicide. Once again, You Have Been Warned?

Anyway, enough for now: more on this and other related themes in the final post in the series.

Any comments or questions so far, anyone?

A big part of the answer on “How is it that what we actually do in the heat of the action can differ so much from the intentions and decisions we set beforehand?” can be found in this amazing book: The Procrastination Equation by Piers Steel http://procrastinus.com/review/

To act impulsively and to value lower short-term profit more than higher long-term benefits seems to be part of our genes. So enterprise architects must find a way to trick the decision makers so that they will take impulsive short term decisions which are in line with the long term interests of the total enterprise.

Enterprise architecture models are too much focused on things and will always be severely flawed because they don’t acknowledge the importance of the behavior & culture of enterprises/organizations/teams/people.

Hi Peter – thanks for the comment, and for the pointer to ‘The Procrastination Equation’ – a book I must evidently read Real Soon Now… 🙁 🙂

“So enterprise architects must find a way to trick the decision makers so that they will take impulsive short term decisions which are in line with the long term interests of the total enterprise.” – yes, exactly. I’ve long worked with various themes around ‘how to trick people into being powerful’, or ‘stealth-futures’ and the like – what you’ve described there is essentially another variant on that same theme.

“Enterprise architecture models are too much focused on things” etc – again, strongly agreed: that’s one of the key reasons I’m shifting my attention and work much more towards ‘enterprise-architecture as story’. Will keep in touch with you on this, if I may?