Decision-making – belief, fact, theory and practice

In what ways do ideology and experience inform decision-making in real-time practice? How do we bridge between the intentions we make before and after action, with the decisions we make at the point of action itself? And what implications does this have for our enterprise-architectures?

This extends the previous post on real-time decision-making, ‘Belief and faith at the point of action’, to crosslink with the earlier ideas on SCAN and sensemaking, and especially about where there is more time available to review and reflect on action.

[A gentle warning and polite request: much of this is still ‘work in progress’, so do beware the rough edges and knobbly bits, and use it with some caution; and whilst I do need critique on this, please don’t be too quick to kick down the scaffolding that’s holding it all together. Fair enough?]

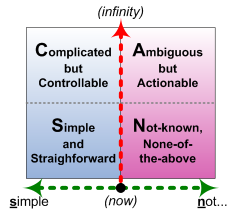

The previous post was about how options for sensemaking become more constrained as we approach real-time. Right at the point of action, the options reduce to either a Simple interpretation in terms of true/false categories, or a Not-simple interpretation based on a modal-logic of possibility and necessity, which is much harder to explain or even to describe to anyone else. In SCAN we’d depict that compression as follows:

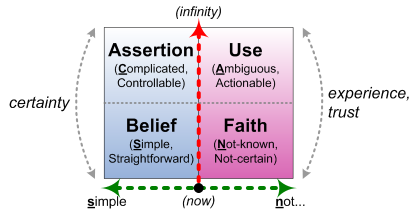

In much the same way, decision-making becomes compressed down to Simple belief versus Not-simple faith – neither of which is actually explainable, and both of which, at the root, are primarily emotional rather than ‘rational’.

In both sensemaking and decision-making, the crucial distinction – indicated in SCAN by where the red-line time-axis crosses the green-line axis of decision-modality – is what I’ve termed the ‘Inverse Einstein test’. Einstein is said to have asserted that “insanity is doing the same thing and expecting different results”: but whilst that’s true in a simple rule-based world, it’s not true – or not necessarily true, anyway – in a more complex world where many things are context-specific or even inherently unique.

So our ‘horizontal’ test is this: if doing the same thing leads to the same results – or is believed to lead to the same results – then it’s a Simple decision; if doing the same thing leads to different results, or if we need to do different things to get the same results, it’s Not-simple.

[Yes, I do know that that’s a Simple true/false distinction across a spectrum that in reality is fully modal. If you want to apply the appropriate recursion here, please feel free to do so: I thought it wisest here to keep it as simple as possible, because this can get complicated real fast, and unless we’re careful to keep the complexities at bay we could end up with a right old chaos of confusion. Which is, yes, yet another recursion… Hence best to keep it simple for now, as best we can, acknowledge that much of it isn’t Simple, and allow the recursions to come back in later when there’s a bit more space to work with it.]
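The test can be sketched in code, with the same caveat as above: this is a deliberately Simple true/false rendering of something that is really modal. The function name, the `(action, result)` pair format and the reading of "need to do different things" as "more than one action was seen to produce the same result" are all assumptions made for illustration, not part of SCAN itself.

```python
from collections import defaultdict

def inverse_einstein_test(observations):
    """Classify a history of (action, result) pairs as 'Simple' or 'Not-simple'.

    Hypothetical sketch: 'Simple' means the same action always gave the same
    result, and no result needed more than one kind of action to reach it.
    """
    results_by_action = defaultdict(set)
    actions_by_result = defaultdict(set)
    for action, result in observations:
        results_by_action[action].add(result)
        actions_by_result[result].add(action)

    # Doing the same thing led to different results.
    same_action_differs = any(len(r) > 1 for r in results_by_action.values())
    # Different things were done to get the same result (assumed reading).
    different_actions_same_result = any(
        len(a) > 1 for a in actions_by_result.values()
    )

    if same_action_differs or different_actions_same_result:
        return "Not-simple"
    return "Simple"
```

Note that the function can only report what is *believed* from the observations it is given: a short history may look Simple until a contrary case turns up.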

The crucial point about real-time is that there’s no time available for a distinct sensemaking-stage: decision links directly to action, and vice-versa. (That’s why it’s called ‘decision’: the same linguistic roots as ‘incision’, it’s literally ‘cutting away’, ‘cutting apart’, the cutting-edge for action in the ‘now’.)

For sensemaking to take place, there must be a gap in time between one decision and the next. The key to John Boyd’s ‘Observe, Orient, Decide, Act’ (OODA) loop – which, importantly, is also not a loop as such – is that it still allows distinct sensemaking (‘Orientation’) to take place, but keeps it as close to real-time as possible: that’s what’s meant by ‘getting inside the opponent’s OODA loop’.

As time-available – the red-line ‘vertical’-axis in SCAN – extends outward either side of real-time, the OODA-‘loop’ can become recursive, and thence, given enough time, simplified-out to a Deming-style ‘Plan, Do, Check, Act’ (PDCA) continuous-review cycle, such as is also implied in the US Army’s ‘After Action Review’:

- “What was supposed to happen?” – what was our Plan?

- “What actually happened?” – what did we Do?

- “What was the source of the difference?” – what do we need to Check?

- “What do we need to do different next time?” – about what do we need to Act?

As I’ve described in other posts, sensemaking-choices tend to split as described in SCAN: there’s a ‘bump’ on the path, indicated by the jump between simple true/false logic versus fully-modal logics of ‘possibility and necessity’ on the ‘horizontal’ axis, contrasted with a much smoother spectrum of choices as available-time extends in the ‘vertical’-axis. Although the ‘vertical’ boundaries are less clear-cut than the ‘horizontal’ ones, this gives us the four SCAN quadrants – Simple, Complicated, Ambiguous, Not-Known:

Those distinctions determine the appropriate tactics for sensemaking, as described in those earlier posts.

Decision-making seems to follow a similar, closely-related pattern – though that’s the part I’m having trouble pinning down right now.

[Boyd’s OODA is in part another attempt to pin down the same relationships; likewise Snowden’s Cynefin, if rather less so. Jung’s frame of ‘psychological types’ is probably a closer fit than Cynefin for this: I’ve used a generic decision-types adaptation of it for some decades now, though it’s still not quite right. Hence this exploration here.]

So again, it’s ‘work-in-progress’, but this is where I’ve come to at present:

It’s a decision-making frame based on the same horizontal (decision-modality) and vertical (time-available) axes as in SCAN, and hence the same sort-of-quadrants but with a decision-oriented re-labelling: Belief (Simple), Assertion (Complicated), Use (Ambiguous) and Faith (Not-known).
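As a minimal sketch of that re-labelling, the two axes can be treated as a lookup from (decision-modality, time-available) to a pair of labels. Two simplifications are assumed here: the vertical axis, which the text describes as a smooth spectrum, is reduced to a yes/no `time_to_reflect` flag, and the names `Modality`, `Quadrant` and `quadrant` are hypothetical.

```python
from enum import Enum
from typing import NamedTuple

class Modality(Enum):
    TRUE_FALSE = "true/false"             # left of the Inverse-Einstein test
    MODAL = "possibility and necessity"   # right of the Inverse-Einstein test

class Quadrant(NamedTuple):
    sensemaking: str   # SCAN label
    decision: str      # decision-oriented label

# Assumption: time-available collapsed to a boolean for illustration only.
_QUADRANTS = {
    (Modality.TRUE_FALSE, False): Quadrant("Simple", "Belief"),
    (Modality.TRUE_FALSE, True): Quadrant("Complicated", "Assertion"),
    (Modality.MODAL, True): Quadrant("Ambiguous", "Use"),
    (Modality.MODAL, False): Quadrant("Not-known", "Faith"),
}

def quadrant(modality: Modality, time_to_reflect: bool) -> Quadrant:
    """Return the SCAN quadrant and its decision-making counterpart."""
    return _QUADRANTS[(modality, time_to_reflect)]
```

For example, `quadrant(Modality.MODAL, False)` gives the real-time, Not-simple case: Not-known in sensemaking terms, Faith in decision-making terms.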

On the left-side of the Inverse-Einstein test, the mechanism that links Assertion and Belief is a drive for certainty, for ‘control’. On the right-side, linking Use or ‘usefulness’ with the real-time openness of Faith, is more a focus on experience, underpinned by a deeper kind of trust – a trust which is often conspicuously absent in any concept of ‘control’.

[For this post I’ll focus more on what happens across the horizontal-axis, the relationships between theory and practice, or ‘truth’ versus ‘usefulness’. I’ll explore more closely the interactions along the vertical-axis – between what we plan to do versus what we actually do – in a following post.]

In terms of decision-making tactics:

- on the left-side, theory takes precedence over practice – or, in some contexts, ideology rules, which is much the same

- on the right-side, practice takes precedence over theory

In essence, this is CP Snow’s classic ‘The Two Cultures’, the sciences (left-side) and the arts (right-side). Notice, though, that technology sits on the right, not the left: it uses theory, but that isn’t its actual base – hence the very real dangers in the often-misleading term ‘applied science’.

Bridging the gap, from left to right, is praxis, “the process by which a theory, lesson, or skill is enacted, practised, embodied, or realized”; and from right to left, is pragmatics, “a process where theory is extracted from practice”. As enterprise-architects would be all too aware, the latter always starts from pragma, from “what is expedient rather than technically ideal”: and it usually includes the joys of ‘realpolitik’, of carefully filtering reality to fit in with other people’s prepackaged assumptions…

That boundary denoted by the Inverse Einstein Test is all too real: whether the beliefs in question are ‘scientific’, religious, political or whatever, the ‘need’ for certainty will often trigger huge resistance against anything that doesn’t fit its assumptions. For example, there’s a very close mapping between this frame and the classic scientific-discovery sequence of idea > hypothesis > theory > law, which align with Faith, Use, Assertion and Belief respectively.

In real scientific practice, it’s not a linear sequence: there’s a lot of back-and-forth between each of the steps. And in principle, it should be a continuous-improvement cycle, a broader-scope form of PDCA. But as Thomas Kuhn and many others have documented, that same ‘need’ for certainty often places a near-absolute barrier between supposed ‘scientific law’ and any new ideas – in other words, between Belief and Faith – that brings that cycle to a sudden halt, sometimes for years, decades or even centuries. All too often, in practice, if we take the real-time ‘short-cut’ from Belief to Faith, we will be forcibly forbidden to return along the same path: instead, we’re forced to go ‘the long way round’, via Use and Assertion (hypothesis and theory) – which we may not have time to do. Which is a very real problem. And one that applies as much in enterprise-architecture as in any other field – as we’ve seen with the inane IT-centrism that has dominated the discipline for far too long.

It gets complicated…

What I’ve been seeing, as I’ve explored this frame, is a whole stream of often-subtle misunderstandings and ‘gotchas’ that I’ve noticed time and again in practice in enterprise-architecture and elsewhere. These seem to be where many unnecessary complications and confusions arise – so it’s worth noting them here.

For example, fact arises from experience: its basis is on the right-side of this frame – not the left. What’s on the left-side often purports to be fact: yet it’s not fact as such, but interpretation of fact – a very important difference. The left-side operates on information, an interpretation of raw-data – but it often has no means to identify the source or validity of that information, or its method of interpreting it. (This is the same inherent problem whereby a logic is incapable of assessing the validity of its own assumptions: by definition, it must call on something outside of itself to test those premises.) So on the left-side, there’s actually no difference between ‘real’ and ‘imaginary’ – which can lead to all manner of unpleasant problems if the left-side is allowed to over-dominate in any real-world context…

Importantly, there’s no real difference here between ‘objective’ versus ‘subjective’: that distinction is actually another dimension that’s somewhat orthogonal to this plane. What I feel, or sense, is subjective, but it’s still a fact; whereas how I interpret that feeling or sensation is not a fact – it’s an interpretation. Telling someone that they should or shouldn’t feel something is just plain daft: the feeling itself is a fact – something about which we don’t actually have any choice – whereas the ‘should’ is an interpretation arbitrarily imposed by someone else.

[What we do in response to a feeling is a choice – literally, a ‘response-ability’ – and is something that can be guided by ‘shoulds’ and the like: but not the feelings themselves. That’s a very important distinction which, sadly, surprisingly few people seem to understand…]

There is a specific sense in which subjective versus objective aligns somewhat with the ‘less-time’ versus ‘more-time’ on the SCAN vertical-axis. More-time means more time available for experimentation and analysis – and that can allow us to identify what’s shared (‘objective fact’) across many people’s experience, versus experiences that are more specific and personal (‘subjective fact’).

But there seems instead to be a tendency to conflate the objective/subjective distinction with the SCAN horizontal-axis – objective-fact as ‘truth’ on the left-side, subjective-fact as ‘not-truth’ on the right-side. There are ways in which that conflation can work – it’s at the core of the Jungian frame, for example – but we need to be careful about it. Using that conflation to dismiss all subjective-fact as ‘irrelevant’ – as the classic ‘command and control’ models would do – not only makes no sense at all, but is extremely unwise in real-world practice…

There are also several other key distinctions across either side of the Inverse-Einstein test:

— ‘science’ versus technology, which also parallels ideology versus practice: on the left-side, there’s an assertion that something is ‘true’, whereas on the right-side we proceed as-if it’s true – which is not the same at all.

— organisation versus enterprise: the nature of an organisation is that it’s about left-side themes such as control, beliefs, repeatability and certainty; the nature of an enterprise is that it’s not certain, “a risky venture” and suchlike – with all that that implies.

— structure versus story: most structures within current enterprise architectures will, again, have a left-side focus on providing repeatability and certainty; story and other forms of narrative-knowledge provide an alternate kind of ‘structure’ that holds many of the right-side themes together

— sameness versus uniqueness: another key enterprise-architecture theme, sameness and repeatability is very much a left-side theme, whereas uniqueness is just as much a right-side theme

— ‘best-practice’ versus ‘worst-practice’: the notion of ‘best-practice’ assumes that practice that worked well in one context will be directly applicable to another, the same success repeatable in another; by contrast, maintenance engineers and others who work extensively with unique or near-unique contexts share their learning more through ‘worst-practice’, stories of what didn’t work in a given context. (I think I first heard that one from Dave Snowden? – credit where credit’s due, anyway.)

The trade-offs across each of these dichotomies all have direct implications for the design and structure of any enterprise-architecture.

Implications for enterprise-architecture

Take a look at those dichotomies again: which side do you think is emphasised by current enterprise-architectures?

The obvious answer is that, almost invariably, the left-side is given priority over the right.

However, this has huge consequences for the effectiveness of the overall enterprise, and for the enterprise-architecture that describes it:

- interpretation takes priority over fact: never a good idea…

- theory and ideology take priority over practice and experience: that’s almost a definition of (misused) Taylorism…

- the need for (spurious) ‘certainty’ and ‘control’ takes priority over trust of anything or anyone: ditto on Taylorism…

- the reliance on true/false decision-methods can render the organisation unable to cope with any form of uniqueness

- the need to force-fit everything into sameness of content – ‘best practice’, IT-centric BPR and the like – fails to grasp the differences of context

- the over-focus on organisation – ‘the letter of the law’ – literally kills off the spirit of enterprise…

Look at most of our existing EA toolsets, too: can you find any toolset that’s actively designed around anything other than true/false logic? Other than in rare model-types such as ORM (Object-Role Modelling), there’s no means to describe modality in relationships – hence, for example, no directly-supported way to describe a usable reference-model that allows for real-world ifs, buts and perhapses.

And whilst every toolset focusses on structure – and most do that very well, too – how many of those toolsets also help us to focus on the counterpart of story? They might support a few use-cases, perhaps, but that’s about it: there’s a huge gap in capability there…

What we need, urgently, is a better balance between structure and story, between theory and practice, between organisation and enterprise. And without adequate support in the toolsets, that means that we have to create that balance ourselves.

The crucial point is that this balance is not an ‘either/or’, but a much more modal ‘both/and’:

- theory and experience

- ‘objective’ and ‘subjective’

- ‘science’ and technology

- certainty and trust

- true/false and fully-modal

- organisation and enterprise

- structure and story

- sameness and difference

- ‘sense’ and ‘nonsense’

- certainty and uncertainty

We will only achieve a real effectiveness in the architecture via a fully-nuanced ‘both/and’ balance across all of these dimensions, and more.

So take a careful look at your own organisation, your own enterprise-architectures and the like: where is it out of balance, in this sense? In SCAN terms, how much does it over-emphasise the left-side at the expense of the right-side? And what can (and must) you do to bring it back into a better balance overall?

Comments/suggestions/experiences on this, anyone?

Tom. After letting this sink in and making due allowance for its unfinished nature, what keeps coming back to me is the following.

In discussions about degrees of complexity (whether that gets plotted according to Cynefin or using SCAN), I have always looked at a problem domain/solution domain relationship. The problem domain is more or less complex and the solution domain can be that too. One normally tries to break down complexity in the problem domain into a set of manageable (simple) pieces. We may then reassemble them to create a meaningful solution. We define solutions which are as simple as possible (but no more so).

What you seem to have done is to introduce a third dimension, where we are actually considering the desirability of simplicity/complexity. This comes up in your coincidensity piece too. What you are saying, if I understand you correctly, is that a simple (and therefore typically rules driven) solution may be possible but is not necessarily the correct response to any challenge out of the problem domain (regardless of whether that itself is simple or complicated). And by the same token sometimes it’s absolutely the right response.

I’m not phrasing this quite right. The way I just explained it means that there isn’t a third dimension but that your new factor is just an additional (albeit very new) way of assessing a solution. Maybe it’s clearer if I constrain the solution domain to be how we implement the behavior we’ve decided upon in that third dimension.

I realize this is not central to your argumentation above but I think it’s implicit (probably you didn’t stress it because it was obvious to you) and for me at least is the most significant aspect of the whole. I hope that doesn’t disappoint you!

Stuart – thanks, and I hope I have this correctly – if not, yell? 🙂

“I have always looked at a problem domain/solution domain relation”: this was the core of what I was aiming for with context-space mapping, if you look back a year or two in this blog. SCAN was intended to be a simplified entry-level version of context-space mapping. In a way, sensemaking versus decision-making is problem-space (sensemaking) versus solution-space (decision-making) – though there’s also this other axis in decision-making, between the ‘considered’ view of the (future) real-time solution versus the actual real-time solution.

Degrees of complexity, and “breakdown into manageable pieces”: this is one point where I strongly agree with Snowden and Cynefin and the ‘true complexity’ folks, and where I strongly disagree with Roger Sessions and the like. There are two fundamentally different approaches to complexity. One is the Sessions-style view, which asserts that complexity can always be viewed as an analytic problem, ‘breakdown into manageable pieces’: the only challenge is finding the right method to break it down, hence Sessions’ ‘Simple Iterative Partitions’ etc. The Snowden-style view (and I hope I have this correctly here) is that in ‘true complexity’ there are emergent properties that become inaccessible (probably not the right word, but close enough) the moment we try to break it apart – such as the way in which the emergent property called ‘life’ will usually, uh, disappear if we split a living creature into its component pieces… – hence to understand the emergent properties we must always work with and through the whole.

Hence I kind of suspect that what you’re describing as ‘the desirability of simplicity/complexity’ is actually the extent to which we’re willing to work with true-complexity, rather than try to force-fit it into the analytic mould. Or, to use Kurtz’ term, the extent to which we’re willing to allow unorder to be unorder, rather than attempt to impose the abstractions of some predetermined ‘order’ upon it. Imposing some abstract form of order on real-world complexity will often feel highly desirable – yet there are also risks that we’d now have a ‘map’ that doesn’t align well (or well enough) with the real-world ‘territory’. In that sense, ‘the desirability of simplicity/complexity’ is the priorities we apply to that trade-off: more towards the comfort yet also increased-risk of ‘order’, versus the discomfort and uncertainty yet also increased realism of accepting unorder as unorder.

There’s an additional catch here in that most current IT-systems can only work with true/false ‘order’ (left-side in SCAN) – they’re not competent at true modal decisions, or the unorder of true-complexity (right-side in SCAN). In essence, IT-systems are forced to take the Sessions-style approach: cut everything up into small pieces, and throw them around fast enough to give the illusion that it’s working. It’s true that we can get emergent-properties out of that process – but those emergent-properties may not align well, or at all, with those in the real-world context, and by the nature of true-complexity that (mis)alignment is not necessarily something we’d ever be able to ‘control’. Hence there are genuine risks associated with using IT and other ‘order’-based systems – or, especially, depending-on such systems – when we’re in a context which has inherent unorder.

In effect, IT-systems – and, for that matter, overly-bureaucratic human-systems – hold us over on the left-side of SCAN, whether or not that’s where the real problem or solution would lie. Hence, again, part of “the desirability of simplicity/complexity” is whether we’re willing to accept true-complexity in the problem-space, or whether we would privilege a preselected ‘solution’ such as an IT-system because it appears to offer ‘simplicity’. There are serious risks inherent in the latter, yet they’re all too easily ignored – as we see so very often in IT-centric ‘enterprise’-architectures.

Dunno how much of that makes any better sense than before? Advise / throw-bricks, perhaps? 🙂

Hi Tom,

Regarding EA Toolsets, is the problem that the tools are limiting a rich description of the problem-set constraining any potential solution-set?

If we want to depict/support modalities in relationships, and embrace related complexity so we can get closer to the real world, shouldn’t we allow the late binding/configuration of these relationships, retaining some uncertainty?

If we want to get from structure to story, we shouldn’t wrestle with structure (static constraints), but start with a null set of constraints and layer them back in based on context – round wheels with brakes rather than square wheels.

Just a thought – hopefully I’m not too far off base.

Best,

Dave

Dave – you’re right, of course, though it’s more than just that the toolsets are “limiting a rich description of the problem”.

Take a really simple example of a reference-model for IT-architecture. At present, most of the tools can only handle true/false: either something is in the reference-model or not, and complies with the reference-model or not. Given that constraint, how do we describe the very common scenario of alternatives? What we’d often have is a MoSCoW-style layering:

— Must (mandatory, ‘shall’) – “must use xxx protocol” – no variance allowed

— Should (strong preference) – “we are a Java development-house” – variance allowed but only with strong justification, classed as architectural-waiver that should be resolved at some future date

— Can (recommend) – “our experience is that Cisco routers work best for this purpose” – variance allowed without waiver, but recommendation will be urged and supported

— known-to-Work (open option) – “this has also been tested successfully with jjj, kkk and mmm hardware” – variance allowed, but without technical or other support

I’m probably wrong, but at present I don’t know any current EA toolset that can handle even that minimal level of everyday modality.
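To make that layering concrete, here is a minimal sketch of how a reference-model entry might carry its modality, rather than a bare in/out flag. Everything in it is an assumption for illustration: the `Level`, `Outcome`, `Entry` and `assess` names are hypothetical, and treating the ‘known-to-Work’ options as a set attached to a ‘Can’ entry is just one possible reading of the list above. The placeholder values (`xxx`, `jjj`, `kkk`, `mmm`) are the ones used in the examples.

```python
from dataclasses import dataclass
from enum import Enum

class Level(Enum):
    MUST = "must"        # mandatory, no variance allowed
    SHOULD = "should"    # strong preference, variance needs a waiver
    CAN = "can"          # recommendation, variance allowed without waiver

class Outcome(Enum):
    COMPLIANT = "compliant"
    NOT_ALLOWED = "variance not allowed"
    WAIVER_REQUIRED = "variance needs an architectural waiver"
    ALLOWED_UNSUPPORTED = "variance allowed, but without support"
    ALLOWED_RECOMMEND = "variance allowed, recommendation still urged"

@dataclass(frozen=True)
class Entry:
    topic: str
    level: Level
    preferred: str
    # Assumed modelling choice: 'known-to-Work' options hang off the entry.
    known_to_work: frozenset = frozenset()

def assess(entry: Entry, actual: str) -> Outcome:
    """Return a graded outcome instead of a true/false compliance flag."""
    if actual == entry.preferred:
        return Outcome.COMPLIANT
    if entry.level is Level.MUST:
        return Outcome.NOT_ALLOWED
    if entry.level is Level.SHOULD:
        return Outcome.WAIVER_REQUIRED
    if actual in entry.known_to_work:
        return Outcome.ALLOWED_UNSUPPORTED
    return Outcome.ALLOWED_RECOMMEND

router = Entry("router", Level.CAN, "Cisco", frozenset({"jjj", "kkk", "mmm"}))
language = Entry("language", Level.SHOULD, "Java")
protocol = Entry("protocol", Level.MUST, "xxx")
```

With these entries, `assess(router, "kkk")` returns `Outcome.ALLOWED_UNSUPPORTED`, whereas `assess(protocol, "yyy")` returns `Outcome.NOT_ALLOWED`: five possible answers rather than two, which is still only the most minimal kind of modality.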

On the relationship between structure and story, one of the real practical problems I had to deal with at one of my clients was a 20+year gap between uses of really crucial information. The application was highly contextual, so metadata alone would not be enough; and over that time-scale we couldn’t rely on people themselves to fill in the gaps. What we needed was some mechanism to carry the stories associated with each item, to give those future people enough of a handle to be able to work out the contextuality at that time.

Again, this would be another post in itself, and I’m still trying to finish the previous one! – apologies, will Get Round To It eventually…? 🙂

Tom – Thanks for responding, the EA tooling problem seems like a problem of Requisite Variety, or lack thereof. For our part, we do something very close to what you describe with MoSCoW, but we leave the classification itself open to change so it doesn’t become static structures. Importantly, that puts the ‘response-ability’ back on people, individual Resource owners and/or committees, allowing the environment to be dynamic/adaptive. It’s EITA as I have qualified before, but it is EITA that endeavors to play well as part of EA. In our approach, every interaction is a demonstration that the whole is greater than the sum of its parts.