Yet more on ‘No jobs for generalists’ [3a]

Why is it so hard for enterprise-architects and other generalists to get employment as generalists – despite the evident and very real need for such skills in the workplace? And what part do current business-paradigms play in this problem?

A quick round-up of where we’ve gotten to so far on this:

- ‘On learning enterprise-architecture’ – where all of this discussion started, exploring the skills needed for generalist disciplines such as enterprise-architecture

- ‘There are no jobs for generalists’ – about why it’s so hard to get work as an enterprise-architect, and why most advertised ‘jobs’ for enterprise-architecture don’t actually have much to do with enterprise-architecture itself

- ‘More on ‘No jobs for generalists’’ – exploring the implications arising from various responses to the ‘No jobs for generalists’ post

- ‘Yet more on ‘No jobs for generalists’ [part 1]’ – about the underlying assumptions that give us so much trouble

- ‘Yet more on ‘No jobs for generalists’ [part 2]’ – about structures and interdependencies between services

And where we’re going with this final part of the series:

- Part 3 (this post): about where and why Taylorism is a poor fit for many present-day business-needs – and some key implications that arise from that fact

I’ll also include some asides on the broader business-architecture themes that play into this – particularly the impact of financial-investors as ‘the owners’.

[Apologies, folks – this has turned out to be a huge post. I did promise to finish this series at this point, but I’ll have to split it into more manageable chunks, in line with the chapter-headings:

- Part 3a: ‘An incomplete science’ – about Taylorist notions of ‘scientific management’

- Part 3b: ‘Management as a service’ – a service-oriented view of the role of management

- Part 3c: ‘The impact of the ‘owners’’ – about how and where financial-investors come into the picture

- Part 3d: ‘A question of fitness’ – exploring the use of ‘fitness landscapes’ to guide selection of appropriate architectures

- Part 3e: ‘A question of value’ – how we could describe the business-value of generalists

- Part 3f: ‘No jobs for generalists?’ – a brief(ish) summary of the whole series

Although I’ll publish these as separate posts, they are all part of the same article, and in some cases may not make full sense on their own: if you can, do read them one after the other, as one continuous piece.]

First, though, I’ve mentioned Taylorism or so-called ‘scientific management’ quite a few times here – so what do I actually mean by this term? And what impact does it have on management, and on the overall ‘visibility’ of generalist-roles?

An incomplete science

There’s perhaps a risk that some of what follows might seem a straw-man argument: attacking an easy target that doesn’t actually exist. And in a way that’s true for Taylorism in the strictest sense: for example, Taylor himself definitely did respect at least some of the key human-factors in the workplace. In practice, though, Taylorism is a bit like Marxism and the like, in that it’s often more about an over-simplified belief-system or mindset that may have little or no connection with the ideas of the nominal proponent, and in places may run directly counter to what was originally intended.

In the case of Taylorism, that mindset is often not so much over-simplified as wildly over-simplistic: yet as can be seen all too easily in so many failed attempts at business-process re-engineering, Six Sigma and suchlike, it’s a mindset that’s still predominant in much present-day management thinking, education and practice. Therein lie a lot of real problems for enterprise-architecture and the like.

[A warning again to be aware of the ‘political’ dimensions here. To reduce the risk of this architectural exploration being perceived as a career-threat, do take care to keep the focus at all times on the architectural aspects and implications, as a strict, formal exercise in business-architecture – nothing more than that. We all have our own political opinions, of course, but for your own safety it’s essential to keep the professional aspects of architecture-assessment clearly separate and distinct from our personal views here – and wherever practicable, leave the politics to others!]

In essence, what I’m describing as ‘Taylorism’ is the linear-paradigm as applied to business-design – usually with the assertion or implication that no other paradigm could or should apply, and often without even any awareness that other perspectives are possible.

It purports to be ‘scientific’ in its approach to management, but the ‘science’ is more Newtonian than anything else – very much mechanistic, with little to no awareness of key themes in current sciences such as quantum or ‘chaotic’ systems, complex-systems, emergent-systems or social-systems. It’s typified by a set of key assumptions such as:

- everything is connected by strict identifiable cause/effect relationships

- all relationships are linear and transitive (‘reversible’) – i.e. we can always infer a cause from its effect

- everything is reducible to identifiable algorithms with identifiable parameters – i.e. we can always infer an algorithm from its inputs and outputs

- all parameters are separate and independent from each other, other than through the algorithm – i.e. it is always possible to isolate out and adjust one parameter at a time

- the only relevant system-effects in algorithms are feedback-loops and delay-loops – i.e. ‘hard-systems theory’ is the most that we would ever need

- given reliable execution of an appropriate algorithm, all outcomes are exactly predictable and repeatable

- all functionality follows strict combinatorial logic – i.e. functionality may be aggregated or decomposed into larger or smaller ‘production-units’, without changing the overall functionality in any way

- all production-units are exactly fungible or interchangeable – i.e. given identical algorithms and parameters, the only inherent differences between manual, machine or IT-based implementations relate to reliability or efficiency, not function

- system-design in accordance with these principles will inherently yield predictable control over the system
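To make the ‘reversible’ assumption concrete, here is a minimal Python sketch. The two production-units in it, `linear_unit` and `saturating_unit`, are hypothetical examples invented for illustration, not anything taken from Taylor or from a specific method. For the linear unit, the cause can be recovered exactly from the effect; for a unit with a simple capacity-limit, many different inputs produce the same output, so the observed effect alone cannot tell us what the cause was.

```python
from typing import Callable, List


def linear_unit(x: float) -> float:
    """Hypothetical linear production-unit: output is a fixed function of input."""
    return 3.0 * x + 2.0


def invert_linear(y: float) -> float:
    """Infer the cause from the effect: only possible because the unit is linear and one-to-one."""
    return (y - 2.0) / 3.0


def saturating_unit(x: float, capacity: float = 10.0) -> float:
    """Hypothetical non-linear unit: output follows input until it hits a capacity-limit."""
    return min(x, capacity)


def candidate_causes(unit: Callable[[float], float], observed: float,
                     inputs: List[float]) -> List[float]:
    """Return every trial input that would have produced the observed output."""
    return [x for x in inputs if unit(x) == observed]


trial_inputs = [float(i) for i in range(0, 21)]

# Linear unit: exactly one candidate cause for the observed effect.
print(candidate_causes(linear_unit, linear_unit(4.0), trial_inputs))  # [4.0]
print(invert_linear(linear_unit(4.0)))  # 4.0

# Saturating unit: the same observed effect has eleven candidate causes (10.0 to 20.0).
print(candidate_causes(saturating_unit, 10.0, trial_inputs))
```

Even this trivial capacity-limit is enough to break the assumption that performance-data from outputs will always point back to a single required change in inputs.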

When applied as ‘scientific management’, this is extended with a few additional assumptions about the role of management itself:

- every production-unit requires an external manager as controller for the unit’s algorithm at run-time – i.e. to adjust content and resources for each of the production-parameters

- every production-unit requires external analysis to adjust the unit’s algorithm(s), usually on the basis of performance-data

- control and analysis for a production-unit are always external to that production-unit – the sole function of a production-unit is to execute its predefined, externally-defined algorithm(s)

- the internal operations of all production-units should be ‘de-skilled’ wherever practicable – because any requirement for skill or run-time judgement within a production-unit represents potential loss of certainty and control

- aggregated production-units will likewise depend on external managers and analysts for control and configuration at the respective levels of aggregation – hierarchies of functional-decomposition imply matching hierarchies of management and external-consultants

Taylorism explicitly rejects the concept of ‘craft’: that decisions take place within the work itself. Instead, it asserts that, for maximum efficiency, there should always be a strict separation between ‘thinking’ and ‘doing’, in effect represented by a cadre of managers providing oversight and control of order-following workers or literally-robotic automation in distinct, separate, interchangeable and non-interdependent ‘units of production’. The capability required of the ‘doing-unit’ that delivers the functionality of each production-unit is pre-specified in a requirements-document (for automation) and/or a ‘job-description’ (for ‘manual’ implementations of functionality).

In Taylorism, the core metaphor of the organisation is as a machine:

- the sole function of workers/automation is to execute the processes of ‘the machine’, in accordance with the ‘job-description’ – in this sense, a ‘job-description’ is a specification for an externally-controlled, non-autonomous ‘doing-unit’

- the sole function of analysts, ‘time-and-motion men’ and other ‘external consultants’ is analysis and reconfiguration of ‘the machine’, from a viewpoint outside of that of the respective production-unit in ‘the machine’

- the primary function of management is control of ‘the machine’

Managers and management are deemed to be utterly indispensable to ‘the machine’, because without them there would be no means to control ‘the machine’. (One of the key outcomes of Taylorism was to create a demand for a huge cadre of managers, whose roles largely did not exist prior to the rise of Taylorism.) Analysts are likewise indispensable, for similar reasons. Human workers, however, are deemed dispensable, and in fact should be replaced by automation wherever practicable. In essence, the Taylorist ideal is a fully-automated factory where the only human roles are for analyst-managers; similar notions are also often found wherever linear-paradigm models predominate, such as in IT-centrism.

There can be no doubt that a Taylorist-type model is useful and valid for the specific types of contexts to which its constraints are appropriate. For example, it does fit well with design and operation of ‘dumb’ automation – in other words, algorithmic-automation which has no internal means for self-adaptation or self-reconfiguration according to changes in its own operating context. Yet it’s based on what is, by modern standards, a very incomplete concept of science both in practice and in theory (for example, no allowance for emergence or inherent-complexity, as mentioned above), and it does not fit well with any context where any of the following apply:

- any non-linearity or inherent-uncertainty in parameters, algorithms, feedback-loops and suchlike – such as in wicked-problems and kurtosis-risks

- any non-transitivity or network-effects – where performance-results from outputs cannot be used directly to identify required changes in inputs

- any context undergoing ‘Black Swan’ disruption or other step-change

- any context where the parameters of the context themselves are inherently uncertain – such as in ‘stormy’ variety-weather

- any context where the rate of change or the level of uniqueness outpaces the ability of external-analysis to adapt its response to those changes

- any context for which linear analysis is inherently unsuitable

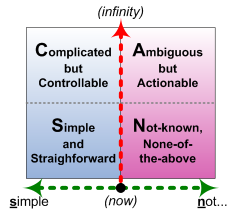

To describe the latter in terms of the SCAN sensemaking / decision-making framework:

By definition, Taylorism sits strictly to the left-side of the Inverse-Einstein Test: it assumes that doing the same thing must always lead to the same result. All real-time decision-making is deemed to be reducible to Simple rules and algorithms; those rules and algorithms are in turn derived by analysis that can only take place separate from real-time operations. (In quality-system terms, rules and algorithms are presented as work-instructions defined by analysis of the context in relation to higher-level procedures.) It has no built-in means to handle inherent-ambiguity, inherent-uniqueness or anything on the right-side of the Inverse-Einstein Test – where doing the same thing may lead to different results, or where the same results may be achieved by doing different things.
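As a rough illustration of the left-side/right-side distinction, the Python sketch below repeats the same action with the same input and checks whether the results vary. The function names and the ‘hidden context’ counter are hypothetical, chosen only to make the point; and a finite number of identical results can only suggest that a context sits on the left side, never prove it.

```python
from itertools import count
from typing import Callable, Hashable


def inverse_einstein_side(process: Callable[[int], Hashable],
                          same_input: int, trials: int = 5) -> str:
    """Do the same thing several times: identical results suggest the left side,
    any variation places the context on the right side."""
    results = {process(same_input) for _ in range(trials)}
    return "left" if len(results) == 1 else "right"


def scripted_task(order_size: int) -> int:
    """Hypothetical rule-based task: the same input always yields the same output."""
    return order_size * 2


# Stands in for shifting conditions that the task's rules cannot see.
_hidden_context = count()


def contextual_task(order_size: int) -> int:
    """Hypothetical task whose outcome also depends on hidden, changing context."""
    return order_size * 2 + next(_hidden_context) % 3


print(inverse_einstein_side(scripted_task, 5))    # left
print(inverse_einstein_side(contextual_task, 5))  # right
```

With `trials=1` both tasks would appear to be ‘left’: a small reminder that a rule-based model built from too few observations can easily misread a right-side context as a left-side one.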

When presented with anything during real-time operation that is ambiguous, not-previously-encountered, or otherwise ‘not-known’ – in other words, anything to which a rule-based response is inherently unpredictable or unreliable – the only option available to a Taylorist model is to ‘escalate’ up the management/analyst hierarchy-tree. This leads directly to a Taylorist trap, in which the contextual knowledge and experience needed to solve the problem are often explicitly filtered out, a little more with each move ‘upward’ in the hierarchy-tree, while each attempt at analysis takes more and more time – a ‘death-spiral’ that leads inexorably towards ‘analysis-paralysis’.
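The shape of that escalation ‘death-spiral’ can be sketched as a toy model in Python. Everything here is an illustrative assumption: the hypothetical `escalate` function, the fixed retention-rate for context at each level, and an analysis-delay that grows with each level are made-up parameters, not measured values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Escalation:
    level: int           # 0 = the front line where the issue arose
    context_items: int   # contextual details still available at this level
    elapsed_days: int    # cumulative time spent on the issue so far


def escalate(initial_context: int, levels: int, retention: float,
             days_per_level: int) -> List[Escalation]:
    """Toy model: each move upward keeps only a fraction of the context,
    and each level's analysis takes longer than the one below it."""
    trail = [Escalation(0, initial_context, 0)]
    for level in range(1, levels + 1):
        prev = trail[-1]
        trail.append(Escalation(
            level,
            int(prev.context_items * retention),            # context filtered out
            prev.elapsed_days + days_per_level * level,     # delay grows per level
        ))
    return trail


for step in escalate(initial_context=40, levels=4, retention=0.5, days_per_level=2):
    print(step)
```

With these hypothetical parameters, the context available falls from 40 items to 2 over four levels of escalation, while the cumulative delay rises from 0 to 20 days: less and less knowledge of the problem, applied later and later.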

More subtly, the over-focus on automation in Taylorism also feeds another ‘death-spiral’ that eventually makes it all but impossible to learn the skills needed to design and operate that automation: see the Sidewise post ‘Where have all the good skills gone?’ for more on this.

There are also many, many well-documented human issues that mis-applied Taylorism frequently either ignores or glosses over – which, again, have often proven in practice to be real kurtosis-risks that, when (not ‘if’) they eventuate, cause losses that are considerably greater than any gains achieved by Taylorist-style techniques.

In short, there is nothing inherently wrong with Taylorism as such; but an awful lot wrong with it when it’s taken to be the only tool in town…

[[Next: Part 3b: ‘Management as a service’]]
