The over-certainties of certification

They had a strange kind of annual ritual there, a subtle ‘work-to-rule’, repeated every year that I worked at that place.

Each autumn, up would come the new crop of graduates, each with their shiny new graduation-certificate and their own absolute certainty that they knew absolutely everything that there was to know about engineering. It was the job of the workshop-foreman to show them that they didn’t…

Hence that ‘work-to-rule’: the fitters and turners in the workshop were told to make exactly what the new graduates specified in their engineering-drawings – even if it didn’t make sense. Which meant that the technicians turned out parts that wouldn’t fit, or that couldn’t be assembled, or would corrode or collapse in minutes even if they could be assembled.

The graduates, of course, would shout and scream that the technicians had done it all wrong, that they hadn’t properly followed the design. At which point the workshop-foreman and, usually, a senior-engineer or two, would take each graduate gently through their drawings and show them what actually has to happen in the real world, where perfect-in-theory rarely matches up to the cantankerous confusions of Reality Department, and where a quite different kind of knowledge – the practical knowledge that the technicians had honed over so many years at the workbench – would also need to be brought to bear if the designs were ever actually to work.

Which, by a roundabout route, brings us to a really important topic in enterprise-architecture and the like: the over-certainties of certification.

As with most technical fields, enterprise-architecture is becoming riddled (raddled?) with all manner of ‘certification’-schemes (scams?).

Okay, yes, certification-schemes do have their place, and a useful role to play, in some cases and some circumstances. But as I’ve warned elsewhere on this blog, there’s a huge danger that, if misused, all that a so-called ‘certification’ actually certifies may be that someone has just enough knowledge to really get into trouble on any real-world project, but not enough knowledge to get out of it. Ouch: not a good idea…

Worse, the possession of a certificate often seems to incite a kind of wild over-certainty in its recipients, a certainty that they alone know everything, and that others with much more real-world experience must necessarily know less than they themselves do – much as with the engineering-graduates in that story above. Which is really not a good idea…

So what do we do about it?

Something we can do is to get a bit more clarity about what we mean when we say that “certification-schemes do have their place”. What is their place, exactly? In what ways and in what contexts is certification useful – or, perhaps more important, not-useful? What can we use to tell the difference between them?

One option here is a crossmap between the SCAN framework for sensemaking and decision-making, and a simple categorisation of competencies and skill-levels.

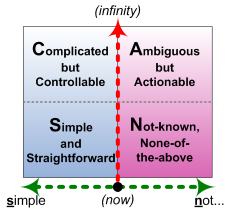

At first glance, SCAN looks like the kind of simple two-axis matrix so beloved by consultants:

It sort-of both is and isn’t, because the domain-boundaries can move around quite a lot, and it’s not so much a set of categories as a base-map to anchor a kind of journey of exploration around a context-space – hence a context-space map.

The horizontal-axis describes modality – the range of repeatability, possibility, and uncertainty in that specific aspect of the context. The red dividing-line is nicknamed the ‘Inverse-Einstein boundary’: to the left is a world of ‘order’ in which doing the same thing leads to the same results, whilst to the right is ‘unorder’, where doing the same thing may lead to different results, or we may need to do different things to get to the same results.

The vertical-axis describes time-available-before-action – the extent to which we can make a ‘considered’ or theory-oriented decision, versus the rather different kind of practical decision that’s made at the actual point of action. The grey dividing-line represents the ‘quantum-shift’ in tactics that occurs as we move close to the point of action – a shift that is as fundamental as that between classical (Newtonian) physics and quantum (sub-statistical) physics.

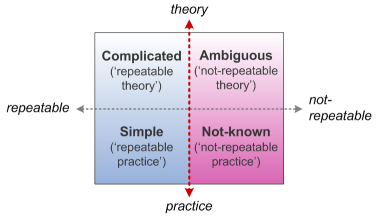

A perhaps-simpler way to summarise this is in terms of repeatable versus not-(so)-repeatable, and theory versus practice:

Do remember, though, that it’s a base-map – not a fixed list of categories.

We can then crossmap this to a common way to describe skill-levels, in terms of hours needed – archetypally, 10, 100, 1000 and 10000 hours, but often more – to reach and build towards specific levels of skill and competence:

- trainee (10-100 hours) – “just follow the instructions…”

- apprentice (100-1000 hours) – “learn the theory”

- journeyman (1000-10000 hours) – “it depends…”

- master (10000+ hours) – “just enough detail… nothing is certain…”
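For readers who think in code, the archetypal hour-bands above can be sketched as a simple threshold lookup. This is only an illustration, not part of the SCAN framework itself: the `untrained` label for anything under 10 hours, the function names, and the assumed 2,000 working hours per year (roughly 40 hours a week for 50 weeks) are all assumptions made for this sketch, and real-world requirements are often well above these archetypal minimums.

```python
from bisect import bisect_right

# Archetypal minimum hours for each skill-level, from the list above.
# Real-world requirements are often considerably higher than these minimums.
LEVELS = [
    (10, "trainee"),
    (100, "apprentice"),
    (1000, "journeyman"),
    (10000, "master"),
]

# Assumption for this sketch: about 40 hours a week for 50 weeks a year.
HOURS_PER_YEAR = 2000


def skill_level(hours: float) -> str:
    """Return the highest archetypal level whose minimum hours have been reached."""
    thresholds = [minimum for minimum, _ in LEVELS]
    index = bisect_right(thresholds, hours) - 1
    # Hypothetical label for anyone below the first (trainee) threshold.
    return "untrained" if index < 0 else LEVELS[index][1]


def years_of_practice(hours: float, hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Convert hours of study and practice into approximate working years."""
    return hours / hours_per_year


if __name__ == "__main__":
    for h in (5, 50, 500, 5000, 12000):
        print(h, skill_level(h), years_of_practice(h))
```

At that assumed rate, `years_of_practice(10000)` comes out at 5.0, which matches the ‘at least five years or so’ rule-of-thumb for mastery.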

For example, a first-level trainee isn’t likely to be much use without some basic training – usually at least a day’s-worth, in most real-world contexts. And they’re not likely to develop much beyond a certain point without some solid idea of the theory that applies in that context – enough to communicate meaningfully with others who do know more than they do, anyway. To go further, that apprentice needs to gain a better grasp of the ambiguities and uncertainties of the trade, as a working ‘journeyman’. And in almost any real discipline, it’ll take a long time – typically at least five years or so, or 10,000 hours, of study and real-world practice – to get close to anything resembling real mastery of the work, with all its nuances and subtleties and gotchas and traps-for-the-unwary. So when we crossmap this onto the SCAN frame, this is what we get:

Notice there’s another progression going on here: from practice to theory – the theory of certainty – and then from the theory of uncertainty, or complexity, back towards a very different kind of real-world practice.

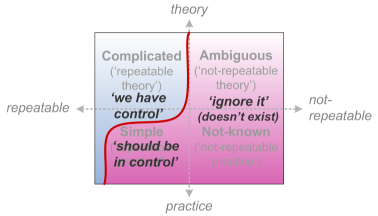

That, at least, is the traditional skills-development model – always grounded in real-world practice. Yet the old class-hierarchies, carried through into present-day business-practices through Taylorism and the like, assert a strict separation of roles: ‘higher-class’ officers and managers need learn only the theory, and sit back and tell ‘the workers’ what to do; whilst ‘low-class’ labourers are deemed incapable of thought, and must do all of the dirty real-world work, without ever being allowed to question or to answer back. Which, bluntly, is not only stunningly arrogant but really stupid in practice, and has long since been proven to be so. But unfortunately it’s still a very popular idea, especially with those who are too afraid of uncertainty ever to get their hands dirty doing any kind of real work…

The result of that Taylorist myth is that we end up with a whole class of people who’ve learnt all of the theory, yet with little to no real grasp of the real-world practice. Whole business-universities and suchlike are set up on exactly this basis, theory-centric or even theory-only – which is not good news for anyone. It tends to create a skewed view of what is possible and what is not, and some very strange theory-centric assumptions about how the world works, which we could summarise on the SCAN frame like this:

One of the most dangerous delusions that the Taylorist myth teaches is the idea that everything can be reduced to ‘rational’ control – which again is not only not a good idea, but just plain wrong in real-world practice. When we combine this with the theory-centric view of the world – where doing practical work is always Somebody Else’s Problem, and in which that ‘Somebody Else’ can always be derided or blamed if the assumptions of theory don’t work in practice – then we can end up with people who have a seriously skewed view of the respective context-space:

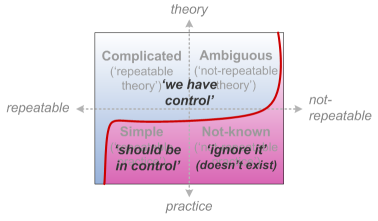

And that’s exactly what we get with certain types of so-called ‘certification’ – and why and where that absurdly arrogant over-certainty will arise. Ouch indeed…

Yet if we cross-reference the various types of certification-scheme against that skills/SCAN crossmap, we can derive a distinct description of what each type of certification-study should cover – and exactly what the respective certification-exam should test:

— Trainee skill-level(s):

- study: work-instructions; application of work-instructions in live practice; how to identify when to escalate to someone with greater experience

- test: ability to deliver specified results within acceptable tolerances; ability to identify when not to do the work (i.e. to not transition from non-competence to incompetence)

— Apprentice skill-level(s):

- study: shared-terminology; theory behind work-instructions (various levels of theory); links between theory and practice; reasoning from the particular to the general

- test: knowledge of theory as per specified ‘laws’ etc; ability to derive particular cases within the specified constraints of those ‘laws’

— Journeyman skill-level(s):

- study: complexity, ambiguity, uncertainty, probability, possibility; adaptation from the general to the particular (context-specific)

- test: knowledge of theory of uncertainty, and theory underpinning guidelines for working with uncertainty; ability to identify when a context is outside of expected constraints, and how to ascertain the actual constraints (and probable dynamics of constraints) applying in a given context

— Master skill-level:

- study: practical application in partly- or fully-unique contexts, in multiple and often interleaving (recursive) timescales

- test: ability to respond appropriately to unique and/or unpredicted or unpredictable circumstances, often in real-time; ability to innovate and contribute personally to the development of the discipline (such as via a ‘masterpiece’)

In practice, it’s all but impossible to assess and validate ‘master’-level skills through any conventional kind of repeatable ‘test’, because they’re inherently about dealing with the unique or unrepeatable. The usual test-method there is via peer-review – and even that can be problematic if the person’s work is unique enough to have no direct peers. A very long way from the over-certainties of certifications, anyway…

There’s one additional very important corollary here, around the use of automated-testing with preset-answers, such as in a multiple-choice exam: a certification based on match against preset answers is not valid as a test of competence for any skill-level that needs to work in contexts of uncertainty. Multiple-choice tests only make sense on the ‘left-hand side’ of the Inverse-Einstein boundary: they should not be used for anything that involves any significant degree of unorder in that context. Hence, for example:

- a multiple-choice exam is a good means to test for knowledge and correct usage of terminology (a typical focus for a ‘Foundation’-level exam)

- a multiple-choice exam is not a good means to test for knowledge of how to adapt best-practices to a given context (a typical requirement for a ‘Practitioner’-level exam)
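The rule above can be sketched as a simple check. The mapping of each skill-level to one side of the Inverse-Einstein boundary is an assumption drawn from the progression described earlier (practice and theory of certainty for trainees and apprentices; theory and practice of uncertainty for journeymen and masters), and the names used here are hypothetical. A `True` result only means that a preset-answer exam *may* be valid for that level, not that it is sufficient on its own.

```python
from enum import Enum


class Modality(Enum):
    ORDER = "order"      # left of the Inverse-Einstein boundary: same action, same results
    UNORDER = "unorder"  # right of the boundary: same action may give different results


# Assumed mapping of each skill-level to the side of the boundary it mainly works in.
LEVEL_MODALITY = {
    "trainee": Modality.ORDER,       # practice of certainty
    "apprentice": Modality.ORDER,    # theory of certainty
    "journeyman": Modality.UNORDER,  # theory of uncertainty
    "master": Modality.UNORDER,      # practice of uncertainty
}


def preset_answer_exam_may_be_valid(level: str) -> bool:
    """Preset-answer (e.g. multiple-choice) tests only make sense on the 'order' side.

    True means such a test *may* be valid for this level, not that it is sufficient.
    """
    return LEVEL_MODALITY[level] is Modality.ORDER


if __name__ == "__main__":
    for level in LEVEL_MODALITY:
        print(level, preset_answer_exam_may_be_valid(level))
```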

Many if not most of the ‘certifications’ in the enterprise-architecture space at present seem to be based on automated multiple-choice exams, and do not provide any means to test for linkage between theory and practice. Although this is acceptable for basic ‘Foundation’-level skills, it is a near-guaranteed recipe for disaster for skills of any higher level: the theory-only focus and arbitrary ‘truth’-assertions will test only for ability to pass the test, not for competence in real-world practice. Worse, such tests are unable to distinguish between lack of knowledge and higher competence in dealing with uncertainty – because both groups are likely to give answers that are ‘wrong’ according to the test’s own limited constraints – and thus tend to award the highest test-scores to those who are not able to deal with real-world ambiguity: a test-outcome that can be highly misleading, and even dangerous, to anyone who relies on the certification-scheme as an actual metric of competence.

What’s also interesting here is that humility and self-honesty tend to follow the same curve:

- trainees know that they don’t know (much)

- apprentices know that they know more than trainees, and that what they know is ‘the truth’

- journeyman-level practitioners are always in the process of relearning the discomforts of ambiguity and uncertainty, where ‘truth’ tends often to be somewhat relative

- masters can usually be identified by their willingness to accept that the more they know, the less they really know

Which, overall, leaves us with the unfortunate tendency that those who know the most theory and the least of the practice are also those who are most certain of themselves and their knowledge – and, often, the most vocal in asserting their certainty of knowledge – yet in reality will often have the least applicable or useful knowledge. A rather important distinction, that…

“Those who know do not speak; those who speak do not know” – something like that, anyway. Oh well…

Tom,

This is a great blog entry. Thanks for writing it.

One thing that I will add as a suggestion, extend the thinking to consider:

* Knowledge

* Skills

* Abilities

All three are required; there’s no *OR* in here, there’s always a mix of the three at play. And the methods for assessing abilities aren’t as well known as those for knowledge and skills.

kengon

Many thanks, kengon, and good points – especially about “there’s no *OR* here”, and the challenge of assessing abilities (for the future) rather than just (present-time) knowledge or skills.

This needs a lot more time than I have given to it. I am determined to come back to it, but I’m so grateful that the thought has been formalised here. I like the gradations of expertise, especially the 10,000 hour rule. I think a missing element is how to grade the task that the person has to fill, and to avoid the HR and recruitment-wallah approach of describing any role as requiring the ultimate in experience, qualification and general walking on water when following the simple instructions is what is appropriate.

Thanks, David, and really good point about “how to grade the task” and the overkill / over-expectations of the “recruitment wallah”. In part that’s what the SCAN framework aims to address, but that’s a different topic than here, of course. 🙂

Great post !

I was thinking that you would also address the Generalist versus Specialist question.

It seems more likely that a Generalist is a Master with 10,000 hours experience who can deal with any scenario, whereas a Specialist may be like an apprentice or journeyman who only understands a single domain (silo).

Thanks, Adrian. I’d agree that the ‘specialist vs generalist’ theme does have a place here – for example, it’s much easier to test for (lower- to mid-level) specialist than generalist – but I’d have to disagree with you about “It seems more likely that a Generalist is a Master with 10,000 hours experience who can deal with any scenario, whereas a Specialist may be like an apprentice or journeyman who only understands a single domain (silo)”.

If we look at medicine, a consultant-surgeon would need at least 10,000 hours of study and practice to reach mastery even in one narrow specialist-domain; Atul Gawande, in ‘The Checklist Manifesto’, talks about hyperspecialisation as a response to the sheer complexity of modern medicine.

Practice alone is not enough, either: it’s quite easy to do 10,000 hours of hard practice without developing the deep-learning necessary to achieve anything resembling mastery. It’s more that it’s about that level of experience combined with deep-study and deep experiential-learning that seems to underpin real mastery of a domain.

The real point is that we have a choice about where we place those ‘10,000 hours’. If we focus on a single specific domain, and on deep-study and deep-learning about everything within that domain, we become a specialist. If we spend the same amount of time in deep-study and deep experiential-learning about how domains link together, we become a generalist. A ‘generalist’ actually is a type of specialist – someone whose specialism is about understanding how things link together. To me that’s a really important point that we do need to emphasise here.

Great post. I linked it in an ITSM Facebook discussion (Back2ITSM), and one person (Peter Brooks) had an interesting idea:

“I wonder if somebody could develop a multiple-choice exam based on fuzzy logic. Each question would have half a dozen answers, none would be wholly right or wholly wrong, but, as in an adventure game, a scoring program could detect somebody who is ‘flying right’ and pass him, or somebody who consistently tries to emulate Icarus and fail him.”
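Peter Brooks’ idea can be sketched as a partial-credit scorer, in which each answer-option carries a weight between 0.0 and 1.0 instead of being simply right or wrong, and a candidate passes if their average credit reaches a pass-mark. Everything here is hypothetical: the `Question` type, the weights, the sample questions and the 0.7 pass-mark are invented for illustration, and none of it is based on any actual certification exam’s scoring scheme.

```python
from dataclasses import dataclass


@dataclass
class Question:
    prompt: str
    # Partial credit per option: 1.0 is 'flying right', 0.0 is 'emulating Icarus'.
    weights: dict[str, float]


def fuzzy_score(questions: list[Question], answers: list[str]) -> float:
    """Average partial credit across all questions; an unlisted option scores 0.0."""
    if not questions:
        raise ValueError("at least one question is needed")
    if len(questions) != len(answers):
        raise ValueError("exactly one answer is needed per question")
    total = sum(q.weights.get(a, 0.0) for q, a in zip(questions, answers))
    return total / len(questions)


def passes(questions: list[Question], answers: list[str], pass_mark: float = 0.7) -> bool:
    """Pass anyone whose average credit reaches the (hypothetical) pass-mark."""
    return fuzzy_score(questions, answers) >= pass_mark


if __name__ == "__main__":
    sample = [
        Question("Hypothetical question 1", {"a": 1.0, "b": 0.75, "c": 0.25, "d": 0.0}),
        Question("Hypothetical question 2", {"a": 0.25, "b": 1.0, "c": 0.75, "d": 0.0}),
    ]
    print(fuzzy_score(sample, ["b", "c"]))  # 0.75
    print(passes(sample, ["d", "a"]))       # False
```

Note that the weights are still preset: this extends pattern-matching into low- to medium-ambiguity ‘it depends’ territory, but it has no way to credit a response to a genuinely unique context.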

Thanks, Aale, and yes, great suggestion from Peter Brooks, about “a multiple-choice exam based on fuzzy logic”.

I believe this is what Open Group were attempting to do, in their recent redesign and restructure of the TOGAF 9 Practitioner exam (there’s a comment on this from one of the Open Group leads somewhere on this blog, but I fear it would take a long search to find it…). I’m still not sure even that would work well enough for the needs of a field such as enterprise-architecture, though: pattern-matching via fuzzy-logic is a (very useful) technique for skills in contexts with low- to medium-level ambiguity and ‘It depends’, but it can still be dangerously misleading for contexts such as EA that so often include true uniqueness: this item, right here, right now. (It’s the key difference between complex-domain and chaotic-domain tactics, to (mis)use A Certain Person’s terminology.) If the contexts can contain true-uniqueness, I don’t think there’s any automated way to test for competence in this: at present I still believe that the only appropriate test-method is via peer-review – and even that is only partially-feasible where the work-domain is inherently innovative and ‘new’.

Something to discuss in more depth somewhen, perhaps? – thanks again, anyway.

I’ve noticed the lack of mentorships in so many fields. Those with life experience are set aside for the new shiny grads. It’s a shame. So much valuable experience, from both the graduates (new insights) and the masters, is lost, resulting in less-than-flexible organizations.

It is important for every trainee and apprentice to work with a master for a certain number of hours to achieve the next level. Masters must also agree to work with the trainee and apprentice for a certain number of hours to maintain the integrity of enterprise architecture.

Instead of just memorizing to take and pass the test… let’s add mentorship partnerships in real-life situations to the qualification.

So strongly agree with you about the need for mentorships, Pat – it seems to be a point that’s only just starting to be re-addressed with the (very welcome) return to something closer to traditional apprenticeship-type models in some disciplines and industries. (Though not with the implicit abuse of apprentices as barely-paid not-quite-slave-labour that occurred too often in ‘traditional’ apprenticeships, I’m very glad to say! 🙂 )

Incidentally, that ‘10 – 100 – 1000 – 10000 hours’ sequence is only the start-point in each case, and indicates only a minimum experience-level for each category: in real-world practice, the experience-requirements can be – and often need to be – quite a lot higher. For example, the Wikipedia page on ‘journeyman’ mentions that the US requirements for trade-qualification as a journeyman-electrician, plumber or suchlike are typically 8,000 hours of study and work-practice; a proper BA or BSc degree – roughly the ‘journeyman’ equivalent for academics – would be around the same number of hours, too.