Scalability and uniqueness

What actually do we mean by ‘scalability’ in enterprise-architecture? What can and can’t we scale within the architecture, or the process of architecture itself?

These questions came up for me in thinking about a comment by Dave Duggal to the previous post ‘The craft of knowledge-work, the role of theory and the challenge of scale’, and in particular to this one line of Dave’s:

Scalability (efficient repeatability or automation) is based on theory (models of an activity)

This isn’t really about what Dave said as such, but more about the implications of that definition above: that scalability is linked only with “efficient repeatability or automation” – or, to put it the other way round, that only repeatable or automatable things can be scaled. But is that true? Or is it just an assumption, one that calls for some serious rethinking here?

My gut-feel is that it’s not merely a circular assumption – that scalability and repeatability are linked to each other in that way solely because we choose to see it that way – but worse, it’s actually a term-hijack: that that choice of definition explicitly blocks us from seeing scalability in any other way. To get further than this, we need to challenge that term-hijack, and expand the meaning of ‘scalability’ to something quite a lot broader.

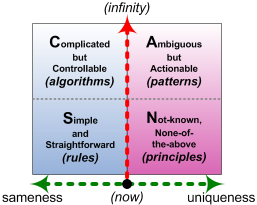

Yes, it’s easy to see that highly-repeatable things, automatable things – things that sit well over to the left on the SCAN frame – will scale well, and often scale fast. In SCAN, repeatability at large scale – and, especially, at high speed – would place that concern (metaphorically speaking) right down in the lower-left corner of the frame, keeping everything as Simple and straightforward as can be, following only the simplest of rules:

Easy, obvious – no question about it, that’s what we’d usually prefer to do if we need to do something at scale. Yet the reality is that that isn’t the only kind of thing we need to scale: in many business-contexts, we also need to handle uniqueness at scale, too. And that’s when things get a whole lot trickier…

Examples of where we need to manage uniqueness at scale would include:

- healthcare

- customer-service

- clothing and fashion

- sales and market-design

- information-search

- farming – weather and micro-climate

- city-planning – topography, geography and particularity

In each case, what we have is a mixture of ‘same and different‘: not just one or the other, but always both together. In many of those examples, the common factor enforcing a requirement to address uniqueness is the fact that we have to deal with ‘people-difference’, but it’s not the only factor: sometimes – as with weather and micro-climate – we’re dealing with other types of natural high-variation and uniqueness.

What doesn’t work well is if we try to scale solely by enforcing repeatability and ‘sameness’: if we do that, we’re likely to end up with a ‘one size fits none’.

What also doesn’t work well is trying to scale uniqueness itself – because almost by definition, pure-uniqueness means there’s no equivalence of any kind between items, and hence nothing that we can scale.

What does work is applying scalability to the parts of ‘uniqueness’ that are ‘repeatable’ in some sense, yet without breaking the integrity of the uniqueness itself.

To make this work, we need to understand uniqueness in a somewhat different way. In practice, ‘uniqueness’ is always a blend of ‘same and different’, but as a unified whole.

One way to think of the relationship between ‘same’ and ‘different’ is like a Cantor dust:

When we first look at a context, it probably seems that everything is unique – a ‘chaos’ of uniqueness, to use the colloquial term. Everything’s different – as represented by the black line in the graphic above.

Then as we look a little closer, we’ll probably be able to identify a rule of ‘sameness’ that seems to apply to some part of it – represented by a white gap in the black line in that Cantor-dust graphic.

As we explore more deeply, we’ll find further ‘sameness’ rules that apply to some part of a remaining region of uniqueness; and more rules, and more rules, and yet more rules, until it might seem to the unobservant eye that everything is covered by some rule or other.

And if it is covered by a rule, we can control it, it seems, with absolute precision, absolute certainty. What a relief! – an easy way to scale, with certainty! Even better, if we can find enough rules, then we can control everything! – that’s the belief that Taylorists and IT-obsessives and vendors of ‘business-rules engines’ and the like all so actively promote, anyway.

Which is wrong.

Sorry.

It’s wrong. It’s indisputably wrong, all the way down to fundamental physics. (Which, given that we now know this to be true, also means it’s ethically and, often, legally wrong to pretend otherwise for business purposes – a little fact about which a lot of IT-system vendors need to be a lot more careful…) No matter how much we try to strip it away, some form of ‘noise‘ will always exist – everywhere. There is no way to force everything to sameness – which means there is also no way that we can ever bring everything ‘under control’.

If this isn’t obvious yet, take another glance at the Cantor-dust graphic. Follow the logic: keep dividing each remaining black line into three parts – black, white, black. The further we go into this structural-decomposition, the more white – the more ‘sameness’ – there will seem to be: yet some black, some uniqueness, will always remain – and become harder and harder to see. In the midst of a sea of sameness, there will always be some uniqueness – all the way to infinity.
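For anyone who'd prefer to see that logic run rather than just read it, here's a minimal sketch of the Cantor-dust decomposition. The function names (`cantor_step`, `cantor_dust`, `remaining_length`) are my own, purely for illustration, and it models only the idealised graphic, not any real business context:

```python
from fractions import Fraction


def cantor_step(segments):
    """Split each remaining 'black' segment into thirds and drop the middle one."""
    result = []
    for start, end in segments:
        third = (end - start) / 3
        result.append((start, start + third))  # left black third stays
        result.append((end - third, end))      # right black third stays
    return result


def cantor_dust(depth):
    """Return the black ('uniqueness') segments left after `depth` divisions."""
    segments = [(Fraction(0), Fraction(1))]
    for _ in range(depth):
        segments = cantor_step(segments)
    return segments


def remaining_length(segments):
    """Total length of black still present."""
    return sum(end - start for start, end in segments)


for depth in range(6):
    segs = cantor_dust(depth)
    print(depth, len(segs), remaining_length(segs))
```

At each level the total amount of black shrinks by a third, so it soon becomes very hard to see; yet the number of separate black fragments doubles. The uniqueness never goes away: it just gets scattered into more and smaller places.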

And yet one of the fundamentals of chaos-mathematics is sensitivity to initial conditions. Hence, for example, if a process starts from one of these seemingly invisible bits of uniqueness, its outcome will be inherently unpredictable – especially if we try to force it to follow the rules. Hence the notion of ‘control’ is not merely a delusion – it’s a dangerously misleading delusion…

Ouch…

Just to make things even worse, that metaphor of the Cantor-dust is a huge over-simplification of what happens in the real world. First, unlike in that repetitive pattern of the Cantor-dust, we often have no way to tell when something actually is unique and different, other than by the fact that it doesn’t work as expected according to our ‘rules’ – which can sometimes be a bit too late, especially if we’d assumed everything will follow ‘the rules’. Second, contexts can also change dynamically over time – a reality I’ve described elsewhere as ‘variety-weather’.

So if we were to believe that scalability is only possible with sameness, we’d be in serious trouble: we’d either be trying to force-fit everything into some kind of repeatability-oriented frame such as Six Sigma – which really doesn’t work for any kind of uniqueness – or else we’d just give up and say that scalability is impossible.

Yet if we look around us – especially at those examples above, such as health-care and retail and search – it’s clear that those are indeed being handled at scale. Sometimes at very large scale indeed, such as in the NHS or any large general-hospital (healthcare), Amazon or just any everyday large supermarket (retail), or Google and other online search-providers (search). So clearly it is possible to design and develop some kind of scalability in contexts where, by definition, there’s an inherently-uncertain mix of sameness and difference.

The most common method for dealing with this is implicit in the design of every supermarket: the store provides the sameness, whilst customers are left to deal with all of the difference. It does work quite well in a supermarket: each person has their own needs, but they can sort it out for themselves, making their own selections from the standardised aisles, and helping the store keep everything in balance via scanning and link-up to inventory at the standardised checkout.

No doubt it all looks like it works well, from the service-provider’s (organisation’s) perspective; yet when we view the system as a whole, it’s clear that it only works because the service-users (the customers) are taking up the slack – and that part may not be scalable. If it isn’t scalable – or if we put too much of a load on the service-users – then the system as a whole ceases to be scalable, too.

Hence if the organisation is concerned about overall scalability, it’s definitely in the organisation’s interest to view the system as a whole – outside-in as well as inside-out – and clean up its interfaces, and the relationships between those interfaces, so as to minimise friction across the whole space. To give an example of where this doesn’t work well, see the comment by Sally Bean on my post ‘Control, complex, chaotic’:

I spent the early part of this week navigating between my GP, a private insurance company (courtesy of my husband’s employee healthcare scheme), my physiotherapist and a local diagnostic centre to get an ultrasound scan and ensure the results of it end up in the right place. This requires the patient to actively steer herself through it, by remembering to ask the right questions of each health practitioner they meet about what happens next, and working to the constraints that are in play.

Once again, look at this from a SCAN perspective. Yes, at first glance, SCAN might look like a crude two-axis categorisation-framework, but that isn’t how we’d use it here. Instead, any given context always has elements that can be anywhere within the frame: any combination of real-time versus ‘step back and consider’, and sameness versus uniqueness (or high-repeatability versus low- to no-repeatability) – and often changing dynamically in apparent-location within that frame as well.

The point that SCAN makes here is that with each element, we need to use the tactics that match that element’s respective ‘location’ on the frame: rules for high-repeatability at real-time, principles for low-repeatability at real-time, and so on.
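As a rough illustration of that matching of tactics to ‘location’, here's a small sketch. Only two of the pairings come from the text above (rules for high-repeatability at real-time, principles for low-repeatability at real-time); the names `Mode`, `scan_mode` and `tactic_for`, the placement of Complicated and Ambiguous on the ‘step back and consider’ side, and the tactics shown for those two modes are all assumptions made for the example:

```python
from enum import Enum


class Mode(Enum):
    SIMPLE = "Simple"
    COMPLICATED = "Complicated"
    AMBIGUOUS = "Ambiguous"
    NOT_KNOWN = "Not-known"


def scan_mode(real_time: bool, repeatable: bool) -> Mode:
    """Place one element of a context on the frame, at this moment."""
    if repeatable:
        return Mode.SIMPLE if real_time else Mode.COMPLICATED
    return Mode.NOT_KNOWN if real_time else Mode.AMBIGUOUS


TACTICS = {
    Mode.SIMPLE: "rules",            # stated in the text
    Mode.NOT_KNOWN: "principles",    # stated in the text
    Mode.COMPLICATED: "algorithms",  # assumption for illustration
    Mode.AMBIGUOUS: "guidelines",    # assumption for illustration
}


def tactic_for(real_time: bool, repeatable: bool) -> str:
    """Pick the tactic that matches the element's current location."""
    return TACTICS[scan_mode(real_time, repeatable)]


print(tactic_for(real_time=True, repeatable=True))
print(tactic_for(real_time=True, repeatable=False))
```

The lookup is done per element and per moment, not once for the whole context: the same element may need a different tactic later, as its apparent location shifts.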

Now apply the same kind of thinking as with the Cantor-dust: identify any ‘sameness’ amidst all of the ‘difference’ – especially where such ‘sameness’ lends itself to automation and any of the other Simple tactics for scalability. And – again as with the Cantor-dust – remember that this is fractal: it recurs in self-similar forms at every level and every scale, yet rarely so in quite the same way each time.

At first glance this process might look like a standard service-decomposition, but there’s a subtle yet crucial difference:

- always look for the samenesses within apparent difference

- always look for the differences within apparent sameness

- always maintain the view of the whole as whole – samenesses and differences, together

In other words, we don’t do what happens far too often in IT-projects and other automated ‘business-process reengineering’, namely design only for the easily-automatable bits and discard the rest as ‘Somebody Else’s Problem‘… Yes, we do do a sort-of-partitioning into sort-of parts, yet it’s also always a whole-as-whole, to provide a seamless, joined-up customer-journey and suchlike.

Perhaps the crucial point to understand is that scalability depends on applying the right tactics to each element in the respective element-mode at that time – Simple, Complicated, Ambiguous, Not-known. We can’t scale if we treat everything as different, unique; but we also can’t scale if we try to apply ‘sameness’ to things that aren’t the same, because the resultant failures and ‘failure-demand‘ will slow everything right down to a crawl. And, of course – just to make this even more fun – many of these ‘right tactics’ will change dynamically over time as well. In other words, not easy: but a lot easier – and also a lot more efficient, more reliable, more effective – than pretending that the need for that balance doesn’t exist.

So, the quick summary:

- scalability is easiest to apply with automation, which in general works best with things that remain always the same

- in that sense, much of scalability does depend on sameness

- anything involving real people or real-world contexts will always incorporate some significant extent of uniqueness

- since sameness is inherently and inextricably interwoven with uniqueness, the challenge is to tease out the samenesses, and apply scaling to those samenesses, without disrupting the integrity of the whole-as-whole

The next part, then, is to take that challenge further, into what we need to design and do in order to achieve real and reliable scalability in real-world contexts.

Practical implications for enterprise-architecture

Before going further, you might find it useful to read some of my previous posts on these themes, such as:

- Tackling uniqueness in enterprise-architectures (June 2010)

- Inside-in, inside-out, outside-in, outside-out (June 2012)

- Same and different (October 2012)

- On chaos in enterprise-architecture (November 2012)

- Control, complexity and chaos (December 2012)

- Metrics for qualitative requirements (April 2013)

- Requisite-fuzziness (April 2013)

Thence, some key principles for assessment and design:

— Acknowledge the reality of difference – don’t pretend that everything is the same, or can somehow be reduced solely to sameness. If we delude ourselves into thinking that difference doesn’t exist, we set ourselves up for guaranteed failure at some supposedly-‘unexpected’ or ‘unpredictable’ point in the system. (To be frank, it’s terrifying just how many process-designers – especially in the IT-oriented space – fall flat at this very first hurdle…)

— Acknowledge different types of difference – it perhaps sounds obvious, but many people somehow seem to package all difference into one basket, as if it’s just a different kind of sameness. Every type of difference needs to be handled in a somewhat different way – which implies that there would need to be an infinity of ways to handle each context, and which in turn might seem to imply that scalability is impossible. In practice, though, we’re helped by two key points:

- there are ‘samenesses’ within the range of difference – as implied in the Cantor-dust diagram – that can be leveraged to enable some forms of scalability

- the more that a process or mechanism supports self-adaptation for tolerance to variation, the more difference it will be able to absorb, and the more scalability it will thence enable

An everyday example of self-adaptation is in the feed-mechanism for an office printer: it has to be able to cope with paper or card with a range of different thicknesses, different textures, different weights, different dimensions, and so on. In general, the more variance it can cope with, the more useful it becomes.

By contrast, many IT-systems, and especially most IT-modelling notations, allow for precisely zero variance in descriptions, inputs and outputs. In practice, the only way they can deal with real-world variance is by externalising it elsewhere, as Somebody Else’s Problem. That externalisation thus becomes an invisible brake on scalability of the overall system.
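To make that contrast a bit more concrete, here's a toy sketch of the printer-feed example. Everything in it is hypothetical: the `Sheet` class, the two feed functions and the thickness figures are invented for illustration, not taken from any real printer or IT-system:

```python
from dataclasses import dataclass


@dataclass
class Sheet:
    thickness_mm: float


def strict_feed(sheet, expected_mm=0.10):
    """Zero-variance design: anything not exactly as specified is rejected."""
    return sheet.thickness_mm == expected_mm


def tolerant_feed(sheet, min_mm=0.05, max_mm=0.30):
    """Self-adapting design: accepts anything within a working range."""
    return min_mm <= sheet.thickness_mm <= max_mm


def externalised(sheets, feed):
    """Sheets the mechanism can't handle, left for someone else to deal with."""
    return [s for s in sheets if not feed(s)]


# Hypothetical mix of paper and card
sheets = [Sheet(0.10), Sheet(0.08), Sheet(0.25), Sheet(0.50)]

print(len(externalised(sheets, strict_feed)))
print(len(externalised(sheets, tolerant_feed)))
```

The strict version pushes three of the four sheets out as someone else's problem; the tolerant one pushes out only the one that really is outside its range. Whatever gets pushed out still has to be handled somewhere, and that ‘somewhere’ is where the hidden brake on scalability sits.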

— Acknowledge the need to manage samenesses and differences as a unified whole – don’t attempt to split them apart, or just do the ‘easy bits’ of sameness and ignore everything else. (The same risks around IT-centrism and suchlike apply here too.)

— Identify the impact of each type of difference on scalability – and thence the design-options that they enable or prevent within the overall system-design.

For example, the core principle of a supermarket is that it provides a wide range of choice, for a wide range of people, with the minimum friction for the customer in the overall process – in other words, high-scalability of high-variation. It does this by focussing on managing the differences in the pre-retail supply-chain, and simplifying them down into the samenesses within the store itself – logistics, store-layout, aisle-labelling, in-store collection (basket or trolley), checkout – whilst leaving the differences of individual selections to the customers themselves. (This is different from, say, a fast-food retailer, where the store achieves scalability by constraining the choices that customers can make.)

However, part of the usual supermarket business-model consists of getting people to buy more than they need. One of the popular tactics to trick people into doing this is to move things around in the store, so that customers are forced to hunt around for what they need, and hence might ‘accidentally’ be enticed into making other purchases. In effect, the supermarket changes its own apparent trade-off between sameness and difference in the customer-experience, by introducing more difference over time. This sort-of works, commercially speaking, but there’s a high risk that the balance can go too far onto the difference side: the loss of repeatability from the customer’s perspective can easily translate into confusion, frustration and annoyance, and in quite a few cases they will give up coming to the store at all. (For food-purchases, my elderly mother has largely switched from Marks & Spencer to Waitrose for exactly this reason.)

In short, there are trade-offs and impacts here that we need to understand, if we wish to avoid the wrong kind of ‘unintended consequences’…

— Identify types of change in each context, and their impacts on scalability – particularly the less-visible types of change such as pace-layering and variety-weather. The point here is to work with the variance of variance itself: pace-layering is about the way in which different elements of a context have different rates of change, whilst the concept of ‘variety-weather’ extends this to include a stronger understanding of how a context can seem predictable at one time but be highly variable at another – variability not just in change over time, but variability in the rate of change itself.

— Identify methods for design to adapt to the respective variance – these will depend on the needs and content of the context, of course, but the key point is to design for variance, rather than pretend that it doesn’t exist.

— Identify methods and designs to minimise friction from variance – for which tactics such as user-centred design, outside-in modelling, user-experience modelling and customer-journey mapping will all be essential.

One recent article I liked on this is Justin McDowell’s ‘Enterprise Architecture In The Wild – Scaling Pinterest’:

“In the span of two years they’ve grown from zero to tens of billions of page views per month and from 2 founders and one engineer to over 40 engineers. How do you scale for that growth, how does your architecture rapidly evolve, and what lessons can you learn from the experience?”

(What I particularly liked about the article was that it explored both the technical aspects of scaling and the human aspects: “all enterprises (and enterprise architectures) fail in a similar manner, particularly when they are not designed for humans.”)

I’d also strongly recommend Milan Guenther’s book ‘Intersection’ as an excellent overview of the whole scope of disciplines and methods that can be brought to bear on an overall context.

— Identify methods to guide and manage the activities in the context – the key point here being that these need to align with the respective types of scalabilities and uncertainties in that context, and not try to force everything to fit one single model of algorithms or rules.

Here, unfortunately, we hit up against a century’s-worth of assumptions about how business operates – assumptions about ‘control’ and the like that do work well when everything is very stable and very predictable, but don’t work well for anything that doesn’t happen to fit within those particular constraints. Of which the latter happens to be the case in most of present-day business…

‘Control’ is the core concept behind Taylorism and most current approaches to management. Unfortunately, as we’re now discovering the hard way, ‘control’ is a myth: it doesn’t ever exist in the real-world – at least, not in the sense that those business-models and methods seem to expect. Which is why we get landed with fundamentally wrong-headed ideas such as “our strategy is last year +10%”, or ‘target-based performance-management’. As soon as we’re dealing with uniqueness, the concept of prediction ceases to make sense: it really is as simple as that. But it’s not easy to get most control-oriented managers to understand this… and the consequences are frequently dire.

The real problem with ‘control’ and ‘prediction’ and the like is that not only does it inherently tend to fail, but it then assumes that the reason for the failure is ‘not enough control’ – which is exactly what not to do in that type of context. (In SCAN terms, a failure by definition shunts us over to the right-hand or ‘not-known’ side of the frame, for which we need to apply techniques for the ‘not-known’ space – not those that only work with repeatability on the far-left side of the frame.) By refusing to relinquish ‘control’ when ‘control’ can’t work, conventional management-techniques not only cause failure, but prevent access to techniques that can work in those contexts – driving a downward-spiralling descent into a full-blown disaster-area, yet without ever understanding why…

Probably the classic error here is misuse of targets – which seems endemic throughout almost every organisation, especially in the public sector. On why targets are a really bad idea in contexts with high inherent-uniqueness, see Ian Gilson, ‘What Poses More Danger To The NHS; Dirty Data Or Dumb Leadership?‘. As Ian suggests, see also just about anything by John Seddon’s Vanguard Group, the brilliantly wry ‘Systems Thinking For Girls’, or the irrepressible and always-amusing Simon Guilfoyle, aka ‘Inspector Guilfoyle‘. To get out of that kind of mess – or preferably avoid it in the first place – we need to identify metrics that actually mean something, and that don’t collapse back into meaningless ‘targets’: for good advice on this, see Simon Guilfoyle’s post ‘Spot the difference‘.

— Always provide methods to handle variances that don’t fit expectations – because (to take a somewhat Fortean view) Reality Department indicates that there will always be something that doesn’t fit…

Although there will always be limits on resources and limits on time for scalability, probably the key takeaway from all of this is that the primary limit on scalability is failure and failure-demand: each time something fails, we have to slow down or stop to tidy up the mess, and the failure also uses resources that could otherwise have been used to improve efficiency and/or effectiveness at scale.
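A very crude way to see how failure-demand eats into scale is a bit of back-of-envelope arithmetic. The model below is my own simplification, not a measured result: it assumes a fixed capacity per period, a fixed fraction of items that fail, and a fixed clean-up cost per failure (expressed in ‘items of work’), and the function name `effective_throughput` is made up for the example:

```python
def effective_throughput(capacity, failure_rate, rework_cost):
    """Items completed per period once failure-demand is paid for.

    capacity     -- items per period if nothing ever failed
    failure_rate -- fraction of items that fail (0.0 to 1.0)
    rework_cost  -- clean-up effort per failure, measured in 'items of work'
    """
    if not 0.0 <= failure_rate <= 1.0:
        raise ValueError("failure_rate must be between 0 and 1")
    # Each item costs 1 unit of work plus its expected share of clean-up.
    return capacity / (1 + failure_rate * rework_cost)


# Hypothetical figures: capacity of 100 items, each failure costs 4 items of work
for rate in (0.0, 0.05, 0.25, 0.5):
    print(rate, effective_throughput(100, rate, rework_cost=4))
```

Even in this toy version, a one-in-four failure-rate with a moderately expensive clean-up halves the real throughput, without any change at all in nominal capacity. Real contexts will be messier than this, since failures tend to cascade and the clean-up cost is rarely fixed.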

And in turn, the primary cause of failure and failure-demand is attempts to use ‘control’-type methods in contexts that are inherently complex, chaotic or unique. It won’t work; it doesn’t work; by definition it cannot work; and yet people still try to do it, time after time after time. An interesting example in itself of misplaced ‘sameness’, perhaps?

Leave it at that for now: over to you for comment, perhaps?

I need to reread this several times, as there’s a great deal here to digest, but as far as I can tell there’s no mention of the relevance of the design and manufacturing concept of “mass customization”.

Just something that struck me immediately. More later perhaps, after I’ve had some time to think about this.

len.

@Len: “…there’s no mention of the relevance of the design and manufacturing concept of ‘mass customization’”

Oops… you’re entirely right, of course… 🙁

(In my defence, there’s only so much that I can cram into a post before it becomes book-length, but yes, that was definitely a ‘biggie’ to have missed… Oh well, can’t get everything right, can I? (or anything right, sometimes?) 🙁 🙂 )

Tom writes:

Oh well, can’t get everything right, can I? (or anything right, sometimes?)

How’s that old saw go — “the perfect is the enemy of the good enough”?

This may not be perfect, but it’s certainly more than good enough!

len.