Problem-space, solution-space and SCAN

Uh-oh, Ric Phillips is back…

And I do mean that as a compliment! 🙂 Too often, when people ‘get it wrong’, it’s because they haven’t bothered to read the description, or to think, or both. But when someone like Ric – a real master of simplicity in enterprise-architecture – ‘gets it wrong’, it’s much more likely to be my fault than his: that I haven’t explained it well enough. Which means that, yeah, I do need to have a quick go at this one, to see if I can explain it any better.

Here was Ric’s comment to my previous post ‘Can complex systems be architected?’, talking about the SCAN framework and its depiction of complexity:

What is the thinking behind the selection of complexity as a function of time over certainty?

It does rather give the impression that levels of disorder are a psychological product. That doesn’t scan (no pun intended) with the characterisation of complexity as a state of affairs distinct from complication or confusion.

How does one make an objective assessment of the levels of certainty that pertain to any organisational system (leaving aside for a moment the deeply interesting question you have discussed about where to draw the boundaries of the system itself)?

What??? – well, that’d be my first response, anyway: to me, in at least two places there, he’s completely, completely, completely missed the point. And yet, wait a minute, hang about, this is one of the sharpest guys I know: if he’s missed the point, then even at best that point is really seriously ‘hidden in plain sight’ – so well ‘hidden in plain sight’ that it can’t be seen. Oops… definitely my fault… sorry…

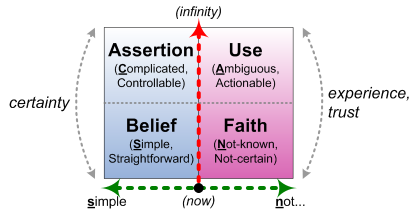

So… kinda start again, then. Let’s start with the diagram in question:

And in essence, what Ric’s done, in his first sentence above, is to ‘place’ complexity ‘in’ the upper-right region, marked ‘Ambiguous but Actionable’:

What is the thinking behind the selection of complexity as a function of time over certainty?

I can understand how people could interpret what I’ve said before about complexity in this way. And it’s even more likely to happen with people who’ve previously come across the Cynefin framework, which – as I (mis?)understand it, anyway – really does ‘place’ specific types of ‘state of affairs’ (to use Ric’s term) in specific ‘domains’ of the framework.

But it’s not what I mean here, at all – and although the difference can be horribly subtle, it’s absolutely crucial in terms of getting real value from what SCAN can actually show us. If you don’t ‘get’ this point, SCAN falls back to looking like just another simplistic two-axis categorisation-framework. Which, yeah, is sort-of useful for some things, but nothing special: there are plenty of alternatives for that kind of use – Cynefin being one of them, of course.

Instead, there are two keys to getting the best use out of SCAN:

- it’s a metaframework, not a framework: its value comes from the way we use it – meld it, change it, work with it – much more than from what it shows on its nominal surface

- as a metaframework, it applies to itself – for example, we can apply any or all of those principles listed in the diagram above to the framework itself, and while we’re using it

Yeah, that kind of recursion can get tricky… but that’s where the tool’s real power resides. If you can get your head around it, it’s enormously powerful: it can be really simple – it’s just a two-axis framework – yet almost literally as rich as you could possibly want it to be.

The other crucial, crucial point here is that it’s a tool for context-space mapping. It’s not a map of the problem-space, as such; it’s not a map of the solution-space, as such; it’s a framework – graphic(s) and method combined – to help map out the overall context-space, both ‘problem-space’ and ‘solution-space’, both at the same time, and dynamically changing over time.

Perhaps even more, it’s a means to map out how we perceive and interact with our own understandings of and actions in that context-space. That’s why I describe it as ‘a framework for sensemaking and decision-making’: we use it as an active guide within a kind of interactive dance with the context, making sense of something, deciding what to do, doing, making sense, deciding, doing, in just about any order or sequence or recursion of making sense, deciding and doing.

Which, in a way, should help to answer Ric’s second paragraph:

It does rather give the impression that levels of disorder are a psychological product. That doesn’t scan (no pun intended) with the characterisation of complexity as a state of affairs distinct from complication or confusion.

The answer is, yes, both are true. In physics at least, there is “complexity as a state of affairs distinct from complication or confusion”; yet when we’re dealing with sensemaking and decision-making, then yes, perceived “levels of disorder” are indeed “a psychological product”. (Another important subtlety here, though, is Cynthia Kurtz’s concept of ‘unorder’ – in effect, ‘a state of affairs not amenable to (the usual notions of) order’ – which is not the same as ‘disorder’.)

And it also answers the question in Ric’s third paragraph:

How does one make an objective assessment of the levels of certainty that pertain to any organisational system?

– because the short answer here is that when we’re dealing with the type of context-space that we usually work on in most enterprise-architectures and the like – a complex sociotechnical system – there’s probably no such thing as “an objective assessment”: it’s always going to be some kind of complex dance between ‘objective’ and ‘subjective’. Which, in turn, also covers the second half of that last paragraph:

(leaving aside for a moment the deeply interesting question you have discussed about where to draw the boundaries of the system itself)

– because, again, the process of identifying ‘the boundaries of the system’ is itself as recursive and fractal as anything else in this context.

As an aside, this comment just came through from Kai Schlüter, which probably illustrates the point about ‘objective’ versus ‘subjective’ when working on a context-space map much better than I could:

I like this statement: “Almost by definition, ‘objective assessment’ is somewhere between unlikely and impossible within a sociotechnical complex-system.” and yet, it is possible to create a consensus based ‘subjective assessment’ where all people in the room agree to the statement, which is close enough to objective to be really useful, because the involved people are buying into the assessment. You have written yourself about it in the ‘SCAN as decision dartboard’ post which I use by (mis)using SCAN to come to a fast assessment on Past, Present and Future to drive decisions in the needed speed (typically time boxed to one hour, plenty challenges to look at in that one hour). I do though never try to look for the perfect truth, just a barely good enough version works.

(See also the last part of the post ‘SCAN – some recent notes’ for more detail and links on how Kai uses SCAN for high-speed sensemaking and decision-making in a very busy multi-project software-development / maintenance context.)

One more point, and then I’d better stop for now. The start-point is a revisit of Ric’s first sentence above:

What is the thinking behind the selection of complexity as a function of time over certainty?

The key here is that the vertical axis in SCAN isn’t a metric of time as such: it’s time-available-before-moment-of-action. In a sense, it’s a measure of how much time we have available to sit back and make sense of something before we have to act on a decision that arises from that sensemaking.

When we’re further away from the moment-of-action, we have at least some time to carry out some kind of analysis and/or experiment. But when we get right up close to the action, there is no time to think – we have to ‘do’. And yet, even right up close to and in the moment-of-action, there is still a form of sensemaking and decision-making going on – but it isn’t the same as the one we use for analysis and experimentation. In that sense, yes, sensemaking and decision-making in relation to complication (such as analysis) and VUCA-complexity (such as experimentation) are qualitatively different from those used at or close to the moment-of-action – in the same way, in turn, that sensemaking and decision-making are qualitatively different on either side of that ‘boundary of effective-certainty’ across the horizontal-axis. This is illustrated in one of the early variants of SCAN:

(To be honest, the labels in each domain there probably take more explanation than they’re worth, but at least it illustrates the point. 😐 )

Perhaps more usefully, we can also see much the same happening in the Five Elements set (another metaframework that’s equally fractal and recursive). Each of the ‘domains’ here has a significantly-different perspective on time: starting from Purpose, they’re respectively far-future, people-time, near-future, NOW!, and past. But they also have significantly-different emphases in terms of sensemaking and decision-making, which in some ways also correspond to the use of very different parts of the brain:

If we cross-map that to SCAN, the main parallel is with the ‘Preparation’/‘Performance’ (‘think’) and ‘Process’ (‘do’) domains, which respectively line up above and below the ‘transition from plan to action’ boundary on the SCAN vertical-axis. They involve different thinking-processes, different sensemaking, different decision-making, and, to a significant extent, different forms or modes of ‘doing’. In effect, the whole Five Elements cycle – starting from ‘Purpose’ – runs from a potentially-infinite time-before-moment-of-action, all the way down the vertical-axis of the SCAN frame to the moment-of-action at ‘Process’, very briefly dips beyond that, as ‘the past’, at the moment between ‘Process’ and ‘Performance’, and then runs all the way back up again, connecting past with intent or initial-purpose. (We just need to remember, again, that the whole thing is highly fractal…)

Yeah, I know, it’s really hard to explain just in words-and-pictures-on-a-page, and I’ve possibly made it worse in this yet-another-long-ramble – but I hope it clarifies it a bit, at least?

Over to you, anyway.

Tom, I’m so pleased to read this after the first two posts in your recent rebirth (I may have missed a couple). Having a debate with the IT-centrists has only the negative value of winding yourself (and those of us who agree with you) up. The other folks are either not aware of your argument or don’t care. Well OK, there’s a small number who agree with the core but object to the argument.

This post is so much more valuable, because it provides useful and usable material, which strikes a chord not just with your supporters in the debate but people who don’t even know there is a debate. There’s an emerging group of enterprise architects (who probably wouldn’t even call themselves that) that don’t need the debate, because IT-centrism (assuming they even know it exists) is obvious nonsense to them.

So please keep this up. Thanks!

Many thanks for this, Stuart. And thanks also because it’s also sent me back to revisit another post I’m working on right now (about the misleading concept of ‘layers’ in EA) to go through it again and restructure and/or rewrite any parts that sound like they’re ‘whingeing’ again. What’s needed is tools that do work, rather than merely complaining about the ones that don’t. :wry-grin: