Learning and the limits of automation

One of the themes that came up in the Vlerick Business School session on EA-roadmaps was how long it takes to develop the skills needed for enterprise-architecture – and how and why to learn them, too.

One example that came up was a team that had taken 18 months to complete its first pass through the architecture-cycle. People complained, loudly, that it had taken too long and had delivered little apparent business-value. This is the point at which, far too often, the plug is pulled on enterprise-architecture in an organisation. Fortunately, in their case, they had an executive sponsor who did understand what was going on, and who supported them through those challenges. That meant they did get the chance to do another iteration – one that took less time and delivered more business-value, because they had been able to learn from their previous experience.

In short, enterprise-architecture is a skill – or rather a set of skills that revolve around one core idea: that ‘things work better when they work together, on purpose’. And as with all skills, it takes time and real-world experience to learn – in this example, the skill of getting things to work better and work together, on purpose.

By developing skill within a context, we also develop the ability to handle that context’s unpredictability, variance or change: not just a ‘nice to have’, but often utterly essential for survival and more. A direct corollary, though, is that to learn that skill we also need the time to do so, and a ‘safe-enough’ yet still real-enough context in which we can develop experience without causing too much damage and disruption along the way. We could summarise all of this in SCAN terms as follows:

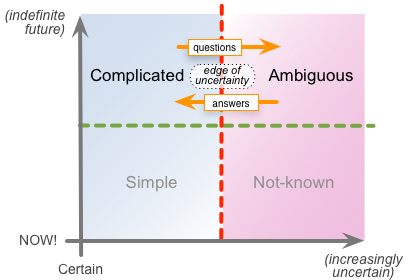

In SCAN terms, much of the learning – developing an understanding of the value of theory, yet also its limitations – takes place in the interaction between the ‘upper’ domains, Complicated and Ambiguous, across a crucial context-boundary that we might describe as ‘the edge of uncertainty’:

The catch, as we can see in both the diagrams above, is that developing skill not only takes time, but much of it necessarily takes place away from the point-of-action, the point within an organisation’s business-model where service and value are delivered, and profit returned.

The result is all too predictable: an urgent hunt in most organisations for anything that could obviate the need for that ‘inefficient’ period of skills-development. Classically, this takes two forms, often in parallel with each other:

- let someone else cover the costs, by buying-in existing skills

- use automation to try to bypass the need for any skill at all

In other words, the supposed aim is that we should end up with a bunch of automation, a few trainee-level button-pushers who don’t (in fact shouldn’t) have to think about what they’re doing, and a very small number (preferably zero) of people with master-level skills who can reprogram the machines and fix any oddities that happen to come their way. In SCAN terms, it looks a bit like this – machines and trainee-level operators alike following Simple rules, and the one or two people with Master-level skills who can help trainees respond to and recover from a transit over ‘the edge of panic’ into the Not-known:

That whole ‘automate-everything’ approach is very, very popular with – or at least openly much-desired by – just about every modern-day manager. Yet it’s not just a modern-day malaise: in fact it’s been popular since the very first ‘automation’, when someone first got innovative enough to rid themselves of that whole tedious effort and nonsense of nomadic hunting and gathering – I mean, in the name of the gods, now we have farming to feed us all, who needs the skills of the hunter any more? (Yeah, we really do need to hear the quiet cacophony of hidden “Oops…” there: the list of cultures and civilisations that have collapsed in the face of climate-change and farming-failure is very long…)

The assumption and ideal, of course, is that the automation never fails: it’s perfect, and once it’s started, we never need to do anything else. Everything under absolute control, absolute certainty, everywhere, forever – the archetypal manager’s perfect dream! Plenty of people to pocket the profit from pushing that dream, too – whether realistic or not…

And the blunt reality is that that dream is not realistic: in fact it’s best described as ‘wishful thinking’, usually of the most dangerous kind – the kind of dream that turns into a really nasty nightmare, because Reality Department is just not that unchanging, certain or predictable, nor is it that forgiving of complacency, laziness or self-delusion. In the real world, when (not ‘if’) the automation fails, and we’ve lost all of the skills behind it, we’ll have nothing left with which to recover – and we probably won’t be able to rebuild those skills in time, either. (Yeah, definitely “Oops…”)

An excellent article on the US National Public Radio website, ‘Hands Free, Mind Free: What We Lose Through Automation’, warns about the real results that arise from this:

As a result of autopilot, though, pilots aren’t getting enough practice in manual flying. So when something bad happens, pilots are rusty and often make mistakes.

Want a real-world example? We need look no further than the tragedy of Air France Flight AF447 (official report). That’s what happens when people get too reliant on the automation, and don’t have the requisite skills to take over in a hurry when the automation fails.

But note that it isn’t the automation itself that’s the problem here: the same kind of automation that was a key factor in the failure of Air France 447 was also a key factor in the success of the US Airways 1549 ditching – the so-called ‘Miracle On The Hudson’ – because the self-levelling technology of the Airbus flight-control allowed Sullenberger and his crew to concentrate fully on the water-landing itself. The crucial difference between those two incidents was that Sullenberger and his crew were able to cross over ‘the edge of panic’ that hit when the automation alone was not enough; on the Air France flight, the crew were not able to make that jump – they each individually had the requisite experience, perhaps, but not the skills to work together as a crew to resolve the loss of automation in a real-world crisis.

To put it at its simplest, the real killer is complacency: the complacency that arises from the delusion that once the automation exists, we never have to think again. Even in the aviation-industry, we’re seeing assertions that Sullenberger and his ilk are ‘the last of their kind’, that their skills are no longer needed:

“Twenty-five years ago, we were a step below astronauts,” says one veteran pilot. “Now we’re a step above bus drivers. And the bus drivers have a better pension.”

But Reality Department has other ideas about that – it always has ‘other ideas’, beyond any comforting delusions of certainty or ‘control’, whether we wish it so or not. And the only way to cope, when Reality Department does throw us into the Not-known, over the far side of ‘the edge of panic’, is to have the requisite skills available to make sense of that Not-known, make the right decisions in that Not-known, and take the right actions within that Not-known – right here, right now. If not, we’re dead – metaphorically if not literally. Which means that whenever there’s some form of automation in use, the skills to override and take over from that automation need to be there, and be available at all times – even though, or even because, the automation exists. As another quote from the ‘Hands Free, Mind Free’ article puts it:

If the car of the future will make decisions for us, how will it decide what to do when a collision is unavoidable and a computer is in charge of the steering? “You have to start programming difficult moral, ethical decisions into the car,” Carr says. “If you are gonna crash into something, what do you crash into? Do you go off the road and crash into a telephone pole rather than hitting a pedestrian? … Once we start taking our moral thinking and moral decision-making away from us and putting it into the hands not of a machine really, but of the programmers of that machine, then I think we’re starting to give up something essential to what it means to be a human being.”

The other blunt reality is that if we rely on the presence of those with existing Master-level skills, with no means to replace them, then over time we’ll lose even those skills too. People leave; people retire; people die. When that happens, what we’d be left with is Simple-level automation and Simple-level button-pushers, with no means whatsoever to handle Reality Department’s inevitable excursions into the Not-known: over ‘the edge of panic’ indeed, when that happens…

It no doubt looks like a winner: avoid all of the costs and disruption and ‘inefficiencies’ of skills-development – or, if absolutely essential, find some way to make them ‘Somebody Else’s Problem’ – and then reap all of the profits from those savings. But in reality it’s a huge risk that is almost guaranteed to eventuate in the medium-to-long term: a specific class of risk called a ‘kurtosis-risk’, which, when it eventuates, wipes out all of the gains made from ignoring that risk, and more.

Another key kurtosis-risk here occurs wherever potential skills-loss arises from inadequately-thought-through outsourcing. In the US a couple of decades back, for example, there was a huge rush to ‘save money’ by outsourcing much of the manufacturing to China, retaining only the design-capability in the US. This worked well, for a while – ‘well’ in the sense of greater short-term monetary-profit, at least – but quite suddenly the design-quality from the US went steeply down, at the same time as design-quality from China went steeply up. (I’m old enough to remember that much the same happened with outsourcing to post-war Japan: for a long time the quality was low, dismissed as ‘cheap Japanese rubbish’, but by the 1970s it suddenly changed to perhaps the highest quality in the world. Deming’s work might have had something to do with that, of course…) The key reason for the change in design-capability and design-quality, for both the US and China, is that development of design-capability depends on ‘thinking with the hands’ – direct hands-on engagement in the work, yet also in ‘safe-fail’ contexts just one step removed from front-line work. Or, in SCAN terms as above, the direct, personal, hands-on interaction between Complicated and Ambiguous, with brief deep-dives down into Simple (to do the work) and/or Not-known (to experience the real-world outcomes from that work).

We need to remember, too, that the Not-known is, by definition, the only place where new ideas, new information, new innovation, can arise. We apply those ideas in the Simple domain, but the ideas themselves arise from pretty much exactly where Simple least wants to be – a point nicely illustrated in this Tweet by Alex Osterwalder:

“Individuals and companies go into slow decline when their appetite for panic slackens.” (Alain de Botton, quoted by Alex Osterwalder)

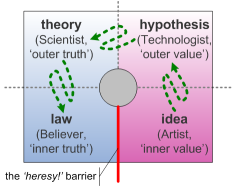

There’s another SCAN crossmap that likewise illustrates the sequence in which we identify and apply new ideas:

Yet notice that in some ways this goes the opposite way to skills-development: it takes a moment of Mastery to let go enough into new ideas, and to bring them back for experiment and test. In that sense, yes, skills-development is recursive, fractal – as is the full development and exploitation of new ideas. But crucial stages of skills-development, going one way, and idea-development, going the other, both depend on periods and contexts where we’re somewhat removed from the action – places that automation alone cannot reach.

If we don’t develop ideas, we won’t be able to adjust to change. If we don’t develop skill, we never gain the benefits of that skill. And no skill within a context equals no ability to handle that context’s volatility, variance or change: not a good idea…

In short: automation is useful, but we need to be aware of its limits – and of the learning we need so as to compensate for those limits, too.

Implications for enterprise-architecture

Whenever any kind of automation – IT-based or otherwise – is under consideration for use within an architecture, always remember to include the following check-points:

- Given the total system within which the automation is to operate, what are the elements of that system that the automation would not cover?

- What processes, services and skills would be required to cover the parts of the overall system that the automation would not cover?

- What processes, services and skills would be required to take over from the automation when (not ‘if’) the automation fails, or comes across contexts which it is not competent to cover?

- What signals would be required to warn that automation has either failed or moved ‘out-of-competence’ for the respective real-world context? In case of such failure, how fast will each type of transition need to be?

- What processes, services and skills would be required to handle the transition to non-automated operation, and return from non-automated operation – including any update of records and the like, from the period of non-automated operation?

- What impacts would such transitions to or from non-automated services have on overall service-delivery contracts and service-level agreements?

- What processes, services and skills would be needed to maintain the automation?

- What processes, services and skills would be needed to amend, design and develop the automation, and to cover those aspects of the total system not covered by the automation itself, in response to changing business contexts and business needs?

- Given the list of skills identified in those questions above, how would those skills be developed and maintained? How would tacit narrative-knowledge and ‘body-knowledge’ – ‘learning with the hands’ – be transferred and maintained across the generations?
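To make a few of those check-points more concrete for IT-based automation, here is a minimal Python sketch under loudly-stated assumptions: the class `GuardedAutomation`, its ‘competence envelope’ (modelled here as nothing more than a numeric input range), and the functions `auto_step` and `manual_step` are all hypothetical illustrations, not any real product, library or standard. It shows two of the signals asked about above (the automation moving ‘out-of-competence’, and the automation failing outright), a hand-over to a non-automated fallback in both cases, and a log of each transition that could later be used to update records on the return to automated operation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class GuardedAutomation:
    """Hypothetical wrapper: runs an automated step only inside its declared
    competence envelope, and hands over to a human-operated fallback otherwise."""
    automated: Callable[[float], float]        # the automation itself
    manual_fallback: Callable[[float], float]  # the skilled, non-automated process
    min_input: float                           # lower edge of the competence envelope
    max_input: float                           # upper edge of the competence envelope
    # Record of every hand-over, kept for later reconciliation of records.
    transitions: List[Tuple[float, str]] = field(default_factory=list)

    def handle(self, value: float) -> float:
        # Signal 1: the context is outside what the automation was designed for.
        if not (self.min_input <= value <= self.max_input):
            self.transitions.append((value, "out-of-competence"))
            return self.manual_fallback(value)
        try:
            return self.automated(value)
        except Exception as exc:
            # Signal 2: the automation itself has failed.
            self.transitions.append((value, f"failure: {exc}"))
            return self.manual_fallback(value)


def auto_step(x: float) -> float:
    # Hypothetical automation with a simulated fault at one input.
    if x == 13.0:
        raise RuntimeError("sensor fault")
    return x * 2


def manual_step(x: float) -> float:
    # Hypothetical stand-in for a person doing the same job by hand.
    return x * 2


g = GuardedAutomation(auto_step, manual_step, 0.0, 100.0)
results = [g.handle(v) for v in (5.0, 13.0, 250.0)]
print(results)        # [10.0, 26.0, 500.0]
print(g.transitions)  # [(13.0, 'failure: sensor fault'), (250.0, 'out-of-competence')]
```

The design choice worth noticing is that `manual_fallback` is a required argument: the sketch cannot even be constructed without naming who or what takes over, which is the point of the check-points above. The code can only route work to that fallback; it does nothing to develop or maintain the skills behind it.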

In short, know the limits of automation, and design for the learning needed for all of those contexts not covered by automation – rather than pretending that they don’t exist, or are ‘Somebody Else’s Problem’.

Remember that whilst solution-architecture or domain-architecture may have convenient boundaries where they can seemingly handball the problem to an imagined ‘Somebody Else’, at the enterprise-architecture level there is no ‘Somebody Else’: by definition, the working of the enterprise as a whole is always our problem – so whenever someone plays ‘Somebody Else’s Problem’, we are that ‘Somebody Else’! Tricky… but that is what the job entails, after all.

Leave it at that for now: over to you for comment, perhaps?
