Decision-making – linking intent and action [4]

How is it that what we do doesn’t necessarily match up with what we plan to do? How can we best ‘keep to the plan’? Or, alternatively, how do we know how to adapt ‘the plan’ to a changing context? What governance do we need for this? How do we keep everything on-track to intent? And what implications does this have for our enterprise-architectures?

What we’ve been looking at in this series of posts is a key architectural concern: at the moment of action, no-one has time to think. Hence to support real-time action, the architecture needs to support the right balance between rules and freeform, between belief and faith, in line with what happens in the real-world context. And it also needs to ensure that we have available within the enterprise the right rules for action when rules do apply, and the right experience to maintain effectiveness whenever the rules don’t apply.

As we saw in previous parts of this series, what this implies is that within the architecture we’ll need to include:

- a rethink of ‘command and control’ as a management-metaphor [see Part 1 of this series]

- services to support each sensemaking/decision-making ‘domain’ within the frame [see Part 2 of this series]

- services to support the ‘vertical’ and ‘horizontal’ paths within the frame [see Part 3 of this series]

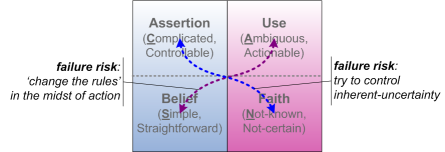

- governance (and perhaps also services) to dissuade following ‘diagonal’ paths within the frame

So this is Part 4 of the series, the final part: exploring the architecture of governance – and architecture-governance too – that we need for all of this to work well.

[Those two key reminders again: this is ‘work-in-progress’; and all of this is recursive – so you’ll likely need to do some work of your own here too.]

Within the architecture, we will need governance and governance-services to cover all of the issues that we’ve seen in the previous parts to this series. This would include guidance and governance on the services that support each of the SCAN sensemaking / decision-making domains and the links between them, and on any changes to those services and facilities.

Governance itself is usually outside of the remit of architecture – or probably should be, even if architecture sometimes ends up being landed with the role by default. A better role for architecture here is to identify what it is that needs governance, what kind of governance it will need, and where in the overall structures and story are the gaps that need to be filled – in other words, the governance to help create and maintain what does not yet exist.

As we’ve seen, the overall themes here that governance would need to cover would include:

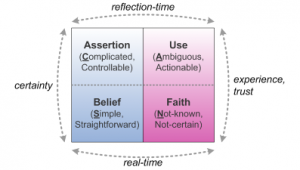

- techniques for sensemaking and decision-making at the point of action, across the whole range of modality of possibility and necessity (described in SCAN as a spectrum between Simple <-> Not-known, and between Belief <-> Faith)

- techniques for sensemaking and decision-making at varying distance-from-action – loosely categorised as operational, tactical or strategic – and again across the whole spectrum of modality (described in SCAN as between Complicated <-> Ambiguous, and between Assertion <-> Use)

- techniques to bridge across the sensemaking and decision-making at real-time (Simple <-> Not-known, Belief <-> Faith) and at distance-from-action (Complicated <-> Ambiguous, Assertion <-> Use) for operational, tactical and strategic distance-from-action

- improvement-processes that link between techniques at distance-from-action and at real-time action, constrained at distinct levels of modality, from true/false (Complicated <-> Simple, Assertion <-> Belief – eg. Six Sigma, Taylorist ‘scientific management’) to fully-modal (Ambiguous <-> Not-known, Use <-> Faith – eg. OODA, improv)

- processes and techniques to develop skills and competence across the full range of modalities applicable within the context

- validation of, training in and usage of all of such techniques

And as we’ve also seen, the governance would need to maintain a balance across all of these themes:

- theory and experience

- ‘objective’ and ‘subjective’

- ‘science’ and technology

- ‘control’ and trust

- true/false and fully-modal

- organisation and enterprise

- structure and story

- sameness and difference

- ‘best-practice’ and (understanding of) ‘worst-practice’

- ‘sense’ and ‘nonsense’

- certainty and uncertainty

- caution and agility

- rules (‘the letter of the law’) and principles (‘the spirit of the law’)

Ideally the governance should also cover any applicable management-structures, with a strong emphasis on ‘management as a service’ rather than as a dysfunction-prone pseudo-hierarchy. At present, though, that’s still likely to be too ‘political’ and problematic for architecture alone to face… – for now, probably best to class it as an architectural-waiver, to be addressed if and when the opportunity should arise.

Decision-physics

There’s a further point that I don’t think has come through clearly enough in the previous parts in this series. This is what we might term ‘decision-physics’, by analogy with mainstream physics.

Mainstream physics has three distinct layers: the very-small, the mid-range, and the very-large.

Most of what we deal with in terms of ‘the laws of physics’ is in the mid-range: Newtonian physics and the like. Everything seems to follow identifiable rules or ‘laws’; classical physics focusses on the direct impacts of those apparent laws, whereas in some cases there are contextual ‘emergent properties’ that arise from interactions between entities – though note that it’s still the same physical-laws beneath those interactions.

Our mainstream ‘decision-physics’ – what I’ve described as ‘considered’ sensemaking and decision-making – likewise seems to make sense across a very broad mid-range, from strategy to tactics to operations. And we see much the same distinction between all-predictable ‘hard-systems’ (Complicated / Assertion, in SCAN) versus complex, iterative, emergent ‘soft-systems’ (Ambiguous / Use) – though note again that it’s still ultimately the same ‘laws’ on either side of that spectrum.

Yet some of those key ‘laws’ break down when we move to the far extremes. In cosmological-physics, for example, the speed of light seems to be an absolute constant across almost all timescales – yet some speculative theories suggest that it may not have held for the first infinitesimal instants of the Big Bang, or for the furthest reaches of time. Somewhere in our present physics, there’s presumably some kind of circular self-referential assumption – but we don’t yet have the means to work out what it is.

Much the same applies in our ‘decision-physics’. Most of the time – such as in most enterprise-architecture work – the usual assumptions and decision-methods work well enough. Yet once we move to the scale of the very-large – with longer timescales, for example, or what I’ve termed ‘really-big picture enterprise-architecture’ – some of those assumptions begin to be more evident, and become much more problematic. When we do have to work at those scales – and some enterprise-architects do so already – then we need to be aware that a somewhat-different decision-physics may apply: for example, conventional notions of ‘possession’ and the like may no longer make sense.

The certainties of mainstream physics also tend to break down at the scale of the very-small, as we move into quantum-physics and the like. It’s actually the same underlying physics, but all manner of assumptions that we could get away with at the everyday Newtonian scale become visible as assumptions. This applies particularly around certainties versus probabilities, which at the quantum scale gives us seemingly-impossible phenomena such as ‘particles’ that can be in more than one place at the same time. (This also gives us occasional oddities in larger-scale physics, such as in signal-theory, where to guarantee perfect signal-transmission we would need a conductor of infinite size.)

In the same way, many of the assumptions of mainstream ‘considered’ sensemaking and decision-making start to break down once we get at or very close to the moment of action. The key ‘takeaway’ that I hope you get from this multi-part exploration with SCAN is that what happens at the point of action is real: everything else is an abstraction.

And it’s an abstraction that may not have much connection with reality at all. All of so-called ‘rationality’ is an abstraction: hence, for example, why ‘rational-actor theory’ and much of current mainstream economics has been such a disastrous bad-joke, and why ‘control’ is such a misery-inducing myth in most current forms of business-management.

What we actually have at the point of action are literally-emotive decisions made on belief and faith. Just as quantum-physics underpins the larger-scale Newtonian-physics, all of our abstractions are actually underpinned by real-world emotion – literally, ‘that which moves’.

Thinking is extremely important, of course, because it clarifies intention. But neither thinking nor intention actually does anything on its own: for something to happen, we need to link intention to emotion. Which in most cases also means that we need to link the type of intention to the matching type of emotion-that-drives-action – and likewise match the emotion back to the required intention, to get the maximum effectiveness from expenditure of energy.

[Not every emotion is effective, of course: aggression, panic or ‘awfulising’ – otherwise known respectively as ‘fight, flight or freeze’ – can use up a lot of energy without achieving anything useful at all… Likewise the same does also apply to machines and IT-systems, but kind of at one step removed: plenty of motion without emotion as such, it’s true, but it’s human emotion and human choice that links all of that activity back to intent.]

So at the point of action – the quantum-level of our ‘decision-physics’ – what we actually have is a range of emotion applied across that scale of modality. And it’s here that the Inverse-Einstein Test (does doing the same thing lead to the same results, or not?) becomes crucially important, because it provides the key distinction between what I’ve termed ‘belief’ and ‘faith’:

— belief is grounded in certainty, and in the centrality of self

Belief expects the world to work in a specific way: doing the same thing will – or should – lead to the same results. We proceed with the conviction that this is true. An abstract idea – a ‘law of physics’, perhaps, or a more mundane work-instruction or checklist-item – becomes actionable in real-world practice when we attach ourselves to it, as a personal commitment to its ‘truth’.

[Perhaps the starting-point for all belief is faith – as we’ll see in a moment – but belief itself provides a stable anchor from which to act.]

For the most part, this is how most things are actioned in business and elsewhere: we follow the rules, to get the same results. In many if not most everyday contexts, this is what we want in business and the like: the right beliefs deliver the right results. Yet there are a couple of important catches that we need to note here, both of which have their roots in that personal commitment, and both of which are common causes of ineffectiveness and overall failure.

The key to both of these is the Latin word ‘credo’ – literally, ‘I believe’. Note the ‘I’ here: I, me – the commitment of self to the belief. The commitment is what creates the drive to action, power as ‘the ability to do work’. Yet if we shift too far over to a self-centred view, ‘I’ as the centre of the world, we risk falling into the social misperception that power is the ability to avoid work: ‘the rules’ are deemed to apply to others, and to drive others’ actions – with those others being viewed as extensions of self that ‘should’ be under our control yet without requiring any actual action or responsibility on our part. Hence those endless ‘shoulds’ – applied to the world in general, to machines and systems, and even more to other people. (‘They should’; ‘everyone ought’; ‘it must’; so many phrases like that – though what’s noticeable is the relative rarity of ‘I should’…) In effect, the emotion shifts away from useful action, and toward trying to ‘control’ others instead – a well-proven recipe for wasting all one’s energy in ineffectual anger… There are some real governance-issues here, and some useful tools for this, such as the SEMPER diagnostic I developed some years back.

The other side of this is a classic give-away for dubious discipline in the sciences and elsewhere: getting overly emotional about the ‘truth’ of ideas, theories and beliefs. Belief is emotional, a driver for action; yet in itself, thought is neither emotional nor actionable. So when we see someone getting emotional about ideas, aggressively asserting that their ideas are ‘the truth’ – or, more especially, that someone else’s ideas are ‘wrong’ – it’s not actually about the ideas at all: it’s about that person’s ego, a demand that others’ action should place that person at the centre of their world. This is the basis of ideology, where the ‘truth’ of the belief-structure is deemed to be more ‘true’ than the messy complexities of the real-world – and where those who hold that ‘truth’ deem themselves to be ‘better’ than any others, solely because they hold to that ‘truth’. (There’s a nice Freudian pun here: ideology as ‘id-eology’…) Hence, again, why ‘office-politics’ is actually a hugely-important governance-issue in enterprise-architectures.

The key effect of both of these is a disconnect from the real-world: a demand that the world ‘should’ conform to our expectations, and an assertion that the world itself is ‘wrong’ if it fails to conform to those expectations – all often coupled with a daft dependence on circular-proofs and ‘other-blame’. So whilst emotion and ego are essential to get things going, we do need to keep them in their place… hence, again, the need for appropriate governance right down to this level, all the way across the whole enterprise.

— faith is grounded in uncertainty, and in relation to ‘that which is greater than self’

Faith is what happens when we’re in the Not-known – where doing the same thing leads to different results, or where getting the same result requires that we do different things. Faith is the emotive mechanism that we use when we don’t know what to do, yet don’t allow ourselves to get caught up in the classic Belief-driven panic-responses of ‘fight, flight or freeze’ – in other words, to use Susan Jeffers’ phrase, we ‘feel the fear and do it anyway’.
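As a rough illustration of the ‘same action, same result?’ question behind the Inverse-Einstein Test, here is a hypothetical sketch that sorts a log of observed (action, result) pairs. It checks only the first half of the test as described above, whether repeating an action has ever led to a different result; the second half, where getting the same result requires different actions in different circumstances, would need context data that this sketch does not model. The function name `inverse_einstein_test` and the ‘Undetermined’ outcome are assumptions made for this example, not part of SCAN.

```python
from collections import Counter, defaultdict
from typing import Hashable, Iterable, Tuple


def inverse_einstein_test(observations: Iterable[Tuple[Hashable, Hashable]]) -> str:
    """Suggest 'Belief' or 'Faith' from a log of (action, result) pairs.

    Hypothetical sketch: only checks whether repeating an action has ever
    produced a different result.
    """
    results_by_action = defaultdict(set)  # action -> distinct results seen
    times_tried = Counter()               # action -> number of attempts
    for action, result in observations:
        results_by_action[action].add(result)
        times_tried[action] += 1

    if any(len(results) > 1 for results in results_by_action.values()):
        return "Faith"         # same action, different results: Not-known
    if not any(count > 1 for count in times_tried.values()):
        return "Undetermined"  # nothing repeated yet, so nothing to compare
    return "Belief"            # every repeated action gave the same result


print(inverse_einstein_test([("run checklist", "pass"), ("run checklist", "pass")]))
print(inverse_einstein_test([("pitch idea", "accepted"), ("pitch idea", "rejected")]))
```

Note the asymmetry: a single differing result is enough to move the context over to Faith, whereas no number of matching results can guarantee that Belief will keep holding.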

Whenever we come across any context that has some component of Not-known or None-of-the-above, this is what we have to do. Somehow. Some people find this easy; yet for many people, so important is the sense of safety that comes with certainty, that this kind of ‘letting-go’ can be very hard to do… and organisations and organisation-structures that assert the absolute primacy of ‘The Rules’, and deny the inevitability of the Not-known, will make that letting-go that much harder. Hence there are some significant design and governance-issues here for enterprise-architecture.

What doesn’t work well in this space is ‘flailing’ – doing something just for the sake of doing something. Often what’s needed first is the exact inverse of the old adage: “Don’t just do something, stand there!” We keep the panic at bay – and avoid a fallback to literally-incompetent Belief – by coming to a calm centredness within the space, and (usually metaphorically, though sometimes almost literally) allowing the space itself to speak to us, to show us what to do. And at that point, we take action – all in real-time.

At the first point of contact – and especially so at the further extremes of modality – this is literally a context of chaos: in principle at least, anything is possible there. That’s the advantage of the Not-known; it is, obviously, also its disadvantage, since any number of things that we don’t want to happen also become equally possible at that point. Hence the need for appropriate tactics that work within the chaos, and provide guidance towards the results that we need.

There are any number of well-known tactics that don’t work well here: for example, ‘take control!’ might be the preference for any Taylorist manager, but in practice all it does is pull us back over to Simple Belief – which is not where the solution to a Not-simple problem is likely to reside… And there are a fair few would-be ‘The Answer’ options that contain fundamental flaws in this regard – a point we’ll come back to later.

Although there’s an element of Schrödinger’s Cat in this, what does seem to work is ‘seeding the chaos’: bringing explicit aims and intentions into the space, yet also allowing the space to be what it is. (I’m at some risk of making this sound a bit like “hey, ’60s counterculture, man”, but actually there’s a lot of practical sense in Timothy Leary’s notion of ‘set and setting’, even within everyday business-contexts.) Hence, in enterprise-architectures, the real importance of ‘setting the scene’ with vision, values and more-actionable principles – all of which provide an anchor of direction and intent that is ‘greater than self’. ‘Success-stories’ in business – especially those about the grass-roots operational levels – will often revolve around use of such ‘seeding’ to guide context-appropriate action in unexpected circumstances.

Dysfunctional diagonals

One of the key points that came up in the previous post was the importance of having explicit processes and methods to link intent and action, and also to link across the modalities. In SCAN, we would describe these as ‘vertical’ and ‘horizontal’ links.

What we don’t want, though, are ‘diagonal’ transitions that link one type of modality at the ‘considered’ level with a different modality at the point of action – such as Assertion misapplied to a Not-known context, or introducing the Ambiguous at the very moment of Belief-based action.
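The distinction between vertical, horizontal and diagonal links can be made concrete with a small sketch. This is a hypothetical illustration, not part of SCAN itself: it assumes each domain can be placed on two axes (distance-from-action and modality), and it collapses each axis to just two values, whereas the text treats modality as a spectrum. The names `Distance`, `Modality`, `Domain` and `classify_link` are invented for this example.

```python
from enum import Enum
from typing import NamedTuple


class Distance(Enum):
    CONSIDERED = "considered"  # away from the moment of action
    REAL_TIME = "real-time"    # at the point of action


class Modality(Enum):
    TRUE_FALSE = "true/false"    # certainty: Complicated, Simple
    FULLY_MODAL = "fully-modal"  # uncertainty: Ambiguous, Not-known


class Domain(NamedTuple):
    name: str
    distance: Distance
    modality: Modality


# The four SCAN domains, as placed in this simplified two-by-two sketch.
SIMPLE = Domain("Simple", Distance.REAL_TIME, Modality.TRUE_FALSE)
COMPLICATED = Domain("Complicated", Distance.CONSIDERED, Modality.TRUE_FALSE)
AMBIGUOUS = Domain("Ambiguous", Distance.CONSIDERED, Modality.FULLY_MODAL)
NOT_KNOWN = Domain("Not-known", Distance.REAL_TIME, Modality.FULLY_MODAL)


def classify_link(a: Domain, b: Domain) -> str:
    """Classify a link between two domains by which axes it crosses."""
    crosses_distance = a.distance != b.distance
    crosses_modality = a.modality != b.modality
    if crosses_distance and crosses_modality:
        return "diagonal"    # changes modality on the way to action
    if crosses_distance:
        return "vertical"    # links intent and action within one modality
    if crosses_modality:
        return "horizontal"  # links across modalities at one distance
    return "self-loop"       # e.g. Ambiguous <-> Ambiguous


for a, b in [(COMPLICATED, SIMPLE), (SIMPLE, NOT_KNOWN),
             (COMPLICATED, NOT_KNOWN), (AMBIGUOUS, SIMPLE)]:
    print(f"{a.name} <-> {b.name}: {classify_link(a, b)}")
```

Run as-is, the loop reports the four pairs as vertical, horizontal, diagonal and diagonal respectively, which matches how the examples in this post are classified: Complicated <-> Simple as a workable true/false link, Complicated <-> Not-known for business-process reengineering, and Ambiguous <-> Simple for ‘take control’.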

Probably the classic example of this is the myth of ‘control’, typified by the many, many misuses of Taylorism and its descendants and derivatives. For example, Six Sigma makes perfect sense, and is very valuable, when applied to contexts with very low variation; but it doesn’t make sense, and is often dangerously misleading, when attempts are made to apply it to anything other than a context consisting solely of literally millions of nominally-identical events. Business-process reengineering was another infamous example: one of its leading proponents, Michael Hammer, is quoted on the Wikipedia-page as later admitting that:

“I wasn’t smart enough about [the human impact]. I was reflecting my engineering background and was insufficiently appreciative of the human dimension. I’ve learned that’s critical.”

In SCAN terms, each of those is a ‘diagonal’ link of Complicated <-> Not-known – which doesn’t work. We also get the same effect, though, if we take something that does work well as a link of Complicated <-> Simple, and implicitly apply it to a modality beyond its specific true/false constraints. One example that comes to mind here is Roger Sessions’ work on Simple Iterative Partitions as a method to reduce or eliminate ‘complexity’. It does work extremely well within the relatively-narrow scope of IT-systems themselves – where, in SCAN terms, ‘complexity’ is an undesirable excess of Complicated, leading to system-applications that are not Simple enough to work effectively in real-time action. Yet it’s misleading if we use the same concepts to talk about the application of IT-systems in real-world contexts which, because they’re real-world contexts, frequently must deal with a different type of ‘complexity’ that can’t and sometimes shouldn’t be ‘eliminated’. In effect, that’d be Complicated <-> Simple applying an Assertion that Ambiguous <-> Not-known doesn’t actually exist – taking us straight back to the Taylorist myth of ‘control’, which is not a wise move.

[A quick somewhat-personal note here: Roger has always been a great sparring-partner on this question – perhaps best described on my part by the old phrase “I disagree with every word you say, but I will defend to the death your right to say it”? 🙂 ]

Going the other direction, we could use the example of Andrew McAfee’s ‘Enterprise 2.0’, “the use of emergent social software platforms within companies, or between companies and their partners or customers”. Nothing wrong with that definition itself, but the point here is that the application is about collaboration across the organisation – Ambiguous <-> Not-known, or Use <-> Faith – whereas McAfee placed inordinate emphasis on the technologies instead, or Ambiguous <-> Simple. Ignoring the real complexities of the human-factors was exactly the same mistake that caused so many business-process reengineering projects to fail – a point which was quickly picked up by many practitioners with real-world experience. Surprisingly, McAfee took a long while to acknowledge the problem, eventually coming out with a blog-post ‘It’s not not about the technology’ – which was true, but still kind of missed the point.

Another example has been a bane of my professional life for the past few years: David Snowden’s Cynefin framework. In SCAN terms, it purports to cover the Ambiguous <-> Not-known link – somewhat like McAfee’s ‘Enterprise 2.0’, but with more emphasis on complexity-theory and social-systems in general rather than in one specific application. The problem here – and despite many, many explanations of this, Snowden still emphatically refuses to acknowledge the problem – is that Cynefin explicitly locks out the linkage to the Not-known / Faith space, for which the nearest equivalent in Cynefin is termed the ‘Chaotic domain’.

The Cynefin framework states that the standard tactic in the Chaotic is ‘Act > Sense > Respond’: which in principle might seem fair enough, except that in many cases – as described above – ‘doing for the sake of doing something’ is exactly what we should not do as a response to ‘chaos’. Even when action is appropriate, the catch is in what happens next, because the documented ‘Cynefin dynamics’ in effect insist that our only method of dealing with the Chaotic domain is to not be there: our only apparent options are to ‘take control’ and collapse back to the Simple domain, or return to ‘considered’ sensemaking in the Complex domain. In SCAN terms, the former is a diagonal-link of Ambiguous <-> Simple, which is clearly not a good idea; the latter is a self-referential loop of Ambiguous <-> Ambiguous, which looks impressive and gives us more and more information about complexity and emergence and the like, yet clearly is not going to go anywhere that’s any actual use. In that sense, Cynefin actively prevents us from applying its insights in real-world practice: it’s valuable for ‘considered’ sensemaking, but literally useless for real-time decision-making. So although Cynefin does claim to cover the whole ‘complexity’ space, the only way to use it in practice is to not use it – which is not exactly helpful…

[There’s more on this in other posts here, if you’re interested: for example, see ‘Using Cynefin in enterprise-architecture’ and ‘Comparing SCAN and Cynefin’.]

For a final example, we could turn to something that’s perhaps more familiar to many enterprise-architects: Agile software-development. In principle, it’s a good response to the reality that software-development takes place in a world that has a great deal of Not-known about it. And it’s also a response to the ‘traditional’ Taylorist-style Waterfall model of software-development, in which everything would be rigidly defined ‘up front’ without acknowledgement of the reality or probability of changes in the context. In effect, it aims to take the Assertion <-> Belief link on which all conventional IT-systems depend, but apply it via a disciplined Ambiguous <-> Not-known link such that the overall development-process can adapt in near-real-time to changing needs.

That’s the principle: and with experienced, adaptable developers who know what they’re doing and how to work with inherent-uncertainty – in other words, Master skill-level, or Journeyman with a bit of mentoring from Master – it does work well. Unfortunately, it doesn’t work well with developers who don’t have that kind of skill or experience – the Apprentice or, worse, the Trainee. So what we get there instead, all too often, is an undisciplined mess: not enough skill or discipline to work with Faith-style uncertainties and the Faith <-> Belief axis, coupled with a rejection of the formal disciplines demanded by the Complicated / Assertion domain. In other words, not only a problematic ‘diagonal’ of Complicated <-> Not-known, but the worst of both as well. Oops…

And yet somehow we do still need that agility: hence this is one aspect of enterprise-architecture governance where the need for balance is perhaps better-known and better-understood. You’ll find various posts here on what I’ve termed the ‘backbone’ – for example, see ‘Agility needs a backbone’ and ‘Architecting the enterprise backbone’; there’s also a good summary by Vikas Hazrati on the InfoQ website, ‘Agile and Architecture Conflict’. But perhaps I ought to leave the last word here to a software-architect I greatly respect, Simon Brown, who’s frankly brilliant at describing the practice of how to ‘seed the chaos’ to get the best results in agile-development: see his presentations and videos to explore the trade-offs that we need so as to derive an agile-architecture that works, and how to resolve the architecture-challenge of “How much architecture is ‘just enough’?”

—

Okay, that’s it for now. Plenty more to say on all of this, of course, but I’d guess it’s been more than enough already? 🙂

And many thanks for reading this series: hope it’s been useful? Over to you for any comments and questions, anyway.

Hello Tom,

You always point out central questions about EA and you propose very interesting analysis and points of view. It is very helpful to build and spread best practices.

I would like to modestly offer two comments on this excellent paper.

First, the decision process is mainly ‘experience to faith’ due to limited rationality (H. Simon); often the other side is just appearance, with a lot of uncertainty remaining. I agree that improving this process is an EA core goal.

Second, I think EA should go closest to Business minding for decision taking, I mean strategic, which is less rational than a lot of architects may think. I feel that the main driver here is risk.

Best Regards

Jerome

PS. Happy new year to you and your dears. Good health to this blog, your insights and all contributors

Hi Jerome – thanks for the comments and kind words! 🙂

On “due to limited rationality”, I take the point, yes, yet I do need to stress this point again: at the moment of action, no-one has time to think. It’s not about ‘limited rationality’ in the sense that someone isn’t thinking properly; it’s that there is no space available for ‘rationality’ in that precise context. Before it, yes; after it, yes; but during the action itself, no. That’s the distinction to draw out here.

I need to do another post on ‘belief’ and ‘faith’: I’m probably using both terms in too much of a ‘special-definition’ way, but I haven’t as yet been able to find suitable alternatives. The point there is that ‘belief’ (and I’ll use quotes for the moment to indicate that it’s this context-specific usage of the term) is about a self-oriented or ‘inside-out’ set of definitions or assumptions about ‘how the world really works’, that are brought to play, in a true/false manner, at the moment of action: the world either fits these assumptions, or it doesn’t. (There’s more I need to say about that, as well – but again, I’ll do that in another post.) By contrast, ‘faith’ is a kind of ‘outside-in’ or ‘letting-go’ into the uncertainty; it’s more about trust, whereas ‘belief’ is much more about purported ‘control’.

(Again, a reminder that this is still very much ‘work-in-progress’: in places I’m really struggling to find ways to describe this with the necessary precision… – hence thanks for your tolerance on this!)

On “EA should go closest to Business minding for decision taking”, I probably haven’t fully understood you on this – the challenges of translation… But on “less rational”, yes, I strongly agree. As I remember, Edward de Bono argues, in his ‘Six Hats’ metaphor, that ultimately all decisions are ‘Red Hat’: in other words emotional, not ‘rational’. One of the outcomes of this work has been seeing just how much of supposed ‘rationality’ is actually an abstract overlay on some definitely non-rational foundations.

On “I feel the main driver here is risk”, one of the more interesting challenges has been to get business-folk (and others) to recognise that risk and opportunity are a matched pair: we (almost?) never get one without the other. So risk is always accompanied by opportunity, and opportunity is always accompanied by risk. If we focus only on one side, we may fail to see the other. Some important architectural concerns there.

Thanks again, anyway!