More on principles and decision-time

Seems that that Twitter-conversation about principles and decision-making just keeps on rollin’ on. 🙂 Stijn Viaene set the ball rolling again with the following Tweet:

- destivia: @ebuise @tetradian @richardveryard Never forget a ‘model’ is always only a preliminary version of how we see or want to see reality.

After which, yes, the whole happy ‘passel o’ rogues’ piled in, all in their different ways:

- richardveryard: @destivia @ebuise @tetradian We can only replace a model with a better model, despite what Saint Paul says (1 Corinthians 13).

- ebuise: @destivia @tetradian @richardveryard Nice! In a way, a (coherent) set of principles is a special kind of model… #insight

- richardveryard: @ebuise @destivia @tetradian I have difficulty with the idea that a set of principles is supposed to represent some aspect of reality.

- destivia: @ebuise @tetradian @richardveryard Indeed.

- ebuise: @richardveryard @destivia @tetradian A few hours ago @krismeukens tweeted: “The core of strategy work is discovering the critical factors and designing a way of “coordinating” and “focusing” actions to deal with them.”

- Aren’t principles derived, directly or indirectly, from this proces? And as such related to reality and steering into future realities?

- ebuise: @richardveryard @destivia @tetradian Can’t aspired directionality of the future be related to reality?

- krismeukens: @ebuise (cc @richardveryard @destivia @tetradian) indeed, that is my current thinking

- krismeukens: @tetradian In near-realtime would sensemaking not just be limited to deal with it as either simple/chaotic? Sense-catorize or just act?

I caught up with the conversation at this point, and given that my name had been invoked right the way through the above – even though I hadn’t been there – I thought I’d better join in:

- tetradian: @ebuise cc @richardveryard @destivia ‘aspired directionality of future’ – agreed: that’s a primary role of principles

And, of course, the ongoing problem with Cynefin had been invoked as well:

- tetradian: @krismeukens Cynefin’s Act>Sense>Respond is inadequate/incomplete – see later part of http://bit.ly/AxCDSB and posts linked from there

I ought to expand that Tweet here, because the above ‘explanation’ suffers from the dread 140-character limit on Twitter. As described in the SCAN posts – perhaps particularly in ‘Comparing SCAN and Cynefin’ and ‘Belief and faith at the point of action’ – I would answer ‘Yes’ to Kris Meukens’ question “In near-realtime would sensemaking not just be limited to deal with it as either simple/chaotic?” (‘Chaotic’ being the nearest Cynefin equivalent to what I’ve termed the ‘Not-known/Faith’ domain for sensemaking and decision-making respectively). The point is that in near-real-time, there isn’t time for anything else: in particular, no time to think, hence, no time for Complicated or Complex (the equivalent of the latter described in SCAN as the ‘Ambiguous/Use’ domain).

The catch is that whilst Cynefin’s definition of tactics for the Simple-domain – ‘Sense-Categorise-Respond’ – does match up quite well with what happens on the Simple/Belief side, the defined tactics for the Chaotic side – ‘Act-Sense-Respond’ – for the most part do not line up with what actually happens. Or rather, they sort-of-describe one particular type of tactic that can be used in that domain, but in many if not most cases those tactics are exactly what not to do.

More on that in a moment; for now, back to the Twitter-stream:

- tetradian: @krismeukens one-liner: Cynefin is to Chaotic as SixSigma is to Complex: its basic concepts dont match to the needs of the context

- transarchitect: @tetradian @krismeukens True Tom.

- krismeukens: @tetradian @richardveryard I have the impression that often the ‘dynamics’ aspect of cynefin is forgotten http://bit.ly/sXeDBp [PDF]

- tetradian: @krismeukens it’s the ‘dynamics’ of Cynefin that are the problem… for Chaotic, they all consist of ‘running away’… // Cynefin’s so-called ‘Chaotic’ is domain of uncertainty in real-time action: ‘running away’ is not sustainable/viable tactic…

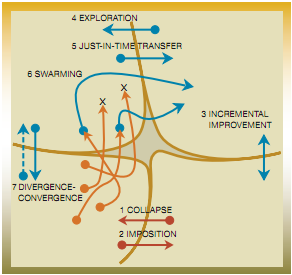

This obviously needs some further explanation, so we’ll go to the original source as pointed in Kris Meukens’ link above: Kurtz & Snowden, ‘The new dynamics of strategy: Sense-making in a complex and complicated world‘ (2003). The following (presumably (c) Kurtz & Snowden, used here under ‘fair use’) is its Figure 4, ‘Cynefin Dynamics’:

The Simple and Chaotic domains are on the lower-right and lower-left respectively. For now, we’ll ignore the paths that only go between Complex, Complicated and/or Simple (3, 4, 5 and 6), and focus only on those that apply at real-time, Simple<->Chaotic (1, 2) and Chaotic<->Complex (7 and the various orange-line paths).

[Path 3 links to Simple, but tends to occur at significant distance from real-time: it’s typified by PDCA-style learning-loops and the like.]

Paths 1 ‘Collapse’ and 2 ‘Imposition’ are generally well-known and (fairly)-well-understood. When the expectations of Belief (Simple) don’t match up to reality, there’s often some kind of ‘Collapse’. (That’s actually a failure-mode: it doesn’t describe how we can intentionally move into the ‘Chaotic’ when we acknowledge that the current belief-set doesn’t work.) Once in the Chaotic, and if panic is allowed to take hold, very often there’s an attempt at ‘Imposition’ of order – an assertion of ‘truth’ that pulls the context back into the Simple. (This too is often a failure-mode, by the way – especially if the imposed ‘truth’ likewise doesn’t match up with reality.) That Imposition typically occurs because someone decides to ‘take action’, the Act-Sense-Respond sequence: but what it causes is usually a failure-mode, a collapse back into the over-Simple.

The unnumbered orange-line paths illustrate well what I mean by ‘running away to the Complex domain’. Having arrived in the Chaotic domain, the Act-Sense-Respond tactic is used to elicit and grab at a momentary idea or sense-item and ‘escape’ to the Complex domain, to assess or analyse what it is or how it could be used. Rather than ‘holding the space’, the Act part of the tactic itself causes the retreat to the Complex. And in doing so, it moves out of real-time: it doesn’t work with the Chaotic as it is. We might also note that whilst some of the orange pathways dead-end in the Complex domain – for example, ideas that, once assessed, turn out to be unusable – the paths that do ‘succeed’ all end up in the Complicated-domain. In effect, what the Cynefin-dynamics are suggesting here is that the only valid place for new ideas is ultimately in the domain of Complicated ‘control’ – in other words, right back in the same old trap of Taylorism and ‘scientific management’ again.

[This is one of several aspects of Cynefin that make it all too easy to misuse to delude worried business-folk into believing that the deep complexity and chaos of the real-world can indeed all be subject to ‘control’. Still seems to me that there are some real ethical concerns about the structure of Cynefin that really do need to be addressed… but that’s just my opinion, of course…]

Path 7 ‘Divergence-Convergence’ indicates a slightly more refined version of the orange-lines paths: iterative rather than ‘one-shot’, but still centred on the Complex-domain, away from real-time action and real-time decision-making. This is what I mean by ‘dipping the toes into the chaos’: it’s a useful and valid way to garner new ideas, yet it still doesn’t work with the Chaotic as it is – like a mouse snatching the cheese, it’s grabbing some tasty morsel and then running away as fast as it can.

What there isn’t in any of the Cynefin-dynamics or other Cynefin descriptions is anything that does work with the actual nature of the Chaotic mode: for example, all the classic tactics for keeping the panic at bay, such as meditation and so on – and also ‘pre-seeding’ the space with principles and the like (which is where we started this long Twitter-conversation 🙂 ). In fact many of these tactics are the exact inverse of the Cynefin pattern: rather than the “don’t just stand there, do something!” of Act-Sense-Respond, what we often most need is “don’t just do something, stand there”! That’s what I mean when I say that the Cynefin required-tactics are too limited here: Act-Sense-Respond does apply in certain cases, but it only matches up with a small subset of what we need to do (or not-do), and often it is just plain wrong.

Note too that, in terms of the Cynefin-dynamics above, the only pathways that remain in the near-real-time space are the Collapse/Imposition pair – which happen to represent a classic cyclic failure-mode.

In short, the Cynefin-dynamics give us a very incomplete and, at best, rather unhelpful picture of decision-dynamics at real-time, and tell us almost nothing about what actually does work in the near-real-time space.

So I hope you can see from this that there are some serious problems here that are just not being addressed in Cynefin: this is serious critique, and certainly not deserving the kind of petty personal putdown-attacks that have been the usual response from that direction. Sigh…

Anyway, back to the Twitter-stream:

- krismeukens: @tetradian it is not exactly running away, it is approaching it for the moment being in a “simpler” way through a reduction of reality

- tetradian: @krismeukens ‘reduction’ – sort of. I’ve gone into this in a lot of detail in my SCAN posts http://bit.ly/wSOAm0 (still a work-in-progress)

- krismeukens: @tetradian categorization versus sensemaking?

- tetradian: @krismeukens categorisation is sensemaking – (mostly Simple-domain sensemaking, in essence, but still a form of sensemaking)

- krismeukens: @tetradian Well yes // But there are 2 things here: 1 categorize in which domain the problem is, the meta-level so to say. 2 how the make sense of it.

- tetradian: @krismeukens ‘2 things here’ – yes: recursion. without which Cynefin doesn’t make sense. and which it apparently does not allow. go figure? // “does not allow” – at least, I’ve been savagely attacked each time I’ve tried to introduce the topic. Your Mileage May Vary etc

- tetradian: @transarchitect addendum to one-liner: Cynefin fits well with Complex, as SixSigma fits well with Simple: problems arise when out of scope

- transarchitect: @tetradian @krismeukens let’s not get too academic about this. C. is just another usable lens. #complexity

- tetradian: @transarchitect yeah, true. it’s just I’ve been attacked so often about trying to make it work that it’s something of a red-rag now… 🙁

- transarchitect: @tetradian above understands what’s below; not the other way around. I’ve been defending myself #complexity 2 decades: useless 🙂

- tetradian: @transarchitect “defending myself” – my commiserations, good sir… [don’t quite agree re ‘above/below’ – more like mis-intersecting sets?]

- krismeukens: @transarchitect @tetradian yes, lens that is excellent metaphor

- tetradian: @krismeukens @transarchitect “lens” – yes – which brings @richardveryard’s concept/practice of ‘lenscraft’ back into this picture? 🙂 // problem with Cynefin is that it claims to have full lens-set for all contexts, but does not cover ‘Chaotic’

- krismeukens: @tetradian @transarchitect this must be an attractive discussion as it gains new followers in search of a date for this weekend haha

- tetradian: @krismeukens are there other followers to this? – i thought we were just having a Standard Academic Argument between ourselves… 🙂 🙂

I had to duck out at that point, to do some promised tech-support for a colleague: we parted, with quick thanks shared all round. But a few other Tweets popped up in the stream somewhat later:

- hjarche: @tetradian just dipping into this discussion but “Act = running away” not an inference I ever made w/ Cynefin // I’ve no time to get too deep on this today but I will dig through all the refs & links later @transarchitect @snowded

- ImaginaryTime: @hjarche @tetradian @transarchitect @snowded Neither did I. Important to note one can also enter Chaotic domain intentionally (innovation).

Innovation is described above: quick summary is that it’s sort-of implied in the Cynefin-dynamics path 7 ‘Divergence-Convergence’, but note that it only links to the Complex: there’s no path described for innovation at real-time, the Simple <-> Chaotic link.

On “Not an inference I ever made w Cynefin” – a valid point, though I hope from the post above that the reasoning behind that inference is now clear. And, in turn, the reasoning why I now strongly recommend not using Cynefin in its standard form in enterprise-architecture.

Anyway, enough for now: over to you, perhaps?

This is, in a way, exactly what we were driving to with the principles that I shared in a previous post. Granted they aren’t all constructed in a way that reads as a belief. In this case, perfection took a backseat to involvement and a sense of being part of something. These behaviors or beliefs are what every individual needs to consider and lean towards as they go about designing, creating or delivering value to their customers.

When something pops into the chaotic space, depending on how strong you are within your profession you can leverage the principles by which you behave and the embedded beliefs and remain in the chaotic realm… now a journeyman or apprentice on the other hand would have a hard time staying in the chaotic realm because they have little faith in themselves, therefore the principles are perhaps useless or of little value. This is where guides, training, plans come into play. It appears to me that the cynefin would insinuate that we are all apprentices, so act…apply something to it that shows you’re an apprentice and come away with something bad on the other end. Unwanted effects caused by applying complex or unwarranted rules to something.

I may be over simplifying or missing the boat, but that’s my initial reaction!!

@Adam Johnson – thanks, and yes, in practical terms that’s pretty much exactly what I’m saying. I might throw in a few quibbles about Journeyman versus Apprentice in relation to the Not-known (‘chaotic’) realm, but essentially that’s it.

Two points, though. One is that I ought to reiterate that Cynefin is a very useful framework in Complex-domain ‘considered’-sensemaking. As I’ve explored over the past few posts (and years, actually), there are problems that arise when we try to use the same framework to put things into practice, and/or for real-world decision-making; but within its own domain it’s one of the best frameworks we have – which is why it’s deservedly popular. That’s something that does need to be said here.

The other is around that last comment “I may be over-simplifying”. In my opinion, you’re not over-simplifying (not to the level of ‘over-simplistic’, anyway, which is the real concern here), because although it’s simplified, I can still see where you’re going with it, and that the overall shape of it still works. One key point here is that we must simplify in order to make something usable or actionable in real-world practice: there isn’t time for anything else. So there’s a whole load of recursion that goes on here, where we use complexity to drive simplicity, where complexity arises out of ‘emergent properties’ from nominally-simple interactions, and so on. Looks like that’s another post that I need to write Real Soon Now… 🙂

Again, thanks – this really does help.

Reading this blog and there is an awful lot of knowing going on 🙂 I like Socrates famous words. “I know I know nothing”, which seem to me a good place to start.

I don’t understand the recursion you speak of and the real time nature of decision making and how that is different from ‘considered’ decision making. My guess is that you are talking about intuition here. Is that so?

My reading of Cynefin leads me to a different understanding of the chaotic realm then the one you describe here. Firstly I see the strength in the frame work as a description or explanation of how things work. I don’t see it as a prescription on how to solve problems. Something I think you elude to if I understand you right?

Ok. So as a description the chaotic realm is where we can’t make sense of events, even in retrospect. Things just happen. This to me can be an innovative space and isn’t a place to continuously run away from. The diagram shows an arrow marked divergence. Differing views and a lack of coherence can be useful. Stirring up the pot and generating new ideas. So dipping into chaos can be a deliberate choice. Brainstorming for instance. Snowden speaks about exploiting chaos in his paper. Ideas that are born in chaos find common ground and converge into complexity where they can be effectively exploited.

As individuals I agree we spend a great deal of time in chaos, which probably isn’t a bad thing. If what you mean is we act mostly on intuition. My understanding is that there needs to be a shared sense of meaning, some sense of order for groups to effectively share the fruits of chaos. A shared intuition if you like. I think this is what is meant by coherence. Without coherence there is no organisation. Just a bunch of individuals doing their own thing. Perhaps feasible for a one man band, but not very effective for any endeavour that requires collaboration.

Paul.

[A quick note to record that Dave Snowden attempted to post a comment here, despite being told many times that he has long since forfeited any right of reply on this website.

For reasons why this is regrettably essential, please see Bob Sutton’s book ‘The No-Asshole Rule’ (Wikipedia summary).

I have no objection whatsoever to anyone discussing this with Snowden. But not here. And please leave me out of it: I’ve suffered more than enough abuse on this already.

Many thanks for your understanding on this matter.]

Hi Tom,

I’ve looked back at some of your past posts, and I think you are talking about individual decision making. Is that right? Whilst I see Cynefin as addressing group decision making. The flocking of Boyds come to mind. Groups of people attempting to act in unison.

Perhaps that explains the different perspectives? Thanks BTW for your writings. I find all such models interesting. Answers to my questions would help greatly.

Thanks,

Paul.

@Paul Beckford – thanks. There’s a lot I need to reply to here: please post another comment if I miss anything?

“Socrates famous words. ‘I know I know nothing’, which seem to me a good place to start” – that’s largely how I understand the space that Cynefin (seemingly) describes as the Chaotic-domain, and which I’ve described as the Not-known. The ‘I know I know nothing’ is important, because it indicates an intentional move into that space.

“My reading of Cynefin leads me to a different understanding of the chaotic realm then the one you describe here. Firstly I see the strength in the frame work as a description or explanation of how things work. I don’t see it as a prescription on how to solve problems. Something I think you elude to if I understand you right?”

Yes, largely. The key point here perhaps is that, on its own, sensemaking isn’t much use: to be any use, it needs to be coupled with decision-making – much as per Boyd, as you allude to in your later comment. My experience of trying to use Cynefin in a coupled sensemaking/decision-making context is not only that it doesn’t give me anything for decision-making – which is arguably fair enough if it’s solely a sensemaking framework – but also that the way that it tackles the “I know I don’t know” space is misleading because it isn’t coupled with decision-making. As shown in the dynamics-diagram, the only loop that it acknowledges is Act to trigger Sensing in the Chaotic, and Respond by moving to the Complex, where sensemaking then takes place. The practical problem is that that forces us out of real-time – in other words, it is not a usable model of how sensemaking/decision-making needs to work in real-time inherent-uncertainty.

“Ideas that are born in chaos find common ground and converge into complexity where they can be effectively exploited.”

Yes, that’s the Cynefin approach: everything ends up in the Complex. Which is fine for ‘considered’-sensemaking – and I’ve said many times that Cynefin does work well for that. What I’m talking about here is where exploitation needs to occur at real-time – which is what happens in any kind of front-line work. In that latter case, the sensemaking/decision-making loop has to occur at or very close to real-time: John Boyd territory, as you say. And my experience is that I can’t do that with Cynefin: Snowden may argue otherwise, but from my perspective as a methodologist and practitioner, there are simply too many gaps in its concept of that space.

“As individuals I agree we spend a great deal of time in chaos, which probably isn’t a bad thing. If what you mean is we act mostly on intuition.”

A lot of this depends on what you mean by ‘intuition’. One of my key guides in this is Beveridge’s classic ‘The Art of Scientific Investigation’ (full-text available from that link) – for example, see the chapters on ‘Chance’ and ‘Intuition’, and the summary at the end of the ‘Reason’ chapter that warns about “the hazards and limitations of reason”. A particular point here is Pasteur’s quote that “In the field of investigation, chance favours the prepared mind”: that’s where ‘pre-seeding’ of the mindset with principles and the like before entering the Not-known space comes into the picture. (If you remember, this whole discussion started up from questions about the use of principles in enterprise-architecture – well, that’s one example of a practical application of Pasteur’s point.)

“My understanding is that there needs to be a shared sense of meaning, some sense of order for groups to effectively share the fruits of chaos. A shared intuition if you like. I think this is what is meant by coherence. Without coherence there is no organisation. Just a bunch of individuals doing their own thing. Perhaps feasible for a one man band, but not very effective for any endeavour that requires collaboration.”

This is the key area I’m aiming to address at the moment – and no, I don’t claim much ‘knowing’ here. 🙂 To be honest, I’m struggling with this, just like anyone else does in the Not-known space – which is why I explicitly marked all of the work on SCAN and decision-making as ‘work-in-progress’, and asked for constructive comment.

(Let’s just ignore Cynefin from here on: as per earlier in your comment, it describes quite well what happens in a collective sense once we move outside of the Not-known space, but it’s frankly unhelpful and misleading when trying to grasp what goes on within that space – which is what I’m concerned with here.)

One really key point here: at the exact moment of action, there is only the individual – there is no group, no collective. So in a collective, collaborative context, what we actually have is a mix of individuals, some of whom experience themselves as being in a Simple space and hence act on Belief, others of whom experience themselves as in a Not-known space and hence act either on Faith or else fall into panic and (probably) try to collapse back to an over-simplistic and contextually-inappropriate Belief, all of them interacting with each other, all at or very close to real-time. The Belief-sets and Faith-drivers may well all be different: hence achieving the desirable aim of coherence is likely to be, uh, interesting…

The Simple option – as in Taylorism – is to treat people as robots: pseudo-machines that act only on predefined Beliefs. (The even Simpler option, as in BPR, is to try to replace all the people by machines, which by definition can only operate on Belief, aka rule-sets and ‘business-rules’.) The catch is that it just doesn’t work: it falls over in a heap as soon as it hits anything that doesn’t fit ‘the rules’. So to make it work, and make it capable of handling the Not-known, we need to include people as people, as themselves – with all that that implies.

Hence that phrase about “make it as Simple as possible, but not simpler”. Which is where we come back to your point about “I think this is what is meant by coherence. Without coherence there is no organisation. Just a bunch of individuals doing their own thing.”

Yes, it is. And yet it gets a lot more tangled than it looks at first sight – in fact it’s probably the primary source of wicked-problems and the like.

First, there’s the crucial distinction I’ve drawn throughout my enterprise-architecture work, the distinction between ‘organisation’ and ‘enterprise’:

– an organisation is a ‘legal entity’ (or equivalent), bounded by rules, roles and responsibilities

– an enterprise is a social-construct, bounded by vision, values and commitments

As an overly-simplistic summary, ‘organisation’ operates primarily on the Simple side of modality and action (Cynefin’s ‘order’), whilst ‘enterprise’ operates primarily on the Not-known (‘unorder’) side. An organisation is a means towards the aims of the enterprise; the enterprise represents the achievable and non-attainable ends – and we need to be very careful not to confuse means with ends. Coherence is likewise a means towards shared ends, but it’s linked more with the ‘enterprise’ side rather than the ‘organisation’ side – in fact we risk creating some serious problems when we confuse coherence with ‘control’, which is how the ‘order’/organisation-side would tend to interpret coherence.

And remember that at the point of action, all we ever have is “Just a bunch of individuals doing their own thing”. Getting a better understanding of how Belief-sets and Faith-drivers and the like interact in that ‘bunch of individuals doing their own thing’, to end up with something that actually does move towards shared-aims, is what I’m struggling with here.

It’s not easy. (And distractions and worse from the wrong-headedness of Cynefin and its ‘owner’ are not helping. That’s about all that needs to be said about that.) But that’s what I’m working on: I hope that makes somewhat better sense now?

In any case, once again, many thanks.

@Paul Beckford – thanks again: to continue with your other comment:

“I’ve looked back at some of your past posts, and I think you are talking about individual decision making. Is that right?”

Yes and no, sort-of… 🙂

As per my previous reply, I’d say that at the moment of action it’s always about individual decision-making – if you think about it, you’ll recognise that there actually isn’t anything else. As in one of the posts, the closest analogy is that it’s the quantum-level of ‘decision-physics’. What we see as ‘collective’ decision-making happens quite a long way above that level. So what I’m trying to work on here is how those distinct types of ‘decision-physics’ interact – very much like the interaction between the quantum-layer and the Newtonian layer. And, beyond that, the analogic equivalent of Brownian-motion, the first macroscopic level at which we can actually begin to see what’s going on.

“Whilst I see Cynefin as addressing group decision making. The flocking of Boyds come to mind. Groups of people attempting to act in unison.”

Again, ‘Yes and no, sort-of’. Given the impossibility of discussing Cynefin as such, I’ll say that my understanding of its purpose is that its starting-point and focus is collective sensemaking, with a particular emphasis on emergence (‘Complex’) rather than asserting/imposing a single interpretation as ‘the truth’ (as in a Taylorist-style Complicated or Simple).

The emphasis is sensemaking; yet clearly sensemaking on its own has little value, hence the necessary link to group decision-making. And thence, as you said in your previous comment, the desirability of coherence, and the processes / tactics / whatever that we use to support coherence at the collective level.

Again, in my understanding of it, Cynefin’s usage of what I’m describing as the Not-known space seems primarily if not exclusively about ‘sampling’ into that space for new ideas and the like, in order to drive collective-sensemaking and then collective decision-making. Beyond that brief ‘sampling’, all of its activities seem to focus on the collective level, or what I’ve described as the ‘considered’ layer, at significant remove or distance from real-time. And that’s where it stays.

Which is fine, in its own way. But the point is that the decisions made at that level are, in essence, little more than intentions – a statement of ‘intent to decide and act’, rather than an actioned decision.

What I’m trying to grasp here is the mechanisms by which those often ‘rational’ decisions mutate and/or link into the emotive decisions that are actioned at run-time. Again, the underlying ‘physics’ looks the same in theory, but actually is very different in practice – the difference between Newtonian-physics (the ‘considered’ layer) and quantum-physics (at the point of action).

The linkages right over on the far side of the ‘order’ domains are well-described and well-understood, particularly with machines and IT-systems: that’s the (valid) basis of Taylorism and of most software-engineering, for example. But the more we involve real-people, and the further we go over towards the ‘unorder’ realms, that ‘classical’ linkage steadily makes less and less sense. In part this is what Cynefin seems to aim to address, but, again, it only seems to tackle the parts that fit most closely with ‘considered’ Complex-domain sensemaking. What I’m trying to tackle here is the complete chains of linkage, in both ‘ordered’ and ‘unordered’ contexts, all the way from collective ‘coherence’ and the like, right down into individual sensemaking/decision-making loops at the point of action.

“The flocking of Boyds…” – that’s a very good way to put it. 🙂 Yet note, again, that Boyd’s OODA is very much about individual decision-making, which then also needs to link up with the collective intent. That’s where it gets tricky… 🙂

I hope that gives a better idea of what I’m trying to do here?

Thanks again, anyway.

@Paul Beckford – Hi Paul – one additional item that I’ve realised that I missed – apologies.

“I don’t understand the recursion you speak of and the real time nature of decision making and how that is different from ‘considered’ decision making.”

I’ll deal with the easy bit first: real-time versus ‘considered’. Let’s use a really simple (and, at present, topical) example: New Year’s Resolutions.

— Did you make any? If you did, that’s a ‘considered’ decision, at some distance from the point of action – an intent.

— Assuming you did make a New Year’s Resolution, did you actually keep to it, in terms of what you actually do and did – because that’s a real-time decision.

Given the above, notice how well (or not) the ‘considered’ decision-making lines up with the actual decisions made at the point of action. That’s what I’ve been working on, with the SCAN posts and the like.

(There’s also how review-processes such as PDCA and After Action Review etc link up with all of this: how review of what we intend versus what we actually did is used to challenge and re-align the linkage between what we intend and what we do next time. If there is a ‘next time’, of course: it gets even trickier if there isn’t… 🙂 )

The other point: recursion. For this context, recursion occurs when we use a framework on itself, to review or work with or refine itself. Let’s use just the sensemaking side of the SCAN frame for this: it should (I hope!) be a safe and uncontroversial example.

So, we would say that this frame has four domains:

— Simple

— Complicated

— Ambiguous

— Not-known, None-of-the-above

And the boundaries of those domains are defined by two axes:

— horizontal: modality – true/false on left, uncertain (‘possibility/necessity’) on right

— vertical: distance in time (or time-available-until-irrevocable-decision) – from point-of-action to potentially-infinite time-available

At first glance, that’s a really simple categorisation. Note the word ‘Simple’.
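For anyone who prefers to see that as code, here is a minimal sketch of the 'first glance' version, in Python. It is only an illustration: the names (`Context`, `scan_domain`) and the two cut-off values are hypothetical, and the placement of the four domains on the two axes is one reading of the description in this comment, not a formal definition of the frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    # Horizontal axis (modality): 0.0 = plain true/false, 1.0 = inherently unique.
    uncertainty: float
    # Vertical axis: time available before the decision becomes irrevocable.
    # 0.0 = the point of action; larger values = more time to consider.
    time_available: float


def scan_domain(ctx: Context, modality_cut: float = 0.5, time_cut: float = 1.0) -> str:
    """Assign a context to one of the four domains using hard cut-offs.

    Both cut-offs are arbitrary illustrative values (assumptions): the
    frame itself does not specify where the boundaries sit.
    """
    certain = ctx.uncertainty < modality_cut
    at_point_of_action = ctx.time_available < time_cut
    if certain:
        return "Simple" if at_point_of_action else "Complicated"
    return "Not-known" if at_point_of_action else "Ambiguous"


if __name__ == "__main__":
    print(scan_domain(Context(uncertainty=0.1, time_available=0.0)))
    print(scan_domain(Context(uncertainty=0.9, time_available=5.0)))
```

The hard cut-offs are exactly what makes this the Simple view of the frame: every context lands in one box, with no overlap and no doubt about where the boundaries are.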

Then we notice that it gets Complicated. The boundaries between the domains aren’t as fixed as they might at first seem: although there’s a definite ‘bump’ on the horizontal axis (what I’ve termed the ‘Inverse-Einstein test’), it’s actually a continuous spectrum of modality, from predictable to somewhat-variable to a lot of variation to everything inherently-unique with no pattern at all. And the vertical axis is always a completely continuous spectrum: there is a clear transition somewhere, between the ‘Newtonian’ (Complicated/Ambiguous) and ‘quantum’ (Simple/Not-known) levels, but we can’t define explicitly where it is.

Then it starts to get Ambiguous as well: we’ll see this especially when we use cross-maps, such as that one about skill-levels, where each skill-level represents a different mix of ‘order’ or ‘unorder’, again with no clear boundaries, and with a fair few emergent-properties arising as well.

And then we recognise also that there are aspects that are inherently-unique, scattered all the way through everything we’re looking at, with some bits that are definite Not-known or None-of-the-above. (In fact that’s the whole point of this kind of exploration, trying to make sense of those Not-known items and come to some useful actionable decisions about them.)

And, yes, once we dig deeper, we’ll find that the same kind of pattern recurs at another level, and then deeper again, and so on.

Fractal, self-similar, recursive; Simple, Complicated, Ambiguous, None-of-the-above, all of them weaving through each other, all at the same time.

That’s what I mean by recursion here: the framework used to explore itself, and exploring the exploring of itself, and – of course – of what it is itself being used to explore.

Makes sense? I hope? 🙂

First of all some corrections on my part. I meant the flocking of boids:

http://www.red3d.com/cwr/boids/

How independent agents following internal rules can lead to coherent emergent behaviour.
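A rough sketch of the idea, in Python, for anyone who hasn't seen it. This is not the code from the page linked above: it is a simplified illustration of the three commonly described boids rules (cohesion, alignment, separation), and the names (`Boid`, `step`) and every weight and radius are assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def step(flock, radius=10.0, min_dist=1.0, w_coh=0.01, w_align=0.05, w_sep=0.05):
    """Advance every boid one tick. Each boid sees only neighbours within `radius`.

    All weights and distances are illustrative values, not tuned ones.
    """
    updated = []
    for b in flock:
        near = [
            o for o in flock
            if o is not b and (o.x - b.x) ** 2 + (o.y - b.y) ** 2 <= radius ** 2
        ]
        vx, vy = b.vx, b.vy
        if near:
            n = len(near)
            # Cohesion: steer towards the centre of the visible neighbours.
            vx += w_coh * (sum(o.x for o in near) / n - b.x)
            vy += w_coh * (sum(o.y for o in near) / n - b.y)
            # Alignment: nudge velocity towards the neighbours' average velocity.
            vx += w_align * (sum(o.vx for o in near) / n - b.vx)
            vy += w_align * (sum(o.vy for o in near) / n - b.vy)
            # Separation: move away from any neighbour that is too close.
            for o in near:
                if (o.x - b.x) ** 2 + (o.y - b.y) ** 2 < min_dist ** 2:
                    vx += w_sep * (b.x - o.x)
                    vy += w_sep * (b.y - o.y)
        updated.append(Boid(b.x + vx, b.y + vy, vx, vy))
    return updated


if __name__ == "__main__":
    flock = [Boid(0.0, 0.0, 0.0, 0.0), Boid(4.0, 0.0, 0.0, 0.0)]
    for _ in range(3):
        flock = step(flock)
    print(flock)
```

No boid knows anything about the flock as a whole: each one reacts only to what is nearby, and any group pattern comes out of those local reactions.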

I understand your distinction between the moment of action of each individual agent, and the emergent patterns that can occur as a consequence of group behaviour. They to me seem like different areas of study. I agree that Cynefin doesn’t explain the relationship between the two.

What you describe here as chaotic, I tend to see as complex. Again I’m referring to group behaviour not the actions of individuals.

As for the actions of individuals: well, for boids, each boid follows a set of simple rules. I don’t think we can make such simplifications when it comes to people though 🙂 So again I agree that understanding how individuals make decisions “in the moment” is an area not addressed by Cynefin. It sounds to me like a subject that falls outside complexity science completely. Psychology perhaps?

Moving away from Cynefin, you’ve stirred my interest and I will explore your SCAN model further. Lots of unknowns out there, needing lots of different models I’m sure 🙂

Thanks for taking the time to explain. I believe I mostly understood you on first reading, but this clarification is useful and has given me food for thought.

Last thing worth adding is that my interest is complexity and emergence, not so much decision making. I see successful human systems as ones where decisions just sort of happen. Given the right initial conditions and attractors, people sort of work things out for themselves and come to consensus (coherence). It’s a sort of macro view of group behaviour.

Using this view as a core assumption, it leads to the idea that no single person is really in charge of the whole. Whilst each individual can make decisions for themselves, no single individual can really determine the outcome of the system as a whole.

I note that you are an enterprise architect. This role in itself is based on a number of assumptions. The one that springs to mind is that a key individual should have a pre-eminence in decision-making. It sort of assumes some sort of central designer. Architecture can emerge from group behaviour. In such a case Architecture is an emergent property of a collaborative effort by a group.

Understanding the decision process in such a scenario means understanding group dynamics. Different assumptions will lead to different questions, and ultimately different models. I think you are about a different question than the one addressed by Cynefin. Your question is an interesting question nonetheless.

I’ll get back to you as I dig deeper.

Thanks,

Paul.

@Paul Beckford – ah, my apologies: I thought you meant John Boyd (of OODA fame). (It’s still a nice pun, though. 🙂 )

On the boids that you actually meant, yes, that’s a very good example of emergent behaviours arising from very simple rules. For an individual boid, that’s pretty much what I mean about Belief-based decision-making: simple rules, self-oriented, no ‘Not-known’.

“I understand your distinction between the moment of action of each individual agent, and the emergent patterns that can occur as a consequence of group behaviour. They to me seem like different areas of study.”

I’d agree.

I’d emphasise that the formal science and mathematics of emergence is not what I’m dealing with here. (I’m nothing like good enough at the mathematics, for a start.) What I’m really looking at, if you like, is what happens when an individual boid finds itself in a Not-known situation, where its flocking-Beliefs move the other side of the predictability-divide; where there’s an intent, but the intent is thwarted by its own actual decisions in the moment, as well as the decisions of others; and yes, how that then interacts with the decisions of others, which may well lead to emergent group-behaviours.

“What you describe here as chaotic, I tend to see as complex. Again I’m referring to group behaviour not the actions of individuals.”

By the time it becomes visible as ‘group behaviour’, it’s effectively transitioned into the complex. By analogy, group-behaviour is Brownian motion; what I’m looking at is quantum-level transactions. The Brownian-motion is complex; the quantum-level is ‘chaotic’. (Note the quote-marks there: it’s a metaphor, not an exact match.) In principle it’s all the same; in practice it isn’t – or rather, what are nominally the same underlying deep-rules play out in somewhat (or even significantly) different ways.

“I agree that understanding how individuals make decisions “in the moment” … It sounds to me like a subject that falls outside complexity science completely. Psychology perhaps?”

The formal part would probably fall somewhere between chaos-science and psychology and quite a few other things as well. I’ll admit I’m skipping somewhat sideways from that: I’m just a lowly practitioner, going back to first-principles because I can’t find anything else that makes sense.

“I see successful human systems as ones where decisions just sort of happen.”

I’m interested in how they ‘just sort of happen’ 🙂 (and why they ‘just sort of happen’, too).

“Using this view as a core assumption, it leads to the idea that no single person is really in charge of the whole. Whilst each individual can make decisions for themselves, no single individual can really determine the outcome of the system as a whole.”

Agreed on both points. The crucial distinction here is determine, versus influence, especially in an unpredictable Not-known way. One person can literally change the world, and quite often has. That’s not emergence as such: that’s chaos-in-action. (Again, an over-simplified way to put it, but I think you’ll see what I mean there?)

“I note that you are an enterprise architect.”

That’s part of my work, yes – I work as an enterprise-architect. It’s not who I am. An important difference…

The nearest role-definition to what I ‘am’ would be meta-methodologist: my real research-area is skills-development, and the practical applications of that. Individual skill is very much about the Not-known, facing the Chaotic and so on: it’s true there are emergent-type aspects to it, but its core is in the individual experience, not the collective.

“This role in itself is based on a number of assumptions. The one that springs to mind is that a key individual should have a pre-eminence in decision-making. It sort of assumes some sort of central designer. Architecture can emerge from group behaviour. In such a case Architecture is an emergent property of a collaborative effort by a group.”

As far as architecture is concerned, I’m acutely aware that the notion of ‘some sort of central designer’ is largely a Simple fantasy. I’m also well aware of how emergence works in organisations. Yet neither of those is particularly important to me at the moment. What I’m especially concerned with is uniqueness – because that’s what underpins many/most of commercial-context market-differentiation and the like. So again, it’s not about emergence, group-behaviours, the everyday complex: architecturally, often all that will do is drive a ‘race to the bottom’, one boid amidst all the other flocking also-rans. It’s in the Not-known space that differentiation resides – and that’s why it’s so important to an enterprise-architecture.

There’s also the relationship between collective intent, individual intent and actual individual choice at the point-of-action, but we’ve already covered that in the previous comments, I think?

Thanks again, anyway.

“Thanks again, anyway.”

No, thank you. I’ll be following your journey with interest.

All the best,

Paul.