The science of enterprise-architecture

Just how much is enterprise-architecture a science? How much could it ever be a science? Or, to put it the other way round, how much does science even apply in enterprise-architecture? That’s what came up for me whilst watching Brian Cox’s very good BBC series Science Britannica.

Interesting questions.

To which the honest short-answer to each of those questions – at least, in terms of what most people would understand as ‘science’ – would have to be ‘Not much’.

Hmm… tricky, that…

It’s definitely a qualified ‘Not much’, though. There are some areas where mainstream ‘provable’ science can apply: straightforward physics of power-consumption, heat-distribution and cooling in data-centres, for example, or bandwidth-constraints and disk-capacities and suchlike – though most of that we’d more often describe as detail-layer solution-architecture than big-picture enterprise-architecture. There are quite a few areas of EA and EITA where statistical-assessments and similar could apply: Roger Sessions’ Simple Iterative Partitions come to mind there, for example, or some of the more usable parts of the Enterprise Engineering methodologies. A lot of signal-theory is statistical, too; likewise risk-management, of course.

But for the rest? Not so much.

We do see a lot of what claims to be ‘science’ in enterprise-architectures – but to be blunt, not much of it matches up to any real-world test. Most seems to be little better than badge-engineering, an attempt to use a gloss of ‘scientific’ pseudo-certainty as a marketing-tool, to persuade worried executives that they can still cling on to ‘control’ where none can actually be had. (The marketing-hype of so many big-system IT-vendors comes immediately to mind here…) Let’s be blunt: most of that so-called ‘science’ is little more than a mangled mess of bulimic bluster, blundering BS, and smug self-satisfied self-delusion. And we need to do a lot better than that if we’re to get much further in enterprise-architecture.

Hence, as usual, best to start by going right back to first-principles: what is ‘science’, anyway?

There’s an important distinction here that’s usually missed, between ‘science as portrayed’ – the end-results of science – and ‘science as practised’ – the processes and practices via which those end-results are achieved. We’ll need to cover both here, but for most people, the only one they’d know about, and recognise as ‘science’, is the former: ‘science as portrayed’, the outward-form of ‘science’.

For this, there are some clearly-defined rules and principles:

- reproducibility – the same results must be reproducible by others, following the same methods under the same conditions

- predictive – the results may be used to predict the behaviours of phenomena in the future

- consistent – the assertions and outcomes are consistent, both internally within and in relation to itself, and externally in relation to other scientific laws and themes

- simplicity – Occam’s Razor should apply

- completeness – the results should cover all phenomena within the context

And, to cover any questions about any of the above:

- peer-review – establish consensus about what is true and what is not

Which, when we put them all together, would at first seem to give what so many managers so desperately desire: the possibility of certainty and control over what happens, both now and in the future. Hence the much-lauded notions of ‘scientific management’ and the like.

But there’s a catch.

A big catch.

A catch so big that, for most practical purposes in business – and often elsewhere as well – it renders the whole concept of ‘control’ down to little more than a dangerous delusion. If there is such a thing as ‘scientific management’, it ain’t that kind of science…

The catch centres around that small clause about “following the same methods under the same conditions” – because, in reality, we never do have exactly the same methods, or exactly the same conditions. And if we don’t have exactly the same methods or conditions, we can’t have reproducibility, or consistency, or simplicity, or completeness or – most important to those managers – predictability. It really is as final as that – and there’s no way to get round that fact, either.

The reason why people tend to think that such things are possible is that in practice, in some contexts, we can get quite close to that ideal, because there’s not much variation there. That’s certainly true for the mid-range of physics, much of chemistry, and some aspects of biology: technologies that derive directly and only from those themes can be surprisingly predictable.

Yet there’s a lot more to the real-world than just that subset of themes – and that’s where predictability will often fall apart. For example, many phenomena in sub-atomic physics can at present be described only in statistical terms, as probabilities. In some cases we can be very precise about those probabilities: half-life for radioactive isotopes is one such example. Yet that precision never changes the fact that it is only a probability, not a certainty:

- high-probability does not mean ‘will definitely happen’

- low-probability does not mean ‘will never happen’

(In business and elsewhere, how many people do we know who’ve got that one wrong? – probably including ourselves, if we’re honest…)
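As a rough illustration of those two points, here’s a minimal Python sketch. It assumes that every trial is independent and has the same probability, which real business events rarely are, and the function names are mine, purely for illustration.

```python
def prob_at_least_once(p: float, trials: int) -> float:
    """Chance that an event with per-trial probability p happens at least once."""
    # Assumes independent trials: P(at least once) = 1 - P(never)
    return 1.0 - (1.0 - p) ** trials


def prob_every_time(p: float, trials: int) -> float:
    """Chance that an event with per-trial probability p happens on every trial."""
    # Assumes independent trials
    return p ** trials


# A 'one-in-a-thousand' failure, given a thousand opportunities to occur
print(round(prob_at_least_once(0.001, 1000), 2))  # 0.63

# A '99% reliable' step, repeated a hundred times in a row
print(round(prob_every_time(0.99, 100), 2))  # 0.37
```

So under those assumptions the ‘low-probability’ event is more likely than not to turn up somewhere, and the ‘high-probability’ one is more likely than not to fail at least once.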

If we’re dealing only with large numbers, those variations may not seem to matter all that much: actuarial estimates in insurance should balance out over the whole, for example, as should most forms of betting in a casino. There is a science of probabilities, to help us handle that – but note that it’s not a ‘predictable science’ in the same sense as earlier above.
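To make that ‘balancing out over the whole’ a bit more concrete, here’s a small simulation sketch in Python. The even-money bet on a single-zero roulette wheel (18 winning pockets out of 37) is just my choice of example, and the function names, run-counts and seed are arbitrary assumptions.

```python
import random
import statistics


def average_payout(n_bets: int, rng: random.Random, win_prob: float = 18 / 37) -> float:
    """Mean payout per unit staked over n_bets even-money bets."""
    total = sum(1 if rng.random() < win_prob else -1 for _ in range(n_bets))
    return total / n_bets


def spread_of_averages(n_bets: int, n_runs: int = 100, seed: int = 42) -> float:
    """Standard deviation of the average payout across n_runs repeated sessions."""
    rng = random.Random(seed)  # fixed seed so that the sketch is repeatable
    averages = [average_payout(n_bets, rng) for _ in range(n_runs)]
    return statistics.pstdev(averages)


# The more bets per session, the less the session-averages vary from each other
for n in (10, 100, 2000):
    print(n, round(spread_of_averages(n), 3))
```

The spread shrinks as the numbers grow, which is why the casino can plan around the average. It never shrinks to zero, though, and it tells us nothing about how any one bet will turn out.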

Likewise for the probability-oriented parts of information-technologies, such as the whole concept of ‘noise’ in signal-theory. Again, there’s a definite science there, but it’s not ‘predictable science’: for example, to get a perfect guarantee of signal-reception, every time, we’d need a conductor of infinite dimensions, which ain’t gonna happen in the real-world – which means we have to accept, and devise work-arounds for, the fact that sometimes the signal itself will not and cannot be perfect.

Yet if we’re dealing with only small numbers, those variations matter a lot – so much so that it’s a fundamental principle, both in mathematics and in physics, that some things are inherently uncertain. In other words, we will never be able to achieve absolute certainty, about anything – all the way down to the very roots of matter itself. Or, in short, control is a myth – it does not and cannot exist. Ouch…

Part of the problem is that which way we look at things can also matter a lot. To give a physics example, if we look at radioactive atoms in terms of half-life – in other words, from the statistical perspective – we might well seem to have very high levels of predictability. But if instead we look at the same phenomena from the perspective of a single atom – a uniqueness perspective – things look very different: the half-life tells us the period over which there’s an exact 50:50 probability that this atom will decay, but tells us nothing at all about when the atom will actually decay – that part is inherently-uncertain.
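Those two perspectives can be put side by side in a short Python sketch. It uses the standard model in which each atom’s lifetime is drawn from an exponential distribution with decay-constant ln 2 divided by the half-life; the half-life of 10 time-units, the atom-count, the seed and the function names are all arbitrary assumptions for illustration.

```python
import math
import random


def decay_times(half_life: float, n_atoms: int, seed: int = 1) -> list[float]:
    """Simulated decay time for each of n_atoms independent atoms."""
    rng = random.Random(seed)  # fixed seed so that the sketch is repeatable
    rate = math.log(2) / half_life  # decay-constant = ln 2 / half-life
    return [rng.expovariate(rate) for _ in range(n_atoms)]


def fraction_decayed_by(times: list[float], t: float) -> float:
    """Fraction of the population that has decayed by time t."""
    return sum(1 for x in times if x <= t) / len(times)


times = decay_times(half_life=10.0, n_atoms=20_000)

# Statistical perspective: close to half the population has gone by one half-life
print(round(fraction_decayed_by(times, 10.0), 2))

# Uniqueness perspective: individual atoms are all over the place
print(round(min(times), 4), round(max(times), 1))
```

The population-level figure sits very close to one half, run after run; the individual decay-times range from almost immediately to many half-lives later.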

So to bring this same point to the business-context, consider the huge difference in the respective views onto a ‘customer-journey’ – as in the zigzag line in the diagram above – between the organisation’s view of an interaction-process, from the inside out, and a customer’s view of that same process, looking inward from the outside:

- To the organisation, the interactions would seem to conform to the usual large-number rules for statistics: it’s a big market out there, and any variations can be all but ignored, because they should get smoothed-out by the overall patterns in the overall flow. It looks predictable, possibly almost controllable: at worst, it’s just probabilities, a ‘numbers-game’.

- But to the customer, their experience is as a ‘market-of-one’: in most cases they won’t see or interact with any other customers. And whatever the context, some aspects of their needs will be unique to them – if only from the fact of being human. Which means that from their perspective, it’s a quantum-event, inherently unique, inherently different from every other.

And by definition, concepts of ‘control’ do not and cannot make sense with things that are unique: what works for one thing of the same apparent type may not work at all with another.

[If this isn’t obvious, and you’re a parent with more than one child, consider the upbringing of your own children: did everything work exactly the same way with each child? If you’d answered ‘Yes’, that in itself would be almost unique… 🙂 ]

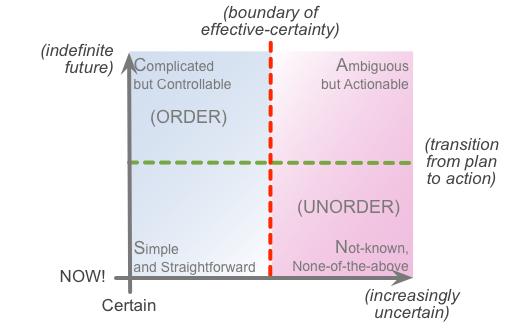

So let’s describe this in terms of the SCAN framework:

For the moment, we’ll ignore the vertical-axis (from plan to action) and focus only on the horizontal-axis, from absolute-certainty on the far-left, towards absolute-uncertainty and uniqueness out towards infinity on the far-right.

We’ve already established two key points about this:

- the public perception of ‘science’ is that it makes things predictable and certain

- in real science, it’s clear that absolute-certainty is almost certainly impossible in almost any real-world context

In SCAN’s terms, absolute-control and absolute-certainty – the kind of ‘control’ claimed in ‘scientific-management’ and its various offspring – could only be portrayed as an infinitely narrow line right over on the far-left of the frame: so impossibly thin that for all practical purposes it does not and cannot exist. It’s a myth.

Which, for ‘the science of enterprise-architecture’, leads to two more points:

- the public perception of ‘science’ makes little to no sense in any real-world business context

- whatever science enterprise-architecture might use, it will and must be different from that myth of pseudo-certainty

By definition, an enterprise-architecture must be able to cover ‘just enough’ of the overall context – the whole of the space covered by the SCAN context-space map – so as to deliver the required results for the enterprise. Yet this fact leads us to one more, rather important but definitely-painful point:

- no matter how much our clients may desire ‘control’ and ‘scientific’ management of their organisations, there’s no way that any real science of enterprise-architecture could ever deliver it

Ouch…

Tricky indeed…

Fortunately, there is a way out of this trap – and a real scientific one at that. But to make it work, we depend on two crucial understandings:

- the way that science actually works – the process and practice of scientific enquiry – is itself not ‘scientific’ as per that public-perception above (or, more precisely, the public-perception relates only to one specific subset of the overall process of science)

- whilst absolute-certainty may never be possible, effective-certainty may well be achievable

Effective-certainty is what we might also describe as ‘good-enough’-certainty: it isn’t actually certain, but it’s certain-enough for the organisation to be able to deliver the required outcomes to the overall enterprise.

In SCAN, the boundary of effective-certainty is indicated by the vertical red dotted-line. It’s what’s happily nicknamed the ‘Inverse-Einstein test’, after a much-quoted assertion attributed to Einstein: “the definition of insanity is to do the same thing and expect different results”. To the left of the red line, Einstein’s dictum holds true: we do (effectively) the same thing to get (effectively) the same results. But to the right of that line – and this happens a lot in any real-world business – the inverse may apply: we may have to do different things to get the (effective) same results, and/or doing the same thing may lead to different results.

The catch is that once we go past that red line, what happens becomes inherently uncertain: the only thing we can be certain of is that the further we go to the right of that red line – metaphorically speaking – the more uncertain this will get.

And – just to make things even more fun – the red line itself is only a metaphoric marker for the boundary of effective-certainty. Which itself is not certain: in effect, it will move back and forth along that axis, according to what’s happening in the context. Which could be Simple, Complicated, Ambiguous, maybe even entirely, truly Not-known before: in other words, a SCAN applied to the SCAN itself. Hence, straight away, we’re into a kind of recursion here…

Again, tricky indeed: right down the rabbit-hole, if we’re not careful…

Yet that’s exactly what we should expect, if we’re to tackle the science of enterprise-architecture in a proper scientific way.

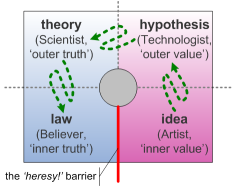

What we discover, when we explore those texts that describe the actual process of science – such as William Beveridge’s The Art of Scientific Investigation – is itself something that’s decidedly messy and uncertain. There is a kind of progression there, from ‘idea’ to ‘hypothesis’ to ‘theory’ to ‘law’, but overall it shows a pathway through a context-space that maps out very much like SCAN:

In many ways, it should be a continual cycle, much like PDCA or the like: but the catch is that the Believer doesn’t like letting go of their certainty of belief… (What most people describe as ‘science’ is actually much closer to a kind of pseudo-religion of science, or ‘scientism’ – a smoothed-out structure of belief about ‘science’, without much connection to the complexities of its real-world practice.) The result is that the cycle can become blocked at that point, often with highly-emotive accusations of ‘heresy!’ and the like: and yet the root meaning of ‘heresy’ is simply ‘choice’ – in effect, choosing to think differently – which is what we need if we are to have any chance of breaking out of a self-referential loop of circular-reasoning – or, for that matter, of finding the innovations needed in a changing business-context.

Hence one of the characteristics of real science is that it has to be able to let go of beliefs that it may currently think of as science. Since some of these beliefs may be those that it’s currently using as the basis for its work, this can present an interesting lift-yourself-by-your-own-bootstraps challenge…

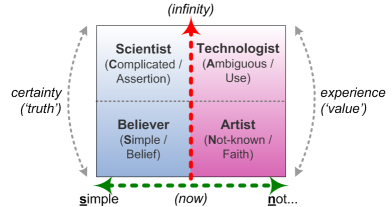

One way out is to view the process of enquiry as dependent on a set of distinct paradigm-modes – each with their own often mutually-incompatible disciplines – that we could summarise visually as follows:

We then use explicit tactics to switch between disciplines, according to what we’re doing and what we need to do – a literal ‘path of discovery’ around the context-space.

There’s also a useful cross-map to SCAN here, which in part indicates when and why we need to keep switching between those various modes and their respective disciplines:

What most people think of as ‘science’ – certainty, predictability, control – sits right against the left-hand edge of the frame. As long as we accept that we’re actually fudging things quite a bit, we can get something resembling ‘control’ anywhere between the left-hand edge and that vertical red dotted-line – the ‘inverse-Einstein boundary’, the boundary of ‘effective-control’. But beyond that, no: as with science itself, we have to start using things that actually aren’t what most people think of as ‘science’.

Up in the upper-right we have what are often called the ‘soft-sciences’: sociology, psychology and so on – all of them useful and important in enterprise-architecture, yet actually never certain. It’s where we find ‘complexity-science’ and suchlike, about which, to be honest, I often have serious doubts – not least because so much of it, on closer inspection, actually turns out to be yet another form of inherently-uncertain ‘soft-science’ dressed up in a pseudo-certainty guise. (Real complexities masked by largely-spurious pseudo-certain statistics and Complicated equations, and then further reduced to Simple so-called ‘laws’: in other words, straight back to Taylorism again, but in almost the worst possible way. When complexity is dumbed-down to a mere marketing-gimmick, all we can really do is sigh…)

Yet even complexity-science – even when it actually is for real – is often not enough here. What we’re actually dealing with, in business and elsewhere, is mass-uniqueness – an infinity of individual instances, events and incidences, which we can sometimes filter or interpret in terms of samenesses, but which ultimately always remain unique. Which, for sciences, places us closer to chaos-science than complexity-science: the latter deals with emergent-properties of relationships between those unique instances, but doesn’t help us at all with the actual uniqueness itself. But as yet, most chaos-science doesn’t really deal with the kinds of uniqueness-phenomena that we need to work with in enterprise-architecture, in business-areas such as:

- healthcare

- customer-service

- clothing and fashion

- sales and market-design

- information-search

- farming – weather and micro-climate

- city-planning – topography, geography and ‘local-distinctiveness’

So there we have it: not only is ‘scientific-management’ almost no use to us, but there’s not much support available to us from mainstream science either.

Which kinda leaves us on our own…

To illustrate the point, let’s go back to that initial list of attributes of ‘science’ again, and compare it to what we’re actually dealing with, most of the time, in enterprise-architecture:

- reproducibility? – even with a so-called ‘best-practice’, it’ll never be quite the same methods or same conditions (and often the key point anyway, for business-competition, is that the same results should not be reproducible by others!)

- predictive? – when the context itself is undergoing almost continual and (these days) accelerating change, any prediction based on current conditions is going to be uncertain at best

- consistent? – creating even a consistent terminology in a single business-domain across a whole enterprise is often enough of a nightmare in itself, let alone anything larger in scope or scale

- simplicity? – rather than Occam’s Razor, most of the time we’ll find ourselves struggling with our own contextual equivalent of Hickam’s Dictum, that “patients can have as many diseases as they damn well please!”

- completeness? – across a large, complex context, some of whose aspects will be changing dynamically on a second-by-second basis, or less, any notion of ‘completeness’ is inherently no more than a fantasy of wishful-thinking…

- peer-review? – when what we’re dealing with is unique and new, who exactly are our peers anyway?

So what does work? Where – if anywhere – is the science for architecture?

What we do know is where that science isn’t – what kind of science doesn’t work for our kind of work. The certainties of supposed ‘science’ – the certainties that so many managers crave – exist only on the furthest left-hand edge of the SCAN frame: everything else is, at best, an illusion of certainty…

Yet whilst we’re tasked with bringing things over towards that left-hand side as much as possible, most of our work occurs over on the right-hand side, on the opposite side of the inverse-Einstein boundary to the world of those managers – which means that we need to be careful not to allow their notions of ‘science’ to get too much in our way. For example:

- where managers and solution-designers need frameworks, to give clear instructions on what to do, we need metaframeworks – frameworks to help us design context-specific frameworks

- where they need methods and methodologies, we need metamethods and metamethodologies – the tools and techniques to identify and define those context-specific methods and methodologies

- where they need schemas and models and metamodels for data, we need metametamodels and metametametamodels – the tools and techniques that can create any context-specific metamodel for any required scope or level

- where they need toolsets with distinct notations to tackle distinct business-questions, we need tools that can cover every scope at every level of detail across the entire toolset spectrum

Hence it looks like the most useful short answer is in three parts:

- other than specific areas such as in the simpler parts of IT, it’s probably best almost to forget about ‘science-as-portrayed’ – the ‘science’ of certainty and suchlike

- instead, focus on ‘science-as-practised’ – the processes of ideation, experimentation, sensemaking and decisioning that underpin the actual science

- always remember that logic and the like have real limitations – they are useful, so we should probably use them wherever we can, and wherever it’s appropriate, but they’re by no means the only tool in the toolbox

There should be no doubt that it would be useful, and valuable, for enterprise-architects in particular to learn and maintain the disciplines of ‘science-as-practised’ – but it’s really important that those should not solely be the comfort-blanket of ‘science-as-theory’. For example, in Beveridge’s The Art of Scientific Investigation we find whole chapters on the role of chance, the use of intuition, the importance of strategy and politics, and the hazards and limitations of reason. There are distinct disciplines around each of those themes – and those are the disciplines of science that we need most in enterprise-architecture.

(There’s more detail on those disciplines in my book Everyday Enterprise-Architecture, if you’re interested, and that’s also what this whole weblog is about, really.)

Ultimately, though, we need to remember that we’re enterprise-architects, not scientists: science has a useful role to play in our work, but it’s not the real reason why we’re here! Our work is not about the would-be ‘completeness’ of science, but more about ‘just enough’ – just enough detail, just enough scope, just enough science – coupled with an awareness of ‘it depends’, that everything depends on everything else, and that there’s always a larger scope in which things will inherently become more blurry and ambiguous anyway.

And that’s why the real answer to “Is enterprise-architecture a science?” must be “Not much” – and always will be, too.

Science is not why we’re here: value is. Our emphasis should always be on usefulness, on real value, not imaginary ‘truth’ – delivering real business-value for our real business-clients with their real business-questions. Everything else is merely a possibly-useful means towards that end.

And if we ever forget the real reason for our role, we’d have kinda lost the plot, wouldn’t you think? 🙂

Over to you for comments and suggestions, anyways.

I liked this one Tom. My initial thoughts went to a bunch of entrenched dichotomies – many of which inform our thinking on what constitutes the validity of information. And in the EA sphere I guess that nearly always comes down to what is it that makes information valid as a basis for action – whether that is a design, management, or strategic action.

Empirical versus Rational.

Causal versus Intentional.

Reduction versus Construction.

Cartography versus Colonisation.

Collaboration versus Competition.

Means versus Ends.

(Not that these dichotomies are strictly mutually exclusive in any instance.)

But…

Science tends to empiricism, causality, reduction, cartography, means, and collaboration.

Business tends to empiricism, intentionality, construction, colonisation, ends and competition.

And while they share a predisposition to the empirical, your discussion on the appropriateness of the scientific method shows that what counts as empirical data in business and science is not necessarily the same. Nor should it be.

I agree with your conclusion. However, I would remove the scare quotes from truth and drop the imaginary.

I think it is correct to say our emphasis is “usefulness not truth”.

I think it is also important to add our emphasis is “function not form” as well.

(For while a rigorously scientific approach is a dead-end for EA, overly Platonic rationalist approaches are another “elephants’ graveyard” for the noblest EA intentions. And I suspect it has quite a few more rotting hulks than the former.)

And if business is essentially intentional this leads the conversation to some interesting corollaries:

What is it that tempers those intentions against evidence?

What is it that tempers those intentions against evil?

A culture of doing still requires a decent relationship with reality, and, of course, some ethics / values.

Being a regular reader of your blog I know these are questions you have already given some very good thought to.

Cheers.