The science of enterprise-architecture (short version)

(The previous post, ‘The science of enterprise-architecture’, covered a lot of ground, but ended up kinda long – again… So for those with tendencies towards TL;DR, here’s a somewhat shorter version. 🙂 )

How much is enterprise-architecture a science? To put it the other way round, how much does science even apply in enterprise-architecture?

The honest short-answer is ‘Not much’.

We do see a lot of what claims to be ‘science’ in enterprise-architectures – but to be blunt, not much of it matches up to any real-world test. To make sense of this, it’s best to start by going back to first-principles: what is ‘science’, anyway?

The key distinction is between what most people think of as ‘science’ – ‘science as portrayed’, the end-results of science – versus real ‘science as practised’, the processes and practices via which those end-results are achieved. For science-as-portrayed, there are explicit rules and principles:

- reproducibility – the same results must be reproducible by others, following the same methods under the same conditions

- predictive – the results may be used to predict the behaviours of phenomena in the future

- consistent – the assertions and outcomes are consistent, both internally, in relation to themselves, and externally, in relation to other scientific laws and themes

- simplicity – Occam’s Razor should apply

- completeness – the results should cover all phenomena within the context

- peer-review – establish consensus about what is true and what is not

All of which suggests the possibility of certainty and control over what happens: hence ‘scientific management’ and the like.

But there’s a huge catch, around “following the same methods under the same conditions”. In enterprise-architecture – especially in contexts with high inherent-uniqueness, such as healthcare, customer-service, clothing, fashion, sales, market-design, information-search, farming or city-planning – what we actually see is more like this:

- reproducibility? – even with so-called ‘best-practices’, it’s never quite the same methods or conditions (and often the key point is that the results should not be reproducible by others!)

- predictive? – where the context itself is undergoing almost continual and accelerating change, any prediction is always going to be uncertain

- consistent? – creating even a consistent terminology across an enterprise is often a nightmare, let alone anything larger in scope or scale

- simplicity? – rather than Occam’s Razor, we’ll often struggle instead with our equivalent of Hickam’s Dictum, that “patients can have as many diseases as they damn well pleases!”

- completeness? – across a large, complex, dynamically-changing context, ‘completeness’ is a fantasy of wishful-thinking…

- peer-review? – whenever we’re dealing with something new or unique, who are our peers anyway?

If we don’t have exactly the same methods or conditions, we can’t have reproducibility, consistency, simplicity, completeness or predictability – which also places tight constraints on how much we can use ‘science-as-portrayed’. And there’s no way to get round those facts, either.

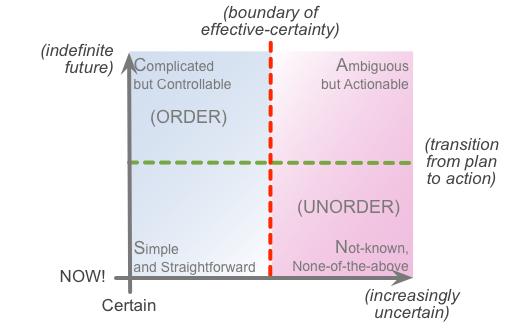

We can describe this with the horizontal-axis of the SCAN framework, from certainty on the far-left, versus uncertainty and uniqueness extending towards infinity on the far-right:

In SCAN’s terms, absolute-control and absolute-predictability exist only as an infinitely narrow line on the far-left. Yet by definition, an enterprise-architecture must cover the entire context-space spanned by the SCAN frame. Hence, in practice:

- the public perception of ‘science’ – as certainty and control – can be dangerously misleading in any real-world business context

- whatever science enterprise-architecture might use, it will and must be different from that myth of ‘certainty’

- no matter how much our clients may desire ‘control’ and ‘scientific’ management, there’s no way any real science of enterprise-architecture could deliver it

To use science in enterprise-architecture, we depend on two crucial understandings:

- the way that science actually works – the process and practice of scientific enquiry – is itself not ‘scientific’ as per that public-perception above

- whilst absolute-certainty may never be possible, effective-certainty may well be achievable

Effective-certainty is what we might describe as ‘good-enough’-certainty: it isn’t actually certain, but it’s certain-enough for the organisation to be able to deliver its required outcomes.

In SCAN, the boundary of effective-certainty is indicated by the vertical red dotted-line. Once we go past that line, what happens becomes inherently uncertain: and the further we go to the right of that line – metaphorically speaking – the more uncertain it gets.

Yet that’s exactly what we should expect, for a true ‘science of enterprise-architecture’. Texts that describe the actual process of science – such as William Beveridge’s The Art of Scientific Investigation – show that it’s decidedly messy and uncertain. It also has to be able to let go of beliefs that it may currently think of as science – an interesting bootstrap-challenge…

One option is to view the process of enquiry as a set of distinct paradigm-modes – each with their own distinct disciplines – that we could summarise in SCAN-like form as follows:

We then use explicit tactics to switch between disciplines, as required – a literal ‘path of discovery’ around the context-space.

Whilst we’re tasked with bringing things toward that left-hand side of the SCAN frame, most of our work occurs over on the right-hand side. For example:

- where managers and solution-designers need frameworks, we need metaframeworks – frameworks to help us design context-specific frameworks

- where they need methods and methodologies, we need metamethods and metamethodologies – tools and techniques to define context-specific methods and methodologies

- where they need schemas and metamodels for data, we need metametamodels and metametametamodels – tools and techniques to create a context-specific metamodel for any scope or level

- where they need toolsets with distinct notations to tackle distinct business-questions, we need tools that can cover every scope at every level of detail across the entire toolset spectrum

Hence it looks like the most useful key to an answer is in three parts:

- other than for some aspects of IT and machines, it’s probably best to almost forget about ‘science-as-portrayed’ – the ‘science’ of certainty and suchlike

- instead, focus on ‘science-as-practised’ – the processes of ideation, experimentation, sensemaking and decisioning that underpin real science

- always remember that logic and the like have real limitations – they are useful, but they’re not the only tool in the toolbox

Enterprise-architects should learn and maintain the disciplines of ‘science-as-practised’. For example, Beveridge’s The Art of Scientific Investigation describes the role of chance, the use of intuition, the importance of strategy and politics, and the hazards and limitations of reason. There are distinct disciplines around each of those themes – and those are the disciplines of science that we need most in enterprise-architecture.

Our work is not about the would-be ‘completeness’ of science, but more about ‘just enough’ – just enough detail, just enough scope, just enough science – coupled with an awareness of ‘it depends’, that everything depends on everything else, and that there’s always a larger, more ambiguous scope.

And that’s why the real answer to “Is enterprise-architecture a science?” must be “Not much” – and always will be.

Ultimately, too, we need to remember that we’re enterprise-architects, not scientists. Science and its ‘truths’ are not the reason we’re here: value is. We need to keep the focus on usefulness, on real value, not imaginary ‘truth’ – delivering real business-value for our real business-clients with their real business-questions. Everything else is merely a possibly-useful means towards that end.
