Hypotheses, method and recursion

What methods do we need for experiment and research in enterprise-architecture and the like? Should and must we declare exactly what our hypothesis will be before we start?

Those are some of the questions that Stephen Bounds triggered off for me with this tweet, a couple of days back:

- smbounds: All sciences should preregister their hypotheses, but esp soft sciences like #psychology and #km Guardian: Psychology’s ‘registration revolution’

I duly read the article, which, yes, all seemed fair enough on the surface. But I do have some severe reservations about how well those kinds of ideas actually work in real-world practice, as summarised in the post that I referenced in my reply-tweet:

- tetradian: “all sciences should register their hypotheses” – yes, sort-of… e.g. see my ‘The science of enterprise-architecture‘ #entarch

Which triggered off a really nice back-and-forth on Twitter:

- smbounds: A good read & the uncertainty of a single event is worth emphasizing. But playing the odds still pays off in the long run. // so scientific method is still important for EA and KM, even if it is just making predictions in the aggregate.

- tetradian: strong agree scientific-method important in #entarch – yet must also beware of its limits – eg. see also ‘Enterprise-architect – applied-scientist, or alchemist?‘ // relationship b/w repeatable (‘scientific method’) and non-repeatable (not suited to method) is fractal & complex in #entarch etc // not using scientific-method where it fits is unwise; (mis)using it where it doesn’t fit is often catastrophic (e.g BPR) #entarch

- smbounds: scientific method is not about repeatability or cause & effect. It is about testing hypotheses in a way that minimises our bias.

- tetradian: oops… scientific-method is exactly about repeatability and predictability – that’s its entire basis and raison-d’être… 🙂 // confirmation-bias and other cognitive-errors are real concerns – yet ultimately it’s all about repeatability // re science limits, recommend ‘Art Of Scientific Investigation‘ and ‘Against Method‘

- smbounds: disagree: the *method* needs to be repeatable, but the hypothesis could be one of non-repeatability

- tetradian: “the *method* needs to be repeatable” – this is where it gets tricky 🙂 – method vs meta-method, fractal-recursion in method etc // probably best I blog on this (method and meta-method in #entarch etc)

I asked for permission to quote the tweets above, which Stephen kindly gave – hence this post.

The first part of my response would be to quote from the Wikipedia page on Paul Feyerabend‘s book ‘Against Method‘:

The abstract critique is a reductio ad absurdum of methodological monism (the belief that a single methodology can produce scientific progress). Feyerabend goes on to identify four features of methodological monism: the principle of falsification, a demand for increased empirical content, the forbidding of ad hoc hypotheses and the consistency condition. He then demonstrates that these features imply that science could not progress, hence an absurdity for proponents of the scientific method.

(See the Wikipedia page for the respective page-numbers in the original printed book.)

The relevant point here is that one of the key assertions that’s made in that Guardian article is a requirement for “the forbidding of ad-hoc hypotheses” – and yet, as in the quote above, that is explicitly one of the four foundation-stones of ‘scientific method’ whose validity Feyerabend demolishes in Against Method. Beveridge, in The Art of Scientific Investigation, perhaps isn’t quite so extreme as Feyerabend, but not far off: there’s a whole chapter on hypothesis, anyway, including a section on ‘Precautions in the use of hypothesis’ (pp. 48-52). In short, it’s nothing like as clear-cut as the Guardian article makes it out to be – and a lot more problematic than it looks, too.

One of the things that makes it problematic is a fundamental trap I’ve mentioned here a few times before now, known as Gooch’s Paradox: that “things not only have to be seen to be believed, but also have to be believed to be seen“. That’s where those cognitive-errors such as confirmation-bias arise: our beliefs prime us to see certain things as ‘signal’, and dismiss everything else as ‘noise’. Defining an a priori hypothesis is, by definition, a belief: that there is something to test, and that this chosen method and context of experimentation is a (or the) way to test it. Which, automatically, drops us straight into the trap of Gooch’s Paradox: and if we don’t deliberately compensate for the all-too-natural ‘filtering’, we won’t be able to see anything that doesn’t fit our hypothesis. Oops…

And, yes, I’m going to throw in a SCAN frame at this point, because it’s directly relevant to the next part of the critique:

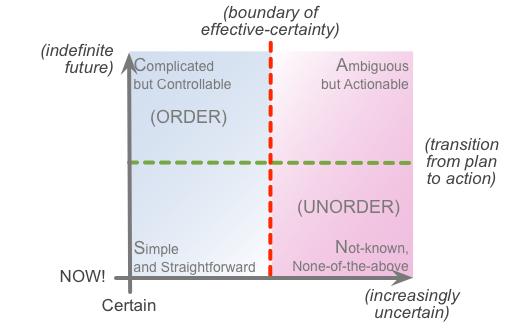

In practice, predefined a priori hypotheses will only make sense with ‘tame-problems’ that stay the same long enough to test an hypothesis against them. Which, in SCAN terms, pretty much places us well over to the left-hand side of SCAN’s ‘boundary of effective-certainty’. There can be quite a bit of variation – in fact the variation is exactly what an hypothesis should test, and test for – yet even that variation itself has to be ‘tame’ enough to test.

In the mid-range of the hard-sciences – between the near-infinitesimally-small and the near-infinitely-large – things do tend to stay tame enough for a priori hypotheses to work, or at least be useful. Yet outside of that mid-range, and even more so in the ‘soft sciences’ such as psychology and large parts of knowledge-management – the two areas mentioned in Stephen’s initial tweet – we’re much more likely to be dealing with wild-problems rather than tame-problems: in other words, more often over to the right of that ‘boundary of effective-certainty’. Where, courtesy of Gooch’s Paradox, those a priori hypotheses are more likely to lead to exactly the kind of circular self-referential ‘junk science’ that this ‘registration revolution’ is intended to prevent…

The other real trap here is fractal recursion – that, to use SCAN terminology again, we have elements of the Simple, Complicated, Ambiguous and Not-known themselves within things that might seem to ‘be’ only Simple, Complicated, Ambiguous or Not-known. (See my post ‘Using recursion in sensemaking’ for a worked-example of this, using the domain-boundaries of the SCAN frame itself.) No real surprise there, I’d guess: to my mind, it should be obvious and self-evident once we understand the real implications of experiential concepts such as ‘every point contains (hints of) every other point’. And the reason why this is such a trap is that a priori hypotheses will sometimes work even in the most extreme of the Not-known, but then suddenly make no sense – which is what we’d more likely expect out in that kind of context, but confuses the heck out of someone who does assume that it’d continue working ‘as expected’.

I’m glad to see that there is some hint of awareness of this in the Guardian article:

The reasoning behind [the ‘registration’ initiative] is simply this: that by having scientists state at least part of what they’re going to do before they do it, registration gently compels us to stick to the scientific method.

It does at least acknowledge some of the uncertainties, in that point about “at least part of”, rather than an assertion of ‘must’, or ‘always’. Yet what still doesn’t seem to be there, in that quote, is enough awareness of the very real limits of the ‘scientific method’…

As Stephen says, there is a real need for consistent method. Yet given that there are real limitations to the validity and usefulness of the classic scientific-method, what’s more needed instead is meta-method, generic or abstract methods for creating other context-specific methods dynamically according to the nature of the context. For example, if we again use the SCAN frame as a base-reference, we can use one of its cross-maps, of typical ‘themes’ in each of the SCAN domains, to suggest appropriate techniques to work with and use for tests in each type of context:

As described in the posts ‘Sensemaking and the swamp-metaphor‘ and ‘Sensemaking – modes and disciplines‘, the ‘swamp metaphor’ provides another worked-example:

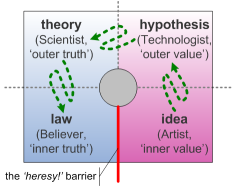

Which in turn links up with the classic scientific-method sequence of ‘idea, hypothesis, theory, law’:

The crucial part in both of these examples is that we use the respective cues to select methods that match up with the underlying criteria of the respective domain: don’t mix them up! Which, unfortunately, is very easy to do, particularly when we’re dealing with fractal-recursion…

To get another view on how meta-methods work, perhaps take a look at some of the posts here on metaframeworks, such as ‘On metaframeworks in enterprise-architecture‘, and the series of posts starting with ‘Metaframeworks in practice – an introduction‘.

Overall, though, the key point here is that a single method will not be sufficient to cover all of the different types of context that we deal with in enterprise-architecture and the like – perhaps especially for the ‘soft-sciences’ elements of the practice. And yes, that ‘no single method’ constraint does apply to the fabled ‘scientific method’ too: trying to use it where its assertions and assumptions inherently do not and cannot apply is not a wise idea!

Leave it there for now: over to you for comments, perhaps?

Hi – thanks for this really interesting article.

Two quick points:

1. You say: “The relevant point here is that one of the key assertions that’s made in that Guardian article is a requirement for “the forbidding of ad-hoc hypotheses”

That’s not quite right. There is no problem in generating an ad hoc hypothesis from the data. The problem is when researchers pretend, after the fact, that this hypothesis was a priori (the practice of HARKing). In other words, we shouldn’t use the same data to simultaneously generate a hypothesis and test the same hypothesis. This is circular and an act of scientific deception, particularly when the HARKed hypothesis is pitched against “stooge” competitor hypotheses that the author knows never had a chance of being supported. It also falsely elevates the prior belief in the HARKed hypothesis. According to the H-D scientific method, we should instead conduct a new experiment to test the ad hoc generated hypothesis in an a priori way.
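To make that circularity concrete, here is a minimal simulation sketch in Python. It is not from the original discussion: the function names, the 20 unrelated measures, the group size of 30 and the 1.96 cut-off are all illustrative assumptions. Every measure is pure noise, so any 'significant' result is a false positive. The sketch compares testing the best-looking measure on the same data that suggested it (HARKing) with testing it in a fresh experiment (the a priori route).

```python
import random
import statistics


def group_z(rng, n):
    """z-score for the difference between two group means of pure noise (sd = 1)."""
    a = [rng.gauss(0, 1) for _ in range(n)]
    b = [rng.gauss(0, 1) for _ in range(n)]
    # Standard error of a difference of two means, each from n draws with sd 1.
    return (statistics.fmean(a) - statistics.fmean(b)) / (2 / n) ** 0.5


def simulate(trials=1000, n_vars=20, n=30, seed=1):
    """Return (false-positive rate when HARKing, false-positive rate with a fresh test)."""
    rng = random.Random(seed)
    harked_hits = 0
    fresh_hits = 0
    for _ in range(trials):
        # Exploratory data set: n_vars unrelated measures, no real effect anywhere.
        explore = [group_z(rng, n) for _ in range(n_vars)]
        best = max(range(n_vars), key=lambda i: abs(explore[i]))
        # HARKing: 'test' the hypothesis on the very data that suggested it.
        if abs(explore[best]) > 1.96:
            harked_hits += 1
        # A priori route: run a new experiment on the chosen measure only.
        if abs(group_z(rng, n)) > 1.96:
            fresh_hits += 1
    return harked_hits / trials, fresh_hits / trials


if __name__ == "__main__":
    harked, fresh = simulate()
    print(f"false positives, same data:  {harked:.2f}")
    print(f"false positives, fresh data: {fresh:.2f}")
```

Under these assumptions the same-data rate should come out well above one half, while the fresh-data rate should stay close to the nominal 5%. The hypothesis itself is identical in both cases; only the independence of the test data differs.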

2. “Defining an a priori hypothesis is, by definition, a belief: that there is something to test, and that this chosen method and context of experimentation is a (or the) way to test it. ”

Yes, and pre-registration is only appropriate if there *is* a hypothesis to test. It isn’t necessary for purely exploratory work that focuses instead on hypothesis generation. The problem with the publishing culture in psychology, and in many life sciences, is that there is no proper avenue for publishing purely exploratory research. Scientists are instead forced to shoehorn exploration into a confirmatory, hypothesis-driven framework, which requires HARKing. We’re in the process of addressing this too by creating a new article format at Cortex called “Exploratory Reports”, specifically for exploratory studies.

2. “the key point here is that a single method will not be sufficient to cover all of the different types of context that we deal with in enterprise-architecture”

I agree. Pre-registration has never been proposed as a universal or mandatory solution for all science. We’ve only ever proposed it as an option for researchers who want to embrace transparency in hypothesis-testing. I should emphasise too that pre-registration places no limits on exploratory analyses and hypothesis generation. These are simply reported in such a way that readers can tell the difference between them and the a priori hypotheses. How can transparency be a bad thing?

For an extended discussion of these issues: http://orca.cf.ac.uk/59475/1/AN2.pdf

Hi Chris – I presume from the comments and context that you’re one of the key promoters of the ‘pre-registration’ proposals? In which case I’ll assume two things: one, that you’ve almost certainly been somewhat misquoted or misrepresented in the Guardian article; and two, given that, and the background, I’d probably best mostly shut up and listen? 🙂 At the very least, many thanks for taking me seriously on this!

(Your linked PDF duly read and possibly-digested… 🙂 Bear in mind that my main work-focus these days is on enterprise-architectures [EA], including their social aspects: it’s related to psychology, obviously, but it’s not quite the same, probably with a much higher level of intentional and often-necessary localised-uniqueness. My last experience closer to your kind of field was in meta-analysis of so-called ‘research’ on domestic-violence, where what you call ‘Questionable Research Practices’ turned out to be not so much the exception as the actively-condoned norm… Anyway, on to your specific points.)

On your 1:

— “The problem is when researchers pretend, after the fact, that this hypothesis was a priori”

Strongly agree that that’s a huge problem – especially when people aren’t even aware that they’re doing it…

— “we shouldn’t use the same data to simultaneously generate a hypothesis and test the same hypothesis. This is circular and an act of scientific deception”

In your field – and, as above, in my experience of ‘research’ on domestic-violence – I’d agree that that would indeed be scientific-deception. It’s a bit trickier in some of the areas that I work in, though, because we can quite often need a little bit of deliberate ‘deception’ (or, less pejoratively, ‘by stealth’) to get people over the hump of what I term ‘Gooch’s Paradox’ – that “things not only have to be seen to be believed, but also have to be believed to be seen”. Methodologically speaking, there are some recursions here that can become truly horrible if we’re not darn careful – but we actually do need those recursions if we’re to get anywhere useful. (You can probably see from that that I’m quite a strong fan of Feyerabend – and Pirsig, for that matter – though my real guiding light is Beveridge’s ‘The Art of Scientific Investigation’, referenced and linked in the post above.)

— “According to the H-D scientific method”

My apologies: I don’t know what this is, and it doesn’t seem to be referenced as such in your paper – advice/links, please?

On your 2(a):

— “pre-registration is only appropriate if there *is* a hypothesis to test. It isn’t necessary for purely exploratory work that focuses instead on hypothesis generation”

Okay, that makes me a lot happier about this – not least because much of our work in EA and the like fits more in the latter category, of ‘exploratory work [for] hypothesis generation’.

— “The problem with the publishing culture in psychology … which requires HARKing.”

Yuk. 😐 Yeah, I really do see your point there – and your problem, too.

— “creating a new article format … specifically for exploratory studies”

Would be very interested to see that, and more about how it works, because that may solve one of the major problems we have around publishing and the general EA ‘body of knowledge’, precisely because so much of our work has high levels of inherent-uniqueness, and hence necessarily exploratory.

On your 2(b):

— “Pre-registration has never been proposed as a universal or mandatory solution for all science. We’ve only ever proposed it as an option for researchers who want to embrace transparency in hypothesis-testing.”

Good to hear that – and it’s a key point that didn’t come over clearly enough in the Guardian article.

What also wasn’t made clear enough in that article, and is a really key point in your paper, is that ‘pre-registration’ is actually more about guaranteed-publication: null-results and negative-results are extremely important from a science-perspective, but tend to get shut out from publishing in a culture that insists on (purported) positive-results (or, in the domestic-violence context, ‘politically-correct’ results…). Hence the huge pressures towards HARKing etc, in order to re-spin any results into supposedly-positive confirmation of the post-propter-hoc hypothesis. In that sense, I strongly agree with your aims, and what you’re doing about it – making it ‘safe’ to publish null- or negative-results.

— “How can transparency be a bad thing?”

Depends on whose perspective…? </irony> When politics and/or big-money get involved – and, for that matter, big reputations or big egos – it’s fairly safe to assume that there will be a lot of pressures against transparency, and a lot of people who will think that transparency is a very bad thing indeed. :bleak-wry-grin:

The catch, for us in EA and the like, is that transparency is far from unproblematic – in the methodological sense of the term. A lot of things we deal with do require either an explicit lack of transparency, or very careful juggling of trade-offs around transparency: trade-secrets, the various forms of secrecy around so-called ‘intellectual-property’, strategic-secrets (including military, in my own case), financial-secrets, legal-secrets, and more mundane forms such as personal-privacy and personal financial-security (credit-card details and suchlike). Although it’s by no means sufficient, often the first level of security is via obscurity – the exact inverse of transparency. It’s tricky, to say the least… your relatively-straightforward form of transparency would be a real luxury for us! 🙂

(Would love to continue the conversation on this, if you wish?)

Hi Tom –

So I think we are in basic agreement. I must say it’s really interesting and useful to get a perspective on this from somebody in a different discipline.

I should point out that I am the author of that Guardian piece. You’re right that some points could have been presented more clearly – e.g. that pre-registration isn’t being suggested as mandatory or universal. As always, getting all the nuances into a short blog post is a challenge and not something I have yet mastered!

H-D scientific method = the hypothetico-deductive model of the scientific method (see Figure 1 in the PDF I linked to in my first comment).

You’re right also that a key feature of the pre-registration model we’ve proposed is that the article is accepted provisionally by the journal. We initially set up this format at the journal Cortex (where I’m on the editorial board). Last summer we called for it to be offered across the life sciences, and so far 9 journals have done so – and more to come. You can read the open letter here:

http://www.theguardian.com/science/blog/2013/jun/05/trust-in-science-study-pre-registration

The advantage of journal-based pre-registration is exactly as you point out: it not only eliminates HARKing and other questionable practices – it also ensures that journals don’t select which articles to publish based on the results. So it effectively eliminates the file drawer.
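As a rough illustration of why the file drawer matters, here is a small Python sketch. It is not part of the original exchange: the true effect of 0.1, the group size of 30, the 1.96 cut-off and the function names are all assumptions chosen for illustration. It simulates many small studies of a weak real effect, then compares the average effect across all studies with the average across only those that reached 'significance', which stands in for a journal that selects on results.

```python
import random
import statistics


def study_effect(rng, true_effect, n):
    """Observed difference in means for one two-group study (sd = 1 in both groups)."""
    treated = [rng.gauss(true_effect, 1) for _ in range(n)]
    control = [rng.gauss(0, 1) for _ in range(n)]
    return statistics.fmean(treated) - statistics.fmean(control)


def file_drawer(studies=2000, true_effect=0.1, n=30, seed=2):
    """Return (mean effect over all studies, mean effect over 'published' studies)."""
    rng = random.Random(seed)
    se = (2 / n) ** 0.5  # standard error of the difference in means
    effects = [study_effect(rng, true_effect, n) for _ in range(studies)]
    # Results-based selection: only 'significant' studies leave the file drawer.
    published = [e for e in effects if abs(e) / se > 1.96]
    return statistics.fmean(effects), statistics.fmean(published)


if __name__ == "__main__":
    all_mean, published_mean = file_drawer()
    print(f"mean effect, all studies:       {all_mean:.2f}")
    print(f"mean effect, published studies: {published_mean:.2f}")
```

With these assumed numbers the full set of studies should average close to the true effect, while the 'published' subset should overstate it several times over. Accepting articles before the results are known removes that selection step.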

I don’t think it’s the answer to everything, and I don’t think it should be mandatory, but it is helping to make the life sciences more transparent.

See also the Open Science Framework which supports pre-registration and other excellent transparency initiatives such as data sharing: https://osf.io/

Chris

@Chris: “So I think we are in basic agreement.”

Yes, I’d say we are, and were, anyway. There’s one point I’d like to explore a bit more, but I’ll come back to that after replying to your points above.

@Chris: “I should point out that I am the author of that Guardian piece.”

Oops… my apologies, I should have noticed that. 😐 Hence not exactly ‘misquoted’, unless you’d consider that you’ve misquoted yourself? :wry-grin: As for “getting all the nuances into a short blog post is a challenge”, have you noticed yet how insanely long so many of my blog-posts here have ended up to be? 😐

@Chris: “H-D = hypothetico-deductive”

Okay, understood – thanks. That’s another part of what I want to come back to in a moment.

@Chris: “see Figure 1 in the PDF”

Oops – sorry… (Another classic example of ‘RTFM’ on my part, perhaps? – or maybe ‘RTFP’, ‘Read The… uh… Fine Paper’, in this example… 😐 )

@Chris: “See also the Open Science Framework”

Yes, that I had heard of before this – if not properly read about, though… Agreed that both that and ‘pre-registration’ are great examples of tactics to improve transparency, sharing and better research-methodologies overall.

(Earlier – and coming back to what I’d like to expand on: )

@Chris: “I must say it’s really interesting and useful to get a perspective on this from somebody in a different discipline.”

Strong agree there.

To be blunt, though, I’d have to admit I’m more than a bit jaundiced about academia and the soft-sciences: the scope and scale of the (self)-dishonesty and, all too often, outright fraud that we came across in that meta-analysis of the domestic-violence ‘industry’, and its political and social consequences, all became a bit too much to bear. For example, in Australia, we couldn’t find any item of so-called ‘research’ on DV that had a fully-defensible methodology: most were riddled with really basic methodological errors such as circular-reasoning, and much of even the arithmetic literally didn’t add up. In every case, the errors either inflated the female victim-rate, reduced or outright eliminated the male victim-rate, or both – there were no exceptions. As for the citation-trails… – well, frequently we found that in the rare case where they did lead to a methodologically-defensible study, the re-interpretations applied were often completely opposite to the original (such as in one US case, where male DV-victims were all reclassified as female in the subsequent Australian report).

And in our area of EA and the like, much of so-called ‘complexity-science’ is barely any better: I’ve frequently seen arbitrary-opinion repackaged as ‘absolute fact’, or assigned a much broader scope than actually applies: one well-known case was a study of information-flow in a very narrow subset of the largely-imaginary world of economics, repackaged as ‘complexity-science’ applicable to all aspects of business and beyond. Hence, again, a bit too over-sensitive to the scope and scale of bulimic bullshit too often experienced in this field… And hence, in turn, my apologies if I over-reacted a bit too much to the much more sensible ‘pre-registration’ proposals. 😐

Moving on from that, though, there are real challenges that we face that perhaps may not be so extreme in your field, but definitely would (or should?) apply. Chief amongst those is how much the H-D model is even applicable, if at all, to wild-problems (aka wicked-problems): as I understand the methodological logic of it, an H-D approach could only make sense with tame-problems – or at most, mostly-tame problems with identifiably-constrained wild-problem elements, surely?

How would the H-D model cope with ‘variety-weather‘, where the variety upon which an hypothesis is based is itself undergoing unpredictable change?

How would you design an H-D based experiment in a context with high or extreme inherent-uniqueness, where by definition it must be all but impossible to pre-formulate any hypothesis? (That’s what the ‘Not-known’ region in the SCAN frame – novelty and/or uniqueness at real-time – helps to highlight and, we’d hope, address.)

And if you can’t use an H-D model for your research, what do you use? How do you describe it, document it, explain it to others, assess, validate and verify your approach? It ain’t easy… 😐

Those are the kinds of challenges that we have to work with in EA, pretty much all of the time. We have to be able to work out which approach – including H-D – applies in any context, often on-the-fly, and still be able to deliver a meaningful result, time after time, even if and where no-one else (and possibly even we ourselves) can understand or even describe how the heck we got there… Kinda explains why there ain’t much hair still left on top here, perhaps? 🙁 – and also why I’m sometimes a bit too over-antsy about the purported universality of H-D and other academic-style approaches? Hence, again, my apologies, if so – but hope this helps in your work someways too, anyway.

Haha. That’s of course THREE points. Consequences of commenting before coffee: lose power to count!

No comment. Definitely no comment. 🙂 (Because have you noticed yet the number of missing-words and other errors scattered throughout this blog of mine…? 🙁 🙂 )

(And yes, coffee is an essential requirement. In large volumes. Not quite intravenous, yet, but headin’ there… 🙂 )

For what it’s worth, I labelled my replies to your second and third items as ‘2(a)’ and ‘2(b)’ respectively – I presume that makes sense enough?

Thanks again, anyways.