Towards a whole-enterprise architecture standard – 6: Training

For a viable enterprise architecture [EA], now and into the future, we need frameworks, methods and tools that can support the EA discipline’s needs.

This is Part 6 of a six-part series on proposals towards an enterprise-architecture framework and standard for whole-of-enterprise architecture:

- Introduction

- Core concepts

- Core method

- Content-frameworks and add-in methods

- Practices and toolsets

- Training and education

This part explores what training, education and certification we might need to cover all of the previous material: the core-concepts from Part 2, the metamethod from Part 3, the metaframework from Part 4, and the practices and requirements for toolsets and suchlike from Part 5.

Rationale

What skills do we need for whole-enterprise architecture? How do we develop those skills? If the effective scope is the entirety of the enterprise, how on earth do we train people for this? And how do we assess whether people are capable of doing that work in the first place?

All of which brings us to the vexed questions of training, education and certification…

For which, to be blunt, most current approaches for the enterprise-architecture context are somewhere between woefully-inadequate and just plain wrong. In short, another concern around which we need to go right back to first-principles, and start again pretty much from scratch.

First, then, is there any difference between training and education? And if there is, does it matter anyway? The short-answers are yes, there is, and it does:

- training almost literally puts people on a predefined track – the ability to provide and act on predefined answers to predefined questions for predefined needs

- education invites and invokes an ability to literally ‘out-lead’ appropriate responses to ambiguous, uncertain or previously-unknown contexts and needs

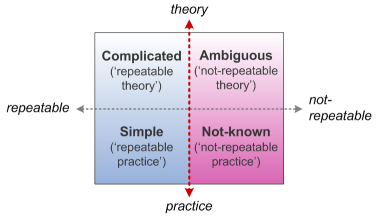

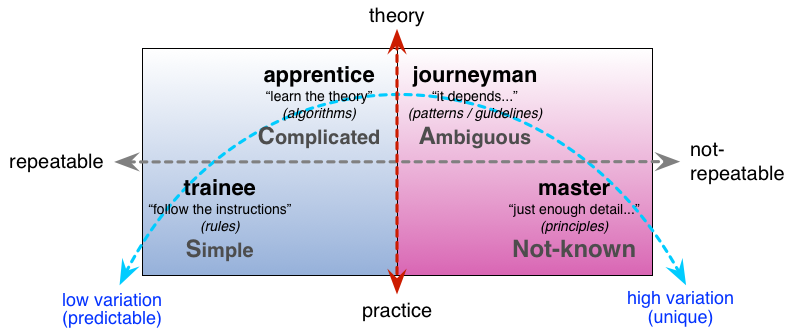

We can illustrate the difference with a SCAN mapping:

The horizontal axis on SCAN is an indeterminate scale from absolute-certainty or absolute-sameness on the left, to infinitely-uncertain or absolutely-unique, somewhere way over to the right. The red dotted-line across that axis represents a variable ‘boundary of effective-certainty’:

- to the left of that boundary, doing the same thing leads to the same result

- to the right of the boundary, doing the same thing can, may or will lead to different results, or we may or must do different things to get the same effective result

In short, training only makes sense in those parts of the context that map to the left-side of that boundary. To the right, we’re going to need real skills, which in turn arise only from some form of education or self-education.

The vertical axis on SCAN is an arbitrary scale of the amount of time available for assessment and decision-making before action must be taken – the latter indicated by the ‘NOW!’ as the baseline, with time-available extending ever upward towards an infinite future relative to the ‘NOW!’. The green dotted-line across that axis represents a highly-variable yet real transition from theory to practice, or from plan to action. For humans at least:

- above the boundary, there is time for considered or ‘complicated’ evaluation, and plans and decisions are rational – or may seem so, at least

- below the boundary, there is time only for simple evaluation in real-time, and plans and decisions are emotional

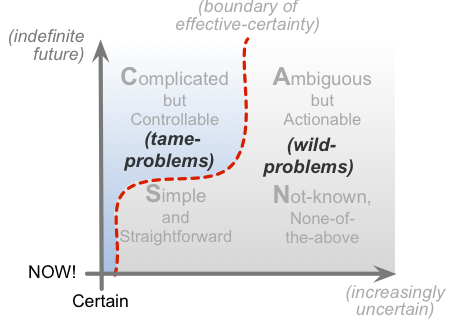

Which, overall, we can summarise visually as follows:

Which, going back to the previous graphic above, gives us the typical decision-base and assessment-tactics in each of those four ‘domains’ in the SCAN context-space:

- Simple: rule-based; assessment via rules, rotation (switching between views, work-instructions etc)

- Complicated: algorithm-based; assessment via reciprocation (balance across system), resonance (feedback, feedforward etc)

- Ambiguous: probability- or guideline-based; assessment via recursion (patterns, self-similarity, fractality etc), reflexion (holographic ‘whole contained within the part’)

- Not-known: principle-based; assessment via reframe, rich-randomness (guided-serendipity etc)

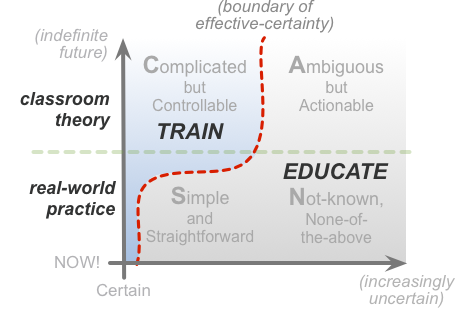

And which, when we map all of that to the question of training versus education, gives us this quick summary:

- Training focusses more on ‘correctness’ and content, which fits the needs of the Simple and Complicated domains

- Education focusses more on ‘appropriateness’ and context, which fits the needs of the Ambiguous and Not-known domains
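To make the shape of those two mappings a little more concrete, here is a minimal Python sketch of how the two SCAN boundaries split the context-space into the four domains, with the decision-base and assessment-tactics attached to each, and the training-versus-education split on top. It is an illustration under stated assumptions, not part of SCAN itself: the 0-to-1 numeric scales, the names `scan_domain`, `suits_training`, `certainty_boundary` and `action_boundary`, and the fixed default boundary values are all hypothetical. In SCAN both boundaries are variable and context-dependent.

```python
from dataclasses import dataclass
from enum import Enum


class Domain(Enum):
    SIMPLE = "Simple"
    COMPLICATED = "Complicated"
    AMBIGUOUS = "Ambiguous"
    NOT_KNOWN = "Not-known"


# Decision-base and assessment-tactics per domain, as summarised in the text.
DECISION_BASE = {
    Domain.SIMPLE: ("rule-based", ["rules", "rotation"]),
    Domain.COMPLICATED: ("algorithm-based", ["reciprocation", "resonance"]),
    Domain.AMBIGUOUS: ("probability- or guideline-based", ["recursion", "reflexion"]),
    Domain.NOT_KNOWN: ("principle-based", ["reframe", "rich-randomness"]),
}


@dataclass
class ContextPoint:
    # Hypothetical scales: 0.0 = absolute certainty (horizontal) or 'NOW!' (vertical);
    # larger values = more uncertainty / more time available before action.
    uncertainty: float
    time_available: float


def scan_domain(point: ContextPoint,
                certainty_boundary: float = 0.5,
                action_boundary: float = 0.5) -> Domain:
    """Place a point in one of the four SCAN domains (hypothetical fixed boundaries)."""
    ordered = point.uncertainty < certainty_boundary      # left of the red dotted-line
    considered = point.time_available > action_boundary   # above the green dotted-line
    if ordered:
        return Domain.COMPLICATED if considered else Domain.SIMPLE
    return Domain.AMBIGUOUS if considered else Domain.NOT_KNOWN


def suits_training(domain: Domain) -> bool:
    # Content-based training fits the Simple and Complicated domains;
    # Ambiguous and Not-known need education instead.
    return domain in (Domain.SIMPLE, Domain.COMPLICATED)


if __name__ == "__main__":
    p = ContextPoint(uncertainty=0.8, time_available=0.1)
    d = scan_domain(p)
    print(d.value, DECISION_BASE[d][0], suits_training(d))
```

For a point that is both uncertain and close to ‘NOW!’, as in the usage line, this prints `Not-known principle-based False`: the context sits where no amount of training will help.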

Which, given that whole-enterprise architecture must, by definition, always cover the whole of an enterprise’s context-space, in turn tells us that content-based training is not enough for enterprise-architecture.

Which is kinda awkward, since most current ‘EA’-training is content-based, for an arbitrarily-predefined type of context, and with little to no means to assess the context itself. Oops…

(One EA-group has recently reframed its entire ‘EA-education’ model around a Boy-Scout style system of content-based ‘badges’ – which to my mind is just plain daft… But easy and cheap to teach, of course, and easy to whip up ‘collect-them-all’ idiocies, too. Sigh…)

The catch is that content-based training assumes that the context stays the same, and will always match up with the expectations of theory. But the real-world doesn’t work that way: there are some things that are ‘tame’ enough to stay the same as we expect, but most real-world contexts contain a lot of ‘wild’ elements that keep changing on us in unpredictable ways. And that’s especially true as we get closer to real-time – things that look certain enough in theory often don’t work out how we expect in real-world practice:

The classic analogy is the difference between dogs and cats. We can train a dog: they’re relatively easy to tame. But we can’t train a cat: however much we might wish otherwise, it always remains somewhat wild. To get a cat to do what we want, we have to provide conditions under which it wants to do what we want. And to do that, we have to understand how a cat thinks, decides, acts, the nature of the cat – in other words, the context of cat.

So whilst the technical aspects of whole-EA are often reasonably-tame – dog-like, if you like – the human aspects of whole-EA are a lot more like that infamous metaphor of herding cats…

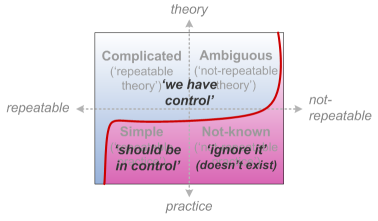

Just to make things worse, there’s a strong tendency in mainstream IT-centric ‘enterprise’-architecture to over-focus on the technical, and to dump everything not-IT into the ‘out of scope / Somebody Else’s Problem’ basket:

This is made worse again in extreme or ‘reflexive’ IT-centrism, in which it’s assumed or asserted that every possible problem has an IT-based ‘solution’, and that that ‘solution’ is the best possible option because it is IT-based:

This kind of skew, or disconnect from reality, is derived from a common yet deeply-held delusion that everything in the world can somehow be made predictable, safe, certain, tame. The reality is that it can’t – that there will always be something that can’t and won’t fit our expectations, our desire for the kind of spurious pseudo-certainty that misplaced IT-centrism would seek to provide. And hence, as whole-enterprise architects, we need to work with that fact – and not pretend that it doesn’t exist.

In short, for whole-enterprise architecture, there is no such thing as ‘out-of-scope’. And everything in an enterprise is also always Somebody’s Problem – so we need to know whose problem it is…

The result is that whilst content-based training for EA is undoubtedly useful, it’s a lot less useful than it might at first seem. For whole-enterprise architecture, we need much more focus on skills-based education:

What skills does a whole-enterprise architect need? The short answer is that we need some level of skill in everything within the scope of the context. The obvious catch is that we can’t be experts in everything: for most real-world contexts, learning everything that we’d need to know would take several lifetimes, and most of it would be out of date long before we’d got far anyway. We’re going to need a different way to do this…

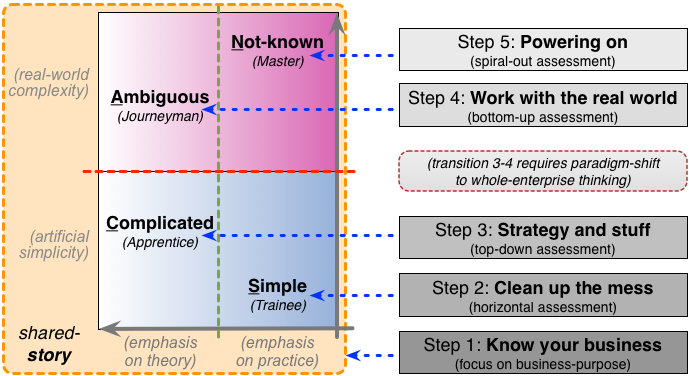

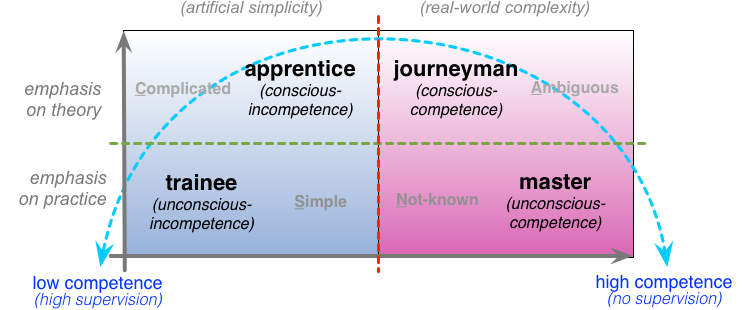

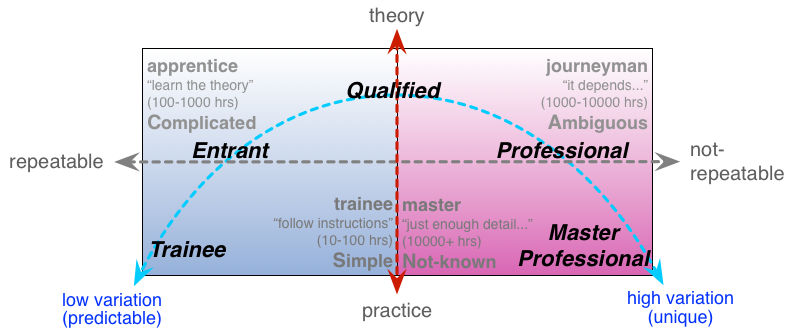

The way out is to understand that the specialism of a whole-enterprise architect is to be a generalist. To make sense of that, though, we need to map out skills not so much in terms of deep-specialism in a given domain of content, but more generally in terms of skill-level in any type of content. Classically, there are four distinct levels – trainee, apprentice, journeyman and master – that are distinguished by minimum numbers of hours to reach a typical minimum capability at that level of skill:

- trainee (10+ hours) – “just follow the instructions…”

- apprentice (100+ hours) – “learn the theory”

- journeyman (1000+ hours) – “it depends…”

- master (10000+ hours) – “just enough detail… nothing is certain…”
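Each level in that list sits roughly one order of magnitude of practice-hours above the previous one. A minimal Python sketch of that lookup follows. Treating the hour-counts as hard cut-offs is an assumption made purely for illustration, since the text presents them only as typical minimums, and the names `LEVELS` and `skill_level` are hypothetical.

```python
from bisect import bisect_right
from typing import Optional

# Typical minimum practice-hours per level, taken from the list above.
# Each step is roughly tenfold; these are orders of magnitude, not exam thresholds.
LEVELS = [
    (10, "trainee"),        # "just follow the instructions..."
    (100, "apprentice"),    # "learn the theory"
    (1_000, "journeyman"),  # "it depends..."
    (10_000, "master"),     # "just enough detail... nothing is certain..."
]


def skill_level(hours: float) -> Optional[str]:
    """Return the highest level whose typical minimum hours have been reached, if any."""
    thresholds = [minimum for minimum, _ in LEVELS]
    i = bisect_right(thresholds, hours)  # number of thresholds <= hours
    return LEVELS[i - 1][1] if i > 0 else None


if __name__ == "__main__":
    for h in (5, 40, 600, 3_000, 20_000):
        print(h, skill_level(h))
```

The point the sketch makes visible is the one in the text: a few days of course-time barely clears the first threshold, whereas the journeyman and master levels only come from years of real practice.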

These pairings in turn map quite closely to the SCAN domains:

Which in turn gives us a crossmap between the skill-levels and the decision-base and assessment-tactics for each of the SCAN domains:

And, via another crossmap, to the types of skills needed for each of the different steps in the Maturity-Model seen in the previous parts of this series:

But if whole-enterprise-architecture needs to focus more on skills than on content-based rote-learning, what skills do we most need to develop in ‘the trade’? What do we need as the core-skills for whole-enterprise architecture – particularly if we’re looking to test those skills through certification and the like?

In my experience, what’s needed most are meta-skills – the skills of how to make sense of a context, and how to learn new skills. For example, we’ll need skills on:

- systems-thinking and making sense of context in terms of whole-as-whole

- fractal sensemaking, such as with OODA, CLA and the like

- ability to work with layered-abstractions, metaframeworks, metamethods, metatools and more

- strong facilitation-skills, ‘translation’-skills and other ‘soft-skills’

- ability to do ‘deep-dive’ (usually with the assistance of others) into any part of the enterprise

That last item indicates that perhaps the most crucial skill for a specialist-generalist is the ability to pick up the basics of new skills fast – for example, to get to the ‘conscious-incompetence’ level of any new skill within days rather than weeks:

The key point for all of these skills is to be able to resolve the Enterprise-Architects’ Mantra:

- “I don’t know…” – but I know how to find out

- “It depends…” – and I know how to find out what it depends on

- “Just enough detail…” – and I know what level of detail will be needed

The fine detail is where the specialists come into the story, of course. But the role of the architect is to weave bridges between all of the different specialisms in a context – and if we’re to be able to build meaningful bridges between those domains, we need to have ‘Just Enough Skill’ in every aspect of the respective context.

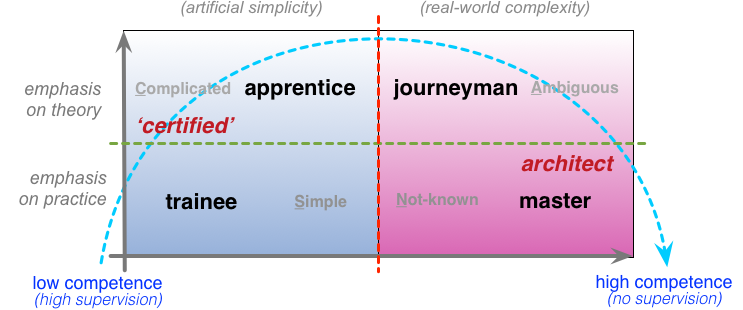

Which brings us to the vexed question of certification for enterprise-architects.

Conceptually speaking, most current so-called ‘training’ for enterprise-architects is just that: training. In other words, content-based rote-learning, way over on the far-left of the SCAN frame. In most cases I’ve seen, there’s no skill-component at all – hence nothing to help people use that rote-learning in dealing with the myriad of real-world uncertainties and ambiguities over on the right-hand side of the SCAN frame. In other words, only slightly better than useless, at best.

(At worst, a lot worse than useless… But that, perhaps, is another story…)

The great advantage of that kind of training is that it’s cheap to teach, and cheap to test, through automated multiple-choice tests and the like. Worse, trainers too often tend to teach to the test – not to the real-world needs for the skill. When we map it out onto the SCAN/skills crossmap, it becomes clear just how little in terms of skill the much-vaunted status of ‘Certified’ would typically mean:

One thing we discover fast in enterprise-architecture and elsewhere is that the typical end-product of a typical ‘Certified XYZ’ training is someone who’s wildly over-certain about their knowledge of the respective skill – with, yes, some grasp of the theory, but almost none of the real-world practice. In short, exactly what we don’t need – someone with just enough knowledge to get into deep trouble in the real-world, with nothing like enough knowledge to get out of it again. Not A Good Idea…

(They also tend to be arrogant and irritating in the extreme. I’ve had too many daft run-ins on LinkedIn with ‘TOGAF newbies’ telling me that obviously I know nothing about enterprise-architecture because I’m not quoting the TOGAF spec chapter-and-verse, and hence “seriously, old man, do your homework, you really ought to do a TOGAF course, you know…” The fact that I rewrote the darn TOGAF-manual from scratch to make it possible to use for whole-enterprise architecture, and that I’ve been practising enterprise-architecture pretty much since before they were born, won’t figure in that kind of assessment, of course. Sigh…)

And if that’s not insult enough, the ludicrous over-hype from some of the providers gives us ‘certification-schemes’ that are little better than certification-scams – “a tax on employment”, where we can’t even get a job without some largely-meaningless so-called ‘certification’. There’s an ongoing pretence or delusion – particularly amongst lazy tick-the-box recruiters – that ‘Certified XYZ’ means a lot more than it actually does: as we go forward on this, it’s absolutely essential that we don’t let recruiters pretend that a shallow rote-learning five-day course is more important than twenty years of real-world experience!

What we need is a clear pathway to get people past that delusory hump of ‘Certified XYZ’, that’s also consistent across all the skill-types and content-areas. One recommendation that might help to get us out of that mess is to split certification into five distinct levels:

- Foundation: trainee – basic knowledge of terminology (1-2 day content-oriented course, test by multiple-choice); competent to understand and act on instructions for basic tasks only

- Entrant (equivalent of ‘Certified’): first-level apprentice – basic knowledge of practice (4-10 day content-oriented course, test by multiple-choice); competent for supervised practice only

- Qualified (BA/BSc equivalent): full apprentice – full theory, some practice (1-2 years practice-oriented course and/or monitored practice, test by combination of exam-paper [theory] and basic peer-review [practice]); competent for mentor-reviewed practice

- Professional (MA/MSc equivalent): full journeyman – full theory and real-world practice (2-5 years practice, test by full peer-review); competent to do unsupervised practice in increasingly-complex contexts

- Master Professional (PhD equivalent): consultant-level (5 years+, demonstrate by ‘masterpiece’ that contributes to the profession, reviewed by other ‘master’-level practitioners); competent for fully-independent practice

We can map these onto the SCAN skills-crossmap as follows:

Some certifications for the higher levels do already exist: the Open Group’s Open-CA scheme, for example. But most of them are deeply IT-oriented, or even openly IT-centric, which isn’t much use to us; and none of them as yet address the full complexities of whole-enterprise architecture. In that sense, we still have to go our own way on much of this question of training, education, assessment and certification.

Application

From the above, we can derive suggested text for the ‘Training and certification’ section of a possible future standard for whole-enterprise architecture. Some of the graphics above might also be included along with the text.

— Purpose of training and education: Whole-enterprise architecture is a skills-based discipline. However, many of the skills required for whole-enterprise architecture rarely feature at present in any mainstream curriculum for ‘enterprise’-architecture, business, management, IT, strategy or even academic disciplines such as science or humanities. Whilst some people do show natural aptitude for generalism, deep-abstracts and bridging between domains, it is likely that training and education will be needed even in those cases – and even more so for most others. Retraining may be particularly important for those with previous experience in current mainstream ‘enterprise’-architecture, as a ‘detox’ against its deeply-ingrained incipient IT-centrism.

— Nature of training and education: The primary focus will be on working with any given context as a whole – both the ‘order’ and ‘unorder’ of the context-space, to use SCAN terminology. A core requirement will be to maintain explicit balance between content and context. A key theme will be that “everywhere and nowhere is ‘the centre of the architecture’, all at the same time”, bridging across all domains in the context, from big-picture to fine-detail, and from strategy to design, implementation, operation, analytics, decommission, re-strategy and redesign.

— Nature of required skills and experience to be developed: Whole-enterprise architecture requires strong generalist ability, plus an ability to deep-dive anywhere into the architecture and its context – the latter usually working in conjunction with specialists in the respective domain. Core skills would include:

- whole-of-context sensemaking, systems-thinking and context-space mapping

- bidirectional abstraction and instantiation via metaframeworks, metamethods, metatools etc

- facilitation, soft-skills and presentation-skills

- skills in ‘translation’ between domains, and ‘learning to learn’

The latter will be essential in order to develop and support a (very) broad portfolio of content-based skills, though often only at sufficient skill-level to engage in meaningful conversation and exploration with specialists in the respective domains.

— Delivery of training and education: Delivery would take at least three distinct forms:

- classroom-based training or online/text-based self-training, working from predefined texts – suitable primarily for content-oriented elements

- on-the-job mentoring – typically required to develop skills in sensemaking and ‘It depends…’ context-specific architectures

- peer-based community support – typically required for long-term development of skills and professional mastery

— Identification of skill-levels and needs for further training or education:

- Foundation: trainee, or non-architecture specialist, in need of basic knowledge of terminology, in order to engage in actions or discussions on whole-enterprise architecture – deliver via classroom-based training or self-training; test and verify via content-based exam

- Entrant: first-level apprentice, learning to engage in architecture-practice – deliver via classroom-based training or self-training, preferably also with some mentoring; test and verify with content-based exam and open-ended test-case

- Qualified: full apprentice, to be competent for mentor-supervised practice – deliver via mentored guidance supported by classroom- or online-based theory units; test via theory-exam and mentored practice

- Professional: full journeyman, to be competent for unsupervised practice – deliver via mentor-guidance and peer-community engagement, supported by selected ‘professional development unit’ content-elements; test and verify via portfolio of test-cases

- Master Professional: transition to consultant-level – self-deliver as ‘masterpiece’, and verify via review by other ‘master’-level practitioners

— Engagement of recruiters and the broader business-community: Extensive engagement will be needed with recruiters, and with the broader business-community, to ensure that the role, skills, competencies and skill-levels of whole-enterprise architects and whole-enterprise architecture are properly understood, and that recruiting and hiring or contract processes properly reflect this understanding. This is essential to prevent a repeat of the problems that arose for mainstream ‘enterprise’-architecture and related disciplines, where Entrant-level ‘certifications’ were frequently misinterpreted as representing a higher level of priority and competence than Qualified, Professional or even Master Professional skill-levels.

The above provides a quick overview of what’s needed for training and education for whole-enterprise architecture, and how we can test and validate competence and skill at varying skill-levels. As before, I do know that it’s nowhere near complete-enough for a formal standards-proposal, but it should be sufficient to act as a strawman for further discussion, exploration and critique.

This completes the main part of this series of posts, though there’ll be a follow-on post shortly that provides a worked-example.

Before we go there, though, any questions or comments on the series to date?

With you on training versus skills.

I’m convinced that the only way that we can learn something is by doing it. So, the worth of a training course can generally be measured by how much ‘doing’ there is.

Take for instance certified ArchiMate training. It teaches you to reproduce the standard. It doesn’t teach you to model *well*. Modelling well (skill), in the end, comes with a lot of practice. Certification is mostly useless there.

It is not for nothing that serious training (school, university) takes years and includes a lot of ‘doing’. It is the ‘doing’ that results in ‘skill’. Sometimes the skill is something quite different from the subject. For example, in some parts of some school subjects, the content of what you learn is less important than the way that learning it trains your brain. The skill you learn is ‘remembering and reproducing’, something that comes in handy when you later study something quite different.

Awesome blog…