Tools and metatools

Meta-this-that-and-the-other – metamodels, metaframeworks, metamethodology, metatheory, even metatools? What is all this stuff about ‘meta-’?

And what is ‘meta-’, anyway?

One answer is that it’s about a kind of recursion that we often need in our work, in which something is applied to itself, or creates a context-specific variant of itself. Hence, for example, a UML-model is a model created in terms of the UML (Unified Modelling Language) metamodel which itself is based on the MOF (Meta-Object Facility) metametamodel. In that sense, a metamodel is a model-for-models, a metaframework is a framework-for-frameworks, metatheory is theory-on-theory, and so on.

Another answer would be to quote from the post ‘On meta-methodology’:

The core concept [here] is recursive meta-methodology. For example:

- a method in solution-space acts on the problem in problem-space

- a methodology selects an appropriate method

- a meta-methodology selects an appropriate methodology

- a meta-meta-methodology selects an appropriate meta-methodology

…and so on. A methodology is a path within solution-space; a meta-methodology is a path in another layer of solution-space; in effect, the layers may be nested indefinitely, but must ultimately all resolve to a set of methods that address the actual problem in problem-space.
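That layering can be sketched in a few lines of Python. This is only an illustration, not anything from the quoted post: the names `Method`, `Selector` and `resolve`, and the toy selection rules, are assumptions made up for the example. Each layer does nothing except choose the layer below it, and the chain has to end in a method that acts on the problem.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union


@dataclass
class Method:
    """Acts directly on the problem in problem-space."""
    name: str

    def apply(self, problem: str) -> str:
        return f"{self.name} applied to {problem}"


@dataclass
class Selector:
    """A methodology, meta-methodology and so on: it chooses the layer below."""
    name: str
    choose: Callable[[str], Union["Method", "Selector"]]


def resolve(layer: Union[Method, Selector], problem: str) -> Tuple[List[str], str]:
    """Walk down the layers until a method is reached, then apply it."""
    path = []
    while isinstance(layer, Selector):
        path.append(layer.name)
        layer = layer.choose(problem)
    path.append(layer.name)
    return path, layer.apply(problem)


# Hypothetical layers: the selection rules are placeholders, nothing more.
workshop = Method("workshop")
survey = Method("survey")
methodology = Selector("methodology", lambda p: workshop if "team" in p else survey)
meta_methodology = Selector("meta-methodology", lambda p: methodology)

path, outcome = resolve(meta_methodology, "team conflict")
print(path)     # ['meta-methodology', 'methodology', 'workshop']
print(outcome)  # workshop applied to team conflict
```

However many `Selector` layers are stacked on top, `resolve` only returns once it reaches a `Method`, which is the point of the quote: the nesting can go as deep as we like, but it must resolve to something that addresses the actual problem.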

In his brilliant ‘Frankenframeworks’ post, Chris Lockhart explained why we need metaframeworks:

We need to do more of more value and do it more quickly. Put aside the endless soul-searching over frameworks. Pick one. Pick ten. Pick two and smoosh them together. Keep them and reuse them. But above all, use what works for the problem you have regardless of what the experts say.

I expanded on the ‘smooshing together’ part in my post ‘On metaframeworks in enterprise-architecture’:

Every metaframework is just another framework, in exactly the same way that a metamodel is just another type of model. It’s not the framework itself that is ‘meta-’: it’s how we use the framework that makes it ‘meta-’.

Which is what we’re actually exploring here: not frameworks as such, but using frameworks with ‘meta-ness’.

Hence, to metatools. Tools and frameworks are much the same: in essence, ‘tool’ is a somewhat less fancy way of saying ‘framework’, for something that’s usually a bit simpler and, yes, less fancy than a full-blown framework, but otherwise much the same idea. Both provide a pattern and/or a way of working on a context: hence a metaframework or, simpler, a metatool, provides a pattern and way of working on a context that helps create a more context-specific pattern and way of working on that context.

(Yeah, I know, that’s already somewhat into ‘My brain ‘urts…’ territory – but it really is fundamental to how we work in enterprise-architectures and the like, so let’s just keep going with it for a while.)

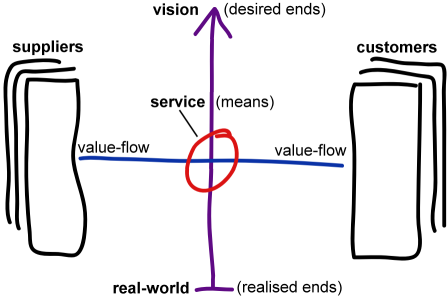

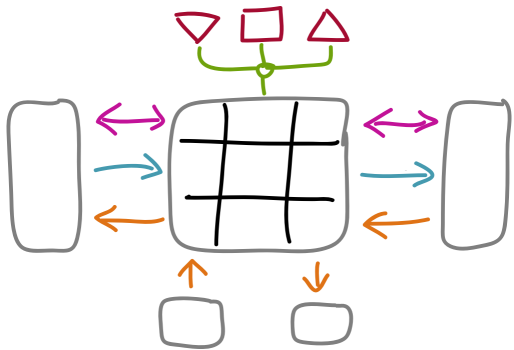

To illustrate Chris Lockhart’s idea of ‘smooshing [frameworks] together’, I’ll give a real example from my own work. First, we start with the idea that ‘everything is or represents or implies a service‘, and, from there, we note that there’s a useful pattern in that each service kind of sits at an intersection between purpose – which we could describe as a ‘vertical’ axis – and value-flow – which we could describe as a ‘horizontal’ axis. Or, visually, something like this:

It’s useful in itself, as a quick scrawl of a model – or, more accurately, a metamodel as a template for other models – to remind us that when looking at services, we need to consider how value-flow connects with overall purpose, and vice-versa.

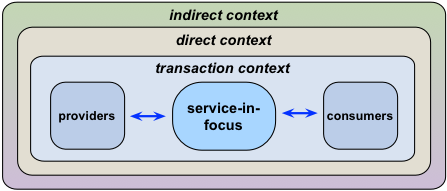

Then here’s another useful idea or pattern, again for looking at services, that describes that broader-context within which a service operates. From experience, we say that the context we need to explore for any given service is likely to be at least two or three steps broader than the immediate transactional-context of that service. Or, in visual form again, a pattern that’s something like this:

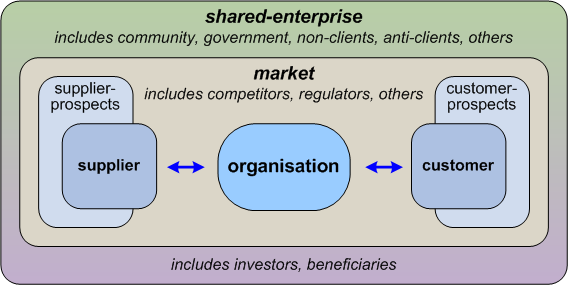

Or, in somewhat more context-specific form, like this:

Which, again, is useful in itself as a quick scrawl of a metamodel or template, to guide discussions about service-context.

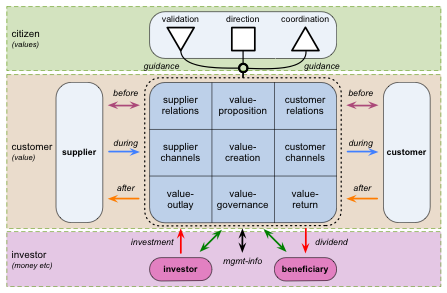

Yet when we ‘smoosh’ those two metamodels together, we can use them to create yet another metamodel, the core-diagram for the Enterprise Canvas framework – which we could use as a more formal template or checklist:

Or as the basis for a quick scrawl on a whiteboard:

Which we use to explore the interactions of services across their broader context – including the ‘non-transaction’ service-relationships needed to keep that service on-track to its guiding values.

Another metatool approach is to apply ‘meta-ness’ to an existing tool, to make it more versatile, powerful and multidimensional. For example, let’s take good old SWOT – the simple two-by-two matrix of Strengths, Weaknesses, Opportunities and Threats:

We could then make it a bit more ‘meta-’ by noting that, recursively, there are strengths, opportunities and threats implied in each of our weaknesses, or weaknesses, strengths and threats implied in each of our opportunities.

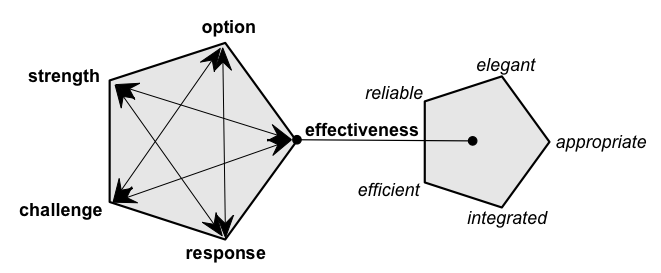

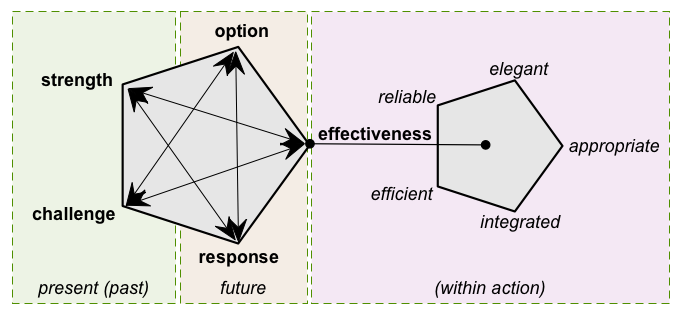

And then, as I described in my post ‘Checking the SCORE’, we can usefully extend this still further by reframing SWOT into a form that more explicitly supports the recursive and fractal ‘meta-ness’ of real-world strategy and tactics, and the real need to focus on their effectiveness. Hence the SCORE framework:

And, for some purposes, we could again usefully extend that frame still further by ‘smooshing it together’ with an additional dimension of time:

The part of this ‘working with meta-ness’ that does tend to throw some people is that, at first, there doesn’t seem to be any fixed or predefined ‘the method’ as to how to do it. Yet it turns out that the same is just as true in science, as Paul Feyerabend famously explained in his now-classic book ‘Against Method’:

Without a fixed ideology, or the introduction of religious tendencies, the only approach which does not inhibit progress (using whichever definition one sees fit) is “anything goes”: “‘anything goes’ is not a ‘principle’ I hold… but the terrified exclamation of a rationalist who takes a closer look at history.”

And, once again, from Chris Lockhart’s ‘Frankenframeworks’ post:

Frameworks should be easy to interpret, fast to deploy, amenable to adaptation. They can be picked up and used or tossed aside in favor of something that is a better fit. The framework shouldn’t fall apart if you decide to remove part of it because it doesn’t suit the problem at hand. The framework can be simple or sophisticated, but either way it should be extensible. Multiple frameworks can be put together or merged to become the methodology for a given situation. These frankenframeworks can be reused. I myself have dozens of them that I reuse or partially reuse on every project I’m on. Keeping this mini-library of frameworks enables me to be agile and flexible.

In reality, though, it’s not as simplistic as “anything goes”: there is a real method to this, a real discipline. But again even the method itself is kind of ‘meta-’, because it must somehow find a way to resolve Gooch’s Paradox, that things not only have to be seen to be believed, but also have to be believed to be seen. Whatever model we choose acts as a filter on belief, on what it allows us to see, what it conceals from our view: so as Chris implies above, we need to bounce back and forth between model and context, searching always for “a better fit” that gives new insights into the context.

The ‘meta-ness’ comes in the feedback-loop that we use here, in which, to gain new insights, we explore the context-space as-if the current ‘smooshed-together’ model is ‘the truth’, whilst also being aware that ultimately it isn’t ‘the truth’ at all – it’s just a view, a filter on our view of the context. We then watch carefully for hints, suggestions, implications that we might also need to look at the context-space in another way – hints that also often suggest what such other useful views might be.

We can only do this, though, because we know and accept that the frame or tool that we’re using is only a tool, one tool amongst any number of them that “can be picked up and used or tossed aside in favor of something that is a better fit”. It’s much harder to do this if the tool is kind of static, designed to be used in only one way – such as with SWOT, for example. (Or, worse, the tool purports to be ‘The Truth’ – such as A Certain Framework that insists that its concept of ‘complexity’ is ‘The Only Answer’, or the obsessively IT-centric frameworks that predominate in so much of current enterprise-architecture, or those many misuses of scenario-modelling in which ‘The Answer’ is always in the upper-right quadrant of the frame. Sigh…)

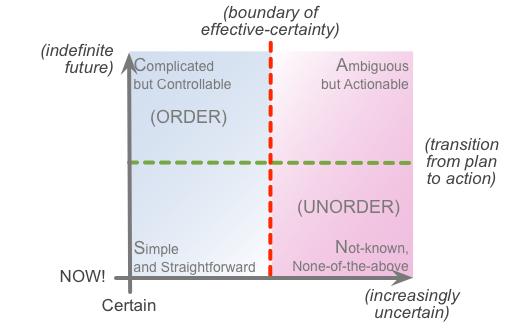

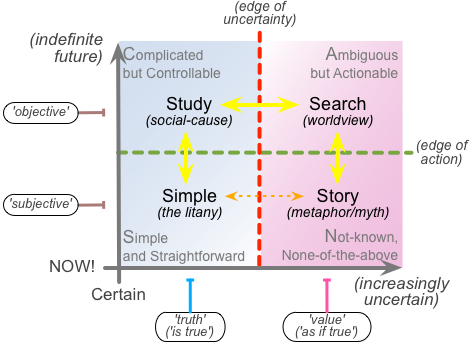

Let’s use SCAN as a worked-example. At first glance, it’s just a two-by-two matrix, a ‘simplistic categorisation tool’:

Which, yes, it is. And it’s often useful even in just that basic form – or even less, sometimes, as in the preparation-for-surgery example described in the previous post, which doesn’t even bother to use the divider-boundaries in that frame.

But let’s apply a bit of ‘meta-ness’ to this: we use the tool to explore and extend itself – and then see what insights arise. For example, as discussed in a ‘Cryptic conversation’ some years back, we could apply the domains recursively on the boundaries between those domains:

— A Simple view accepts the boundaries exactly as they are, as depicted on the diagram above.

— A Complicated view takes note of the fact that those boundaries are arbitrary.

The boundary on the vertical-axis represents the transition between theory and practice, or plan and action – but we choose where that is, what point in time relative to the ‘NOW!’ that boundary represents. What happens when we rethink where that transition would take place – such as in Agile development, for example? What happens when we allow there to be multiple transitions – not just one boundary, but any number of boundaries that we might need?

And the boundary on the horizontal-axis represents what I sometimes term ‘the Inverse-Einstein test’: on the left-side of that boundary, doing the same thing should lead to the same result, whereas on the right-side of the boundary, doing the same thing may or will lead to different results. For each person, or each context, that boundary may be different: hence, for example, what will happen when the tolerance for uncertainty is changed? How can we improve our system’s tolerance for uncertainty – metaphorically moving that boundary towards the right-side of the frame? What are the practical limits of uncertainty that our automated systems can handle? – and what needs to take over when what’s happening in that context moves to the far side of that system’s boundary?

These are all useful, practical questions that ‘freeing up the boundaries’ would allow us to ask.

— An Ambiguous view acknowledges that the boundaries not only can move around, but are always somewhat porous. We see this particularly in the horizontal direction across the context: research-and-development will bounce back-and-forth between Complicated and Ambiguous, for example, whilst many types of real-time action – for example, anything in business that’s customer-facing – will bounce back-and-forth between Simple and Not-known, with work-instructions for the Simple, principles and values to give guidance when working with the Not-known, and action-checklists to bridge between them.

— A Not-known view extends even further that point about ‘all boundaries are arbitrary’, by reminding us that ultimately the boundaries don’t even exist as such: they’re both ‘there’ and ‘not-there’ all at the same time. The boundaries are often useful, highlighting certain themes that are often important in assessing a context – but with the emphasis on ‘useful’, rather than some spurious ‘The Truth’. If we don’t need to use them, then we don’t use them: simple as that, really.
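The ‘movable boundaries’ of the Complicated view above can be sketched as a small Python example. It is a rough illustration under stated assumptions: the class name `ScanFrame`, the numeric scales and the default boundary values are all invented here, and the layout of domains follows the pairings used in this post (Simple and Not-known at the point of action, Complicated and Ambiguous with time to consider; Simple and Complicated on the ‘same result’ side of the Inverse-Einstein test).

```python
from dataclasses import dataclass


@dataclass
class ScanFrame:
    # Both boundaries are choices, not facts: move them to suit the context.
    # Units and default values are assumptions for this sketch.
    time_boundary: float = 1.0         # time before action where 'plan' becomes 'action'
    uncertainty_boundary: float = 0.5  # tolerance for uncertainty, on a 0..1 scale

    def domain(self, time_before_action: float, uncertainty: float) -> str:
        considered = time_before_action > self.time_boundary
        repeatable = uncertainty <= self.uncertainty_boundary
        if repeatable:
            return "Complicated" if considered else "Simple"
        return "Ambiguous" if considered else "Not-known"


frame = ScanFrame()
print(frame.domain(time_before_action=0.2, uncertainty=0.7))  # Not-known

# Improve the system's tolerance for uncertainty: the boundary moves right,
# and the same situation now falls on the 'repeatable' side.
tolerant = ScanFrame(uncertainty_boundary=0.8)
print(tolerant.domain(time_before_action=0.2, uncertainty=0.7))  # Simple
```

Note that this only captures the Simple and Complicated views of the boundaries. Hard thresholds like these cannot represent the porous boundaries of the Ambiguous view, nor the ‘there and not-there’ boundaries of the Not-known view.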

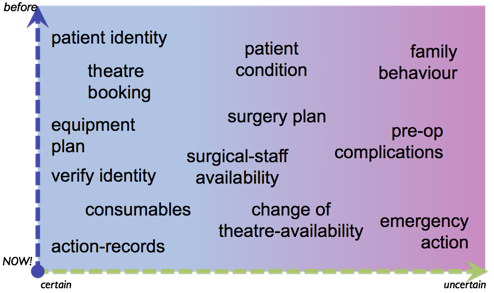

Next, we also remember that the domains themselves are recursive. Hence in a hospital, for example, we might want to explore the processes of preparation for surgery, and map out each of the elements in a SCAN-based context-map:

Yet we could also do a SCAN for each of these individual elements: each of these elements implicitly has its own Simple, Complicated, Ambiguous and Not-known, its own boundary-of-effective-certainty, its own transition(s) from plan to action – and for some purposes, it might well be useful to map any or all of them each on their own SCAN context-map. That’s one form of metatool recursion we’re likely to use quite a lot.
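As a data structure, that kind of recursion might look something like the following Python sketch. The class names `Element` and `ScanMap` are assumptions for the example, and the surgery elements and their placements are invented placeholders, not the contents of the actual context-map.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

DOMAINS = ("Simple", "Complicated", "Ambiguous", "Not-known")


@dataclass
class Element:
    name: str
    # An element may carry its own SCAN map, and so on downwards.
    own_map: Optional["ScanMap"] = None


@dataclass
class ScanMap:
    context: str
    domains: Dict[str, List[Element]] = field(
        default_factory=lambda: {d: [] for d in DOMAINS}
    )

    def place(self, domain: str, element: Element) -> Element:
        self.domains[domain].append(element)
        return element

    def walk(self, depth: int = 0):
        """Yield (depth, context, domain, element name) through every nested map."""
        for domain, elements in self.domains.items():
            for el in elements:
                yield depth, self.context, domain, el.name
                if el.own_map is not None:
                    yield from el.own_map.walk(depth + 1)


# Placeholder elements, for illustration only.
surgery = ScanMap("preparation for surgery")
checklist = surgery.place("Simple", Element("pre-op checklist"))
surgery.place("Not-known", Element("patient's reaction on the day"))

# The same frame, applied one level down to a single element.
checklist.own_map = ScanMap("pre-op checklist")
checklist.own_map.place("Ambiguous", Element("conflicting allergy records"))

for row in surgery.walk():
    print(row)
```

The nested map uses exactly the same four domains as its parent, which is what makes it the same tool applied again rather than a new tool.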

And then there are crossmaps – a key category of Chris Lockhart’s ‘smooshing frameworks together’. With SCAN we already have quite a wide variety of documented crossmaps, such as on decision-making and ‘decision-dartboards’, on ‘manager-time’ versus ‘maker-time’, on skills and limits of automation:

Then there are crossmaps on problem-solving, crossmaps on themes of knowledge-management or on the process of scientific-research, or this crossmap with the futures-technique Causal Layered Analysis:

And yes, there are plenty more where those came from… 🙂 Being created all the time, in fact, by different people, for different purposes. Yet the crucial point to note is that whilst all of them are different, as individual tools, they’re also all the same, as instances or adaptations of the same metatool. Which means that we can do crossmaps of the crossmaps, and then crossmaps with those crossmaps, and so on, almost ad infinitum, yet always ultimately linked back to the same consistent base-frame. That should give some idea of just how powerful these metatools can be – even from what may be (as in this case, with SCAN) a very simple base.
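One way to picture why crossmaps can themselves be crossmapped is to treat each one as a set of labels keyed by the same four domains. The Python sketch below assumes exactly that, which is a simplification: the function name `smoosh` and the placeholder entries are invented for the example and do not reproduce any of the documented crossmaps.

```python
from typing import Dict

DOMAINS = ("Simple", "Complicated", "Ambiguous", "Not-known")


def smoosh(crossmaps: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, str]]:
    """Combine named crossmaps into one view keyed by the shared base-frame."""
    for name, crossmap in crossmaps.items():
        missing = set(DOMAINS) - set(crossmap)
        if missing:
            raise ValueError(f"{name} does not cover: {sorted(missing)}")
    return {
        domain: {name: crossmap[domain] for name, crossmap in crossmaps.items()}
        for domain in DOMAINS
    }


# Placeholder crossmaps: the entries stand in for real content.
first = {d: f"first-theme/{d}" for d in DOMAINS}
second = {d: f"second-theme/{d}" for d in DOMAINS}

combined = smoosh({"first": first, "second": second})
print(combined["Ambiguous"])
# {'first': 'first-theme/Ambiguous', 'second': 'second-theme/Ambiguous'}
```

Because the result is still keyed by the same domains, it can be combined again with further crossmaps in the same way, always linked back to the one base-frame.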

As a final example, let’s take this one step further. One of the more valuable SCAN crossmaps is a categorisation of tactics for sensemaking and decision-making, crossmapped with the respective SCAN domains to which they most usefully apply:

That crossmap is useful in itself: it gives us clear guidance on tactics that work well and don’t work well with elements in a context that map to the respective domains. Yet following the same logic, we can place the crossmap itself as the context for review, and apply the same crossmap recursively – as ‘a metatool applied to itself’ – and see what insights arise:

— Regulation: Use the assumptions behind SCAN – ‘time available before commitment to action’, and ‘certainty versus uncertainty’ (or various of its analogues, such as repeatability versus non-repeatability, or sameness versus uniqueness) – as ‘rules’ to assess, in a bootstrap-like manner, the validity of the assumptions themselves.

— Rotation: Use the domains and boundaries as a checklist to provide views on the context of SCAN itself. (For example, what aspects of SCAN are best used within real-time action versus for ‘considered’ analysis and experimentation away from the point of action? – in other words, the distinctions between the Simple / Not-known and Complicated / Ambiguous pairings respectively.) How do we map aspects of the context in terms of those views? How do we switch between views?

— Reciprocation: Using the same checklist, what are the flows and balances across the context, as indicated by the SCAN frame? (For example, we might note that SCAN explicitly includes everything within a context as a theme for assessment: there are no arbitrary exclusions.) How does SCAN itself balance out?

— Resonance: Using the same checklists and the views of flows and balances, where are there feedback-loops, damping, feedback-delays and the like? (For example, what would happen if we place too much emphasis on one domain alone? – such as the Ambiguous domain, which some would view as an exclusive analogue for ‘complexity’?)

— Recursion: Using the same checklists, what patterns can we identify within the context? – and at what levels of abstraction? In what ways do they repeat and/or recurse within or between levels of abstraction? In what ways do those patterns not repeat exactly – true-fractal, rather than simple-recursive? What methods would be needed with SCAN to ensure that usage does not revert solely to a Simple checklist, but can also accept and support usages that are Complicated, Ambiguous and Not-known?

— Reflexion: Using the same checklists, methods and patterns, in what ways can the whole be seen in any part? Working fractally, what are the samenesses that we can see within usages across each different context? In what ways are they not the same? – how can we reduce the risk that SCAN itself may “become restrictive and self-disqualifying when attempting to ‘translate’ it to practicalities” (to quote Paul Jansen)?

— Reframe: Picking another framework that can likewise be used as a base-frame for a context-space map for a whole context (PDCA, OODA, Five Elements, Galbraith Star, Spiral Dynamics, maybe even a more simplistic frame such as Cynefin), what ideas and insights arise from ‘smooshing it together’ with SCAN in and as a crossmap? How would you apply the outcomes of that crossmap in your usage of SCAN, or, for that matter, in your usage of the other framework?

— Rich-randomness: Whilst working with and on SCAN, what ideas and insights arise via serendipity from the work-context itself? In what ways can you usefully incorporate those insights into your own usage of SCAN?

Apply all of this recursively, fractally, such that the frame is used to review itself, to create whatever richness of views that we need.

Now apply all of the above to your own favourite framework: what ideas and insights arise as you do this? How would that change your practice with that framework? How easy – or not – is it to use that favourite framework as a metatool to be ‘smooshed-together’ with others? And what happens to your perception and valuing of it as a tool for your work, in that shift in perspective from tool to metatool?

That’d be more than enough to play with for now, I guess? 🙂

For more on metatools – how they work, how to assemble them and ‘smoosh them together’ as useful tools – see the series of posts on ‘Metaframeworks in practice’:

- ‘A matter of meta’

- ‘On metaframeworks in enterprise-architecture’

- ‘Metaframeworks in practice: Introduction’

- ‘Metaframeworks in practice, Part 1: Extended-Zachman’

- ‘Metaframeworks in practice, Part 2: Iterative-TOGAF’

- ‘Metaframeworks in practice, Part 3: Five Elements’

- ‘Metaframeworks in practice, Part 4: Context-space mapping and SCAN’

- ‘Metaframeworks in practice, Part 5: Enterprise Canvas’

- ‘Models and reasoning-processes in enterprise-architecture’

And also, for more on how the recursion works in a metatool such as SCAN:

Thanks for reading this far, anyway, and hope it’s of use to someone: any comments and suggestions, perhaps?
