Tools for change: Back to the basics

Keep it simple. That’s always the challenge.

With the themes we’re working on in that ‘bucket-list’ of tools for change, it’s all too easy to go running off down the rabbit-hole, making things more complicated and complex than they need to be.

What’s helped a lot is to keep coming back to the basics – back to a simple set of principles that can help to guide all of our sensemaking, decision-making, action and review.

Which, recursively, are also what the tools themselves are all about.

Which, in turn, gives us not only a set of guidelines to develop the tools, but also a means to test their validity. Kinda running the rabbit-hole backwards, if you like.

Most of these guidelines have appeared as tag-lines in various articles over the years in this weblog, and I’ll link back to those articles where/if I can find the respective references. But in any case, here they are:

— Things work better when they work together, on purpose

The aim is effectiveness, over the medium- to longer-term – not just short-term efficiency, which is still the most common (and most-often destructive) focus.

And we can always do it better – hence an inherent need to support continuous learning in every context.

- Post: ‘Enterprise effectiveness’

- Post: ‘A tagline for enterprise effectiveness’

- Slidedeck: ‘How to build continuous-learning into architecture-practice’

— Any context, any scope, any scale

One key part of getting things to work better, together, on purpose, is that we need to be consistent in how we work across domains, scopes and scales. Unfortunately, that’s exactly what does not happen with most current approaches, which are built around domain-specific specialism, with nothing to link all of the different specialisms and perspectives together. The result is, all too often, a fragmented mess – ‘dotting the joins’ rather than helping us join the dots.

To make things work together, we need to ensure that things can work together – tools, methods and more, consistently, across every context, scope and scale.

- Post: ‘Dotting the joins (the JEA version)’

- Post: ‘Toolsets, pinball and undotting the joins’

- Post: ‘Selling EA: What and how’

- Post: ‘Towards a whole-enterprise architecture standard – 3: Method’

- Post: ‘Towards a whole-enterprise architecture standard – 4: Content’

- Slidedeck: ‘Disintegrated EA?’

— Find the right question

The usual assumption is that what we need most is ‘the right answer’. But in contexts of change, where any ‘the answer’ is always context-specific and context-dependent, what often matters more is that we can find the right question. To quote Eliyahu M. Goldratt:

An expert is not someone who gives you the answer, it is someone who asks you the right question.

The common experience is that the answers we need will arise all but automatically once we find the right way to frame the question. We therefore need our tools to provide explicit support for ‘finding the right question’. And, especially, to support us in being our own ‘the expert’, wherever – as is so often the case in contexts of change – there could be no other expert but ourselves.

- Post: ‘The bucket-list: Changing direction’

- Slidedeck: ‘ICS/IASA: How I learned to stop worrying…’

— Work with uncertainty

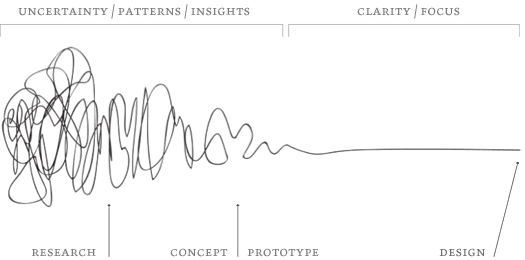

Every enquiry within a context of change is inherently uncertain – by definition, when we start out we do not know (all of) ‘the answer’ that we should arrive at by the end. We’re likely to bounce around in uncertainty for quite a while, like a pinball, before any kind of certainty arises at all. This pattern is perhaps best summarised by Damien Newman’s now-classic ‘the Squiggle’:

Most of our existing tools – especially in enterprise-architecture – seem designed solely to work with ‘the easy bit’ right at the far end of the Squiggle, where all we need to do is tidy up the last few loose-ends of uncertainty. By contrast, we need our tools to work with the entirety of the pattern – from often-chaotic beginnings to near-certain endings – and to work consistently, with full connection, across every part of that process.

- Post: ‘Pinball-wizard’

- Post: ‘Catching ideas in flight’

- Post: ‘Toolsets for associative modelling’

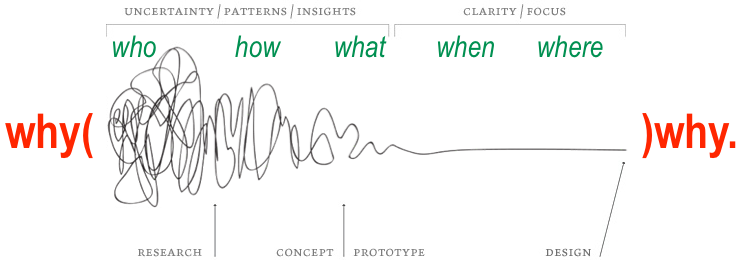

— Each question is a quest

The phrase ‘Each question is a quest’ should be taken literally – to ask a question is ‘to go questing’ out into the unknown, with all the fears and more that that implies. To continue with the metaphor of ‘the Squiggle’, as above, we move from a ‘Why?’ that begins the quest, to a ‘that’s Why.’ – an answer, a ‘Because.’ – that marks the quest’s end:

Our tools need to support exploration of each question as a quest – to include and acknowledge the process of ‘questing’, as much as ‘the answer’ that is arrived at via that quest.

- Post: ‘Two kinds of Why’

- Post: ‘Why and Because’

- Post: ‘Why before Who’

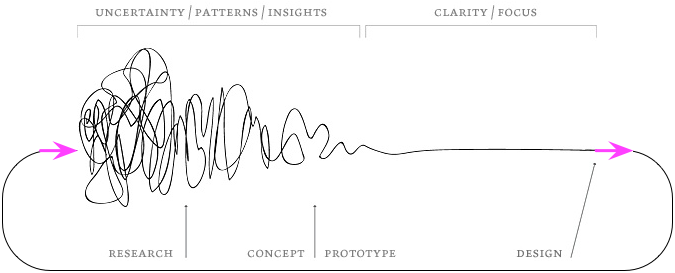

— It’s iterative, fractal, recursive, re-entrant

…although we probably shouldn’t use any of those terms when explaining this to anyone else!

The point is that the Squiggle-pattern is a loop, not a one-shot process. As each quest ends, another begins:

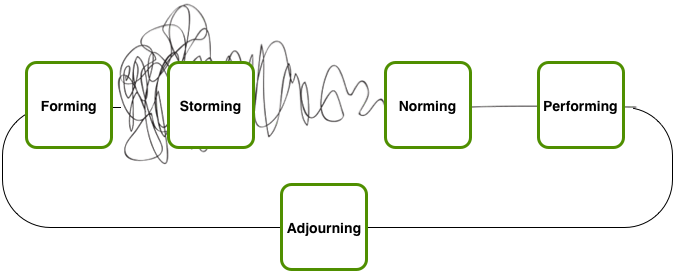

The nominal end of each Squiggle-pattern is not only where we loop back to start on the next quest, or part of a larger quest, but also where we gain an opportunity to reflect on what we’ve achieved, and what we can learn. In Bruce Tuckman’s classic ‘Group Dynamics’ sequence, this is the ‘Adjourning’ phase of the cycle:

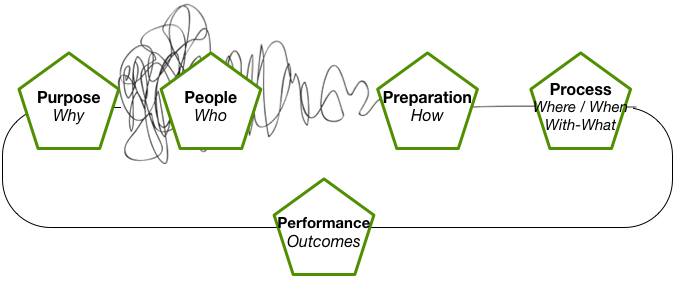

…whilst in the Five Elements model it’s the ‘Performance’ or ‘Outcomes’ phase:

Our tools need to be able to use and support this same pattern, and every part of this pattern, for every type of context, at every scope and scale, with each iteration linked appropriately to those that precede and/or follow it, and/or are enclosed within it or within which it is enclosed.

- Post: ‘Towards a whole-enterprise architecture standard – 3: Method’

- Post: ‘Sense, make-sense, decide, act’

- Slidedeck: ‘How to build continuous-learning into architecture practice’

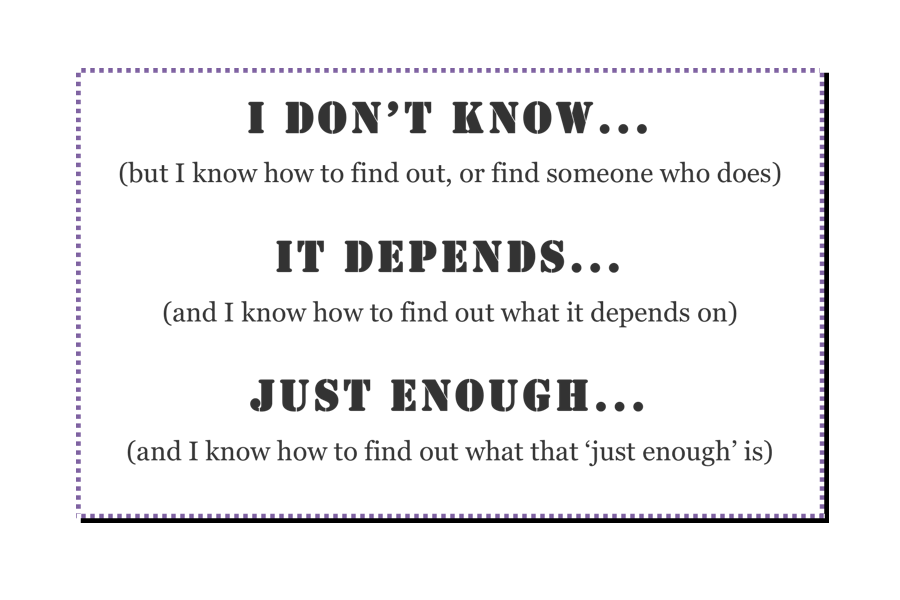

— A guiding mantra: “I don’t know… It depends… Just enough…”

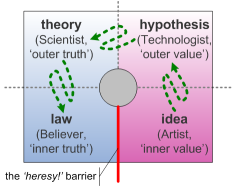

This is one that we can visualise as follows:

“I don’t know”: We need to be able to say this, in order to face and work with inherent-uncertainty – and our tools need to support us in saying this.

“It depends”: Our tools need to be able to help us in exploring dependencies in any type of context, any scope, any scale, any level of fractality.

“Just enough”: Our tools need to support us in ‘just enough detail’ to enable appropriate decision-making without drowning in ‘analysis-paralysis’; ‘just enough process’ to guide exploration without getting in the way; ‘just enough certainty’ to enable confidence within action; and many other forms of ‘just enough’.

- Post: ‘An enterprise-architects’ mantra’

— Discipline at all times, even within uncertainty

Another essential ‘Just enough…’ concern that all tools must support is ‘just enough discipline’ – discipline in naming, taxonomies, structures, processes and more. By the nature of the work, the forms of discipline needed will necessarily change throughout the sequence of the Squiggle, or whatever other metaphor we’re using to guide a quest – such as the ‘swamp-metaphor’:

On this weblog I’ve written extensively about this concern over the years – in some ways almost every post has been about discipline in some sense or another.

- Post: ‘Declaring the assumptions’

- Post: ‘Fractals, naming and enterprise-architecture’

- Post: ‘What What? and other taxonomic tangles’

- Post: ‘Unravelling the anatomy of Archimate’

- Post: ‘Metatheory and enterprise-architecture’

- Post: ‘Sense, make-sense, decide, act’

- Post: ‘Sensemaking and the swamp-metaphor’

- Post: ‘Sensemaking – modes and disciplines’

- Post-series on ‘Seven sins of dubious discipline’

- Post: ‘Seven sins, sensemaking and OODA’

- Post: ‘Seven sins and the Hype Cycle’

- Post: ‘The anti-want for enterprise-architecture’

- Slidedeck: ‘How do we think? – modelling our own modelling’

— Each tool or template is a guide to thinking – not a substitute for thinking

Perhaps the single most important assertion is that no tool does anything on its own – it is an aid to thinking and exploration, and not a substitute for either. Although a tool can and should provide some form of guidance, the questing is always done by the people involved – not by the tool itself.

The primary role of a tool is to provide guidance – typically in the form of a visual-checklist, to elicit insights – and to provide a structured means to capture insights.

It’s not merely about making lists – as per SWOT, for example – but about eliciting insights by moving from place to place in the frame. Although tools such as SCORE or Five Elements could be viewed (and even used) as simple tick-the-box checklists, their more usual role is more like a spreadsheet – each thing we add or change may imply changes elsewhere in the spreadsheet, which in turn ripple onward throughout the rest of it.

- Post: ‘Checklists and complexity’

- Post: ‘Principles and checklists’

- Post: ‘Enterprise Canvas as service-viability checklist’

- Post: ‘Towards a whole-enterprise architecture standard – Summary’

- Slidedeck: ‘Bridging enterprise-architecture and systems-thinking – an introduction to Enterprise Canvas’

There’s always more I could add, of course – but in the spirit of ‘Just enough…’, perhaps best to leave it there? 🙂

Over to you for comments, anyway, if you wish.

Tom, you mention:

“The primary role of a tool is to provide guidance – typically in the form of a visual-checklist, to elicit insights – and to provide a structured means to capture insights”

So true, and so relevant, especially in the world of MVP creation. The ability of the tool to provide the relevant insights, so that we can keep track of the MVP’s impact on the transition architecture, is a critical part of the overall journey.

Governance is not a welcome word in the Agile world, but if we are to frame the next important question, we need to be able to visualise the journey to date.