Services and disservices – 3: The echo-chamber

Services serve the needs of someone.

Disservices purport to serve the needs of someone, but don’t.

And therein lie a huge range of problems for enterprise-architects and many, many others…

This is the third part of what should be a six-part series on services and disservices, and what to do about the latter to (we hope) make them more into the former:

- Part 1: Introduction (overview of the nature of services and disservices)

- Part 2: Education example (on failures in education-systems, as reported in recent media-articles)

- Part 3: The echo-chamber (on the ‘policy-based evidence’ loop – a key driver for failure) (this post)

- Part 4: Priority and privilege (on the impact of paediarchy and other ‘entitlement’-delusions)

- Part 5: Social example (on failures in social-policy, as reported in recent media-articles)

- Part 6: Assessment and action (on how to identify and assess disservices, and actions to remedy the fails)

In Part 1 we explored in some depth the structure and stakeholders of services, and explored how disservices are, in essence, just services that don’t deliver what they purport to do. In Part 2 we illustrated those principles with some real-world examples in the education-system context. All clear enough so far, I’d trust?

Where we ended up at the end of the second post was that there are two key causes for disservices: the echo-chamber, and assumed or asserted priority and privilege. In this post we’ll explore the background behind the first of these, the echo-chamber of cognitive-bias.

The ‘echo-chamber’ of belief

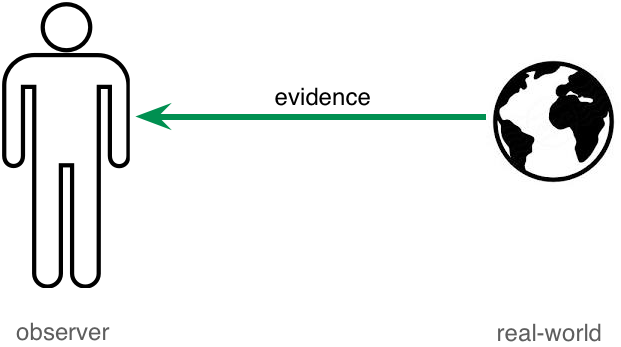

How do we make decisions? Perhaps more important, on what do we base those decisions? The short answer we’d usually give would be “on the basis of the evidence”:

Yet ‘evidence’ literally means ‘that which is seen’. And here we hit right up against a fundamental problem of cognitive-bias, sometimes known as Gooch’s Paradox: that “things not only have to be seen to be believed, but also have to be believed to be seen”. Or, as Ruth Malan put it in a recent Tweet:

- RT @ruthmalan: “In a world full of ambiguity, we see what we want to see.” ‘How Your Brain Decides Without You’

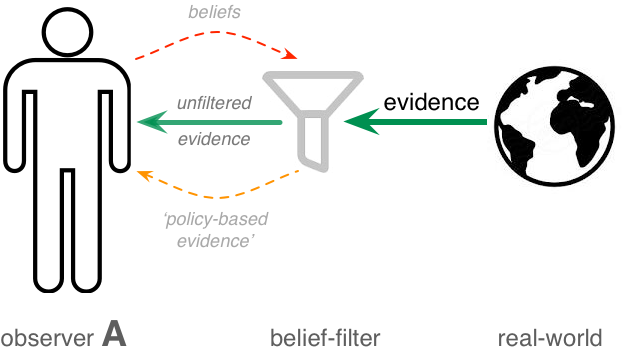

Everyone does this – it’s an inherent and in some ways necessary outcome of the way that human sensemaking works. The result, though, is that, to an extent that varies from person to person and from context to context, what we each see may seem like evidence to us, but at least some of what comes through is actually first filtered via our beliefs into something more like ‘policy-based evidence’:

The danger here is that of circular-reasoning and the like. In that part of the evidence that is filtered by our current beliefs, we see what we expect and want to see. This returns ‘policy-based evidence’ that confirms our current beliefs, but which may also exclude any information that does not conform to those beliefs, yet may be crucial for viable decision-making.
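
For those who think better in code, here is a minimal sketch of that loop, written in Python. Everything in it is an illustrative assumption rather than anything drawn from a real dataset or tool: the `Observation` type, its `supports_belief` flag and the sample observations are all hypothetical. It shows only how filtering by belief before we count the evidence will always return full confirmation, whatever the unfiltered picture looks like.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    description: str
    supports_belief: bool  # hypothetical flag: does this fit what we already believe?


def policy_based_evidence(observations):
    """Keep only the observations that the current belief expects to see."""
    return [o for o in observations if o.supports_belief]


def apparent_support(evidence):
    """Fraction of the evidence that appears to confirm the belief."""
    if not evidence:
        return 0.0
    return sum(o.supports_belief for o in evidence) / len(evidence)


# Hypothetical observations, for illustration only.
observations = [
    Observation("committee minutes report good progress", True),
    Observation("students report unmet needs", False),
    Observation("administrative headcount keeps growing", False),
    Observation("new policy document published", True),
]

unfiltered = apparent_support(observations)                       # 0.5
filtered = apparent_support(policy_based_evidence(observations))  # 1.0
```

The point of the sketch is the gap between the two numbers: the filtered view reports complete confirmation precisely because everything that might contradict the belief was removed before anyone looked.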

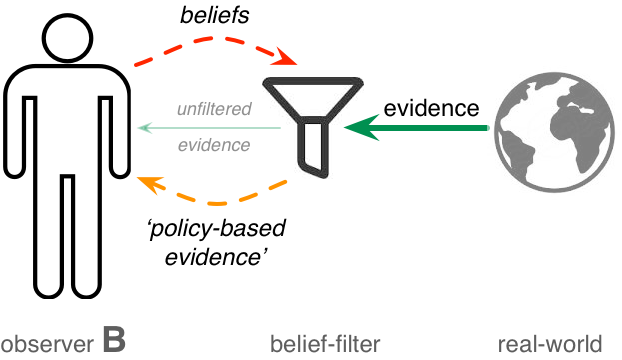

All of us do this at least some of the time: we can’t help it, it is literally an inescapable part of who we are. That much we do know, beyond doubt. (Or, since that too is of course likewise subject to Gooch’s Paradox, perhaps more accurately as “as much beyond doubt as it seems possible to be at the present time”…). And there are disciplines that can help us keep our risk of falling into the policy-based evidence loop as low as we can manage – of which more later, in the ‘Practical implications’ section of this post. Yet probably all of us have weak-points where we can fall into a full-on echo-chamber of belief, in which, as Ruth Malan quotes above, “we see what we want to see”, and almost nothing else at all – but believe it to be real-world evidence:

Which, for anything where we do have to work with the real-world rather than solely an imaginary one, is definitely Not A Good Idea…

The simplest summary here is that if a service is based in some way upon policy-based evidence, it is almost certain to deliver a disservice – if only in the form of misservices and non-service breakdowns. Which, again, is Not A Good Idea. Which, for service-design, means that we definitely need to take some care over this.

At this point, we’re going to need an example.

Back when I was at art-college, rather too many decades ago, the government of the day decided that all art-colleges had to be merged with a university or a trade-school or, or, or, anything, really – even a theological-college, in one case – because art-colleges, they, they, well, they shouldn’t be independent. They just shouldn’t. Unacceptable. (Yep, you can see the first unquestioned-assumption right there.)

The nominal justification for this policy (beyond the, uh, rather obvious politics, anyway) was that this kind of merger between fundamentally-different types of education-establishment would enable cross-disciplinary courses. In other words, a new kind of service.

Which, for us generalists, would be hugely valuable. If it ever happened, that is…

The catch, of course, was that all existing courses were structured around defined specialisms. A new kind of course would require a new kind of administration. Because, as everyone knows, administration is the central and most important part of all education, without which education is impossible. (Yep, there’s another unquestioned assumption.)

So a committee of administrators was duly convened, to work out what this new form of administration would be. Each of those administrators needed their own secretaries, and eventually their own specific team. Each of those teams worked largely without connection to any other team, because each was a specialist in one specific area. (Note the hidden assumption there, that administration and education work only in siloed specialisms.)

And because progress on this knotty problem was so slow, and so obviously difficult to manage, the university’s administrators decided that they needed to add another layer of administration over these teams of administrators. And every administrator in this new layer needed their own secretaries, and their own specialised teams. (Note another hidden-assumption, that all administrative-work requires an ever-larger fiefdom of staff and self-referential services: John Seddon would have much to say on this…)

You can see where this is going, yes? And you’re right. By the time I left the college, three years later, the supposed administrative-support for this new service had grown and grown and grown so much – each administrator now with their own secretary’s secretary’s secretary, and more – that the university had had to build a whole new campus for them, at enormous expense. A campus without students, or teaching-staff, or technicians or anyone like that: just administrators.

Yet they weren’t actually administrating anything, other than perhaps their own endless inter-team communications. Those much-promised interdisciplinary courses – the service that was the nominal reason for that whole administration’s existence – well, they still hadn’t even gotten started by then. To my knowledge, they never did.

In short, a supposed service for one group of stakeholders – the students – skewed into a non-service (nothing happening), misservice (huge cost to the taxpayer and others, for no actual return) and disservice (betrayal of the students’ hopes and needs) as a result of a swathe of circularly self-referential beliefs on the part of one dominant cluster of stakeholders – the university’s administrators, and the so-called ‘public servants’ behind them. Not A Good Idea…

(For a counterpart example with a happier ending, where another group of administrators in a business context nearly caused the same kind of failures, but were eventually able to refocus successfully around the real service through reframing of belief and role, see the later part of Chapter 3, ‘A Defining Goal’, in Ed Catmull’s book Creativity Inc. – in that specific case, about the role and function of line-management in the production of the film Toy Story.)

Given all of that mess caused by a circular ‘ignore-ance’ of both needs and reality, where does such an echo-chamber get started?

Much as implied in the administrators-example above, probably the most important keyword here is belief.

And in essence, a belief is that which we hold to be true.

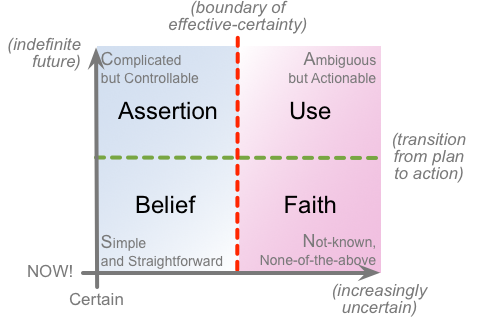

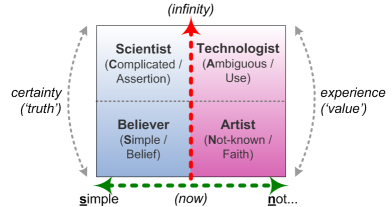

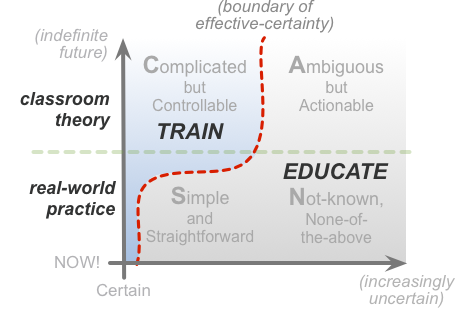

It’s one of a set of distinct decision-mechanisms, which we could illustrate in SCAN terms as follows:

Each of these decision-mechanisms is typically associated with distinct tools and techniques:

And each is also typically associated with distinct ‘modes’, or ‘ways of working on the world’, which, as described in the post ‘Sensemaking and the swamp-metaphor’, we could crossmap to SCAN as follows:

In terms of function, a belief is a preselected decision or pre-interpretation that is imposed upon reality. Belief presumes that the world will work in accordance with that interpretation or belief: in other words, that its pre-selected decision will be correct and valid – ‘true’ and useful – within a given real-world context.

(By the way, this is actually the basis of the concept of cause-and-effect: it’s usually dressed up in prettier and more ‘scientific’-looking terms, but in essence cause-and-effect is just an interlocking meshwork of belief.)

Note that there’s nothing inherently ‘wrong’ with belief. For most purposes, we rely on belief to get things done, to make sense of what’s going on in real-time, and more – because in real-time action, one thing that we don’t have is the luxury to stop and think. Our beliefs allow us to pre-filter the endless tsunami of real-world information, to get things mostly done mostly right in real-time.

The catch – and it’s a crucially important catch that too many people seem to miss – is that belief has no means to test its own validity. Its whole function is to act on the assumptions that underlie it, in almost literally robotic fashion: it must assume that the real-world will conform to the expectations of the belief. And, importantly, if the real-world does not conform to those expectations, the activities guided by the belief may well fail – but the entity operating on those beliefs will have no means as such to respond to that failure, because it only knows how to work in a world that does conform to those expectations. In computing terms we’d call that kind of failure a ‘crash’; in more human terms, we’d probably just call it a mess…
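
Since we’ve borrowed the word ‘crash’ from computing, a small Python sketch may help make the point concrete. It’s a hedged illustration only: the function names, the `course_type` key and the handler text are all hypothetical. The first function acts purely on belief, with no way to notice that its assumption has failed; the second keeps ‘one small corner’ open to check the assumption before it acts.

```python
def belief_driven_action(context):
    """Act purely on belief: assume every course fits a known specialism."""
    handlers = {"specialism": "run the existing specialist course"}
    # No fallback here: the belief has no means to test its own validity,
    # so an unexpected context raises KeyError (the 'crash').
    return handlers[context["course_type"]]


def checked_action(context):
    """Same belief, but with an explicit check on the assumption behind it."""
    handlers = {"specialism": "run the existing specialist course"}
    course_type = context.get("course_type")
    if course_type not in handlers:
        # The world did not conform: stop and switch to a different mode.
        return "assumption failed: stop and re-examine the belief"
    return handlers[course_type]


# Hypothetical context that the belief never anticipated.
unexpected = {"course_type": "cross-disciplinary"}

try:
    belief_driven_action(unexpected)
    crashed = False
except KeyError:
    crashed = True

fallback = checked_action(unexpected)
```

Note that the check itself is not part of the belief: it has to come from somewhere outside it, which is the whole point of the paragraph above.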

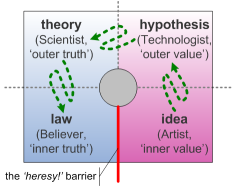

All of which means that if we want to test beliefs for validity, we’re going to need something else beyond belief alone. For example, in any would-be scientific context, we would typically use the classic sequence from idea to hypothesis to theory to law (with ‘law’ otherwise known as ‘belief’), which we could crossmap to SCAN as follows:

The sequence of idea-hypothesis-theory-law is only one way of making sense within SCAN-style context-space: there are many others – probably an infinity of others – each variously successful (or not) in their own ways.

Yet note that, for many people, the idea-hypothesis-theory-law sequence is not only supposedly the only permissible path through the context-space, but that it always comes to a final, immutable stop at the end of that sequence: the law is the law, and once we reach ‘the law’, there’s supposedly nowhere else to go. But in most cases, maybe even all, that assumption itself is a belief – a belief (sometimes known as the heresy-barrier) that can and often does block sensemaking, and actively feeds an echo-chamber of its own.

The catch here is grounded in the notion that ‘the law’ – scientific or otherwise – is also supposedly the pinnacle of ‘the True’: and the very notion of ‘the True’ has especial resonance for many people. It signifies absolute certainty, permanence, immutability, predictability, trustworthiness and more. But… that much-wanted aspect of ‘the True’ only works on those parts of the context that always remain the same. Which in many cases they don’t. Oops…

The contexts we deal with in enterprise-architecture and service-design and so on are riddled with aspects that don’t, can’t and won’t always stay the same. One of them is that theme around ‘same-and-different’, or ‘mass-uniqueness’, that we explored briefly in the previous post. We see it in themes such as ‘variety-weather’, or what I’ve nicknamed ‘the inverse-Einstein test’ – a key feature of the SCAN framework. There’s so much ‘It depends…’ in enterprise-architecture that, to be honest, it often more closely resembles art or craft, or even alchemy, than any more conventional kind of science. Even science itself is ultimately just another belief-system – and it doesn’t much help if we pretend that it isn’t. One commenter here summarised the catch really well by drawing a distinction between ‘science’, as a set of practices, and ‘Science’, as a closed belief-system more akin to a kind of religion:

Too much belief in officials or technology (i.e. tools) can be counterproductive in the pursuit of science, but that belief is a pillar of Science.

Thus it’s possible to be for one and against the other, even though they share the same name and are conflated in popular culture.

Another aspect of the same catch is that people often seem to get very muddled about ‘objective’ and ‘subjective’, with a belief that decisions should only ever be based on fact, and that only supposedly-‘objective’ things can be considered ‘fact’. The first part of that assertion might perhaps be true, or at least useful, but the second part is onto a loser before it even starts: as soon as we enter a context where things don’t always stay the same – which seems to be so for most real-world contexts – and anyway are observed differently by each person – as we’ve seen earlier above – then what exactly is meant by the term ‘objective’? Beyond that, supposedly-‘subjective’ feelings are facts in their own right, whereas opinions about those feelings are not facts – a point that becomes extremely important when a customer-service rep comes face to face with an angry client who’s just suffered yet another disservice from the company…

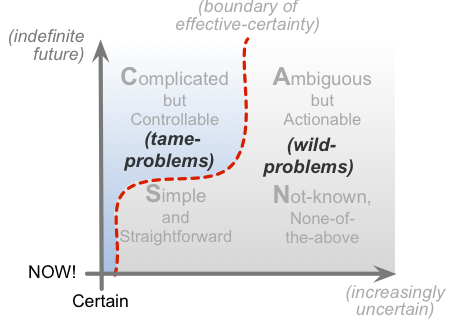

Perhaps a simpler way to put it is that ‘the True’ only works on tame-problems, where things do stay the same each time we come to them. But the real-world also includes all those other things that haven’t been ‘tamed’ yet, and perhaps never will be: all the ‘wicked-problems’ (or, as I would prefer to put it, the wild-problems), for which belief alone is inherently-unreliable. We could map out in SCAN terms the respective scopes of ‘tame’ and ‘untamed-wild’ somewhat as follows:

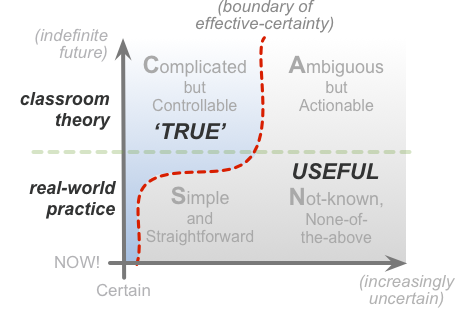

We can also map it out in terms of the scopes within which a ‘true/false’ decision would be valid, versus where that kind of concept can be inherently misleading, and we instead have to focus more on qualitative concerns such as usefulness:

And much the same occurs in that important distinction between training and real-education that we saw whilst exploring the structures of education-systems in the previous post:

The briefest summary there is that the echo-chamber of belief will work well – or, at least, not be a problem – anywhere in that blue-zone towards the left-hand side of each of those SCAN cross-maps. (We might note, though, the implicit warning in those crossmaps that beliefs and ideologies tend to work better in theory than they do in real-world practice…) But the moment we move outside of that often-quite-narrow domain of effective-certainty – into wild-problems, into the domain of the ‘useful’, into realms where only real skills and real-education will help us survive the storms of variety-weather – well, that’s when we’ll need to keep a very careful check on what we believe, and keep the echo-chamber turned right down as much as we possibly can.

As I see it, there are two major causes for the echo-chamber to rise in volume enough to block out any view of the real-world: fear, and over-investment in a specific belief.

In a business-type context, the fear is most often a fear of loss of ‘control’, or loss of certainty. It’s typically triggered as soon as something stops working ‘as expected’, in effect forcing a transition over ‘the edge of panic’ into the realm of the Not-known:

What does work at this point is to let go of control, and switch over to the tactics and techniques appropriate to the Not-known domain, such as reframe, rich-randomness and reference to guiding-principles, and maybe even a touch of faith.

What doesn’t work at this point is to cling on to the previous belief, ignore any evidence that contradicts that belief, and retreat into an assertion that it’s the real-world that’s ‘wrong’. Yeah, it sounds daft when phrased like that, but it’s disturbingly common – and all of us do it from time to time.

To put it at its simplest, when we get into that kind of mess, we may think we’re ‘acting rationally’, that we’re ‘in control’ and all that: but in reality it’s the fear that’s in control, not the rational mind… It’s all too understandable, though, and largely forgivable too, because all of us do fall into that panic-state from time to time, when a strong-enough mythquake catches us unawares.

Yet when we get to an over-investment in a specific belief, that is much more of a conscious choice: we choose to hold to a belief – sometimes even when we know that that belief is not valid – simply because we want to believe in its purported ‘truth’. We hold on to a market or a business-model long beyond its ‘use-by date’, solely because we have so much investment in it – financial-investment, infrastructure-investment, emotional-investment or whatever. We fail to update an industry-framework because the training-providers have too much investment in training-materials and the like for the existing model, and refuse to acquiesce to any new costs to themselves. We stay in a job we hate, year after year, solely because we believe that that’s the only way that we can support our families – and ignore the repeated hints from Reality Department that other options do indeed exist. The list goes on and on…

As we’ll see in the next post in this series, both of these two causes for the echo-chamber – fear, and over-investment – will often interweave intensely with the other key driver-mechanism for disservices, namely purported ‘priority and privilege’. But before we turn to that, let’s first explore what we can do to mitigate the impacts of the echo-chamber in our everyday enterprise-architecture practice.

Practical implications for enterprise-architecture

The fundamental problem that Gooch’s Paradox warns us about is this: if we are unable to question our existing beliefs, how are we going to see any evidence that contradicts those beliefs?

This is extremely important in enterprise-architecture, service-design and the like, because in practice it’s usually Not A Good Idea to ignore or block out warnings from the real-world that our beliefs and assumptions are not valid. Most of us by now will have learnt about that point the hard way… – and yet we all still fall for it, time after time. That’s what can make all of this kinda hard…

It really does apply everywhere. The problem is endemic throughout every aspect of enterprise-architecture, including its underlying theory and metatheory; it’s inherent in all academic disciplines, perhaps especially in the social-sciences; it’s a core concern even in physical-sciences such as biochemistry; it even creates doubt about the validity of science itself. If you want a first-hand demonstration of how easy it is for you yourself to fall into the same trap, take the admittedly somewhat-extreme test in my post ‘Bending reality’. There’s no easy way to escape it.

What does seem to work, though, is a specific kind of deliberate practice: to learn how to use beliefs as tools, not ‘truths’. Stan Gooch himself summarised this well in a comment that I’ll paraphrase somewhat here:

Whilst undertaking any enquiry, accept every statement at face-value, exactly as if true – whilst keeping one small corner of your mind aware that it isn’t.

(Note that point about ‘as if’ true, not ‘is’ true – that distinction might often seem subtle, yet is absolutely crucial for this type of work.)

For a practical point-by-point guide on this, using a framework called the ‘swamp-metaphor’, see the post ‘Sensemaking – modes and disciplines’. It’s based somewhat on a Jungian-type frame, but also loosely crossmapped to the SCAN frame, as per earlier above:

Each ‘domain’ within the frame represents a mode or ‘way of working’ within the overall context-space, each bounded by different assumptions, different priorities, different focus-concerns. We then ‘move around’ – metaphorically-speaking – within the context-space, switching between modes in a deliberate and disciplined manner, using the respective descriptions for each mode as a guide. In the swamp-metaphor framework, the guidance for each mode is laid out in a consistent format, under the following headings:

- This mode available when…

- The role of this mode is…

- This mode manages…

- This mode responds to the context through… (i.e. prioritises, for sensemaking)

- This mode has a typical action-loop of…

- Use this mode for…

- We are in this mode when…

- ‘Rules’ in this mode include…

- Warning-signs of dubious discipline in this mode include…

- To bridge to [other mode], focus on…

Within each mode, it’s especially important to watch for the respective “warning-signs of dubious discipline”. If we don’t do so, we’re likely to find ourselves walking straight into the echo-chamber trap, or some other crucial methodological-mistake, without realising what we’ve done: once again, Not A Good Idea…

More generally, there are some specific keywords or keyphrases to watch for in everyday language: in particular, phrases such as ‘must be’, ‘it’s obvious’, ‘it’s self-evident’, or ‘as everyone knows’. If we see or hear any of those keywords, alarm-bells should go off straight away – because it’s very likely that policy-based evidence is somewhere in play. You Have Been Warned?
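
If you’d like a crude automated alarm-bell for written material, something like the following Python sketch would do as a starting point. It’s an assumption-laden illustration, not a validated tool: the function name and the sample sentence (borrowed from the art-college story above) are just for demonstration, and the phrase-list is simply the four phrases named above. A hit means “look more closely here”, nothing more.

```python
import re

# The four warning-phrases named in the text; extend to suit your own context.
WARNING_PHRASES = (
    "must be",
    "it's obvious",
    "it's self-evident",
    "as everyone knows",
)


def find_warning_phrases(text):
    """Return the warning-phrases that occur in the text, in list order."""
    # Lower-case the text and normalise curly apostrophes to straight ones.
    normalised = text.lower().replace("\u2019", "'")
    return [
        phrase
        for phrase in WARNING_PHRASES
        if re.search(r"\b" + re.escape(phrase) + r"\b", normalised)
    ]


sample = (
    "Because, as everyone knows, administration is the central "
    "and most important part of all education."
)
hits = find_warning_phrases(sample)  # ['as everyone knows']
```

A scan like this can only flag the wording, of course; working out which unquestioned assumption sits behind the phrase is still a job for a human.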

Enough for now on this: time to move on to the next post in this series, and to the other key factor in disservices – the tendencies towards self-selected ‘priority and privilege’, and the damage that that can cause.

In the meantime, though, any questions or comments so far? Over to you, anyway.

The two main inoculations against echo chambers, from my experience, include the one takeaway from a freshman philosophy overview – “Question all givens”, and from much later study, the great takeaway from Alfred Korzybski – “Whatever you SAY a thing is, it is NOT.”

The caveat here is that, this style of thought leaves almost zero room for a ‘comfort zone’. Tra la … 🙂

Great reminders, Doug – thanks.

On “The caveat here is that, this style of thought leaves almost zero room for a ‘comfort zone’” – yep, true indeed. I think I did warn somewhere above that this ain’t easy (and that it applies to everyone, to all of us, not just some easily-blamable ‘Them’…). But if we need to warn folks that this one is hard, watch out for the next one, around ‘priority and privilege’ – that one really is challenging… 😐