Seven sins – 4: The Meaning Mistake

Enterprise-architecture, strategy, or just about everything, really: they all depend on discipline and rigour – disciplined thinking, disciplined sensemaking and decision-making.

But what happens when that discipline is lost? What are the ‘sins’ that can cause that discipline to be lost? How can we know that it’s been lost? And what can we do to recover?

This is part of a brief series on metadiscipline for sensemaking via the ‘swamp-metaphor’, and on ‘seven sins of dubious discipline’ – common errors that can cause sensemaking-discipline to fail or falter:

- Seven sins of dubious discipline – introduction to the series

- Sin #1: The Hype Hubris

- Sin #2: The Golden-Age Game

- Sin #3: The Newage Nuisance

- Sin #4: The Meaning Mistake

- Sin #5: The Possession Problem

- Sin #6: The Reality Risk

- Sin #7: Lost In The Learning Labyrinth

- Seven sins – a worked-example

And this time it’s Sin #4, the mind-bending muddles of the Meaning Mistake:

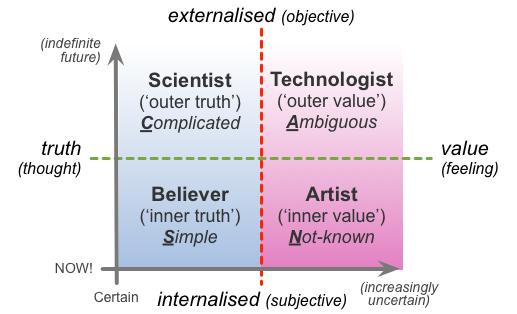

This one occurs mainly in and around the Scientist-mode, where we aim to establish outer-truth, otherwise known as ‘fact’. There are clear rules in this mode for deriving fact; the Meaning Mistake occurs whenever we’ve been careless with those rules, leading us to think we’ve established that ‘truth’ when we haven’t.

The simplest way to describe this is with a cooking metaphor: the end-results of our tests for meaning should be properly cooked, but if we’re not careful, they’ll end up half-baked, or overcooked, or just plain inedible.

Interpretations go half-baked when we take ideas or information out of one context, and apply them to another, without considering the implications of doing so. One form of this is what logicians describe as ‘induction’, or reasoning from the particular to the general – such as in that common misuse of the feminist slogan “the personal is political”, where we assume that whatever happens to us must also apply to everyone else. We then leap to conclusions without establishing the foundations for doing so – which can cause serious problems when we try to apply any of it in practice in the Technologist-mode.

(‘Half-bakery’ is a common characteristic of newage, but it occurs often enough in the sciences too. A classic example in archaeology is RJC Atkinson’s infamous ‘reconstruction’ of how the bluestones were brought by sea from the Prescelly mountains in Wales to Stonehenge. Alan Sorrell’s drawings for Atkinson show wild savages propelling a raft with makeshift paddles and a wind-tattered sail – and the four-ton bluestone lashed on top of the raft.

Atkinson was a competent archaeologist, but clearly knew nothing about boats – the whole thing is best described as ‘suicide by sea’. You could just about get away with it on a placid inland river, but offshore the first real wave would flip the whole thing over, drowning everyone on board. As maverick archaeologist Tom Lethbridge pointed out, a more viable solution would be to sling the stone underwater, as a stabilising keel between two sea-going canoes – as he’d seen Inuit hunters do in the Arctic.)

Over in the New Age domains, a good example would be the (mis)use of terms such as ‘frequency’, ‘vibration’, ‘radiation’ or ‘energy’ – each of which has a precise meaning in science, but in those domains is often little more than a loose metaphor. Each is useful as a metaphor: but the catch is that, as a metaphor, it has no definitions on which to anchor either itself or any cross-references. So if we then take the metaphor too literally – the ‘frequency’ of an imagined ‘vibration’, perhaps – we soon end up with something that’s meaningless to anyone else, and probably to ourselves as well. The occasional moves towards standardised terms do help – but there’s no way to define meaningful values for frequencies and the like when the only possible standards reside in people’s heads. There doesn’t seem to be any way round this problem, either. Tricky…

Once again, though, don’t laugh at other people’s mistakes, because the enterprise-architecture field is barely any better. If you ask for a standard definition of obviously-important terms such as process or service or capability – let alone enterprise or architecture – you’ll discover very quickly why the collective-noun for people in our trade is ‘an argument of architects’. It’s possible, with some care, to build definition-sets that are consistent within themselves for some aspects of architecture: but there’s still no consistency across the overall space at all – and, by the nature of what we’re dealing with, probably never will be, either. Which means it’s up to us to maintain the discipline here: because if we don’t – if we instead blithely assume that “I know what I mean, so everyone knows what I mean too” – then the chances of not going half-baked would be slim at best. Not A Good Idea…

Interpretations risk going overcooked whenever we skip a step in the tests, or ignore warnings from the context that we’re looking in the wrong way or at the wrong place. It’s the right overall approach – deduction, or reasoning from the general to the particular, rather than induction – but even a single missed step can soon take the reasoning so far sideways as to invalidate the lot.

(As described back in the post on ‘the echo-chamber’, one of the classic clues that something’s gone overcooked is the phrase “must be…”.

Some years back the physicist John Taylor became interested in dowsing [US: ‘water-witching’], and decided to do some tests, looking for ‘the mechanism’ by which it worked. We could have told him beforehand this was not a good idea – other physicists over the past century or so have shown that many different mechanisms can be involved, and can switch between them almost at random. But no matter, at least he started the right way, by going back to first principles. “There are only four forces in the universe”, he asserted: “weak nuclear, strong nuclear, electromagnetic, gravitational. It must be one of those.”

So far so good, sort of. But then his Scientist-mode certainty got the better of him: “It must be electromagnetic, and it must be in this frequency band.” By then the smell of burning was beginning to be noticeable to everyone – yet still Taylor kept going. “I couldn’t find any effect in that band”, he said, “therefore it must be that dowsing does not exist.”

To those who don’t know how science works, that chain of reasoning might at first seem to make sense: but in fact it’s so overcooked as to be charcoaled toast. And when even the normally staid journal New Scientist hauled him over the coals for it, so was Taylor’s reputation…)

Deduction works by narrowing scope, narrowing the range of choices. In formal scientific experimentation, we’re supposed to change only one parameter at a time, to ensure that we don’t accidentally skip a step and narrow the scope inappropriately. But if we don’t know what all the parameters are, it’s all too easy to change more than one as we change the conditions of the experiment. A lot more than one parameter, sometimes… And as soon as we do so, we go overcooked.
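For anyone who logs experiments in software, the ‘one parameter at a time’ rule can be turned into an explicit check. What follows is a minimal Python sketch, not a standard tool: the function names and the example parameters are hypothetical. Note its built-in limit, which is exactly the point above: it can only compare the parameters we’ve thought to record, so it says nothing about the ones we don’t yet know exist.

```python
# Hypothetical helper names; a sketch, not an established library.
_MISSING = object()  # sentinel so that "not recorded" differs from any real value


def changed_parameters(before: dict, after: dict) -> set:
    """Names of recorded parameters whose values differ between two runs.

    A parameter recorded in only one of the runs also counts as changed.
    """
    names = set(before) | set(after)
    return {n for n in names if before.get(n, _MISSING) != after.get(n, _MISSING)}


def check_single_change(before: dict, after: dict) -> set:
    """Raise ValueError if more than one recorded parameter changed between runs."""
    changed = changed_parameters(before, after)
    if len(changed) > 1:
        raise ValueError(f"more than one parameter changed: {sorted(changed)}")
    return changed


# Example runs (invented values, for illustration only).
baseline = {"height_inches": 0, "instrument": "detector-A", "time": "noon"}
next_run = {"height_inches": 6, "instrument": "detector-A", "time": "noon"}
print(check_single_change(baseline, next_run))  # {'height_inches'}
```

The check passes quietly when only one recorded parameter moves, and complains loudly when several do; an unrecorded parameter, such as the instrument’s own behaviour, can still slip through unnoticed.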

(The problem here is that it’s very easy to go overcooked without realising it: one missed check that we didn’t even know that we’d needed can ruin the whole dish.

At Belas Knap a colleague and I had been doing some experiments to check for possible sources of very low-frequency sound, using a home-built detector constructed by an engineer friend. The method this time was strict physics, though we were still looking for ‘earth mysteries’-type anomalies. The sensor had a microphone at one end of the box, with a loudspeaker on the side to give an audible signal.

The first time we used the box, we thought we’d found a consistent effect, very similar to the apparent ‘bands’ often found by other means on standing-stones and the like. The speaker was quiet at ground-level, but went louder about six inches above the ground, then quiet again a bit higher, and so on – a repeating pattern, at roughly six-inch intervals. Very exciting – we demonstrated it to some visitors at the site, too.

But when we talked it through with the engineer, he couldn’t make sense of it: the sensor hadn’t been designed to work that way at all. Then a horrid realisation, followed by some quick calculations, and crestfallen faces all round his workbench: the pitch of the sound from the speaker had the exact same wavelength – distance from peak to peak – as the pattern we’d found. In which case, what we’d found was merely an instrument-related artefact, a feedback-effect between speaker and microphone – nothing to do with the site at all. We’d gone overcooked, but didn’t know it, because we hadn’t known until then how the sensor worked.

Embarrassing, to say the least, given how much we’d hyped it up to those visitors. Oh well, back to the drawing-board…)
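The engineer’s ‘quick calculations’ rest on the basic relation between the speed, frequency and wavelength of sound: wavelength = speed ÷ frequency. The original doesn’t give the speaker’s pitch, so this Python sketch works backwards from the roughly six-inch spacing, assuming sound travels at about 343 m/s (dry air at around 20 °C). It illustrates the kind of check involved; it is not a reconstruction of the actual figures.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at roughly 20 °C
METRES_PER_INCH = 0.0254


def wavelength_m(frequency_hz: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Wavelength (peak-to-peak distance) of a tone: lambda = v / f."""
    return speed / frequency_hz


def frequency_hz(wavelength: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Frequency whose wavelength equals a given spacing: f = v / lambda."""
    return speed / wavelength


spacing = 6 * METRES_PER_INCH  # the roughly six-inch pattern, in metres
pitch = frequency_hz(spacing)
print(f"{pitch:.0f} Hz")  # about 2251 Hz
```

On these assumptions, a speaker tone somewhere a little above 2 kHz would produce ‘bands’ at about that spacing. Comparing `wavelength_m()` of the speaker’s known pitch against the measured pattern, before any demonstration, is the sort of test that would have flagged the artefact early.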

For the dowsers I worked with all those decades ago, one essential defence against going overcooked was to include an ‘Idiot’ response in the dowsing-instrument’s vocabulary. In other words, some kind of response that is different to those for neutral, Yes, No, or any of the directional responses, and which indicates that the current question – whatever it is – can’t be answered in any meaningful way by Yes or No or suchlike. “Un-ask the question” is what the ‘Idiot’ response really means.

To give a simple example, if the only available responses are ‘Yes’ or ‘No’, how would we resolve a double-question such as “Should I go left or right?”, or a double-negative such as “Is this not the right way to go?” The usual yes-or-no answers can’t make any sense here – so we’ll need another kind of response to warn us of that. That type of ‘un-ask the question’ response also provides useful feedback for improving our skill at framing questions that can be answered meaningfully just by Yes or No – and as any scientist knows, the hardest part of the discipline is designing the right questions for an experiment to answer.
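To make the idea concrete, here is a minimal Python sketch of a response vocabulary with an explicit fourth ‘Idiot’ value, plus a deliberately crude screen for the two problem-shapes just described: the double-question (“left or right?”) and the negated question (“is this not…?”). The names and the word-matching heuristics are hypothetical illustrations, not a dowsing method; real questions can go wrong in far subtler ways than any keyword check will catch.

```python
import re
from enum import Enum
from typing import Optional


class Response(Enum):
    NEUTRAL = "neutral"
    YES = "yes"
    NO = "no"
    IDIOT = "un-ask the question"  # the question itself can't be answered by Yes/No


NEGATIONS = {"not", "no", "never"}


def screen_question(question: str) -> Optional[Response]:
    """Return Response.IDIOT if the question trips a crude red flag, else None.

    None means only 'no obvious problem found', not 'this is a good question'.
    """
    # Normalise curly apostrophes so "isn’t" is treated like "isn't".
    words = re.findall(r"[a-z']+", question.lower().replace("\u2019", "'"))
    if "or" in words:
        return Response.IDIOT  # double-question: Yes/No can't choose between options
    if any(w in NEGATIONS or w.endswith("n't") for w in words):
        return Response.IDIOT  # negated question: a bare Yes or No is ambiguous
    return None


for q in ("Should I go left or right?", "Is this not the right way to go?",
          "Is the spring to the north of here?"):
    print(q, "->", screen_question(q))
```

Returning `None` rather than `Response.YES` for an unflagged question keeps the two jobs apart: the screen only decides whether to un-ask the question, while the answer itself still has to come from whatever method we’re actually using.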

In a sense, though, the dowsers have it easier than we do as enterprise-architects: at least they have a clear way to access that warning-signal of ‘un-ask the question’. We don’t. (And waving a ring-on-a-string or whatever over our reference-architectures and roadmaps is probably not a viable option, either – not if we want to keep what little credibility we currently have…!) Instead, the only way that works is to develop fingerspitzengefühl, an intuitive sense of what’s right or wrong, what’s missing, of what’s not there as well as what is. And that’s hard – especially over the scope and scale of a true ‘architecture of the enterprise’. Which is why learning to play this game properly and well will take most of us a lot longer than we’d expect or like: certainly a lot longer than the infamous “learn enterprise-architecture in just one week!!!” certification-scams would purport, at any rate.

So to sum up so far, it should be clear that if we’re not careful, we can find ourselves stuck in the half-bakery, or with our questing at risk of becoming uncomfortably overcooked.

Yet worse, we can also end up with ‘answers’ that really are inedible – that are both half-baked and overcooked at the same time. Courtesy of the Newage Nuisance, the New Age fields are riddled with it – and it doesn’t exactly help that that incompetence is then often glossed over with hype (Sin #1) or some kind of golden-age myth (Sin #2). Oops…

(One example I often quote was an Australian who insisted that dowsing be described strictly as effects of physical ‘radiations’ – which, bluntly, we know that it isn’t. He had some strange ideas about limits, too: he asserted that only certain ‘special people’ – people like himself, that is, without any tooth-fillings, scars or eye-glasses – could use what he called the ‘radial detector’.

Some years later, he came across the idea of map-dowsing – using the same techniques, but working solely from a map to find things in the real-world. But he still desperately clung to his assertion that it worked only with physical radiations: the place somehow ‘knew’, he said, that it had to send these ‘radiations’ to every map and photograph of itself – even a hand-drawn sketch-map. Which stretches credibility a bit, to say the least. And by the time he’d insisted that such dowsing could be done only at midnight, beneath a bare electric light-bulb, by a person who must have no clothes on, it was clear that he’d lost the plot completely. Might have worked for him that way, perhaps – but most of us find somewhat simpler ways to do it…)

And once again, don’t laugh too long or loud at others’ mistakes, because enterprise-architecture often isn’t all that much better. One of our well-known problem-areas, for example, is the insistent attempts to assert that enterprise-architecture ‘must be’ some kind of ‘science-based Enterprise Engineering’, or at least built on some kind of ‘science-based’ theory. To me, that’s a Meaning Mistake that we really must reject: its over-certainties not only don’t help us in dealing with practical concerns such as mass-uniqueness or requisite-fuzziness or variety-weather, they’re often seriously misleading overall. And whilst, yes, the Scientist-mode does indeed have real importance in our work, the reality is that most of EA aligns more with technology, or craft, or even alchemy, than it does with most mainstream notions of ‘science’. In enterprise-architecture, an over-focus on ‘science’ is a Meaning Mistake that we really can’t afford.

One useful hint comes from what Edward de Bono describes as his ‘First Law of Thinking’: “proof is often no more than a lack of imagination”. (There’s also a rather more forceful version which asserts that “certainty comes only from a feeble imagination”…) The problem here is that, in itself, the Scientist-mode doesn’t have any imagination: it just follows the rules. So to complement the Scientist, we need to be able to dive into one of the ‘value’ modes – usually the Technologist-mode, but also the Artist-mode – to collect new ideas to play with, and bring them back for the Scientist-mode to test. We do have to remember to distinguish between the new ideas – the imagination – and facts – the results of tests – but with care it does work well. This is just as true in the formal sciences, too: Beveridge’s classic The Art of Scientific Investigation talks about the use of chance, of intuition, of dreams, and the hazards and limitations of reason – “the origin of discoveries is beyond the reach of reason”, he says.

What won’t help here is the Believer-mode: in fact it can cause the Meaning Mistake, because it treats its beliefs as facts – taking them as true ‘on faith’, so to speak. Muddled ideas about ‘spirituality’ can easily lead to the Newage Nuisance; but when self-styled ‘scientists’ start treating their beliefs as fact, they end up with a bizarre, aggressive pseudo-religion called ‘scientism’ that can be a real nuisance for anyone working in the subjective space. The classic giveaway clue here is that as soon as emotion enters the picture, it’s no longer the Scientist-mode – it’s the Believer, masquerading as the Scientist.

And as with the ‘must-be mistake’, another warning-sign of potential ‘inedibility’ is any use of a phrase such as “it’s obvious” or “of course it’s the same as…” – because they usually mean that a key step or test has been skipped (‘obvious’), or that a simile or metaphor has been mistaken for fact.

But the only true protection against the Meaning Mistake is to recognise that the Scientist-mode has strict rules for meaning, to derive what it calls ‘fact’ – and we cannot be careless with those rules if we want our work to be meaningful in that mode. We can go off briefly to other modes to gather new ideas to test, but within the Scientist-mode itself, there’s no middle ground: either something is fact, or it isn’t. We ignore that point at our peril…

Mapping to ‘swamp-metaphor’ disciplines

To map the above to the set of disciplines for sensemaking and decision-making from the ‘swamp-metaphor’:

And in turn cross-mapped to the SCAN framework for sensemaking and decision-making:

This time, we don’t need to map what’s going on here to those two key problem-areas of ‘applied-science’ (scrambled Technologist-mode) or ‘The Truth’ (scrambled Believer-mode): it’s mostly stuck in the Scientist, with occasional confusions from the Believer. In essence, we’ve summarised all the key mapping-issues above:

- sensemaking goes half-baked whenever we take ideas or information out of one context, and apply them to another, without considering the implications of doing so

- sensemaking goes overcooked whenever we skip a step in the tests, or ignore warnings from the context that we’re looking in the wrong way or at the wrong place

- sensemaking goes inedible when we allow it to go both half-baked and overcooked at the same time

- we need to beware of de Bono’s ‘First Law of Thinking’ – that purported ‘proof’ is often no more than a lack of imagination

- whenever emotion enters the picture in the context of something that purports to be ‘fact’, it’s no longer the Scientist-mode – it’s the Believer, masquerading as the Scientist

- within the Scientist-mode itself, there’s no middle ground: either something is fact, or it isn’t

Note that these concerns apply primarily to the two certainty-modes, the Scientist and the Believer. In the Technologist-mode, and even more in the Artist-mode, it is okay to go half-baked, or even go overcooked – in fact it’s something we not only might do but should do, in certain stages of idea-development and the like. The key is to know that we’re doing it, and why we’re doing it – and above all, not mistake it for ‘fact’.

In summary:

— the Meaning Mistake arises from:

- carelessness with the rules for the Scientist-mode

- blurring with the Believer-mode by treating ‘scientific’ beliefs as fact

— to resolve or mitigate the Meaning Mistake:

- watch carefully for keyphrases such as “of course” or “it’s obvious” or “must be” – all of which indicate a high probability of meaning-mistakes

- watch carefully for misplaced emotion in what purports to be the Scientist-mode – it’s an indicator that sensemaking is actually stuck in the Believer-mode

- include an ‘Idiot’ or ‘un-ask the question’ response in the vocabulary to reduce the risk of overcook

- develop rigour and discipline around appropriate usage of formal-reasoning, including deductive, inductive and abductive reasoning-modes
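The keyphrase check in the first point above is simple enough to automate as a first pass over our own drafts, reports and review notes. This is a hypothetical Python helper, not an established tool; the phrase list comes from this post and is neither complete nor precise. Expect false positives (“the form must be signed”) and misses (any paraphrase): a hit only marks a spot where a human needs to check whether a step has been skipped.

```python
import re

# Warning phrases named in this post; illustrative, not exhaustive.
WARNING_PHRASES = ("must be", "of course", "it's obvious")


def find_warning_phrases(text: str) -> list:
    """Return (phrase, position) pairs for each warning phrase, in text order."""
    # Lower-case and normalise curly apostrophes so "It’s obvious" still matches.
    normalised = text.lower().replace("\u2019", "'")
    hits = []
    for phrase in WARNING_PHRASES:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, normalised):
            hits.append((phrase, match.start()))
    return sorted(hits, key=lambda hit: hit[1])


claim = "It must be electromagnetic, and of course it's obvious which band."
for phrase, pos in find_warning_phrases(claim):
    print(f"check near character {pos}: '{phrase}'")
```

Run over Taylor’s chain of reasoning, for instance, it would flag each “must be” in turn; whether each one is an overcooked leap or a justified step is still a judgement only we can make.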

Anyway, enough on the Meaning Mistake for now: time to move on to Sin #5, The Possession Problem.

—

(Note: This series is adapted in part from my 2008 book The Disciplines of Dowsing, co-authored with archaeographer Liz Poraj-Wilczynska.)
