Services and disservices – 6: Assessment and actions

Services serve the needs of someone.

Disservices purport to serve the needs of someone, but don’t – they either don’t work at all, or they serve someone else’s needs. Or desires. Or something of that kind, anyway.

And therein lie a huge range of problems for enterprise-architects and many, many others…

This is the final part of a six-part series on services and disservices, and what to do about the latter to (we hope) make them more into the former:

- Part 1: Introduction (overview of the nature of services and disservices)

- Part 2: Education example (on failures in education-systems, as reported in recent media-articles)

- Part 3: The echo-chamber (on the ‘policy-based evidence’ loop – a key driver for failure)

- Part 4: Priority and privilege (on the impact of paediarchy and other ‘entitlement’-delusions)

- Part 5: Social example (on failures in social-policy, as reported in recent media-articles)

- Part 6: Assessment and action (on how to identify and assess disservices, and actions to remedy the fails) (this post)

In previous parts of this series we explored the structure and stakeholders of services, and how disservices are, in essence, just services that don’t deliver what they purport to do.

We then illustrated those principles with some real-world examples in the education-system context.

Following that, we outlined some of the key causes for disservices – in particular, the echo-chamber of misplaced, unexamined belief, and power-against, a dysfunctional misconception of power as ‘the ability to avoid work’ – and derived a set of diagnostics to test for these.

In the previous posts to this one – Part 5 of the series, split into four sections, as above – we started to put all of that into practice, in assessment of real-world service-provision for a key social concern, working through assessments and thought-experiments that drew on a set of recent media-examples about that context. And at the end of that, we turned back towards the more everyday end of enterprise-architecture – which brings us to here, and to the whole point of this series: what do we do about disservices?

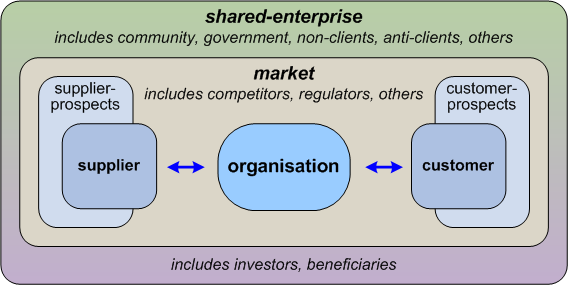

All of this discussion about services and disservices is in context of a shared-enterprise, with an identifiable overarching vision/purpose and identifiable stakeholders in that overall purpose:

Part of the role here for an enterprise-architect or the like would include:

- identify the vision/purpose that defines and delimits the shared-enterprise

- identify values and principles that provide guidance toward and evaluation for alignment to that purpose

- identify the stakeholder-groups, both explicit and implicit, respective to that purpose

- for a given service, identify the probable relations and roles of each stakeholder-group, as transactors, direct-interactors and/or indirect-interactors

- for that given service, identify the value-propositions towards each stakeholder-group within that shared-enterprise; the means to deliver those value-propositions to the respective stakeholder-groups; and the values, signals and metrics to identify and verify appropriate delivery to those value-propositions

- for that given service, identify the inter-responsibilities between all stakeholder-groups in that context, in order to identify and design for appropriate balance between stakeholders

So far, so mainstream (or mostly, anyway) for enterprise-architecture for service-design. Yet the point of this series is that it doesn’t stop there. Back in Part 1, we came up with a set of useful distinctions around services and disservices:

- a service provides some form of practical support towards an identifiable need within an identifiable shared-enterprise

- (note: the offer of practical support is described in terms of a linked set of value-propositions that in effect describe the service’s promises of value to all stakeholders in the shared-enterprise – not solely the respective promise to subsets of stakeholders such as ‘customer-transactors’ or financial investor/beneficiaries)

- a non-service occurs where a promise of service within the shared-enterprise would appear to have been made, but is not actually available (e.g. product does not exist, is no longer made, or not available in this country)

- a misservice occurs where a promise of service has been made, but is not actually available in the present circumstances (e.g. breakdown, out-of-stock, supply-chain delay, wrong item delivered)

- a disservice occurs where a promise of service has been made, but, either by accident or by intent, is delivered selectively or not at all (e.g. payment taken but service not delivered, skewed or unconscionable ‘service-agreements’, confusions between money, price and value, or gross externalities or the ‘game’ of ‘commonize costs, privatize profits’)

As we saw in Part 2, a service may be structured or restructured to benefit a separate set of stakeholders, inherently becoming a disservice for most of its nominal stakeholders; or as we saw in Part 5, a service may even be structured to cause actual harm to some of its stakeholders, and ultimately deliver a disservice to all. Not A Good Idea…

The reason why disservices are Not A Good Idea is that, whilst they might seem to be ‘profitable’ (in some sense or other) to certain stakeholders, in reality disservices represent inherent risks to the service-provider and to the shared-enterprise as a whole. These risks may be quite hard to recognise at first, such as hidden risks in business-models, reputational threats via social-media and the like, kurtosis-risks that can threaten ‘social licence to operate’, or a ‘Pass The Grenade’ risk shared across an entire industry.

Where enterprise-architects come into this picture is that we’re one of the very few groups of people within an organisation who are tasked with maintaining at least a whole-of-organisation view, and preferably a whole-of-enterprise view. In a quite literal sense, we’re often the only people who can even see such disservices and their concomitant risks – which means that it’s our responsibility to identify them, and describe and explain them to others.

It’s perhaps simplest here to think of the role of architect as ‘pre-whistleblower’. A whistleblower typically calls a warning about damage to the enterprise after it’s done, with the aim to ensure that it doesn’t happen again; but a ‘pre-whistleblower’ warns of structural flaws so as to prevent damage to the enterprise from happening at all.

(There are plenty of other possible types of structural-flaws, of course, but risk of disservices is certainly a major category of which we do need to be aware.)

Beyond unavoidable breakdowns and the like, there are just two key causes for any kind of disservice. A fundamental part of our ‘pre-whistleblower’ work, then, is to watch for those two core causes, and design our way around them.

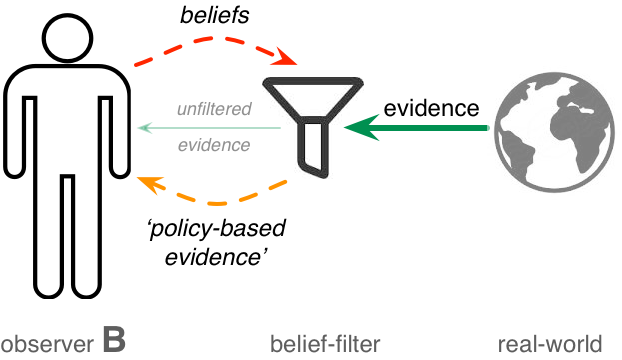

The first key cause of disservices, which we explored in some depth in Part 3 of this series, is cognitive-bias, particularly in a form I describe as ‘the echo-chamber’:

An echo-chamber is, in essence, a misplaced, unexamined belief, reinforced via Gooch’s Paradox (“things not only have to be seen to be believed, but also have to be believed to be seen”), leading to ‘policy-based evidence’ – a self-confirming loop that would typically seem credible enough on the surface, but in reality may be dangerously incomplete, or even downright delusory.

To assess if an echo-chamber is in play, use the diagnostics from Part 3. These include typical keywords or keyphrases to watch for in everyday language:

- ‘must be’

- ‘it’s obvious’

- ‘it’s self-evident’

- ‘as everyone knows’

Example sensemaking-techniques to identify potential or actual echo-chambers might include:

- alternates – what is an equivalent version of this in a different context?

- assumption-inversion – would the opposite also be true if specific assumptions were switched round?

- language-inversion – would the opposite also be true if, for example, gender-pronouns or role-relationships were switched round?

- scale up, then look down – what was missing from view at the initial scale?

- rescale to extremes – how does the view change from the perspective of a single individual, or of an entire world?

- switch cultures – what changes when we shift to a different culture’s assumptions and mores?

- look for what’s missing – given a logical set (family, group, industry etc), which parts of that set are over-emphasised, under-emphasised, ‘invisible’ or ‘undiscussable’?

Once we identify that an echo-chamber is in play, tactics to address and reduce echo-chambers might include:

- TRIZ for reframe

- rich-randomness for disruption of ‘pattern-entrainment’

- use of guiding-principles to connect back to the core enterprise-vision

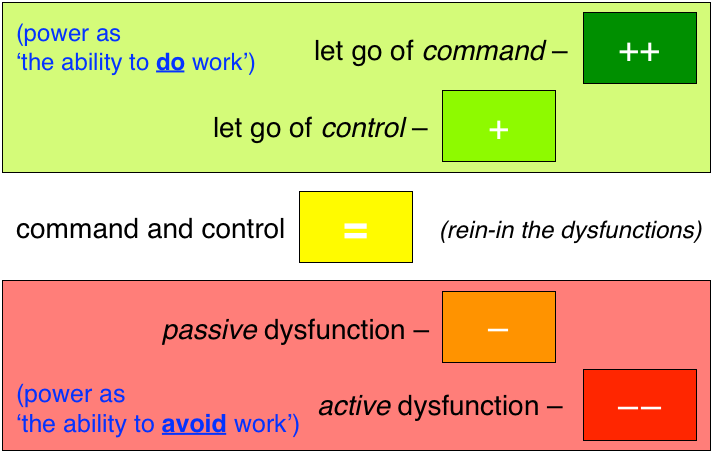

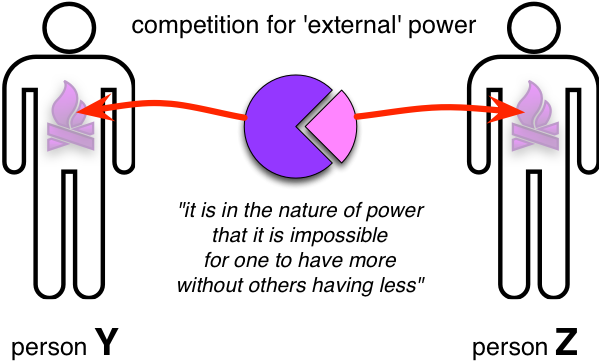

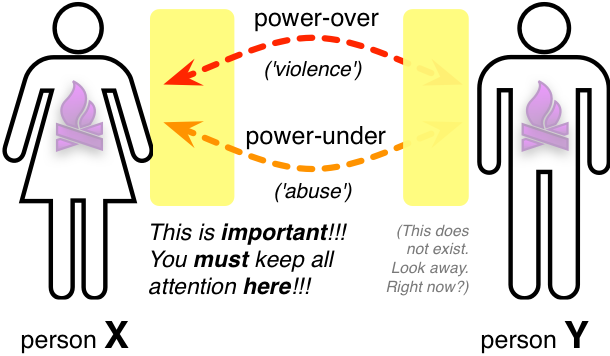

The second key cause of disservices, which we explored in Part 4 of this series, is that some stakeholders attempt to assert or impose priority and privilege above all others – or, more specifically, some form of priority or privilege over others that is unwarranted in terms of the overall purpose for the shared-enterprise. The driver behind this is usually a misconception of power not as ‘the ability to do work’, but as ‘the ability to avoid work’. This is often backed up by some decidedly-dysfunctional tactics that we could describe as ‘power-against’, in the sense of power being used against others rather than for the nominal shared-purpose:

This in turn is often strongly linked to dysfunctional concepts of power and power-relationships – typically, a notion that power is inherently ‘external’ to ourselves, and that there’s only so much power or resources or whatever to go around:

Power-against typically arises in various combinations of one or other of two distinct forms:

- power-under – offload responsibility onto the Other without their engagement or consent (passive-dysfunction, colloquially known as abuse)

- power-over – prop Self up by putting Other down (active-dysfunction, colloquially known as violence)

Which leads to an often tangled, tortuous, highly-‘political’ jostling for position, each one vying to be ‘top dog’, the one who ‘wins’ the most, by making everyone else lose:

As with the echo-chamber risks, the diagnostics here would include keywords or keyphrases to watch for about the probable presence of power-against, such as:

- ‘entitled’ or ‘entitlement’

- ‘it’s my right’

- ‘insubordination!’

- ‘should’ (especially as ‘you/they should have’)

- any form of blame, whether of others or self

- ‘deserve’ (especially as ‘you/they don’t deserve’)

- ‘everyone’s doing it’ (a classic indicator of an industry-wide ‘game’ of ‘Pass The Grenade’)

Part 4 in the series also gave us a detailed checklist of modes of impact for power-against, and what to do to mitigate against the respective risks, which we could summarise as follows:

- A: Coercion and threats → Negotiation and fairness

- B: Intimidation → Non-threatening behaviour

- C: Economic abuse → Economic partnership

- D: Emotional abuse → Respect

- E: Misusing sexuality → Sexual respect and trust

- F: Priority and privilege → Shared responsibility

- G: Isolation → Trust and support

- H: Misusing children → Responsible (about) parenting

- J: Misusing others (third-party abuse) → Social self-responsibility

- K: Minimising, denying and blaming → Honesty and accountability

- L: Lying and dissembling → Truthfulness and transparency

So if, within the respective service-context, we see or hear any of those keywords above, or see significant evidence of any of those checklist-items, alarm-bells should go off straight away – because it’s very likely that disservices are being created somewhere within that context.
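For those who like to see such things in code, here is a minimal sketch of how those keyword-diagnostics might be run over a piece of text, such as meeting-notes or a policy-document. The phrase-lists and the checklist-pairs are copied from the lists above; everything else (the names `diagnose`, `flag_phrases` and `MITIGATIONS`, and the crude whole-phrase matching) is an assumption for illustration only. A match is no more than a prompt to look more closely: it can't replace the human judgement that the assessment needs.

```python
import re

# Phrases taken from the two diagnostic lists above.
ECHO_CHAMBER_PHRASES = [
    "must be", "it's obvious", "it's self-evident", "as everyone knows",
]
POWER_AGAINST_PHRASES = [
    "entitled", "entitlement", "it's my right", "insubordination",
    "should", "deserve", "everyone's doing it",
]

# Checklist from Part 4: mode of impact -> direction for mitigation.
MITIGATIONS = {
    "Coercion and threats": "Negotiation and fairness",
    "Intimidation": "Non-threatening behaviour",
    "Economic abuse": "Economic partnership",
    "Emotional abuse": "Respect",
    "Misusing sexuality": "Sexual respect and trust",
    "Priority and privilege": "Shared responsibility",
    "Isolation": "Trust and support",
    "Misusing children": "Responsible (about) parenting",
    "Misusing others (third-party abuse)": "Social self-responsibility",
    "Minimising, denying and blaming": "Honesty and accountability",
    "Lying and dissembling": "Truthfulness and transparency",
}


def normalise(text):
    """Lower-case the text and straighten curly apostrophes."""
    return text.lower().replace("\u2019", "'").replace("\u2018", "'")


def flag_phrases(text, phrases):
    """Return the phrases that occur in the text as whole words.

    Hypothetical helper: whole-phrase matching is a deliberately crude
    assumption, so it will miss variants such as 'deserves'.
    """
    clean = normalise(text)
    return [
        phrase for phrase in phrases
        if re.search(r"\b" + re.escape(phrase) + r"\b", clean)
    ]


def diagnose(text):
    """Group any flagged phrases under the two key causes of disservice."""
    return {
        "echo_chamber": flag_phrases(text, ECHO_CHAMBER_PHRASES),
        "power_against": flag_phrases(text, POWER_AGAINST_PHRASES),
    }
```

Calling `diagnose("As everyone knows, it must be the users' fault. They should have read the manual.")` would flag ‘must be’ and ‘as everyone knows’ under the echo-chamber, and ‘should’ under power-against. If a closer look at the context then confirms, say, isolation as a mode of impact, `MITIGATIONS["Isolation"]` points to ‘Trust and support’ as the direction for remedy.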

Those two key causes of disservice can often coincide or interact. This occurs perhaps especially in any context that is highly emotive and/or highly ‘political’. In Part 5 of this series we explored an example of this in social-policy, in which a set of arbitrary and unexamined assumptions, backed up by what might be described as a ‘full-house’ of forms of power-against, created a seriously-skewed view of a social problem – domestic-violence. That in turn resulted in a classic ‘mess’, within which some aspects of the problem were over-emphasised, and others de-emphasised or even actively-suppressed:

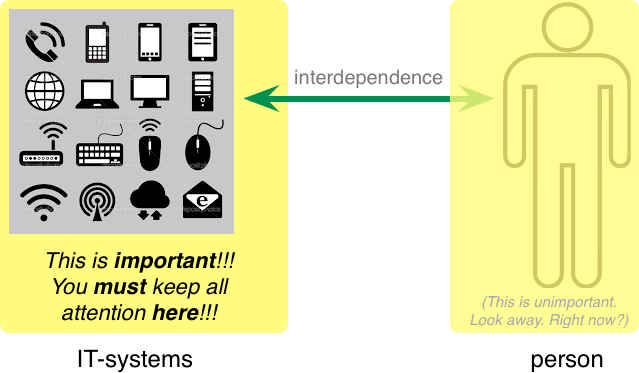

It’s true that, as yet, most enterprise-architects might not come across those specific types of contexts – though EAs working in government and the like should certainly be aware of them, and the risks that they represent to the respective shared-enterprise. Yet we see exactly the same problems in play, with the same types of mistakes, and the same causes, in contexts that most EAs do already deal with – such as the seeming-irresolvable messes created by IT-centrism:

I’ve written about this quite often on this blog, such as in the following posts:

- ‘How IT-centrism creeps into enterprise-architecture’

- ‘IT-centrism, business-centrism and business-architecture’

- ‘The dangers of specialism-trolls in enterprise-architecture’

Yet perhaps the best description I’ve seen recently of this is in Kentaro Toyama’s book Geek Heresy – a ‘must-read’ for all enterprise-architects, in my opinion.

(There’s a good introduction to Toyama’s ideas and experiences in the Washington Post article ‘He was certain technology would save the world. Here’s what changed his mind.’)

Toyama, like many tech-folk, had formerly held the belief that technology-oriented ‘prepackaged-interventions’ such as One Laptop Per Child were, in essence, ‘The Answer To All Of The World’s Problems™’ – or the respective part of the world’s problems, at least. What he found in practice, though, was that delivering the prepackaged-intervention was the easy part: the hard part – often the really-hard part – was the human side of the intervention, without which the prepackaged-intervention often turned out to be worse than useless. As the book’s blurb puts it:

After a decade designing technologies meant to address education, health, and global poverty, award-winning computer scientist Kentaro Toyama came to a difficult conclusion: Even in an age of amazing technology, social progress depends on human changes that gadgets just can’t deliver. … Geek Heresy is a heartwarming reminder that it’s human wisdom, not machines, that move our world forward.

One of the dreams of One Laptop Per Child and the like was that technology would act as a great leveller, bringing poorer people up to the same level as richer others. But the reality is that technology more typically acts not as a leveller but as an amplifier: if there are any inequalities in the context – social, political, economic or whatever – then the technology will tend not to reduce them, but to amplify them. Which is why technology-based interventions can indeed cause more harm than good – and certainly will, if not backed up and supported by the much harder work of person-to-person mentoring and more.

Hence why an enterprise-architecture, for example, must be about more than just technology alone: it must fully include all of the relevant people-themes, across the entire stakeholder base for the respective shared-enterprise.

So what can we do about all of this?

How can we nip those problems in the bud, before they end up creating destructive disservices? Most of the above is really just about ways of thinking, about mindset, and all of it is straightforward enough once we learn how to see it. But how do we get to see it, when the core of the problem is that, by definition, it’s very hard to see until we do see it? It’s kinda like a classic hen-and-egg problem, but a lot more pointed – and with the sharp pointy end pointing at us…

One answer that does seem to work (for me, anyway!) is to be deliberately-disruptive to ourselves, our own ways of thinking. In effect, we take on the intentional role of the business-anarchist, working directly with uncertainty – in contrast to the role of the business-analyst, who would more usually aim to eliminate it.

A key understanding here is that an echo-chamber gets started when someone (and that ‘someone’ may well be us) takes a theory or belief to be ‘the truth’ – or, worse, ‘The Truth’ (with, yes, those nice big audible capital-letters…). And since it’s The Truth, then obviously, and self-evidently, and as everyone knows, it must be that that The Truth applies everywhere. (You did recognise those keywords, yes?) But there’s a catch – as perhaps best illustrated by a handful of variously-well-known quotes:

- “all models are wrong, but some are useful” (George Box)

- “proof is often no more than a lack of imagination” (Edward de Bono)

- (alternative version: “certainty comes only from a feeble imagination” – harsher though often more-accurate…)

- “whatever theory you hold, I’ll find something that doesn’t fit” (Charles Fort)

- “without a fixed ideology, or the introduction of religious tendencies, the only approach which does not inhibit progress … is ‘anything goes’” (Paul Feyerabend)

Or, in short:

- the only absolute truth is that there are no absolute truths

Which is kinda difficult if we want to hold on to the comforting certainty of something-or-other as ‘The Truth’…

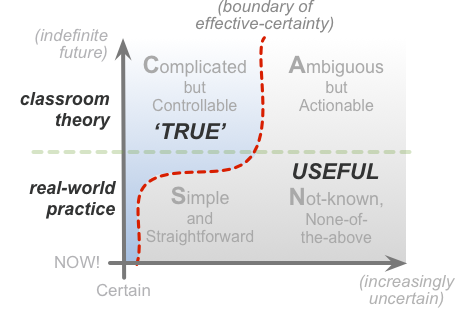

There are two key aspects to this. One is a corollary of George Box’s quote above: that whilst a model may not be ‘true’ everywhere, it can still be useful even where it isn’t ‘true’. The trap there is that, with a little help from ‘policy-based evidence’, that fact about usefulness may delude people into thinking that because it’s useful, that therefore proves that it is ‘The Truth’ everywhere. We need to remember, always, that ‘true’ and ‘useful’ are not necessarily the same.

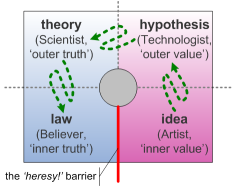

The other danger arises from an old Yogi Berra quip: “in theory there’s no difference between theory and practice; in practice, there is”. What seems so certain in theory, may not be so certain when we try to use that theory in the heat of action – especially in contexts that have a significant degree of inherent mass-uniqueness. In terms of the SCAN framework, notions of ‘The Truth’ tend in practice to apply only to a smallish subset of any overall context-space – with much less effective-certainty in real-time action than it might seem from the comfort of the armchair or the lab:

As Gene Hughson kindly reminded me earlier today, this is also illustrated by the respective impacts of Occam’s Razor and Hickam’s Dictum. In the ‘truth’-compatible segment of the context-space, Occam’s Razor would usually apply: in general, the theory with the fewest assumptions should ‘win’. But anywhere outside of that segment, and often even within it, we must always remember the warning of Hickam’s Dictum, that “Patients can have as many diseases as they darn well pleases”:

the principle of Hickam’s dictum asserts that at no stage should a particular diagnosis be excluded solely because it doesn’t appear to fit the principle of Occam’s razor

A related danger here is an over-reliance on supposed ‘science’. In enterprise-architecture, for example, several common notions of ‘science’ can be more than a bit misleading, with serious complexities around hypotheses, repeatability and more. Much of what we do in EA will necessarily fall into the fuzzy realms of ‘lies-to-children’: in fact there are even some strong arguments for suggesting that EA has more in common with alchemy than it has with ‘science’.

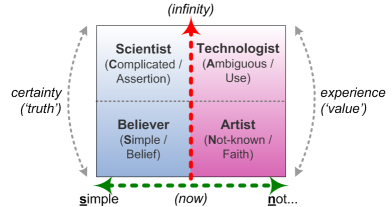

We can also usefully cross-map between SCAN and the swamp-metaphor, to see how different archetypal-roles emphasise different ‘ways of working’ within the overall context-space:

To make sense of a context-space, we need to switch continually between these archetypal roles, leveraging the advantages of each at the appropriate moments, and moving on so as to avoid getting caught up in their disadvantages. It’s from that kind of dynamic shifting between models and mindsets that, for example, we derive the classic notion of science as a progression from idea to hypothesis to theory to law:

Yet notice the booby-trap here – the ‘heresy!’ barrier. The whole point of this process is that we can move around freely within the context-space, switching between modes as the context requires. But for many people – for all of us, at times – it seems that once we reach the notion of ‘law’, we then get stuck in the mode of the Believer – dismissing everything that conflicts with its chosen law as ‘heresy’, forbidden, wrong. Which, unfortunately, strips us of any room for manoeuvre when Reality Department tries to warn us that we’ve hit some part of the context within which our ‘law’ itself is ‘wrong’…

The great advantage of the Believer mode is that it provides some real relief from uncertainty in real-world action: it provides an anchor of ‘inner-truth’ that can be embodied and applied at real-time without need for further thought or doubt. Its great disadvantage, though, is that that sense of certainty arises only because it’s completely locked itself within a ‘policy-based evidence’ loop. It defines itself as ‘The Truth’, but it has no means, within itself, to test whether its ‘The Truth’ is valid in that respective part of the context. And when Reality Department provides us with a context to which that ‘The Truth’ doesn’t fit – which happens a lot more in real-world practice than theory might suggest, as per that ‘True/Useful’ skew earlier above – then we tend to find ourselves pressed up against the edge of panic:

The appropriate response at that point is to let go briefly into the Artist mode, using the shared-purpose, principles and values of the overall shared-enterprise to guide us within the inherent-uncertainty of the ‘Not-known’, and bring us back over to the safer side of the edge-of-panic again with appropriate options to use. But what if the Believer can’t let go – if it feels that it must cling on to the emotional-safety of its simple, certain ‘The Truth’, and refuses to venture forth into the Not-known? What happens then is that it will reject the message that its ‘The Truth’ does not apply here, and instead declare that it is the real-world that is ‘wrong’ – not its ‘The Truth’. Very common, but Not A Good Idea…

In essence, those accusations of ‘heresy!’ arise because someone either cannot, will not, or dare not face the fears that arise from being pressed up against the edge-of-panic. And in turn, that’s the deeper source for both of those key causes of disservices:

- a closed-loop echo-chamber arises whenever someone gives greater priority to their ‘The Truth’ than to the messages coming back from the real-world

- power-against (such as emotional-abuse, assertions of priority and privilege, and minimising, denying and blaming) arises whenever someone tries to ‘export’ their fears onto someone else

The word ‘heresy’ literally means ‘to think differently’: and, as in that example of Toyama’s book Geek Heresy, there will often be times and contexts where we do need to be able to ‘think different’. If we can’t do that, then we’re stuck – and angry assertions of ‘heresy!’ and suchlike will not help us at all to get out of that mess, and get back to the real-world.

We’ll notice, though, that there’s often a lot of emotion behind those accusations of ‘heresy!’ – often a strangely cold emotion at that, calmly-arrogant, over-certain, supercilious, dismissive of all others. Odd. In fact that’s one of the classic ‘give-away’ warnings to watch for here: when someone asserts that what they’re proposing is ‘science’ or suchlike, but there’s that odd emotional undercurrent behind it, it’s an almost certain sign that they’re stuck in the Believer – in effect, the realm of religion, not science. Again, it’s Not A Good Idea…

And the ‘someone’ who’s getting stuck there could easily be us, of course. Which is why we, as enterprise-architects and the like, need to take especial care to develop and practice the ‘swamp-metaphor’ modes and disciplines – the Artist, Scientist, Technologist, and Believer too – for working with and moving around within a conceptual context-space:

As mentioned back in Part 3 of this series, the post ‘Sensemaking – modes and disciplines’ gives a practical point-by-point guide on how to do this. Each domain within the frame represents a distinct mode or ‘way of working’ within the overall context-space, each bounded by different assumptions, different priorities, different focus-concerns. We then ‘move around’ within the context-space, switching between modes in a deliberate and disciplined manner, using the respective descriptions for each mode as a guide.

In the framework, the guidance for each mode is laid out in a consistent format, under the following headings:

- This mode is available when…

- The role of this mode is…

- This mode manages…

- This mode responds to the context through… (i.e. prioritises, for sensemaking)

- This mode has a typical action-loop of…

- Use this mode for…

- We are in this mode when…

- ‘Rules’ in this mode include…

- Warning-signs of dubious discipline in this mode include…

- To bridge to [other mode], focus on…

The consistency of the framework, and the clarity on how and when and why to switch between modes, makes it probably one of the simplest means for us to minimise the risks of creating disservices, and help others do the same.

Within each mode, it’s especially important to watch for warning-signs that we’ve lost track of discipline. If we don’t watch for these signs, we’re likely to find ourselves walking straight into the echo-chamber trap, or some other crucial methodological-mistake, without realising that we’ve done so. The most-common ways to get it wrong – the seven sins of dubious discipline – are:

- The Hype Hubris – getting caught up in ‘a triumph of marketing over technical-expertise’, in which style takes priority over substance.

- The Golden-Age Game – another glamour-trap formed by a bizarre blend of pseudo-science and pseudo-religion, combining all the disadvantages of both modes with the sense of neither.

- The Newage Nuisance – often-wilful ‘ignore-ance’ of any form of discipline, so named because it’s so characteristic of so much so-called ‘New Age’ philosophy and practice, but all too common elsewhere as well.

- The Meaning Mistake – allowing ideas and interpretations to become ‘half-baked’, ‘overcooked’, or both.

- The Possession Problem – fear of uncertainty leading to misguided notions about possessing ‘The Truth’.

- The Reality Risk – failing to recognise that in certain types of sensemaking, things may be both ‘imaginary’ and ‘real’ at the same time.

- Lost in the Learning Labyrinth – becoming misled by any of the various traps and ‘gotchas’ in the skills-learning process.

In enterprise-architecture and service-design, we’ll see all of those ‘sins’ in action all too often – and often two or more of those sins at once, too…

I’d better stop here, so I won’t give more detail on those ‘sins’ right now. But it does need more explanation, so I’ll do a follow-up post somewhen soon, using the fundamentally-misguided concept of ‘natural rights’ as a worked-example.

So let’s bring to a close this series on services and disservices: hope you’ve found it useful, anyway. Over to you for comments, perhaps?
